Enhance your spatial computing app with RealityKit audio

Elevate your spatial computing experience using RealityKit audio. Discover how spatial audio can make your 3D immersive experiences come to life. From ambient audio and reverb to real-time procedural audio that adds character to your 3D content, learn how RealityKit audio APIs can help make your app more engaging.

Chapters

- 0:00 - Introduction

- 2:01 - Spatial audio

- 11:43 - Collisions

- 16:57 - Reverb presets

- 18:24 - Immersive music

- 20:41 - Mix groups

Hi, I’m James and I work with the RealityKit audio team. Today, we’ll talk about some brand new Audio APIs in RealityKit that will make your spatial computing experiences more interactive and immersive. My colleague Yidi has been working on a game that allows you to fly a virtual spaceship in your real surroundings, and not just in your real surroundings, but even in a custom virtual environment, and even through a portal into outer space! The app uses the new SpatialTrackingService API to fluidly track hand gestures for maneuvering the spaceship.

It uses new physics features like force effects to create an increasingly treacherous asteroid field, and physics joints to attach a trailer carrying precious cargo which swings around if you fly too wildly. And it uses the new portal crossing component so that you can fly the spaceship through a portal into outer space, and back.

Each of these new features ignites a dynamic interplay between the virtual and the real.

Check out the session “Discover RealityKit APIs for iOS, macOS, and visionOS” to learn more about how this game was built. In this session, I am going to make this game a lot more immersive and interactive using the power of RealityKit audio. Let’s explore how. You can download the sample project linked to this session to follow along. I’ll start by adding spatial audio to the spaceship using audio file playback as well as real-time generated audio. Next, I will add spatial audio for collisions between virtual objects, as well as between virtual and real objects. Then, I will include reverb in our immersive environment to take us along with the spaceship to a new space. I will use ambient audio for music playback to set the vibe. And last, I will let our audience tailor the sounds to their liking using audio mix groups. Let’s start with the audio for the spaceship itself. The spaceship has a multilayered sound design, which mirrors its visuals.

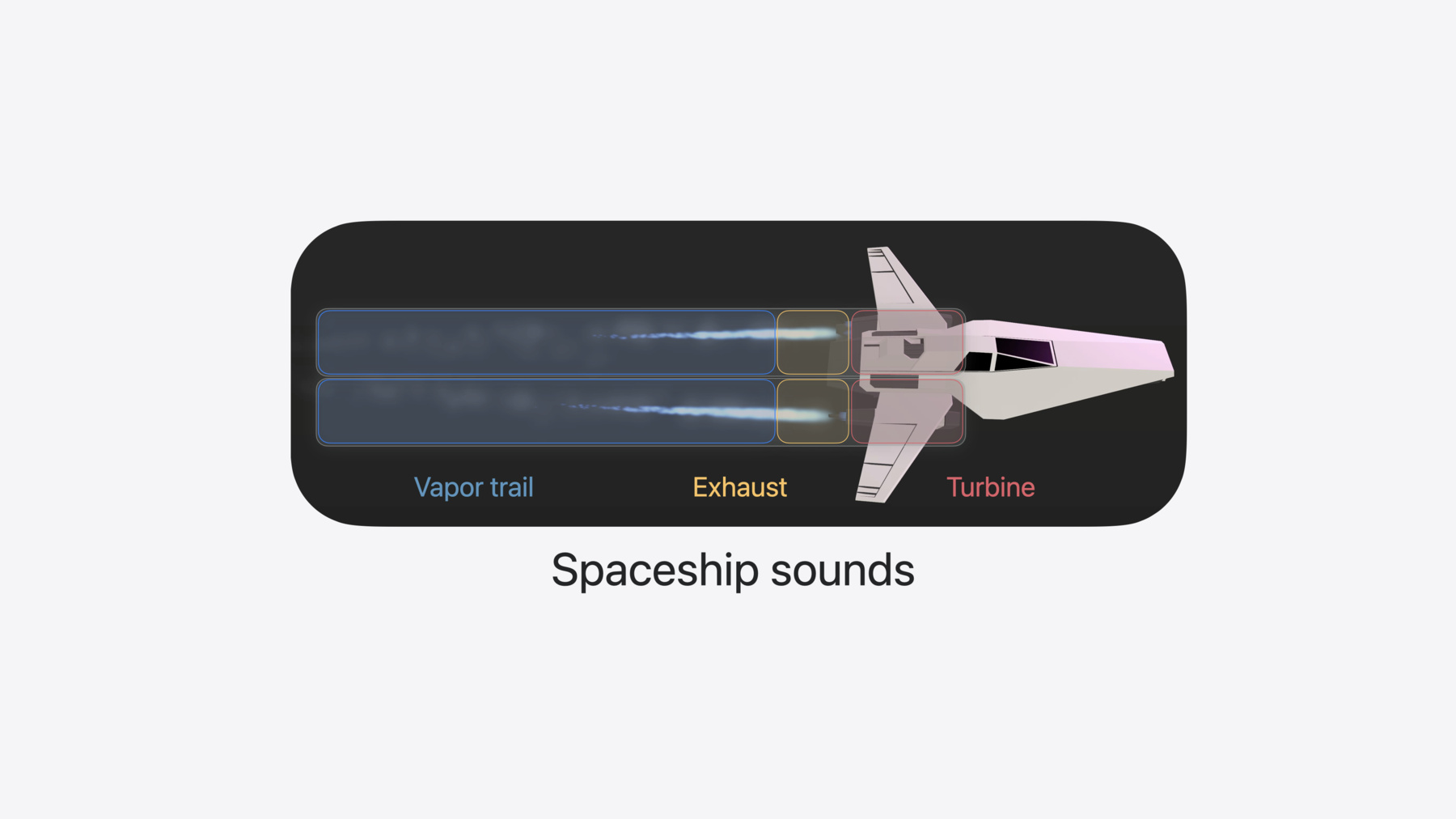

The spaceship has two engines. Each engine has a vapor trail, an exhaust, and a turbine. In the visual realm, the vapor trail is a particle effect which emits regardless of how high the throttle is, whereas the exhaust gets longer as the spaceship’s throttle goes up. There is no visual representation of the turbine, as it is encased in the body of the spaceship, but we can expose the invisible with sound design. For the vapor trail, I will play a soft noisy sound which stays constant in loudness regardless of how high the throttle is. For the exhaust, I will play a more powerful noisy sound which gets louder as the throttle goes up. For the turbine, I will dynamically generate a sound whose parameters are dependent on the throttle. Let’s take a quick listen.

First, we will hear the vapor trail audio playback, and as I rotate it away from us, it gets a little quieter and darker. Then, I will flip on the exhaust and manipulate the throttle. Hear how it gets louder as the throttle goes up.

Let’s see how this looks in code.

I’ll load the vapor trail audio file from our app’s bundle. And then I’ll play it from the engine entity right away using Entity.playAudio. Audio playback in RealityKit is spatial by default. With only a couple of lines of code, our audio renders with six degrees of freedom, so that its level and tonality are updated as you or the audio source moves or rotates. Spatial audio sources have a physically based distance attenuation, so that as the audio source moves away from you, it gets quieter in a natural way. Last, spatial audio sources have reverb applied to them which is simulated in real time from your real surroundings.

One thing to note is that audio played spatially is mixed down to a single mono channel before being spatialized. It is recommended to author mono files to ensure no unexpected mixdown artifacts are added in the spatial rendering.

Our vapor trail audio file is short and is authored to loop seamlessly, without any pops and clicks. You can set the shouldLoop property on the AudioFileResource.Configuration, to make this short file play as if it were a continuous noisy sound.

I am playing the same short looping file from both engines. I can set the shouldRandomizeStartTime property, so that when both engines emit sound, they start playing from different positions in the file. By using a combination of looping and randomizing start time of playback, we can be efficient with our resources while delivering a rich audio experience. The noisy vapor trail audio file is authored to loop seamlessly, which also means that it starts abruptly. We can polish up our audio experience by fading in the audio. First, let’s hold onto the AudioPlaybackController that is returned from the Entity.playAudio method. The AudioPlaybackController is like a remote control for the particular sound event instance of an AudioResource playing from an Entity. Each time you call Entity.playAudio or Entity.prepareAudio, an AudioPlaybackController is returned, which you can optionally hold onto for the purposes of configuration, or for transport controls like play, pause, and stop, during runtime. I will set the gain to -.infinity. The AudioPlaybackController gain property is in relative decibels, where -.infinity is silent, and zero is nominally loud. All sounds are clamped to a maximum of zero decibels.
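To make the decibel scale concrete, here is a minimal sketch of the standard conversion from a relative gain in decibels to a linear amplitude multiplier. The function name is hypothetical and this is plain arithmetic, not a RealityKit call; the clamp at 0 dB mirrors the limit mentioned above.

```swift
import Foundation

/// Converts a relative gain in decibels to a linear amplitude multiplier.
/// -infinity dB maps to 0 (silence) and 0 dB maps to 1 (unchanged level).
func linearAmplitude(fromDecibels decibels: Double) -> Double {
    // Clamp to 0 dB first, mirroring the note that gain tops out at zero decibels.
    let clamped = min(decibels, 0)
    return pow(10, clamped / 20)
}
```

Under this conversion, every -20 dB step divides the amplitude by ten, which is why a gain of -.infinity is fully silent.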

Then, I will call the AudioPlaybackController.fade(to:duration:) method, so that it fades in to zero decibels over one second. We have the vapor trail audio playing, but when we fly the spaceship, we want it to sound natural and dynamic. For example, when the spaceship is oriented with its engines facing us, we want to hear the detail of the engine sounds. But when it is oriented with its engines facing away from us, the sound should be muted and darker. I will configure the spatial rendering of our engines with the SpatialAudioComponent and directivity.

First, I will add a new Entity() which will represent just the audioSource. Next, I will add it as a child of the engine. Then, I will play the audio from the audioSource, rather than the engine. I will set a SpatialAudioComponent on the audioSource Entity. And I will set the directivity of the SpatialAudioComponent to a beam pattern with a focus of 0.25. Directivity defines the way in which audio propagates from a spatial audio source. The beam directivity pattern operates in the range of zero to one. Zero is omnidirectional, meaning sound propagates in all directions with the same tonality, and one is a tight beam pattern where the sound is quieter and darker at the rear than it is at the front.

Last, I will rotate the audioSource so that the sound emanates from the opening of the engine where the exhaust is. Now, as the ship rotates, we hear a change in level and tonality. The SpatialAudioComponent allows you to set the directional characteristics of your spatial audio source. It also allows you to set the loudness for all sound events emitted from an entity. Since it conforms to the Component protocol, the SpatialAudioComponent makes it easy to manipulate the loudness of an audio source dynamically in the context of a custom system. For example, a single throttle value drives the physics, graphics, and audio for the spaceship. The engine’s exhaust sound is silent when the throttle is 0, it is at full loudness when the throttle is 1, and it interpolates between those two extremes. I have a custom audio system which reads the throttle value each update, it maps the value from the linear throttle value to logarithmic decibels, and then updates the SpatialAudioComponent’s gain property with the decibels value. Our implementation of the spaceship audio is becoming more dynamic. As the spaceship flies around, we hear the loudness and tonality shift due to the directivity pattern, and the exhaust getting louder and quieter as the throttle goes up and down. Behind the blue glow and the noise is a pair of turbines. While we can’t see them, we can hear them spinning up faster and slowing down as the throttle goes up and down. In the Hangar view, we will rev our spaceship’s turbines to hear how they sound. Notice how the frequencies of the sound shift along with the throttle.

Let’s see how we can accomplish this in code.

I’ll start with a method that takes in an Entity representing one of the engines. Then, I’ll call a variant of the Entity.playAudio method. Instead of playing an audio resource from this Entity, I’ll provide a callback in which I can write samples directly to the buffers rendered by the audio system. This variant of Entity.playAudio returns an AudioGeneratorController, which must be retained by the app in order for the audio to continue streaming. The audio buffers written with the AudioGeneratorController will behave just like audio resources played on entities. Playback is spatial by default, but you can configure the spatial, ambient, and channel audio components on the entities to control the rendering of the buffers, just as you would with audio file playback.

We have a custom audioUnit in our app, called AudioUnitTurbine. This audioUnit has an Objective-C interface with real-time safe code in its render block. The AudioUnitTurbine has a single throttle value in the range of 0 to 1, and the audioUnit implementation uses this value to drive several oscillators. First, I’ll instantiate() the audio unit. Next, I will prepare the configuration for the AudioGeneratorController. Then, I’ll create the format for the output of the audioUnit and set it on the audioUnit.outputBusses. I’ll allocate the audio unit’s render resources. And capture the audioUnit.internalRenderBlock. Next, I’ll configure the AudioGeneratorController with our audio generator configuration. And last, I’ll call the audioUnit.internalRenderBlock on the audio data provided in the audio generator’s callback.
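The internals of AudioUnitTurbine are not shown in the session. As a rough illustration of how a single throttle value could drive an oscillator, here is a sketch in plain Swift. The type name, the 80 Hz to 880 Hz range, and the use of a Swift array as the buffer are all assumptions made for illustration.

```swift
import Foundation

/// Hypothetical single-oscillator stand-in for a throttle-driven turbine tone.
struct TurbineOscillator {
    let sampleRate: Double
    var throttle: Double = 0        // expected range 0...1
    private var phase: Double = 0   // normalized phase in 0..<1

    init(sampleRate: Double) { self.sampleRate = sampleRate }

    /// Assumed mapping: idle at 80 Hz, full throttle at 880 Hz.
    var frequency: Double {
        let t = min(max(throttle, 0), 1)
        return 80 + (880 - 80) * t
    }

    /// Fills `buffer` with sine samples, carrying the phase across calls
    /// so that consecutive buffers join without clicks.
    mutating func render(into buffer: inout [Float]) {
        let increment = frequency / sampleRate
        for index in buffer.indices {
            buffer[index] = Float(sin(2 * Double.pi * phase))
            phase += increment
            if phase >= 1 { phase -= 1 }
        }
    }
}
```

A production render block would write into the audio buffer list handed to the callback and avoid allocation or locking on the audio thread; the array here only keeps the sketch self-contained.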

Real-time audio with AudioGeneratorController allows you to pipe audio from audio units offered by Apple, your own custom audio units, or the output of your own audio engines. The audio is rendered by the ray-traced spatial audio provided by RealityKit and visionOS. Your sounds, however you make them, will receive acoustics in the shared space, as well as mixed, progressive, and full immersive spaces consistent with the other sounds of the system.

Audio playback in RealityKit is spatial by default, and we’ve gone to great lengths to make the default behavior as naturalistic as possible. Of course, our spaceship soars between the realistic and the magical, so we can toy with physics a bit. For example, when the spaceship flies close by us, we can hear the music emanating quietly from its cabin, through its windows. Let’s take a listen.

If we were to play back this audio track with the default spatial audio component parameters, we would always hear the stereo playing. We can achieve the desired effect by customizing the distance attenuation of the spatial audio component. By customizing distance attenuation, you can make certain sounds only audible if you are very close to the source. Alternatively, you can customize it so that sounds are heard even if they are far away. A rolloff factor of 1 is the naturalistic default for spatial audio sources. Setting rolloff factor to 2 will cause sounds to attenuate twice as fast as natural, and a rolloff factor of 0.5 will cause sounds to attenuate half as fast as natural. Now, let’s configure the distance attenuation for our audio.
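The session does not give the exact attenuation curve RealityKit uses. One common model, assumed here purely for illustration, is the inverse-distance law scaled by the rolloff factor: roughly 6 dB of attenuation per doubling of distance at a factor of 1. The function name and the 1 meter reference distance are hypothetical.

```swift
import Foundation

/// Illustrative attenuation in decibels at `distance` meters from the source.
/// Assumes an inverse-distance law scaled by the rolloff factor; this is not
/// taken from RealityKit, which may use a different curve.
func attenuationDecibels(
    distance: Double,
    rolloffFactor: Double,
    referenceDistance: Double = 1
) -> Double {
    // Inside the reference distance no attenuation is applied.
    let ratio = max(distance, referenceDistance) / referenceDistance
    return -20 * rolloffFactor * log10(ratio)
}
```

Under this assumption, a source 2 meters away is attenuated by about 6 dB with a factor of 1, about 12 dB with a factor of 2, and about 3 dB with a factor of 0.5.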

Here, I’ll configure the distanceAttenuation with a rolloff factor of 4, so that we only hear certain sounds when the ship gets really close to us. I’ll turn down the gain of this spatial audio source so that we only hear the spaceship’s music when it’s very close to us.

Next, let’s look into how we can make the entities in our content emit sounds when they collide with one another. First, let’s take a listen. Note when the spaceship collides with the concrete floor, wood boxes, the metal beams, or the glass.

The spaceship can collide with moving virtual objects like the asteroids, as well as static virtual objects, like cement floors, wooden cubes, and metal beams in the Studio environment. In mixed immersion, the spaceship can collide with the mesh reconstructed from the real objects around you. I’ll make these collisions even more impactful by integrating audio informed by the physical materials in our app.

First, I’ll need to respond to CollisionEvents. I’ll write a method which handles the .Began collision event, which gives you information about the collision between two entities. I’ll retrieve an AudioFileResource I have already loaded, and then I’ll play it on the first entity in the collision.

Now, this is a good start, but this means that the same sound will play every time any two types of objects collide, at the same loudness regardless of how hard they collide into one another. I will refine our implementation by using the AudioFileGroupResource. AudioFileGroupResources are constructed from an array of audio file resources. Each time you call Entity.playAudio with an AudioFileGroupResource, a random selection from the audio file resources is played. For our collision audio, I can load a set of sounds which are subtle variations of the same sound event.

This way, each time two objects collide, the sound naturally varies.

The spaceship and the asteroids are very dynamic. They can move fast and slow, and so when they collide, the loudness of the collision sounds should be representative of the impact. As we did when we faded-in the vapor trail audio, I will retrieve the audio playback controller from the call to Entity.playAudio. Then, I will set the gain of the AudioPlaybackController to a level computed from the velocities of both entities.

This ensures that when the two objects collide head-on at high-speed, the collision sound is powerful.

While if they collide at low speed, the collision sound is appropriately attenuated.
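The session's code calls a relativeLoudness(for:) helper whose body is not shown. A minimal sketch of one way to compute such a level follows, assuming the two velocities are already available (for example, read from each entity's physics motion). The function name, the 5 m/s full-loudness speed, and the -40 dB floor are all hypothetical.

```swift
import Foundation

/// Hypothetical helper: maps the relative speed of two colliding bodies
/// to a gain in decibels, where 0 dB is the loudest impact.
func collisionGainDecibels(
    velocityA: SIMD3<Float>,
    velocityB: SIMD3<Float>,
    maxSpeed: Float = 5,         // assumed relative speed (m/s) treated as full loudness
    floorDecibels: Float = -40   // assumed level for the gentlest impacts
) -> Float {
    // Relative speed is the length of the velocity difference.
    let relative = velocityA - velocityB
    let speed = (relative * relative).sum().squareRoot()

    // Normalize to 0...1, then convert the linear value to decibels.
    let normalized = min(speed / maxSpeed, 1)
    guard normalized > 0 else { return floorDecibels }
    return max(20 * log10(normalized), floorDecibels)
}
```

With these assumed constants, a head-on collision at a combined 10 m/s plays at 0 dB, while a gentle 0.5 m/s bump plays at about -20 dB.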

In our current implementation, I am using the same set of sounds for any two objects that collide. When the spaceship collides with an asteroid, a computer screen, or a plant, I can emit a sound tailored to the pair of materials involved in the collision.

I will start by defining the set of materials that we expect to interact with in our app. For example, our spaceship is made of plastic, and the asteroids are made of rock. The surfaces in the Studio environment are all labeled with materials like concrete, wood, metal and glass. In our app, I’ll define an AudioMaterial enum and a custom AudioMaterialComponent which stores the AudioMaterial on an entity. I’ll set the AudioMaterialComponent on all of the virtual objects from which we want to emit collision sounds. For example, I’ll set the rock audio material on the asteroids, and the plastic audio material on the spaceship.

When two virtual objects collide, I’ll first try to read the audioMaterials we set on them. Then, I’ll select from a set of AudioFileGroupResources already loaded and indexed by the pairing of audioMaterials. As a result, the collision sound between the spaceship and an asteroid is informed both by plastic and rock. And a collision between the spaceship and this table is informed by plastic and wood.
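How the resources are "indexed by the pairing" is not shown in the session. One possible approach, sketched here with hypothetical names, is an unordered pair used as a dictionary key, so that the lookup works regardless of which entity is first in the collision. Strings stand in for the loaded audio resources.

```swift
/// Subset of the session's AudioMaterial enum, repeated so the sketch is self-contained.
enum AudioMaterial: Hashable { case plastic, rock, wood, metal }

/// Hypothetical unordered pair: (plastic, rock) and (rock, plastic) compare and hash equal.
struct AudioMaterialPair: Hashable {
    private let materials: Set<AudioMaterial>
    init(_ a: AudioMaterial, _ b: AudioMaterial) { materials = [a, b] }
}

// Stand-in for the dictionary of loaded resources; the names are placeholders.
let collisionAudioNames: [AudioMaterialPair: String] = [
    AudioMaterialPair(.plastic, .rock): "PlasticOnRock",
    AudioMaterialPair(.plastic, .wood): "PlasticOnWood",
]
```

Backing the pair with a Set also handles a material colliding with itself, since the set then holds a single element.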

Our implementation now supports emitting audio when the virtual objects collide with one another. Since the surfaces in the virtual Studio environment were all labeled with materials, we could provide custom collision sounds when our spaceship collided with those surfaces or objects. But we don’t have to limit ourselves to a virtual space. We can add the same behavior to the surfaces and objects in our real-world space. The ARKit scene reconstruction mesh of our real-world environment provides a mesh classification type that identifies the different surfaces and objects in the room, so we can associate a sound that plays when our spaceship collides with them.

Let’s see how we can implement this behavior. First, I’ll need to determine which face of the mesh the dynamic object collided with. Next, I’ll read the mesh classification for this particular face. Then, I’ll map the mesh classification to our custom audio material enum. Now, I can play back the correct sound for the pair of materials of our virtual and real objects. Let’s hear what happens when these dynamic objects collide with the real world around us. Notice when the spaceship collides with different types of objects, the collision sounds are different. For example, listen to when the spaceship collides with a table, the iMac, or the chair.

Awesome! I must say, it’s as much fun to fly our spaceship around the real world as it is to fly our spaceship in between flying asteroids in this studio immersive environment. When we enter this virtual space, we see that our virtual objects are lit differently with a custom image-based light. We should do the same thing for audio, so that our virtual audio sources sound like they are projecting into this virtual environment, and not the room I’m in right now. We can use the new ReverbComponent and reverb presets to bring us into a new acoustical world. All you need to do is place a ReverbComponent somewhere in your entity hierarchy. Only one reverb is active at a time. Depending on your workflow, you can author this content into your scene in Reality Composer Pro, or using the RealityKit API, like I am here. In visionOS 1, all spatial audio sounds in RealityKit are reverberated by the real-time simulated acoustics of the real world. This is appropriate for mixed immersion use cases, where the user can see their real surroundings through the visual passthrough. Audio sources in mixed immersion use cases should sound how they look: just like they are in the user's space.

With these new reverb presets in visionOS 2, you can take your users to a world other than their own with progressive and full immersive spaces. In progressive immersive environments, the user’s real acoustics are blended with the reverb preset based on immersion level. In full immersive environments, your spatial audio sources will be reverberated only by the preset set on the ReverbComponent. Check out Jonathan’s session "Enhance the immersion of media viewing in custom environments" from this year’s WWDC, to see how we author reverb into your Reality Composer Pro package in the Destination Video sample. Now, let’s see how we set the vibe with ambient music playback. When we enter certain phases of the app, different music plays which suits the particular mood. For example, in the Joy Ride phase, we hear light, spacey music which seems to float in mid-air. But when we move into the Work phase, the music plays back with drive.

An important aspect of the spaceship is that you can fly it all around you, not just in front of you. New planets may spawn behind you, requiring you to look around your environment. We want our music to be always present, no matter where the spaceship takes us, and we want the music to have no discernible forward direction, which may detract from the spaciousness of the app. We also want the channels of the audio file to come from world-locked directions, so that it doesn’t feel like it is coming from inside our heads. We made a music track which has four channels, with a channel layout called quadraphonic. The quadraphonic channel layout spreads the four channels equidistantly around you, so there is no salient forward direction that you may have with other multichannel layouts designed for cinematic presentations. We authored our music to have an even distribution of audio signal across the channels, so that the mix feels balanced wherever you look. We also made sure that our music track, like our engine’s vapor trail and exhaust sounds, loops seamlessly without any pops and clicks. Let’s see how we can integrate this music track into our app with RealityKit.

Multichannel audio files loaded into RealityKit must have their channel layout written into the file, otherwise RealityKit will not be able to render the individual channels of the audio file from the correct angles. I will set the loadingStrategy to .stream, as opposed to preload, which is the default. The stream loading strategy will stream the audio data from disk, decoding in real-time, whereas the preload loading strategy will load and decode the audio data as part of the loading process. The stream loading strategy reduces memory usage but may incur additional latency. Our ambient music doesn’t have strict latency requirements like collision sounds do, for example, but our ambient files are quite large, so the stream loading strategy works best for this use case. Then, I set the AmbientAudioComponent() on the music playing entity. Ambient audio sources are rendered with three degrees of freedom. Source and head rotations are observed, but translation is not. Our app now has several different categories of audio. Depending on what mood I’m in, sometimes I like hearing the dynamic audio from the spaceship, the collision sounds, and the music all at once. But sometimes I just want to listen to the music. The audio mixer in the app allows me to individually control the levels of each category of audio so I can create the perfect vibe while I bask in the sensation of flight. Let’s see how it works.

First, I’ll turn down the music.

Next, I’ll pull down the planet tones.

And last, I’ll bring down the spaceship sounds. Now I’ll bring back the planet tones.

And then fill out the mix with the music.

Now, let’s see how to create this in code. I will start by loading our music audio resource. In the AudioFileResource configuration, I’ll set the mixGroupName property to “Music”. This will be the name of the mix group that we will adjust in the audio mixer, which will in turn manipulate the levels of all of the music resources in our app. Next, I’ll create an Entity which will hold an AudioMixGroupsComponent. When the level is updated for our mix group, I’ll create the AudioMixGroup, and set its gain. And last, I’ll construct the AudioMixGroupsComponent and set it on the audioMixerEntity. You can update the AudioMixGroupsComponent in response to UI changes, like we did here. As the AudioMixGroupsComponent conforms to the Component protocol, it lends itself to being updated in the context of a custom RealityKit system, as well.

Awesome, with audio mix groups, we now have the ability to control the different sounds in our app to tailor the experience to our mood.

We’ve used spatial audio sources with real-time generated audio to bring the spaceship to life, and we’ve authored collision sounds that excite the interface between the virtual and the real. Then, we brought these spatial sounds along with us into an immersive environment with reverb presets. And last, we used audio mix groups to create an ideal mix of the music and sounds in our app. I hope you get to play with this app and I can’t wait to hear what you do with RealityKit audio!

3:11 - Play vapor trail audio

    // Vapor trail audio
    import RealityKit

    func playVaporTrailAudio(from engine: Entity) async throws {
        let resource = try await AudioFileResource(named: "VaporTrail")
        engine.playAudio(resource)
    }

4:02 - Make vapor trail audio playback more dynamic

    // Vapor trail audio
    import RealityKit

    func playVaporTrailAudio(from engine: Entity) async throws {
        // Loop the short file and start each engine at a random position in it.
        let resource = try await AudioFileResource(
            named: "VaporTrail",
            configuration: AudioFileResource.Configuration(
                shouldLoop: true,
                shouldRandomizeStartTime: true
            )
        )

        // Dedicated child entity for the audio source, rotated to face out of the exhaust.
        let audioSource = Entity()
        audioSource.orientation = .init(angle: .pi, axis: [0, 1, 0])
        audioSource.components.set(
            SpatialAudioComponent(directivity: .beam(focus: 0.25))
        )
        engine.addChild(audioSource)

        // Play from the audio source, starting silent and fading in to 0 dB over one second.
        let controller: AudioPlaybackController = audioSource.playAudio(resource)
        controller.gain = -.infinity
        controller.fade(to: .zero, duration: 1)
    }

7:10 - Exhaust audio

    // Exhaust audio
    import RealityKit

    func updateAudio(for exhaust: Entity, throttle: Float) {
        let gain = decibels(amplitude: throttle)
        exhaust.components[SpatialAudioComponent.self]?.gain = Audio.Decibel(gain)
    }

    func decibels(amplitude: Float) -> Float {
        20 * log10(amplitude)
    }
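A few worked values help show how the linear throttle maps onto the logarithmic decibel scale. The sketch below uses the same formula as the helper above; the function name and the clamp to 0...1 are added assumptions, so that out-of-range throttle values cannot produce a gain above 0 dB.

```swift
import Foundation

/// Same formula as the session's helper, with the input clamped to 0...1 (an added assumption).
func throttleGainDecibels(_ throttle: Float) -> Float {
    let clamped = min(max(throttle, 0), 1)
    // log10(0) is -infinity, which matches the silent gain at zero throttle.
    return 20 * log10(clamped)
}
```

Full throttle gives 0 dB, half throttle gives about -6 dB, a tenth gives -20 dB, and zero throttle gives -.infinity, which is silent.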

8:17 - Turbine audio

    // Turbine audio
    import AVFoundation
    import RealityKit

    // Retain the controller; the audio stops streaming if it is released.
    var turbineController: AudioGeneratorController?

    func playTurbineAudio(from engine: Entity) async throws {
        let audioUnit = try await AudioUnitTurbine.instantiate()

        // Mono output at the generator's sample rate.
        let configuration = AudioGeneratorConfiguration(layoutTag: kAudioChannelLayoutTag_Mono)
        let format = AVAudioFormat(
            standardFormatWithSampleRate: Double(AudioGeneratorConfiguration.sampleRate),
            channelLayout: .init(layoutTag: configuration.layoutTag)!
        )
        try audioUnit.outputBusses[0].setFormat(format)
        try audioUnit.allocateRenderResources()
        let renderBlock = audioUnit.internalRenderBlock

        // Forward each render callback to the audio unit's render block.
        turbineController = try engine.playAudio(configuration: configuration) { isSilence, timestamp, frameCount, outputData in
            var renderFlags = AudioUnitRenderActionFlags()
            return renderBlock(&renderFlags, timestamp, frameCount, 0, outputData, nil, nil)
        }
    }

11:28 - Setting distance attenuation and gain

    import RealityKit

    func configureDistanceAttenuation(for spaceshipHifi: Entity) {
        spaceshipHifi.components.set(
            SpatialAudioComponent(
                gain: -18,
                distanceAttenuation: .rolloff(factor: 4)
            )
        )
    }

12:36 - Loudness variation

    // Loudness variation
    import RealityKit

    func handleCollisionBegan(_ collision: CollisionEvents.Began) {
        let resource: AudioFileGroupResource // …
        let controller = collision.entityA.playAudio(resource)
        controller.gain = relativeLoudness(for: collision)
    }

14:44 - Defining audio materials

    // Audio materials
    import RealityKit

    enum AudioMaterial {
        case none
        case plastic
        case rock
        case metal
        case drywall
        case wood
        case glass
        case concrete
        case fabric
    }

    struct AudioMaterialComponent: Component {
        var material: AudioMaterial
    }

14:53 - Setting audio materials

    // Setting Audio Materials
    asteroid.components.set(
        AudioMaterialComponent(material: .rock)
    )

    spaceship.components.set(
        AudioMaterialComponent(material: .plastic)
    )

15:04 - Handling collision audio

    // Audio materials
    import RealityKit

    func handleCollisionBegan(_ collision: CollisionEvents.Began) {
        // Look up the sounds for this pair of materials; stay silent if there are none.
        guard let audioMaterials = audioMaterials(for: collision),
              let resource: AudioFileGroupResource = collisionAudio[audioMaterials]
        else { return }

        let controller = collision.entityA.playAudio(resource)
        controller.gain = relativeLoudness(for: collision)
    }

17:18 - Reverb presets

    // Reverb presets
    import RealityKit // for Entity and ReverbComponent
    import Studio

    func prepareStudioEnvironment() async throws {
        let studio = try await Entity(named: "Studio", in: studioBundle)
        studio.components.set(
            ReverbComponent(reverb: .preset(.veryLargeRoom))
        )
        rootEntity.addChild(studio)
    }

20:05 - Immersive music

    // Immersive music
    import RealityKit

    func playJoyRideMusic(from entity: Entity) async throws {
        // Stream the large music file from disk instead of preloading it.
        let resource = try await AudioFileResource(
            named: "JoyRideMusic",
            configuration: .init(
                loadingStrategy: .stream,
                shouldLoop: true
            )
        )
        entity.components.set(AmbientAudioComponent())
        entity.playAudio(resource)
    }

21:57 - Using AudioMixGroup with a RealityKit entity

    // Audio mix groups
    import RealityKit

    let resource = try await AudioFileResource(
        named: "JoyRideMusic",
        configuration: .init(
            loadingStrategy: .stream,
            shouldLoop: true,
            mixGroupName: "Music"
        )
    )

    var audioMixerEntity = Entity()

    func updateMixGroup(named mixGroupName: String, to level: Audio.Decibel) {
        var mixGroup = AudioMixGroup(name: mixGroupName)
        mixGroup.gain = level

        let component = AudioMixGroupsComponent(mixGroups: [mixGroup])
        audioMixerEntity.components.set(component)
    }