
Build compelling spatial photo and video experiences

Learn how to adopt spatial photos and videos in your apps. Explore the different types of stereoscopic media and find out how to capture spatial videos in your iOS app on iPhone 15 Pro. Discover the various ways to detect and present spatial media, including the new QuickLook Preview Application API in visionOS. And take a deep dive into the metadata and stereo concepts that make a photo or video spatial.

Chapters

- 0:00 - Introduction

- 1:07 - Types of stereoscopic experiences

- 4:13 - Tour of the new APIs

- 13:14 - Deep dive into spatial media formats

Resources

- Creating spatial photos and videos with spatial metadata

- Writing spatial photos

- Converting side-by-side 3D video to multiview HEVC and spatial video

- Forum: Spatial Computing

- AVCam: Building a camera app

Related Videos

WWDC24

- Bring your iOS or iPadOS game to visionOS

- Enhance the immersion of media viewing in custom environments

- Optimize for the spatial web

- What’s new in Quick Look for visionOS

WWDC23


Hi, I'm Vedant, an engineer on Apple Vision Pro. In this session, I'll be showing you how to build compelling experiences with Spatial Photos and Spatial Videos. It's been a year since we announced Spatial Photos and Videos, a new medium unlocking a whole new dimension to visual storytelling, and we've been amazed by how people are using it to relive personal moments with family, share stories from around the world, transport us underwater and into the air, and so much more.

And now, with machine learning, any photo can be converted into a spatial photo, allowing you to relive old memories in a brand new way.

Today, I'll be showing you how you can add spatial media experiences to your own apps! First, we'll take a look at the different types of stereoscopic video experiences available, to understand what makes spatial so special.

Then, we'll take a tour of all the new spatial media APIs, and the capabilities they enable.

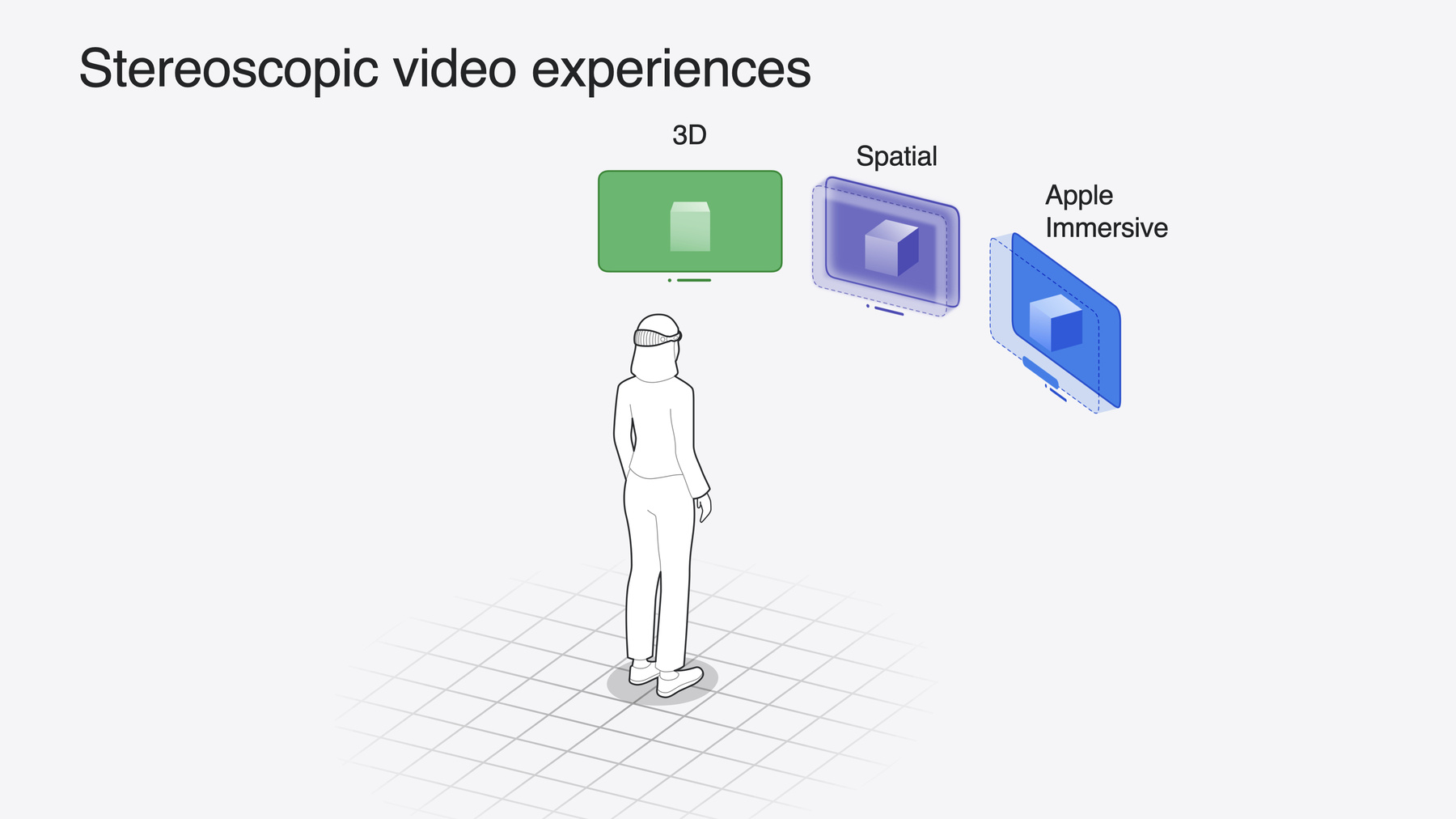

Finally, for the power users who want to create custom spatial media, we'll take a deep dive into the spatial media formats.

Let's start by looking at the different types of stereo video. Stereoscopy is a great way to enhance video for Vision Pro, and Apple offers several formats that support it. First, there's 3D video, like the 3D movies available in the Apple TV and Disney+ apps. These render on a flat screen, but since the content is stereoscopic, it has depth: movements in the video can appear to pop out of the screen, or recede into it.

Then there's spatial video, which you can capture on Vision Pro and iPhone 15 Pro. It renders through a window, with a faint glow around the edges and custom playback controls. This enhances the sense of depth, and softens some common sources of discomfort. Spatial video works great as a point-and-shoot format. People can shoot comfortable, compelling content on devices like iPhone, without needing to be an expert in the rules of 3D filmmaking.

For high-end professional content, there's Apple Immersive Video, shot in 180-degree 8K resolution, with Spatial Audio. In visionOS 2.0, this now comes with a preview presentation that, like spatial video, renders content through a smaller floating window. This lets people watch their videos in stereo, while still being able to interact with other apps.

Each of these experiences looks great like this, but they really shine in full immersion, where other apps get backgrounded and the content takes focus. Each has its own way of displaying in full immersion. 3D video docks into an environment, where the screen moves backwards and enlarges. This is my favorite way to watch movies, both 2D and 3D.

To learn how to add docking to your own apps, check out the video "Enhance the immersion of media viewing in custom environments".

Spatial video expands into its immersive presentation, where the content is scaled to match the real size of objects. The edge of the frame disappears and the content blends seamlessly into the real world, so it feels like you're in the content, not just looking at it.

People can fully immerse themselves in a spatial video by tapping the Immersive button in the top-right corner, and collapse it with the exit button at the bottom.

This allows them to return to a windowed presentation that allows multi-tasking with other apps in the shared space.

For Apple Immersive Video, the video expands into its native experience: the video screen wraps around the viewer, and passthrough is disabled altogether, putting you right inside the action. This unlocks new possibilities for professional creative storytelling, where the viewer can be transported into the story, in the highest possible fidelity.

Together, 3D video, spatial video, and Apple Immersive Video create a rich ecosystem of stereoscopic content, each well-suited to different contexts. And these are just some of the stereo media experiences available on visionOS. There are also spatial photos, which use the same portal and immersive presentations as spatial video, and custom video experiences that you can build with APIs in AVFoundation and RealityKit. And, the same way photos and videos are enhanced with stereoscopy, real-time 3D content can also look amazing in stereo, especially when that content is interactive. For more details on how to achieve that, check out the video "Bring your iOS or iPadOS game to visionOS".

Now that we know what spatial media is, let's see how we can add it to our apps. In this section, we'll go over three new areas of APIs: recording spatial videos, detecting if a file is spatial and loading it, and different options for presenting it on visionOS. You'll notice throughout this section that there's no new framework for spatial media. That's because it's all been integrated into the existing Apple frameworks you already know and love: AVFoundation, PhotoKit, QuickLook, WebKit, and more. With just a few lines of code, you can add spatial media to your apps, the same way you would for other types of media.

First, a look at how spatial video gets recorded.

On the iPhone 15 Pro, we made the enabling change to put the wide and ultrawide cameras side-by-side, instead of diagonally. This means that when the phone is held in landscape orientation, the two cameras are on the same horizontal baseline, oriented just like human eyes are. This is what enables spatial video, in the Camera app. And we're now making that API public, so any app can record spatial video. Let's see how.

We'll write some code to record normal 2D video, then extend it for spatial.

With AVCapture, we'll be using the following data flow. We first need an AVCaptureDeviceInput, which represents input from an AVCaptureDevice, like a camera. We then need an AVCaptureMovieFileOutput, which manages the output and writes it to disk. The input and output are connected with an AVCaptureConnection, and an AVCaptureSession coordinates the data flow between these components.

Let's write that out in code. We first create an AVCaptureSession, then create an AVCaptureDevice, setting it to the default systemPreferredCamera, for now.

Then, we add an input to the session.

Add an output to the session.

Commit the configuration, and start running it.
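The 2D flow just described might look roughly like the sketch below. The function name `makeBasicCaptureSession` and its tuple return type are hypothetical, chosen only for illustration, and starting the session is left to the caller rather than done inside the configuration block. Treat it as a minimal outline under those assumptions, not the exact code from the session.

```swift
import AVFoundation

/// Hypothetical helper: configures a plain 2D movie-recording session.
/// Returns nil when no camera is available or the session rejects the input/output.
func makeBasicCaptureSession() throws
    -> (session: AVCaptureSession, output: AVCaptureMovieFileOutput)? {
    let session = AVCaptureSession()
    session.beginConfiguration()
    // Always close the configuration block, even on early return or a thrown error.
    defer { session.commitConfiguration() }

    // Start with whichever camera the system currently prefers (iOS 17 and later).
    guard let videoDevice = AVCaptureDevice.systemPreferredCamera else { return nil }

    // Input: wraps the camera so the session can pull frames from it.
    let input = try AVCaptureDeviceInput(device: videoDevice)
    guard session.canAddInput(input) else { return nil }
    session.addInput(input)

    // Output: writes the recorded movie to disk.
    let output = AVCaptureMovieFileOutput()
    guard session.canAddOutput(output) else { return nil }
    session.addOutput(output)

    return (session, output)
}
```

A caller would then start the flow with `session.startRunning()` once the helper returns, ideally off the main thread since that call blocks until the session is running.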

If you've used the Camera Capture APIs before, this flow should look extremely familiar to you. If you haven't, I'd recommend checking out the AVCam sample code project on developer.apple.com.

Now, let's extend this code to record spatial video. We just have to do three things: change the AVCaptureDevice, pick a supported video format, and enable spatial video recording on the output.

First, we'll change the AVCaptureDevice. We'll use the builtInDualWideCamera, since spatial video requires the wide and ultra wide cameras to be streaming simultaneously.

Next, we'll pick a video format that supports spatial video. We iterate through the formats in the videoDevice, checking if isSpatialVideoCaptureSupported is true. When we find one for which it is, we make it the activeFormat on the videoDevice. Let's make sure to lock and unlock the videoDevice appropriately. And let's add some error handling. We'll return false if we don't find any spatial formats that meet the criteria.

Finally, let's check that spatial video capture is supported and when it is, set isSpatialVideoCaptureEnabled to true.

Note that this only works on iPhone 15 Pro, and isSpatialVideoCaptureSupported will return false on other devices. In that case, you'll need to handle that error appropriately.

And that's it! With just those three small changes, we're all set to record spatial video! All of the complexity of synchronization, camera calibration, encoding, and metadata writing is abstracted away. You just get a fully formed spatial video file on disk, from the movieFileOutput. And while it really is that easy, there are two more things we can add to get even better quality recordings: improved video stabilization and a great preview. Let's see how we can do that.

To get the best quality stabilization, set the preferredVideoStabilizationMode to cinematicExtendedEnhanced. This uses a longer lookahead window and crops more of the frame, to get even smoother videos. Smooth video is always desirable, but it's especially important on visionOS, since the screen sizes can be made much, much larger than on other devices.

You can get a video preview feed with the AVCaptureVideoPreviewLayer API, the same way you would for other video formats. However, since the iPhone display is monocular, we can't give you a spatial preview. So it always shows the wide camera, regardless of device orientation.

It's important to note that the two cameras used for spatial video, the wide and the ultra wide, are actually different kinds of cameras! The wide is the best camera on iPhone for general photography, and the ultra wide is optimized for dramatic wide-angle shots and close-up focus. The two cameras have different light-gathering abilities and minimum focus distances. When shooting spatial, most of the time these differences aren't too noticeable, thanks to the computational photography techniques we use to correct them. However, when shooting spatial videos in low light, the noise levels in each camera might be different, causing discomfort when viewed in Vision Pro. Similarly, shooting a subject closer than one of the cameras' minimum focus distances can cause a focus mismatch, which can also be uncomfortable. Since the iPhone preview is monocular, you might not notice these issues until you view the videos later, on Vision Pro. And by then, the moment you were trying to capture might have passed, and it's too late to re-record it.

To address this problem, we created a new spatialCaptureDiscomfortReasons property on AVCaptureDevice. You can observe this in your app with Key-Value Observing, and render appropriate guidance UI when the .subjectTooClose or .notEnoughLight conditions are detected.

Here's the iPhone Camera app, surfacing warnings when I get too close to my subject, and dismissing them when I move back. We recommend that you show some similar guidance UI in your apps.

Now that we recorded some spatial video, let's see how we can play it back. The first step is to detect that an asset is spatial, and load it.

There are a few different ways to do that: PhotosPicker, PhotoKit, and AVAssetPlaybackAssistant.

The PhotosPicker API presents a view that helps users choose assets from their Photo Library. It now supports filtering on spatial assets. Specify .spatialMedia in the matching parameter, and it will filter the user's library to only show them their spatial photos and videos.

If you don't need input from the user, you can use PhotoKit to programmatically fetch all spatial assets from the user's Photo Library. Just set spatialMedia as your PHAssetMediaSubtype, when constructing your fetch predicate.

We can also modify the fetch request to only return spatial photos.

Or, only return spatial videos.
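One possible way to narrow the fetch to a single media type is sketched below. It reuses the spatial-media predicate and passes the media type to `PHAsset.fetchAssets(with:options:)`. The helper name `fetchSpatialAssets(ofType:)` is hypothetical, and the session's own code may express the same filter differently, for example entirely inside the predicate.

```swift
import Photos

/// Hypothetical helper: fetches spatial assets of a single media type
/// (.image for spatial photos, .video for spatial videos).
func fetchSpatialAssets(ofType mediaType: PHAssetMediaType) -> PHFetchResult<PHAsset> {
    let options = PHFetchOptions()
    // Keep only assets whose subtype bitmask includes the spatial-media flag.
    options.predicate = NSPredicate(
        format: "(mediaSubtypes & %d) != 0",
        argumentArray: [PHAssetMediaSubtype.spatialMedia.rawValue]
    )
    // The media type restricts the result to photos or to videos.
    return PHAsset.fetchAssets(with: mediaType, options: options)
}

let spatialPhotos = fetchSpatialAssets(ofType: .image)
let spatialVideos = fetchSpatialAssets(ofType: .video)
```

Both calls assume the app already has Photo Library authorization.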

And for video, you can also use AVAssetPlaybackAssistant. Its playbackConfigurationOptions can be queried to check if a local asset is spatial.

This is a great option if your app loads videos from sources outside of the Photo Library.

Now that we can load our spatial content, let's present it. Again, there are a few different options to do this.

There is the PreviewApplication API, in the QuickLook framework, which supports photos and videos; the Element FullScreen API, in JavaScript, which supports loading spatial photos from the web; and AVPlayerViewController, in AVKit, which supports spatial video.

QuickLook is the system standard way to quickly open files on visionOS. With the new PreviewApplication API, you can now spawn a QuickLook scene from inside your own app, that hosts your own content. It supports both spatial photos and videos, and uses the full spatial presentation, just like the Photos app. For more information about the PreviewApplication API, check out the video "What’s new in Quick Look for visionOS".

Then, there’s the Element FullScreen API in JavaScript, which allows spatial photos to be opened from a webpage in Safari. Like PreviewApplication, this opens in a new scene, and comes with the full spatial presentation. To learn more about the FullScreen API, check out the video "Optimize for the spatial web".

Finally, there’s AVPlayerViewController, our cross-platform API for playing all types of video content. It supports both 2D and 3D video, so it’s a great option when you want to display both types of content with a consistent presentation style. It also supports HTTP Live Streaming for spatial video.

There are a few things to keep in mind, when using AVPlayerViewController with spatial video. The video only displays in 3D when it becomes fullscreen. In the inline presentation, it’ll display as 2D. The easiest way to make content fullscreen is to size the AVPlayerViewController, to match the frame of the WindowScene.
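As a rough illustration of sizing the player to fill its window, the sketch below wraps AVPlayerViewController for SwiftUI and makes it the only content of a view. The type names `FullWindowPlayer` and `PlayerWindow` are hypothetical, and the assumption that a player filling the window is treated as fullscreen comes from the description above, so verify the result on device.

```swift
import SwiftUI
import AVKit

/// Hypothetical wrapper that hosts AVPlayerViewController in SwiftUI.
struct FullWindowPlayer: UIViewControllerRepresentable {
    let url: URL

    func makeUIViewController(context: Context) -> AVPlayerViewController {
        let controller = AVPlayerViewController()
        controller.player = AVPlayer(url: url)
        return controller
    }

    func updateUIViewController(_ controller: AVPlayerViewController, context: Context) {
        // Nothing to update in this sketch; the URL is fixed at creation.
    }
}

/// Hypothetical window content: the player is the only view, so it takes the
/// whole frame of the window it is placed in.
struct PlayerWindow: View {
    let url: URL

    var body: some View {
        FullWindowPlayer(url: url)
    }
}
```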

Note that even in fullscreen, AVPlayerViewController will use the 3D video presentation, not the spatial one. Since spatial videos are built on top of MV-HEVC, they’re also valid as 3D videos, which is how AVPlayerViewController will treat them. If your app needs the full spatial presentation, use PreviewApplication instead.

Finally, as you design your apps, try to minimize the amount of UI placed directly on top of the video. Uncluttered UI keeps the focus on the content, and minimizes the risk of depth conflicts.

We’ve now seen multiple ways to record, detect, load, and present spatial media. As an app developer, the APIs we just saw are all you need to get started using spatial media in your apps.

But for those who want to create custom spatial media, let’s take a look under the hood.

At a file format level, a spatial video is a stereo MV-HEVC video with extra spatial metadata.

And a spatial photo is a stereo HEIC, with two images in a stereo group, along with some spatial metadata. To understand how to read and write these files, we have two sample code projects and an article on developer.apple.com.

Now, let’s take a deeper look at the spatial metadata, common to both file formats. Spatial metadata comprises a few things. There’s the projection, which defines the relationship between objects in the world and pixels in the image. Spatial photos and videos always use the rectilinear projection.

Then there’s the baseline and field of view, which describe physical properties of the cameras that captured the spatial asset. And there’s disparity adjustment, which controls the 3D effect in the windowed presentation. Additionally, there are some guidelines for how the left and right images should be created. Since these are image characteristics, stored as pixels in the image, they’re not explicitly metadata fields, and aren’t enforced when checking if a file is spatial. However, they’re still important to understand and get right for the optimal viewing experience.

For optimal image characteristics the left and right images should be stereo rectified, have optical axis alignment, and have no vertical disparity. That’s a lot of new technical terms! To understand them better, let’s see an example.

Here’s Howie the hummingbird, who’ll be the model for our spatial photoshoot. To capture him, I’ve got two cameras in a stereo pair, aligned horizontally.

They each take a synchronized picture, and together these form the left and right images of the stereo pair. These are pinhole cameras, so the images are rectilinear, meaning that straight lines in the world appear straight in the images.

Our first metadata field is the baseline, sometimes called the inter-axial distance. It defines the horizontal distance between the centers of the two cameras. The optimal baseline depends on the use case. A value around 64mm will produce images closest to human vision, since that’s how far apart our eyes tend to be. But smaller values can be great, especially when shooting close-up scenes, and really large baselines are used to capture depth in faraway objects, like for stereo landscape photography. The tradeoff for wide baselines is a miniaturization effect due to exaggerated depth.

For this example, I’ll stick to a 64mm baseline.
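To make the baseline tradeoff concrete, here is a small sketch using the standard relation for a rectified pinhole stereo pair: disparity equals focal length times baseline divided by depth. This relation is not stated in the session. The function names and the 1920-pixel image width are assumptions for illustration, and the 60-degree field of view is the value chosen for this example.

```swift
import Foundation

/// Focal length in pixels of a rectilinear image with the given width and horizontal field of view.
func focalLengthInPixels(imageWidth: Double, horizontalFOVDegrees: Double) -> Double {
    let halfFOVRadians = horizontalFOVDegrees / 2 * .pi / 180
    return (imageWidth / 2) / tan(halfFOVRadians)
}

/// Horizontal disparity in pixels of a point at `depth` (meters) for a rectified
/// stereo pair with the given `baseline` (meters).
func disparityInPixels(baseline: Double, depth: Double, focalLength: Double) -> Double {
    focalLength * baseline / depth
}

// Assumed setup: 1920-pixel-wide images at a 60-degree field of view.
let focalLength = focalLengthInPixels(imageWidth: 1920, horizontalFOVDegrees: 60)
let humanLike = disparityInPixels(baseline: 0.064, depth: 50, focalLength: focalLength)
let wideRig = disparityInPixels(baseline: 0.64, depth: 50, focalLength: focalLength)
print("Disparity at 50 m: \(humanLike) px (64 mm) vs \(wideRig) px (640 mm)")
```

Because disparity scales linearly with the baseline, a rig ten times wider produces ten times the disparity for the same distant subject, which is why wide baselines help with faraway scenes and also why they exaggerate depth.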

The next piece of metadata, the field of view, is the horizontal angle, in degrees, captured by each camera. Generally, the higher the field of view, the more immersive, since more of the scene is in view. However, for a fixed sensor, higher fields of view will spread more content across the same number of pixels, so the angular resolution will be lower. Additionally, since our projection is rectilinear, fields of view greater than 90 degrees will be inefficient in their angular sampling density, especially on the edges, and thus aren’t really recommended. For this example, I’m going to use 60 degrees for the field of view.
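One way to see the sampling inefficiency is to compute how many pixels cover one degree at different angles off-axis. In a rectilinear image a ray at angle θ lands at x = f·tan θ, so the local density is f / cos²θ pixels per radian. The sketch below applies that pinhole relation; the function names are hypothetical.

```swift
import Foundation

/// Pixels per degree at `angleDegrees` off the optical axis, for a rectilinear image
/// of the given width and horizontal field of view.
func pixelsPerDegree(atAngleDegrees angleDegrees: Double,
                     imageWidth: Double,
                     horizontalFOVDegrees: Double) -> Double {
    let halfFOV = horizontalFOVDegrees / 2 * .pi / 180
    let focalLength = (imageWidth / 2) / tan(halfFOV)   // in pixels
    let theta = angleDegrees * .pi / 180
    // x = f * tan(theta), so dx/dtheta = f / cos^2(theta) pixels per radian.
    let pixelsPerRadian = focalLength / (cos(theta) * cos(theta))
    return pixelsPerRadian * .pi / 180
}

/// Ratio of sampling density at the horizontal edge to the density at the image center.
func edgeToCenterDensityRatio(horizontalFOVDegrees: Double) -> Double {
    let halfFOV = horizontalFOVDegrees / 2 * .pi / 180
    return 1 / (cos(halfFOV) * cos(halfFOV))
}

for fov in [60.0, 90.0, 120.0] {
    print("FOV \(fov): edge/center density ratio = \(edgeToCenterDensityRatio(horizontalFOVDegrees: fov))")
}
```

Mathematically the ratio is 4/3 at 60 degrees, 2 at 90 degrees, and 4 at 120 degrees. As the field of view widens, a growing share of the pixels is spent on the edges while the center of the image gets fewer pixels per degree.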

Now let’s check all of our image characteristics. The first guideline states that the images should be stereo rectified, meaning that the optical axes of the two cameras are parallel, as shown here, and the two images are on the same plane. If the optical axes of the cameras aren’t parallel, as shown here, the images will not be co-planar, and thus aren’t stereo rectified. If that’s the case, you’ll have to undistort them with an image processing technique called stereo rectification.

Next, let's make sure the image centers are aligned with the optical centers. This means that the center pixel of each image is aligned with the optical axis. This is true by default for pinhole cameras, but there are a few subtle ways we can accidentally break this alignment.

To see, let’s take a closer look at our images.

Here’s our stereo image pair, with an image center that’s aligned with the optical axis. But if we crop the images, the center pixel will change. The new center pixel is no longer aligned with the optical axis, so this cropped stereo pair is sub-optimal. Similarly, shifting the images left and right will also change the center pixel, and break this guideline. Applying these kinds of horizontal shifts is a common practice in 3D filmmaking, to achieve artistic effect. But it isn't recommended for spatial media. To achieve those effects, we have the disparity adjustment metadata, which we’ll come back to later.

First, let’s make sure we have no vertical disparity. The easiest way to do this is to take your left and right images, and identify a feature point common to both images. Here, the beak of the bird. And draw a line between them. If that line is perfectly horizontal, then the images are correctly aligned, with no vertical disparity.
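Returning to the cropping point above, a quick sketch can show how far a crop moves the image center away from the optical axis. The function name is hypothetical, and it assumes the original image center sits exactly on the optical axis, as it does for the pinhole cameras in this example.

```swift
/// Horizontal offset, in pixels, between the center of a cropped image and the
/// center of the original image. Zero means the crop keeps the center pixel
/// on the optical axis (assuming the original center was on it).
func centerOffsetAfterCrop(originalWidth: Double,
                           cropOriginX: Double,
                           cropWidth: Double) -> Double {
    let croppedCenter = cropOriginX + cropWidth / 2
    return croppedCenter - originalWidth / 2
}

// A crop taken symmetrically around the middle keeps the center aligned.
let symmetric = centerOffsetAfterCrop(originalWidth: 1920, cropOriginX: 160, cropWidth: 1600)
// A crop anchored at the left edge moves the center 160 pixels to the left.
let leftAnchored = centerOffsetAfterCrop(originalWidth: 1920, cropOriginX: 0, cropWidth: 1600)
print(symmetric, leftAnchored)
```

Note that even a symmetric crop narrows the captured angle, so the field of view metadata would need to describe the cropped image rather than the original.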

Here’s a different stereo pair, where the cameras were misaligned.

We can check for vertical disparity by marking out feature points, and connecting them. Here, the line between the feature points isn’t perfectly horizontal. This means that the images have vertical disparity, and will be uncomfortable to view in Vision Pro.
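The feature-point check can be expressed as a small sketch: for each pair of matched points, compare the vertical pixel coordinates. The types, function names, and the half-pixel tolerance are all hypothetical, and finding the matching feature points in the first place is outside the scope of this example.

```swift
/// A feature location in pixel coordinates.
struct FeaturePoint {
    var x: Double
    var y: Double
}

/// Vertical disparity, in pixels, between the same feature in the left and right images.
func verticalDisparity(left: FeaturePoint, right: FeaturePoint) -> Double {
    right.y - left.y
}

/// Returns true when any matched feature pair differs vertically by more than
/// `tolerance` pixels. The default tolerance is an arbitrary illustrative value.
func hasVerticalDisparity(matches: [(left: FeaturePoint, right: FeaturePoint)],
                          tolerance: Double = 0.5) -> Bool {
    matches.contains { abs(verticalDisparity(left: $0.left, right: $0.right)) > tolerance }
}

// The beak appears on the same row in both images: the connecting line is horizontal.
let aligned = [(left: FeaturePoint(x: 980, y: 540), right: FeaturePoint(x: 940, y: 540))]
// The beak appears 12 rows lower in the right image: the connecting line is tilted.
let misaligned = [(left: FeaturePoint(x: 980, y: 540), right: FeaturePoint(x: 940, y: 552))]
print(hasVerticalDisparity(matches: aligned), hasVerticalDisparity(matches: misaligned))
```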

Let’s review what we’ve done so far. We’ve got our left and right frames, which we’ve ensured are rectilinear, stereo rectified, optical axis aligned, and free of vertical disparity. We also know our baseline and field of view.

Now let’s see how this content gets rendered. That’s where the magic happens, and where all this careful camera calibration will finally pay off.

With the information provided, the renderer can construct a camera model that defines how objects in the world were captured by the cameras.

The light rays described by that camera model are then traced out in reverse, to render two images at the correct size and distance.

When viewed in Vision Pro, one image is displayed to each of the viewer’s eyes. Their visual system fuses those two images into a 3D scene, and perceives a 3D object. When the files are constructed correctly, with accurate metadata, and ensuring all the image characteristics guidelines are met, the perceived image that your eyes see, will exactly match the real object that the cameras saw.

You’re effectively teleported back to that moment, replacing the cameras with your eyes. This is what creates that magical feeling of "reliving a moment", in first-person.
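As a back-of-the-envelope illustration of "correct size and distance", an image shown at a given distance has to span the same horizontal angle that the camera captured. The sketch below computes that width from the field of view. The function name is hypothetical, and the actual visionOS renderer is not described in the session, so this only shows the geometry.

```swift
import Foundation

/// Width, in meters, that an image must have at `distance` meters from the viewer
/// to subtend the same horizontal field of view the camera captured.
func imagePlaneWidth(atDistance distance: Double, horizontalFOVDegrees: Double) -> Double {
    let halfFOVRadians = horizontalFOVDegrees / 2 * .pi / 180
    return 2 * distance * tan(halfFOVRadians)
}

// With the 60-degree field of view from this example, an image placed 1 meter away.
let widthAtOneMeter = imagePlaneWidth(atDistance: 1, horizontalFOVDegrees: 60)
print("Image width at 1 m: \(widthAtOneMeter) m")
```

This is why an inaccurate field of view value changes the apparent scale of everything in the scene.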

Our spatial photo can be equally powerful in the shared space. In this mode, visionOS displays these images in a window, scaling down the scene while still rendering it stereoscopically. The goal here is not to be perfectly true-to-life, but rather to provide an artistically and emotionally resonant 3D effect. To make sure people are comfortable viewing content in a window, we introduce the last piece of spatial metadata, the disparity adjustment.

If you’re familiar with stereo photography, the disparity adjustment is a hint to the renderer, on where to place the zero parallax plane. It’s sometimes called the convergence adjustment or a horizontal image translation.

Really it just represents a horizontal offset, encoded as a percentage of the image width.

A negative value will push the left frame to the right, and the right frame to the left. A positive value will push them in the opposite direction.

As the images shift horizontally, the 3D scene perceived by your brain will shift in depth, along the z-axis. A negative value therefore, will make content appear closer to the viewer, and a positive value will push it further away. Be careful when setting this value! Too large of a negative value can cause depth conflicts, and too large of a positive value can cause your eyes to diverge, especially for objects in the background, which can be uncomfortable. For this scene, I’m going to pick a value that places the hummingbird just beyond the front of the window. A shift of even a couple percentage points is enough to dramatically alter the perception of the image, so make sure to test in Vision Pro, when picking a value for the disparity adjustment.
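To get a feel for the magnitudes involved, the sketch below converts a disparity adjustment given as a percentage of image width into pixels, and reports the direction of the perceived depth change described above. The names and the 1920-pixel width are hypothetical, and the sketch says nothing about how the value is stored in the file.

```swift
/// Direction in which the perceived scene moves for a given adjustment sign.
enum PerceivedShift {
    case closer     // negative adjustment
    case farther    // positive adjustment
    case unchanged  // zero adjustment
}

/// Converts a disparity adjustment, given as a percentage of image width, to pixels.
func disparityAdjustmentInPixels(percentOfWidth: Double, imageWidth: Double) -> Double {
    percentOfWidth / 100 * imageWidth
}

/// Maps the sign of the adjustment to the perceived depth change.
func perceivedShift(forAdjustmentPercent percent: Double) -> PerceivedShift {
    if percent < 0 { return .closer }
    if percent > 0 { return .farther }
    return .unchanged
}

// A 2 percent adjustment on an assumed 1920-pixel-wide image.
let shiftInPixels = disparityAdjustmentInPixels(percentOfWidth: -2, imageWidth: 1920)
print("Shift: \(shiftInPixels) px, perceived: \(perceivedShift(forAdjustmentPercent: -2))")
```

Even a couple of percentage points amounts to tens of pixels at this width, which matches the advice to test on Vision Pro when picking a value.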

We can now add in our horizontal disparity adjustment, and with that, we have all the inputs we need to construct a spatial asset. All that’s left is to package up this data into the appropriate file format: stereo MV-HEVC for video, and stereo HEIC for photos.

Our final creation now renders correctly in Apple Vision Pro, and looks amazing, in both the windowed presentation, and when fully immersive.

Let’s wrap it up. In this talk, we’ve learned about the multiple types of stereo media on visionOS: 3D video, Apple Immersive Video, and spatial photos and videos. We’ve seen how to capture, detect, and display spatial photos and videos in your apps, and we’ve discovered how to create your own spatial media with accurate metadata and perfectly aligned images for a great experience in Vision Pro.

That’s it from me! I can’t wait to see all the amazing new experiences you’ll build with spatial media.


6:19 - Spatial video capture on iPhone 15 Pro

    import AVFoundation

    class CaptureManager {
        var session: AVCaptureSession!
        var input: AVCaptureDeviceInput!
        var output: AVCaptureMovieFileOutput!

        func setupSession() throws -> Bool {
            session = AVCaptureSession()
            session.beginConfiguration()

            // Spatial video needs the wide and ultra wide cameras streaming together.
            guard let videoDevice = AVCaptureDevice.default(
                .builtInDualWideCamera,
                for: .video,
                position: .back
            ) else {
                return false
            }

            // Pick the first format that supports spatial video capture.
            var foundSpatialFormat = false
            for format in videoDevice.formats {
                if format.isSpatialVideoCaptureSupported {
                    try videoDevice.lockForConfiguration()
                    videoDevice.activeFormat = format
                    videoDevice.unlockForConfiguration()
                    foundSpatialFormat = true
                    break
                }
            }
            guard foundSpatialFormat else { return false }

            // Add the camera input to the session.
            let videoDeviceInput = try AVCaptureDeviceInput(device: videoDevice)
            guard session.canAddInput(videoDeviceInput) else { return false }
            session.addInput(videoDeviceInput)
            input = videoDeviceInput

            // Add the movie file output to the session.
            let movieFileOutput = AVCaptureMovieFileOutput()
            guard session.canAddOutput(movieFileOutput) else { return false }
            session.addOutput(movieFileOutput)
            output = movieFileOutput

            // Request the strongest stabilization mode on the video connection.
            guard let connection = output.connection(with: .video) else { return false }
            guard connection.isVideoStabilizationSupported else { return false }
            connection.preferredVideoStabilizationMode = .cinematicExtendedEnhanced

            // Enable spatial video recording on the output.
            guard movieFileOutput.isSpatialVideoCaptureSupported else { return false }
            movieFileOutput.isSpatialVideoCaptureEnabled = true

            session.commitConfiguration()
            session.startRunning()
            return true
        }
    }

9:13 - Observing spatial capture discomfort reasons

    // Request the new value explicitly; without `.new`, `change.newValue` is nil.
    let observation = videoDevice.observe(\.spatialCaptureDiscomfortReasons, options: [.new]) { (device, change) in
        guard let newValue = change.newValue else { return }
        if newValue.contains(.subjectTooClose) {
            // Guide user to move back
        }
        if newValue.contains(.notEnoughLight) {
            // Guide user to find a brighter environment
        }
    }

9:58 - PhotosPicker

    import SwiftUI
    import PhotosUI

    struct PickerView: View {
        @State var selectedItem: PhotosPickerItem?

        var body: some View {
            // Only spatial photos and videos are shown in the picker.
            PhotosPicker(selection: $selectedItem, matching: .spatialMedia) {
                Text("Choose a spatial photo or video")
            }
        }
    }

10:14 - PhotoKit - all spatial assets

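    import Photos

    // Returns every spatial photo and video in the user's Photo Library.
    func fetchSpatialAssets() -> PHFetchResult<PHAsset> {
        let fetchOptions = PHFetchOptions()
        // Match assets whose subtype bitmask includes the spatial-media flag.
        fetchOptions.predicate = NSPredicate(
            format: "(mediaSubtypes & %d) != 0",
            argumentArray: [PHAssetMediaSubtype.spatialMedia.rawValue]
        )
        return PHAsset.fetchAssets(with: fetchOptions)
    }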

10:36 - AVAssetPlaybackAssistant

    import AVFoundation

    extension AVURLAsset {
        // Checks whether the asset's playback options include spatial video.
        func isSpatialVideo() async -> Bool {
            let assistant = AVAssetPlaybackAssistant(asset: self)
            let options = await assistant.playbackConfigurationOptions
            return options.contains(.spatialVideo)
        }
    }