
Create enhanced spatial computing experiences with ARKit

Learn how to create captivating immersive experiences with ARKit's latest features. Explore ways to use room tracking and object tracking to further engage with your surroundings. We'll also share how your app can react to changes in your environment's lighting on this platform. Discover improvements in hand tracking and plane detection which can make your spatial experiences more intuitive.

Chapters

- 0:00 - Introduction

- 2:30 - Room tracking

- 5:46 - Plane detection

- 6:46 - Object tracking

- 9:34 - World tracking

- 11:38 - Hand tracking

Resources

Related Videos

WWDC24

- Build a spatial drawing app with RealityKit

- Explore object tracking for visionOS

- Render Metal with passthrough in visionOS

WWDC23

-


Hi, my name is Divyesh. In this session, you’ll learn about some exciting updates coming to ARKit on visionOS. Last year, we introduced ARKit as the host of core, real-time algorithms that power the entire operating system. We’re amazed by the phenomenal spatial experiences you’ve built using ARKit’s tracking and scene understanding capabilities. Blackbox, for instance, leverages ARKit to detect hand gestures needed to solve engaging puzzles.

Super Fruit Ninja also uses hand tracking for slicing through fruits, and scene understanding features for having the remnants splash onto the floor in a realistic way.

With your feedback, we spent the past year developing several new features to help your apps further understand and engage with your surroundings. Let’s go over some of the advancements we’ve made. I’ll begin with some improvements in scene understanding. We have a new room tracking feature. And an update to plane detection, which can help you customize experiences based on the room you’re in. I’ll also tell you about the new object tracking capability, which can help you create interactive visualizations for real-world items. Afterwards, I’ll explain how ARKit’s world tracking has become more robust for various lighting conditions. Finally, I’ll go over ways your app can benefit from our latest improvements in hand tracking. Before diving in, let’s start with a quick review of ARKit on visionOS.

To recap, when your visionOS app presents a Full Space, it can receive data from ARKit in the form of Anchors.

Anchors represent a position and orientation in three-dimensional space. For example, plane detection data is delivered in the form of PlaneAnchors, which hold information about surfaces detected in the real world.

ARKit will deliver Anchors to your app through Data Providers. A Data Provider is the interface for configuring an individual ARKit feature, and receiving its data. The PlaneDetectionProvider is the interface for receiving PlaneAnchors.

You can run an ARKitSession by supplying a set of Data Providers that you want to use together in your experience. If you’re new to ARKit’s APIs or would like more context, I highly recommend watching last year’s session, "Meet ARKit for spatial computing".

With that, let’s get into our first new feature, room tracking.

Many apps on visionOS are set within the room a person is in. With mesh and plane anchor data, you can already augment the setting with realistically placed virtual content.

Now, you can take your engagement to a whole new level. Imagine having a virtual pet greet you when you walk into your bedroom. Room tracking makes it possible to tailor unique experiences like this, for each space that you visit.

As you look around, ARKit can identify the boundaries of the room that you’re currently in. It uses this information to compute precisely-aligned geometries of the detected walls and floor. Furthermore, ARKit can recognize transitions between rooms. When you enter a new area, it will switch to delivering data for the space that you now occupy. This can help your app exhibit different experiences based on the room that you’re in.

All this information gets surfaced through the new Room Tracking data provider, which requires world sensing authorization.

The RoomTrackingProvider can tell you about the rooms that have been visited.

This interface lets you access the current room’s anchor, if ARKit determines that you’re in a confined space. You can use the current room anchor to shape your experience according to this interior’s layout.
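As a rough illustration of the setup described so far, the following sketch requests world sensing authorization, runs a RoomTrackingProvider, and reads the current room anchor. The class name `RoomTrackingModel` and its `start()` method are hypothetical; the ARKit calls are the ones I believe the visionOS SDK offers, so treat this as a sketch rather than the session's own code.

```swift
import ARKit

// Hypothetical model type that keeps the session and provider alive.
// If both were locals, they would be released when the function returned.
final class RoomTrackingModel {
    let session = ARKitSession()
    let roomTracking = RoomTrackingProvider()

    func start() async {
        // Room tracking requires world sensing authorization.
        let results = await session.requestAuthorization(for: [.worldSensing])
        guard results[.worldSensing] == .allowed else {
            // The person declined; continue without room data.
            return
        }

        do {
            try await session.run([roomTracking])
        } catch {
            // Handle session run error.
            return
        }

        // This is nil unless ARKit has determined that the person is in a
        // confined space, so it may well be nil right after startup.
        if let room = roomTracking.currentRoomAnchor {
            print("Currently in room \(room.id)")
        }
    }
}
```

Holding the session and provider as stored properties is a design choice for the sketch: the provider only delivers data while the session that runs it stays alive.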

Your app can also listen for asynchronous room tracking updates, similar to how you would receive anchors from our other data providers. This is a good way to receive the latest information about the space you’re currently in, especially as you transition between rooms. Note that the RoomTrackingProvider only updates anchor data for the room that you’re currently in.

Let’s look at what’s delivered. Each RoomAnchor holds spatial information about a specific, confined space.

This anchor can indicate whether it models the current room or not. This is useful when you want certain content to only appear in the space that you’re presently in.

Each RoomAnchor also holds the geometry of the surrounding walls and floor. It’s comprised of a collection of vertices, edges, and triangular faces that define the shape of a room. Note that this mesh is more aligned with the room’s boundaries, compared to the geometries you’d find in mesh and plane anchors.

You can also choose to get the mesh as an array of disjoint geometries, one for each complete wall or floor. These can be useful for occluding virtual content that’s placed outside of your room, or for augmentations like opening a virtual portal along an entire wall.

You can also test whether a given three-dimensional point exists within the room’s boundaries. When used with world tracking, you can create magical experiences where virtual objects come to life when you enter a certain room.

Lastly, this new Anchor offers the room’s associated plane and mesh anchor IDs. These can help you optimize your app when combined with other scene understanding features. For example, you may want to avoid performing expensive operations for planes and meshes that exist outside the current room.
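To tie the RoomAnchor properties above together, here is a minimal sketch that listens for room updates and queries each anchor. The function name `processRoomUpdates` and the `petPosition` parameter are hypothetical, and the point is assumed to be expressed in whatever coordinate space `contains(_:)` expects. The `geometries(of:)` spelling follows the declaration shown in this session; the SDK you build against may name it differently.

```swift
import ARKit

// Hypothetical helper: reacts to room anchor updates from a provider that is
// already running on an ARKitSession.
func processRoomUpdates(from roomTracking: RoomTrackingProvider,
                        petPosition: SIMD3<Float>) async {
    for await update in roomTracking.anchorUpdates {
        let room = update.anchor

        switch update.event {
        case .added, .updated:
            // Only show content for the room the person is presently in.
            guard room.isCurrentRoom else { continue }

            // One disjoint geometry per complete wall, e.g. for occlusion
            // or for a portal spanning an entire wall.
            let walls = room.geometries(of: .wall)

            // Test whether the virtual pet's position lies inside this room.
            let petIsInside = room.contains(petPosition)

            // IDs of planes that belong to this room, useful for skipping
            // expensive work on planes outside of it.
            let roomPlaneIDs = Set(room.planeAnchorIDs)

            print("walls: \(walls.count), pet inside: \(petIsInside), planes: \(roomPlaneIDs.count)")

        case .removed:
            // Tear down any content tied to this room.
            break
        }
    }
}
```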

These are just a few ways to elevate your app with room tracking. We’re excited to see you do even more!

Next, let’s talk about an update to plane detection. As ARKit detects planar surfaces in your surroundings, it can give you information about them in the form of PlaneAnchors. These are useful for placing virtual content around surfaces, like placing a board game on a table, or a virtual poster on a wall.

The PlaneDetectionProvider can inform your app of detected horizontal surfaces, and vertical surfaces.

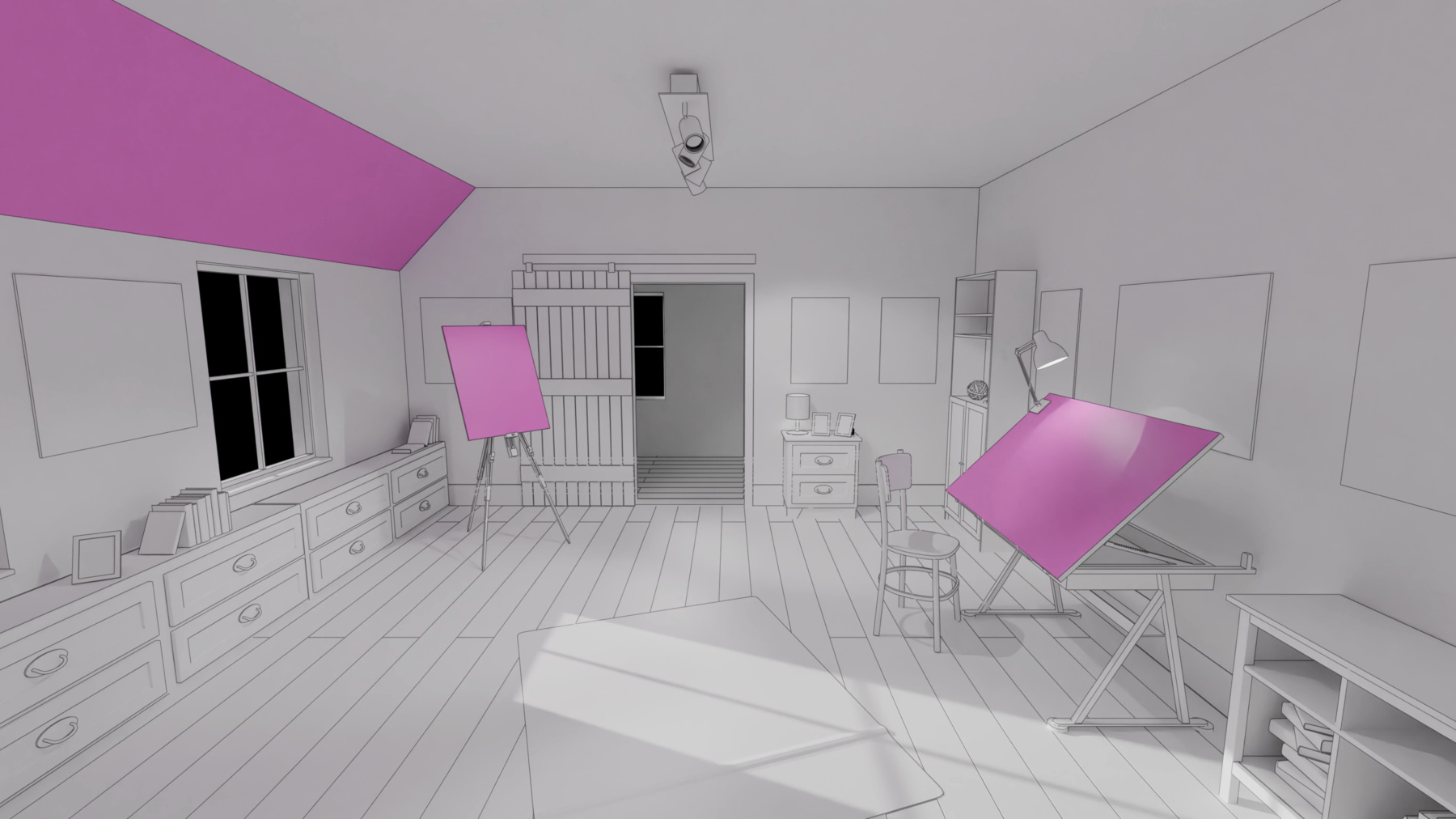

This year, we’re introducing a new Slanted plane alignment, used for detecting angled surfaces.

Your app will continue to receive horizontally and vertically aligned plane anchors, without any changes. If you’d like to also get Slanted plane anchors, we’ve made it easy for you to do so with just one change. All you’ll need to do is update the alignments you configure your PlaneDetectionProvider with.
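A minimal sketch of that configuration change might look like the following. The class name `PlaneDetectionModel` is hypothetical, and the code assumes the new alignment is spelled `.slanted` in the SDK.

```swift
import ARKit

// Hypothetical model type that owns the session and the provider.
final class PlaneDetectionModel {
    let session = ARKitSession()

    // Horizontal and vertical are the existing alignments; slanted is the
    // addition described in this session.
    let planeDetection = PlaneDetectionProvider(
        alignments: [.horizontal, .vertical, .slanted]
    )

    func run() async {
        do {
            try await session.run([planeDetection])
        } catch {
            // Handle session run error.
            return
        }

        for await update in planeDetection.anchorUpdates {
            // Skip anchors that ARKit has removed.
            if update.event == .removed { continue }

            if update.anchor.alignment == .slanted {
                print("Slanted plane detected: \(update.anchor.id)")
            }
        }
    }
}
```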

Simply include the new Slanted alignment. It’s that easy!

Now, let’s take a look at object tracking, which is new to visionOS.

ARKit can now track real-world objects that are statically placed in your environment. With object tracking, you can get the position and orientation of each of these items, to anchor virtual content to them. For example, an educational app can exhibit 3D visualizations while a person observes certain instruments.

To have ARKit track these items for you, you’ll need to supply a set of ReferenceObjects. Reference objects encode spatial features of known, real-world items. To make a ReferenceObject, you’ll first need a 3D model of the item you want to track, in a USDZ format.

Then, you can use CreateML’s new spatial object tracking feature in order to generate a ReferenceObject from your asset.

Finally, you can use the ReferenceObject to track with ARKit.

To learn more about creating a ReferenceObject, be sure to check out this year’s session, "Explore object tracking for visionOS".

For this session, we’ll focus on the last step of tracking a physical item with ARKit, after a ReferenceObject is made.

Let’s look at how simple this is with the new API.

A ReferenceObject can be loaded at runtime either with a file URL, or from a Bundle.

Here, we load a globe ReferenceObject with its URL.

After successfully loading the reference object, we can configure a new ObjectTrackingProvider with it.

Next, we can run the new ObjectTrackingProvider on an ARKitSession.

After the data provider enters a running state, we can start processing incoming tracking results.

These tracking results will be delivered in the form of ObjectAnchors, with each representing a tracked item.

The ObjectAnchor holds an axis-aligned bounding box of the detected object. You can get the center and extent of the bounding box, as well as its minimum and maximum 3D coordinate points.

This anchor also holds its corresponding ReferenceObject. You can access the underlying USDZ file path from it, if the ReferenceObject included one.

You can listen for ObjectAnchor updates similar to how you would with our other data providers.
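As a sketch of what listening for those updates could look like, the function below reads the bounding box and pose of each tracked item. The name `processObjectUpdates` is hypothetical, and the provider is assumed to be already running on an ARKitSession.

```swift
import ARKit

// Hypothetical helper: consumes object tracking results as they arrive.
func processObjectUpdates(from objectTracking: ObjectTrackingProvider) async {
    for await update in objectTracking.anchorUpdates {
        let anchor = update.anchor

        switch update.event {
        case .added, .updated:
            // Ignore anchors whose item is not currently being tracked.
            guard anchor.isTracked else { continue }

            // Axis-aligned bounding box of the detected object.
            let box = anchor.boundingBox

            // Pose of the tracked item relative to the app's origin; the
            // last column of the matrix holds the translation.
            let translation = anchor.originFromAnchorTransform.columns.3

            print("center: \(box.center), extent: \(box.extent), position: \(translation)")

        case .removed:
            // Remove any virtual content attached to this item.
            break
        }
    }
}
```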

The latest tracking updates can then be used to supplement your objects with sensational virtual content. For example, you can add an orbiting body to the globe with realistic shadows, and even peek into the core!

We’re also publishing some sample apps that you can download and try out on your device. Be sure to check them out later, for more detailed information.

Now, I’ll share some improvements to world tracking, which is a foundational part of this platform. ARKit relies on a variety of cameras and sensors to run sophisticated algorithms, like world tracking.

If a person’s physical location has insufficient lighting, it can affect sensor data, and possibly cause algorithms to not operate seamlessly. For example, being in a dark, unlit room may affect the quality of world tracking in your experience.

This year, we’ve added a mechanism for reacting to such changes in lighting. If the system detects that tracking is limited due to low light conditions or other physical factors, it will only track changes to its orientation, but not its position in space.

Your app will already benefit from orientation-based tracking at a system level. This means that you won’t have to worry about complete tracking loss due to lighting, if you’re ever in a challenging environment.

If you’d like to be well-prepared for these scenarios, we’re offering APIs that can inform you about these changes in tracking, so that you can adjust your experience accordingly. For example, you may want to gracefully rearrange content you’ve placed, while their anchored positions are not updated. If your app is truly dependent on full world tracking, you may want to ask the person to move towards a brighter environment to resume the experience.

If you’re using SwiftUI, this indication is available through the new "world tracking limitations" environment value.

As an example, you can listen for changes to this value, in order to rearrange content when positional tracking is unavailable.

If you’re using ARKit, we’ve expanded the DeviceAnchor to now hold a “.trackingState” property which indicates whether tracking is fully functional, or limited to only orientation-based tracking. If the system switches to orientation-based tracking, your existing world anchors may be marked as “not tracked.” When world tracking recovers, the tracked status of your persisted anchors will be restored.
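For the ARKit path, a check of the device anchor's tracking state could be sketched as follows. The function name `reportTrackingState` is hypothetical, and the case names `.tracked`, `.orientationTracked`, and `.untracked` are my assumption about how the property's values are spelled; verify them against the SDK before relying on this.

```swift
import ARKit
import QuartzCore

// Hypothetical helper: inspects the current device anchor from a world
// tracking provider that is already running on an ARKitSession.
func reportTrackingState(using worldTracking: WorldTrackingProvider) {
    guard let device = worldTracking.queryDeviceAnchor(atTimestamp: CACurrentMediaTime()) else {
        // No device anchor is available yet.
        return
    }

    switch device.trackingState {
    case .tracked:
        // Position and orientation are both tracked.
        print("Full world tracking")
    case .orientationTracked:
        // Only orientation is tracked; anchored positions are not updated.
        print("Orientation-only tracking, rearrange content")
    case .untracked:
        print("Device is not tracked")
    @unknown default:
        break
    }
}
```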

Lastly, I’ll cover some advancements in hand tracking, which is a primary means of input on this platform.

With the introduction of visionOS, we added the ability to track a person’s hands and fingers. Your app can use this information to detect gestures, and anchor virtual content to your hands.

Last year, we established two avenues for getting this information in the form of HandAnchors. You can either poll the HandTrackingProvider for the latest updates, or you can asynchronously receive HandAnchors as they become available. We’re delighted to share that the HandTrackingProvider will now deliver this data at display rate! Asynchronous HandAnchor updates have some latency, but they’ll be smoother with the increased, display-aligned frequency that they’re delivered at. This effect will be especially perceivable while wearing Apple Vision Pro.

If you want hand tracking results with minimal delay, ARKit can now predict HandAnchors that are expected to exist at a given time in the near future. Whether your app renders with Compositor Services or RealityKit, you can leverage the new hands prediction API to get tracking results faster.

Let’s look at an example. If you’re using Compositor Services, you should target the "trackable anchor time", which is new to visionOS. Predicting HandAnchors at this timestamp will help you achieve the best content registration.

You can convert that timestamp into seconds, and give it to ARKit to make a sophisticated forward prediction.

Here’s an app that uses Compositor Services to render a virtual teapot. By using predicted HandAnchors, the anchored teapot remains fixed to the hand, even as it moves around. Note that because this is a low-latency prediction, it may come at the expense of some accuracy. For more details about rendering using Compositor Services, I recommend watching this year’s session, "Render Metal with passthrough in visionOS".

You may also want to revisit the examples shown in last year’s session, "Meet ARKit for spatial computing".

Both of these hand tracking improvements have advantages for different use cases. Display-aligned HandAnchor updates are great for gesture detection and drawing smooth strokes in experiences where latency isn’t critical. Hand predictions are useful when you want content as attached to your hand as possible, while fluidity isn’t so important.
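To illustrate the gesture detection use case for display-aligned updates, here is a minimal pinch detector built on the asynchronous HandAnchor stream. The names `isPinching` and `detectPinches` are hypothetical, and the 1.5 cm threshold is an illustrative value, not one given in this session.

```swift
import ARKit
import simd

/// Pure helper: treats two fingertips as pinched when they are closer than
/// `threshold` meters. The default is an illustrative assumption.
func isPinching(thumbTip: SIMD3<Float>,
                indexTip: SIMD3<Float>,
                threshold: Float = 0.015) -> Bool {
    simd_distance(thumbTip, indexTip) < threshold
}

// Hypothetical helper: consumes hand anchors from a provider that is already
// running on an ARKitSession.
func detectPinches(from handTracking: HandTrackingProvider) async {
    for await update in handTracking.anchorUpdates {
        let hand = update.anchor
        guard hand.isTracked, let skeleton = hand.handSkeleton else { continue }

        let thumb = skeleton.joint(.thumbTip)
        let index = skeleton.joint(.indexFingerTip)
        guard thumb.isTracked, index.isTracked else { continue }

        // Both joint transforms are relative to the same hand anchor, so the
        // distance between their translations is meaningful as-is.
        let t = thumb.anchorFromJointTransform.columns.3
        let i = index.anchorFromJointTransform.columns.3

        if isPinching(thumbTip: SIMD3(t.x, t.y, t.z),
                      indexTip: SIMD3(i.x, i.y, i.z)) {
            print("\(hand.chirality) hand is pinching")
        }
    }
}
```

Keeping the distance test in a pure function makes the threshold easy to tune and to check without a device.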

We’ve also surfaced these advancements on the RealityKit side, for hand AnchorEntities.

If you’re using RealityKit to attach content near your hands, you can choose between "continuous" or "predicted" hand tracking, depending on your needs.
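On the RealityKit side, choosing a tracking mode might be sketched like this. The function name `makeHandAnchors` and the marker sphere are hypothetical; the code assumes the two modes are exposed as `.continuous` and `.predicted` on the anchor entity's tracking mode.

```swift
import RealityKit

// Hypothetical helper: builds one hand anchor per tracking mode.
@MainActor
func makeHandAnchors() -> [AnchorEntity] {
    // Predicted tracking: content stays as attached to the hand as possible,
    // possibly at the expense of some accuracy.
    let predictedPalm = AnchorEntity(.hand(.right, location: .palm),
                                     trackingMode: .predicted)

    // Continuous tracking: smoother motion where latency is not critical,
    // such as drawing strokes from a fingertip.
    let continuousTip = AnchorEntity(.hand(.left, location: .indexFingerTip),
                                     trackingMode: .continuous)

    // A small sphere so the predicted anchor has something visible attached.
    let marker = ModelEntity(mesh: .generateSphere(radius: 0.01),
                             materials: [SimpleMaterial(color: .white, isMetallic: false)])
    predictedPalm.addChild(marker)

    return [predictedPalm, continuousTip]
}
```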

For a detailed example, watch the new session, "Build a spatial drawing app with RealityKit".

And that’s what’s new in ARKit this year. To summarize, I covered our latest improvements which can help you elevate your spatial computing experiences.

You also learned about the new room tracking and object tracking capabilities, which allow you to take your creativity even further.

And we have many more remarkable sessions for you to explore the new visionOS.

My entire team and I are so excited to see the wonderful things you’ll make, with the newest ARKit! Thanks for watching!


3:35 - RoomTrackingProvider

```swift
// RoomTrackingProvider

@available(visionOS, introduced: 2.0)
public final class RoomTrackingProvider: DataProvider, Sendable {

    /// The room which a person is currently in, if any.
    public var currentRoomAnchor: RoomAnchor? { get }

    /// An async sequence of all anchor updates.
    public var anchorUpdates: AnchorUpdateSequence<RoomAnchor> { get }

    ...
}
```

4:20 - RoomAnchor

```swift
@available(visionOS, introduced: 2.0)
public struct RoomAnchor: Anchor, Sendable, Equatable {

    /// True if this is the room which a person is currently in.
    public var isCurrentRoom: Bool { get }

    /// Get the geometry of the mesh in the anchor's coordinate system.
    public var geometry: MeshAnchor.Geometry { get }

    /// Get disjoint mesh geometries of a given classification.
    public func geometries(of classification: MeshAnchor.MeshClassification) -> [MeshAnchor.Geometry]

    /// True if this room contains the given point.
    public func contains(_ point: SIMD3<Float>) -> Bool

    /// Get the IDs of the plane anchors associated with this room.
    public var planeAnchorIDs: [UUID] { get }

    /// Get the IDs of the mesh anchors associated with this room.
    public var meshAnchorIDs: [UUID] { get }
}
```

8:06 - Load Object Tracking referenceobject

```swift
// Object tracking

Task {
    do {
        let url = URL(fileURLWithPath: "/path/to/globe.referenceobject")
        let referenceObject = try await ReferenceObject(from: url)
        let objectTracking = ObjectTrackingProvider(referenceObjects: [referenceObject])
    } catch {
        // Handle reference object loading error.
    }
    ...
}
```

8:27 - Run ARKitSession with ObjectTracking provider

```swift
let session = ARKitSession()

Task {
    do {
        try await session.run([objectTracking])
    } catch {
        // Handle session run error.
    }

    for await event in session.events {
        switch event {
        case .dataProviderStateChanged(_, newState: let newState, _):
            if newState == .running {
                // Ready to start processing anchor updates.
            }
        ...
        }
    }
}
```

8:43 - ObjectAnchor

```swift
// ObjectAnchor

@available(visionOS, introduced: 2.0)
public struct ObjectAnchor: TrackableAnchor, Sendable, Equatable {

    /// An axis-aligned bounding box.
    public struct AxisAlignedBoundingBox: Sendable, Equatable {
        ...
    }

    /// The bounding box of this anchor.
    public var boundingBox: AxisAlignedBoundingBox { get }

    /// The reference object which this anchor corresponds to.
    public var referenceObject: ReferenceObject { get }
}
```

11:03 - World Tracking - reacting to changes in lighting conditions

```swift
struct WellPreparedView: View {
    @Environment(\.worldTrackingLimitations) var worldTrackingLimitations

    var body: some View {
        ...
        .onChange(of: worldTrackingLimitations) {
            if worldTrackingLimitations.contains(.translation) {
                // Rearrange content when anchored positions are unavailable.
            }
        }
    }
}
```

12:51 - Hands prediction

```swift
// Hands prediction

func submitFrame(_ frame: LayerRenderer.Frame) {
    ...

    guard let drawable = frame.queryDrawable() else { return }

    // Get the trackable anchor time to target.
    let trackableAnchorTime = drawable.frameTiming.trackableAnchorTime

    // Convert the timestamp into units of seconds.
    let anchorPredictionTime = LayerRenderer.Clock.Instant.epoch.duration(to: trackableAnchorTime).timeInterval

    // Predict hand anchors for the time that provides best content registration.
    let (leftHand, rightHand) = handTracking.handAnchors(at: anchorPredictionTime)

    ...
}
```
