
Capture machine-readable codes and text with VisionKit

Meet the Data Scanner in VisionKit: This framework combines AVCapture and Vision to enable live capture of machine-readable codes and text through a simple Swift API. We'll show you how to control the types of content your app can capture by specifying barcode symbologies and language selection. We'll also explore how you can enable guidance in your app, customize item highlighting or regions of interest, and handle interactions after your app detects an item.

For more on interacting with Live Text through still images or paused video frames, watch "Add Live Text interaction to your app" from WWDC22.


Ron Santos: Hey, hope you're well. I'm Ron Santos, an input engineer. Today I’m here to talk to you about capturing machine-readable codes and text from a video feed, or, as we like to call it, data scanning. What exactly do we mean by data scanning? It’s simply a way of using a sensor, like a camera, to read data.

Typically that data comes in the form of text. For example, a receipt with interesting information like telephone numbers, dates, and prices.

Or maybe data comes as a machine-readable code, like the ubiquitous QR code. You’ve probably used a data scanner before, maybe in the Camera app or by using the Live Text features introduced in iOS 15. And I bet you’ve used apps in your day-to-day life with their own custom scanning experience. But what if you had to build your own data scanner? How would you do it? The iOS SDK has more than one solution for you, depending on your needs.

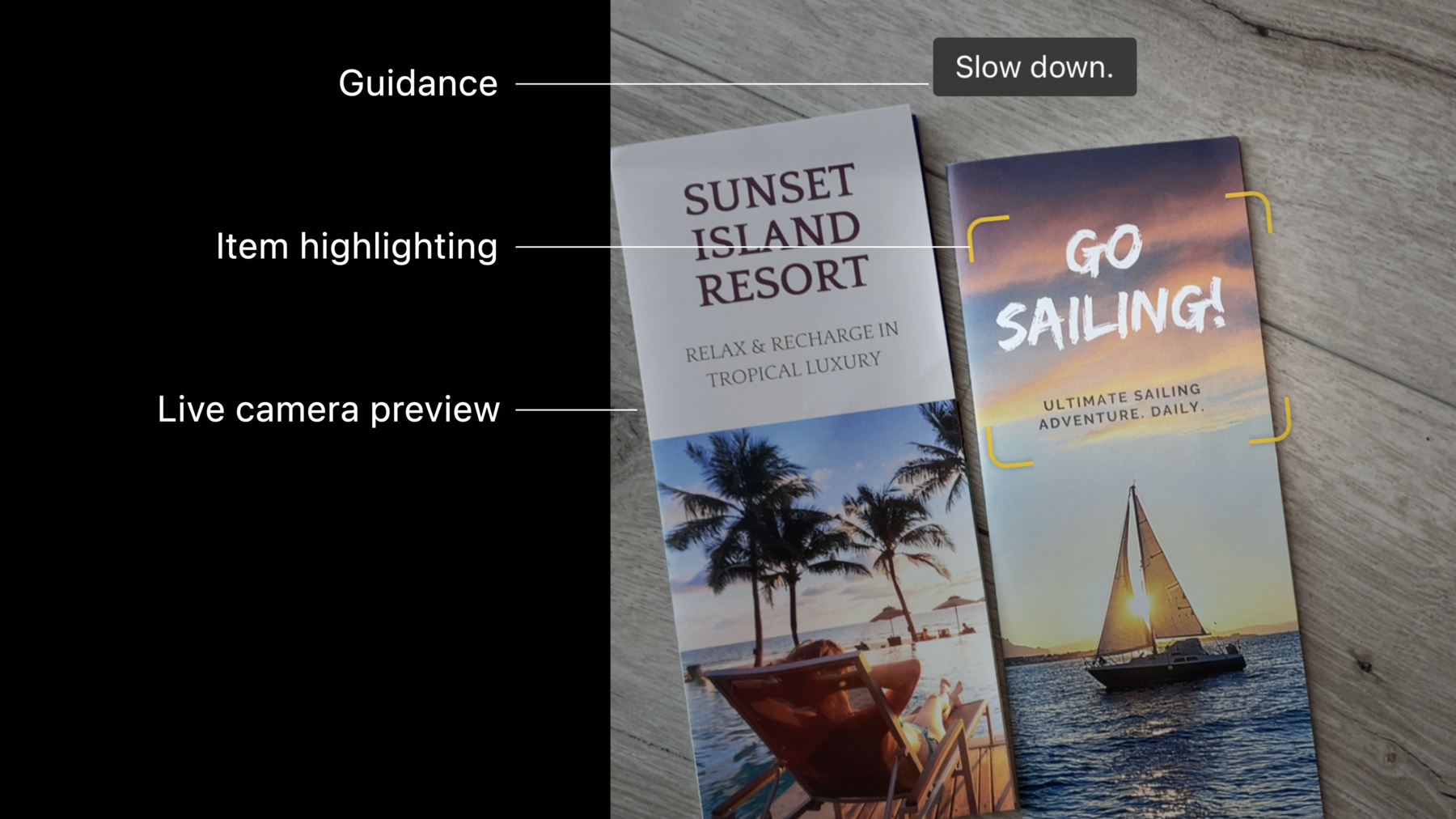

One option is that you could use the AVFoundation framework to set up the camera graph, connecting inputs and outputs to a session and configuring it to yield AVMetadataObjects like machine-readable codes.

If you also wanted to capture text, another option would be to combine the AVFoundation and Vision frameworks. In this diagram, instead of metadata output, you create video data output. The video data output results in the delivery of sample buffers that can be fed to the Vision framework for use with text and barcode recognition requests, resulting in Vision observation objects. For more on using Vision for data scanning, check out “Extract document data using Vision” from WWDC21.

Okay, so that’s using AVFoundation and Vision for data scanning. In iOS 16, we have a new option that encapsulates all of that for you. Introducing the DataScannerViewController in the VisionKit framework. It combines the features of AVFoundation and Vision specifically for the purpose of data scanning. DataScannerViewController users are treated to features like a live camera preview, helpful guidance labels, item highlighting, tap-to-focus, which is also used for selection, and lastly, pinch-to-zoom to get a closer look.

And let’s talk about features for developers like you. The DataScannerViewController is a UIViewController subclass that you can present however you choose. Coordinates for recognized items are always in view coordinates, saving you from converting from image space, to Vision coordinates, to view coordinates. You’ll also be able to limit the active portion of the view by specifying a region-of-interest, which is also in view coordinates. For text recognition, you can specify content types to limit the type of text you find. And for machine-readable codes, you can specify exactly which symbologies to look for.

I get it; I use your apps, and I understand that data scanning is only a small portion of their functionality. But it could require a lot of code. With DataScannerViewController, our goal is to perform the common tasks for you, so you can focus your time elsewhere. Next, I’ll walk you through adding it to your app.

Let’s start with the privacy usage description. When apps try to capture video, iOS asks the user to grant their explicit permission to access the camera. You’ll want to provide a descriptive message justifying your need. To do that, add the “Privacy - Camera Usage Description” key (NSCameraUsageDescription) to your app’s Info.plist file. Remember, be as descriptive as possible, so users know what they’re agreeing to.

Now onto the code. Wherever you would like to present a data scanner, start by importing VisionKit.

Next, because data scanning isn’t supported on all devices, use the isSupported class property to hide any buttons or menus exposing the functionality, so users aren’t presented with something they can’t use.

If you’re curious, any 2018 and newer iPhone and iPad devices with the Apple Neural Engine support data scanning. You’ll also want to check for availability. Recall the privacy usage description? Scanning is available if the user approves the app for camera access and if the device is free of any restrictions, like the Camera access restriction set here, in Screen Time’s Content & Privacy Restrictions. Now you’re ready to configure an instance. That’s done by first specifying the types of data you’re interested in. For example, you can scan for both QR codes and text.
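As a minimal sketch of the two checks just described, the decision can be kept in a small pure function and fed from the `isSupported` and `isAvailable` class properties. `ScannerEntryPoint`, `scannerEntryPoint(isSupported:isAvailable:)`, and `currentScannerEntryPoint()` are hypothetical names introduced for illustration; the `#if os(iOS)` guard is only there so the sketch also compiles off-device.

```swift
#if os(iOS)
import VisionKit
#endif

/// Hypothetical helper type: how the app should expose its "Scan" button.
enum ScannerEntryPoint: Equatable {
    case hidden    // the device does not support data scanning
    case disabled  // supported, but camera access is denied or restricted
    case enabled   // supported and currently available
}

/// Pure decision logic, kept apart from the framework so it is easy to test.
func scannerEntryPoint(isSupported: Bool, isAvailable: Bool) -> ScannerEntryPoint {
    guard isSupported else { return .hidden }
    return isAvailable ? .enabled : .disabled
}

#if os(iOS)
/// Reads the two VisionKit class properties and maps them to a UI state.
@available(iOS 16.0, *)
@MainActor
func currentScannerEntryPoint() -> ScannerEntryPoint {
    scannerEntryPoint(
        isSupported: DataScannerViewController.isSupported,
        isAvailable: DataScannerViewController.isAvailable
    )
}
#endif
```

Separating "hidden" from "disabled" is a design choice of this sketch: an unsupported device never shows the feature, while a denied or restricted camera could show a disabled control with an explanation.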

You can optionally pass a list of languages for the text recognizer to use as a hint for various processing aspects, like language correction. If you have an idea what languages to expect, list them out. It’s especially useful when two languages have similar-looking scripts. If you do not provide any languages, the user’s preferred languages are used by default.

You can also request a specific text content type. In this example, I want my scanner to look for URLs. Now that you stated the types of data to recognize, you can create your DataScanner instance.

In the previous example, I specified a barcode symbology, a recognition language, and a text content type. Let me take a moment to explain the other options for each of those. For barcode symbologies, we support all the same symbologies as Vision’s barcode detector. In terms of languages, as part of the Live Text feature, DataScannerViewController supports exactly the same languages. And in iOS 16, I’m happy to say we’re adding support for Japanese and Korean. Of course, this can change again in the future. So use the supportedTextRecognitionLanguages class property to retrieve the most up-to-date list. Finally, when scanning for text with specific semantic meaning, the DataScannerViewController can find these seven types.
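One way to combine the language hint with the supported-language list is sketched below. `usableLanguages(requested:supported:)` and `makeURLAndQRDataTypes(preferring:)` are hypothetical helpers, and the matching rule (treating `"ja"` as matching `"ja"` or `"ja-JP"`) is an assumption about the identifier format rather than documented behavior.

```swift
#if os(iOS)
import VisionKit
#endif

/// Hypothetical helper: keep only the requested languages that appear in the
/// supported list. Assumes identifiers look like "ja" or "ja-JP" and treats a
/// bare language code as matching any regional variant.
func usableLanguages(requested: [String], supported: [String]) -> [String] {
    requested.filter { wanted in
        supported.contains { $0 == wanted || $0.hasPrefix(wanted + "-") }
    }
}

#if os(iOS)
/// Builds data types for QR codes plus URLs in text, hinting only languages
/// the recognizer reports as supported. An empty list falls back to the
/// user's preferred languages, as described above.
@available(iOS 16.0, *)
@MainActor
func makeURLAndQRDataTypes(
    preferring requested: [String]
) -> Set<DataScannerViewController.RecognizedDataType> {
    let languages = usableLanguages(
        requested: requested,
        supported: DataScannerViewController.supportedTextRecognitionLanguages
    )
    return [
        .barcode(symbologies: [.qr]),
        .text(languages: languages, textContentType: .URL)
    ]
}
#endif
```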

We’re now ready to present the Data Scanner to the user. Present it like any other view controller, going fullscreen, using a sheet, or adding it to another view hierarchy altogether. It’s all up to you. Afterwards, when presentation completes, call startScanning() to begin looking for data. So now I want to take a step back and spend some time going over Data Scanner’s initialization parameters. I used one here, recognizedDataTypes. But there are others that can help you customize your experience.

Let’s go through each one. recognizedDataTypes allows you to specify what kind of data to recognize. Text, machine-readable codes, and what types of each. qualityLevel can be balanced, fast, or accurate. Fast will sacrifice resolution in favor of speed in scenarios where you expect large and easily-legible items, like text on signs. Accurate will give you the best accuracy, even with small items like micro QR codes or tiny serial numbers. I recommend starting with balanced, which should work great for most cases. recognizesMultipleItems gives you the option to look for one or more items in the frame, like if you want to scan multiple barcodes at a time. When it’s false, the center-most item is recognized by default until the user taps elsewhere. Enable high frame rate tracking when you draw highlights. It allows the highlights to follow items as closely as possible when the camera moves or the scene changes. Enable pinch-to-zoom or disable it. We also have methods you can use to modify the zoom level yourself. When you enable guidance, labels show at the top of the screen to help direct the user. And, finally, you can enable system highlighting if you need it, or you can disable it to draw your own custom highlighting.
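To make the parameter walkthrough concrete, here is a sketch of an initializer call that spells out every option. The function name `makeSerialNumberScanner()` and the chosen values are illustrative assumptions (a scanner tuned for small items with custom highlights); the argument labels are the VisionKit initializer's as I understand them.

```swift
#if os(iOS)
import VisionKit

/// Hypothetical factory: a scanner tuned for small text and tiny QR codes,
/// with system highlighting off so the app can draw its own highlights.
@available(iOS 16.0, *)
@MainActor
func makeSerialNumberScanner() -> DataScannerViewController {
    DataScannerViewController(
        recognizedDataTypes: [.text(), .barcode(symbologies: [.qr, .microQR])],
        qualityLevel: .accurate,               // favor small items over speed
        recognizesMultipleItems: true,         // report every item in the frame
        isHighFrameRateTrackingEnabled: true,  // keep custom highlights close to items
        isPinchToZoomEnabled: true,
        isGuidanceEnabled: true,               // show guidance labels at the top
        isHighlightingEnabled: false           // the app draws custom highlights
    )
}
#endif
```

For most apps, starting from `.balanced` and leaving the remaining parameters at their defaults is likely enough; this sketch lists them all only to show where each option goes.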

Now that you know how to present the data scanner, let’s talk about how you’d ingest the recognized items, and also how you’d draw your own custom highlights.

First, provide a delegate to the data scanner. Now that you have a delegate, you can implement the dataScanner didTapOn method, which is called when the user taps on an item. With it, you’ll receive an instance of this new type RecognizedItem. RecognizedItem is an enum that holds text or a barcode as an associated value. For text, the transcript property holds the recognized string. For barcodes, if its payload contains a string, you can retrieve it with the payloadStringValue.

Two other things you should know about RecognizedItem: First, each recognized item has a unique identifier you can use to track an item throughout its lifetime. That lifetime starts when the item is first seen and ends when it’s no longer in view. And second, each RecognizedItem has a bounds property. The bounds isn’t a rect, but it consists of four points, one for each corner.

Next, let’s talk about three related delegate methods that are called when recognized items in the scene change. The first is didAdd, called when items in the scene are newly recognized. If you wanted to create your own custom highlight, you’d create one here for each new item. You can keep track of the highlights using the ID from its associated item. And when adding your new view to the view hierarchy, add it to DataScanner’s overlayContainerView, so it appears above the camera preview, but below any other supplemental chrome.
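Because `bounds` is four corner points rather than a rect, a custom highlight view often needs a frame derived from those corners. The sketch below computes the smallest axis-aligned rectangle that contains them. `Quad` is a hypothetical stand-in that mirrors the four corners of an item's bounds so the logic can run without VisionKit; in an app you would read the points from `item.bounds` instead.

```swift
import Foundation  // CGPoint and CGRect

/// Hypothetical stand-in for the four corner points of a recognized item's bounds.
struct Quad {
    var topLeft: CGPoint
    var topRight: CGPoint
    var bottomRight: CGPoint
    var bottomLeft: CGPoint
}

/// Returns the smallest axis-aligned rectangle containing all four corners.
/// Useful as a frame for a highlight view; a rotated item yields a slightly
/// larger rectangle than the item itself.
func boundingRect(of quad: Quad) -> CGRect {
    let corners = [quad.topLeft, quad.topRight, quad.bottomRight, quad.bottomLeft]
    let xs = corners.map { $0.x }
    let ys = corners.map { $0.y }
    let minX = xs.min() ?? 0
    let maxX = xs.max() ?? 0
    let minY = ys.min() ?? 0
    let maxY = ys.max() ?? 0
    return CGRect(x: minX, y: minY, width: maxX - minX, height: maxY - minY)
}
```

If the highlight should hug a tilted item exactly, drawing a path through the four corners would be a better fit than a rectangular frame.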

The next delegate method is didUpdate, which is called when the items move or the camera moves. It can also be called when the transcription of recognized text changes. It changes because the longer the scanner sees the text, the more accurate it’ll be with its transcription. Use the IDs from the updated items to retrieve your highlights from the dictionary you just created, and then animate the views to their newly updated bounds.

And finally, the didRemove delegate method, which is called when items are no longer visible in the scene. In this method, you can forget about any highlight views you associated with the removed items, and you can remove them from the view hierarchy.

In summary, if you draw your own highlights over items, those three delegate methods will be crucial for you to control animating highlights into the scene, animating their movement, and animating them out. And for each of those three previous delegate methods, you’ll also be given an array of all the items currently recognized. That may come in handy for text recognition because the items are placed in their natural reading order, meaning the user would read the item at index 0 before the item at index 1 and so on.

That’s an overview of how to use the DataScannerViewController. Before wrapping up, I wanted to quickly mention a few other features, like capturing photos. You can call the capturePhoto method, which will asynchronously return a high quality UIImage.
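The reading-order guarantee on the full item array makes it straightforward to rebuild the scanned text as one string. This is a sketch under assumptions: `joinedTranscript(of:text:)` and `scannedText(from:)` are hypothetical helpers, and joining with newlines is an arbitrary choice.

```swift
#if os(iOS)
import VisionKit
#endif

/// Hypothetical helper: joins the text of items in array order, skipping
/// items that carry no text (for example, barcodes).
func joinedTranscript<Item>(of items: [Item], text: (Item) -> String?) -> String {
    items.compactMap(text).joined(separator: "\n")
}

#if os(iOS)
/// Applies the helper to the allItems array passed to the delegate methods,
/// relying on its natural reading order.
@available(iOS 16.0, *)
func scannedText(from allItems: [RecognizedItem]) -> String {
    joinedTranscript(of: allItems) { item in
        if case .text(let text) = item { return text.transcript }
        return nil
    }
}
#endif
```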

And if you aren’t creating custom highlights, you might not need these three delegate methods. Instead, you can use the recognizedItems property. It’s an AsyncStream that will be continuously updated as the scene changes.

Thanks for hanging out. Remember, the iOS SDK gives you options for creating computer vision workflows with the AVFoundation and Vision frameworks. But maybe you’re creating an app that scans text or machine-readable codes with a live video feed, like a pick-and-pack app, a back-of-the-warehouse app, or a point-of-sale app. If so, then give the DataScannerViewController in VisionKit a look. As I went over today, it has a number of initialization parameters and delegate methods that you can use to provide a custom experience that matches your app’s style and needs.

And finally, I wanted to give a shout out to the “Add Live Text interaction to your app” session, where you can learn about VisionKit’s Live Text abilities for static images.

Until next time, peace.


4:40 - Creating a data scanner instance and presenting it

    import VisionKit

    // Specify the types of data to recognize
    let recognizedDataTypes: Set<DataScannerViewController.RecognizedDataType> = [
        .barcode(symbologies: [.qr]),
        // uncomment to filter on specific languages (e.g., Japanese)
        // .text(languages: ["ja"])
        // uncomment to filter on specific content types (e.g., URLs)
        // .text(textContentType: .URL)
    ]

    // Create the data scanner, present it, and start scanning!
    let dataScanner = DataScannerViewController(recognizedDataTypes: recognizedDataTypes)
    present(dataScanner, animated: true) {
        try? dataScanner.startScanning()
    }

8:11 - Set a delegate

    // Specify the types of data to recognize
    let recognizedDataTypes: Set<DataScannerViewController.RecognizedDataType> = [
        .barcode(symbologies: [.qr]),
        .text(textContentType: .URL)
    ]

    // Create the data scanner, set its delegate, present it, and start scanning!
    let dataScanner = DataScannerViewController(recognizedDataTypes: recognizedDataTypes)
    dataScanner.delegate = self
    present(dataScanner, animated: true) {
        try? dataScanner.startScanning()
    }

8:19 - Handling tap interactions

    func dataScanner(_ dataScanner: DataScannerViewController, didTapOn item: RecognizedItem) {
        switch item {
        case .text(let text):
            print("text: \(text.transcript)")
        case .barcode(let barcode):
            print("barcode: \(barcode.payloadStringValue ?? "unknown")")
        default:
            print("unexpected item")
        }
    }

9:11 - Adding custom highlights via the didAdd delegate method

    // Dictionary to store our custom highlights keyed by their associated item ID.
    var itemHighlightViews: [RecognizedItem.ID: HighlightView] = [:]

    // For each new item, create a new highlight view and add it to the view hierarchy.
    func dataScanner(_ dataScanner: DataScannerViewController, didAdd addedItems: [RecognizedItem], allItems: [RecognizedItem]) {
        for item in addedItems {
            let newView = newHighlightView(forItem: item)
            itemHighlightViews[item.id] = newView
            dataScanner.overlayContainerView.addSubview(newView)
        }
    }

9:37 - Animating custom highlights during the didUpdate delegate method

    // Animate highlight views to their new bounds
    func dataScanner(_ dataScanner: DataScannerViewController, didUpdate updatedItems: [RecognizedItem], allItems: [RecognizedItem]) {
        for item in updatedItems {
            if let view = itemHighlightViews[item.id] {
                animate(view: view, toNewBounds: item.bounds)
            }
        }
    }

10:03 - Removing custom highlights during the didRemove delegate callback

    // Remove highlights when their associated items are removed.
    func dataScanner(_ dataScanner: DataScannerViewController, didRemove removedItems: [RecognizedItem], allItems: [RecognizedItem]) {
        for item in removedItems {
            if let view = itemHighlightViews[item.id] {
                itemHighlightViews.removeValue(forKey: item.id)
                view.removeFromSuperview()
            }
        }
    }

10:54 - Take a still photo and save it to the camera roll

    // Take a still photo and save to the camera roll
    if let image = try? await dataScanner.capturePhoto() {
        UIImageWriteToSavedPhotosAlbum(image, nil, nil, nil)
    }

11:10 - Using the recognizedItems async stream to keep track of items

    // Send a notification when the recognized items change.
    var currentItems: [RecognizedItem] = []

    func updateViaAsyncStream() async {
        guard let scanner = dataScannerViewController else { return }

        let stream = scanner.recognizedItems
        for await newItems: [RecognizedItem] in stream {
            let diff = newItems.difference(from: currentItems) { a, b in
                return a.id == b.id
            }

            if !diff.isEmpty {
                currentItems = newItems
                sendDidChangeNotification()
            }
        }
    }
