Add Live Text interaction to your app

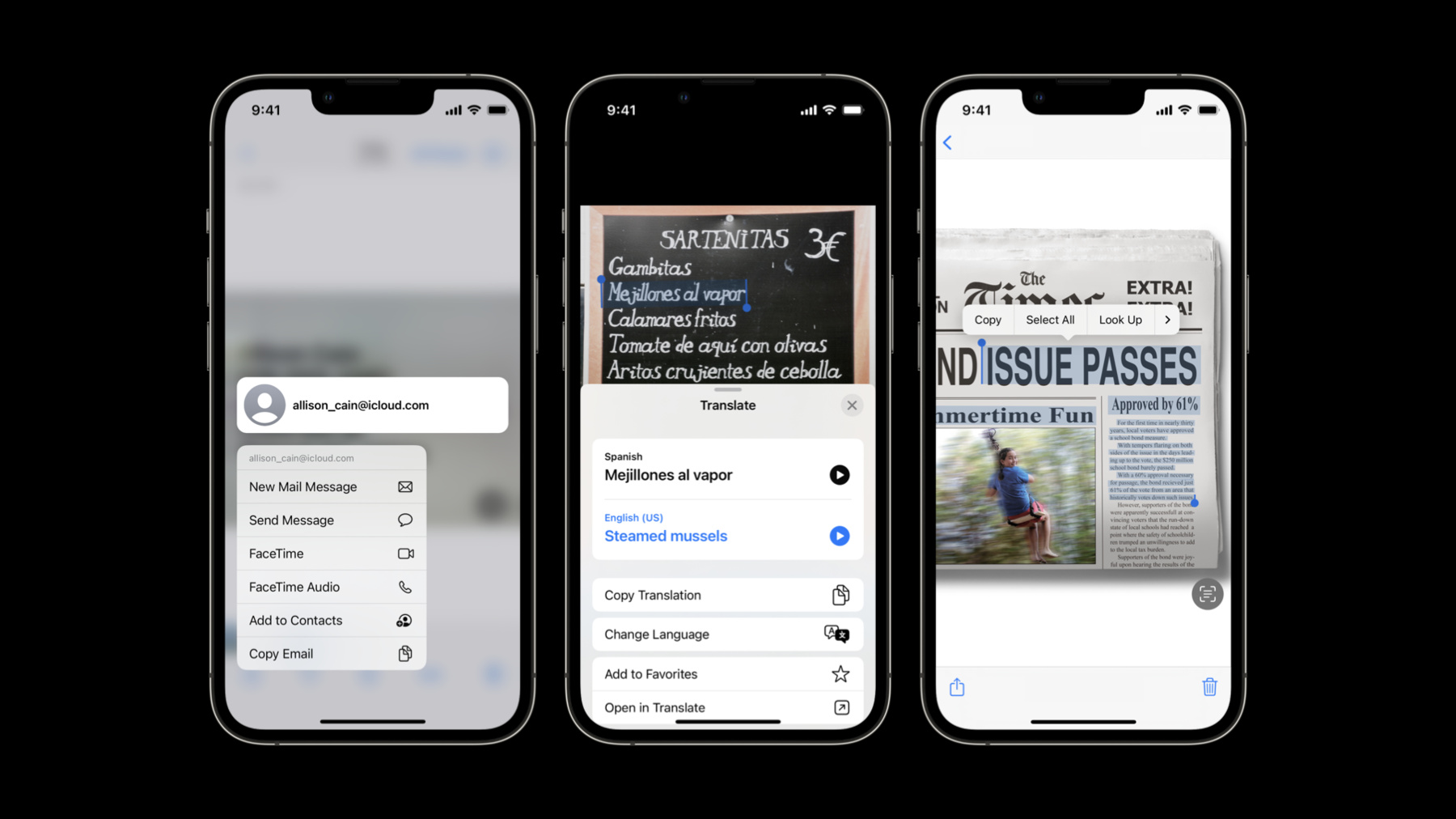

Learn how you can bring Live Text support for still photos or paused video frames to your app. We'll share how you can easily enable text interactions, translation, data detection, and QR code scanning within any image view on iOS, iPadOS, or macOS. We'll also go over how to control interaction types, manage the supplementary interface, and resolve potential gesture conflicts.

To learn more about capturing and interacting with detected data in live camera feeds, watch "Capture machine-readable codes and text with VisionKit" from WWDC22.

♪ Mellow instrumental hip-hop music ♪

Hi! My name is Adam Bradford. I'm an engineer on the VisionKit team, and if you're looking to add Live Text to your app, you're in the right place. But first, what is Live Text? Live Text analyzes an image and provides features that let users interact with its content, such as selecting and copying text; performing actions like Look Up and Translate; and providing data-detection workflows, such as mapping an address, dialing a number, or jumping to a URL. Live Text even allows for QR code interaction. Imagine how you could put this to use in your app. Want to know more? Well, you're in the right place.

For this session, I'm going to start with a general overview of the Live Text API. Then I will explore how to implement this API in an existing application. Next, I will dive into some tips and tricks that may help you when adding Live Text to your app.

Now for an overview of the Live Text API. At a high level, the Live Text API is available in Swift. It works beautifully on static images and can be adapted for paused video frames. If you need to analyze video in a live camera stream to search for items like text or QR codes, VisionKit also has a data scanner available. Check out this session from my colleague Ron for more info. The Live Text API is available starting in iOS 16 on devices with an Apple Neural Engine, and on all devices that support macOS 13. It consists of four main classes. To use it, first, you'll need an image. This image is then fed into an ImageAnalyzer, which performs the analysis asynchronously. Once the analysis is complete, the resulting ImageAnalysis object is provided to either an ImageAnalysisInteraction (iOS and iPadOS) or an ImageAnalysisOverlayView (macOS), depending on your platform. Seems pretty straightforward so far, right?

Now, I'm going to demonstrate how to add it to an existing application. And here's our application. This is a simple image viewer, which has an image view inside of a scroll view.
Notice that I can both zoom and pan. But try as I might, I cannot select any of this text or activate any of these data detectors. This simply will not do.

Here's the project in Xcode. To add Live Text to this application, I'll be modifying a view controller subclass. First, I'm going to need an ImageAnalyzer and an ImageAnalysisInteraction. Here, I'm simply overriding viewDidLoad and adding the interaction to the image view. Next, I need to know when to perform the analysis.
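
The setup step can be sketched as follows. This is a minimal sketch rather than the session's sample project: `PhotoViewController` and its `imageView` are hypothetical, and the `ImageAnalyzer.isSupported` guard is an addition that reflects the Neural Engine requirement mentioned in the overview.

```swift
import UIKit
import VisionKit

// Hypothetical view controller standing in for your own image viewer.
final class PhotoViewController: UIViewController {
    let imageView = UIImageView()

    // One analyzer and one interaction, created up front.
    // The analysis itself runs later, when an image is set.
    let analyzer = ImageAnalyzer()
    let interaction = ImageAnalysisInteraction()

    override func viewDidLoad() {
        super.viewDidLoad()
        imageView.contentMode = .scaleAspectFit
        imageView.frame = view.bounds
        imageView.autoresizingMask = [.flexibleWidth, .flexibleHeight]
        // UIImageView disables user interaction by default; enabling it is a
        // cautious choice so the interaction's gestures can receive touches.
        imageView.isUserInteractionEnabled = true
        view.addSubview(imageView)

        // On iOS, Live Text needs a device with an Apple Neural Engine,
        // so skip the interaction where image analysis is unsupported.
        guard ImageAnalyzer.isSupported else { return }

        // Attach the interaction directly to the view that hosts the image.
        imageView.addInteraction(interaction)
    }
}
```

Putting the interaction on the image view itself, rather than on a container, also lets VisionKit derive the contentsRect from the image view's content mode.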

Notice that when a new image is set, I first reset the preferredInteractionTypes and analysis, which were meant for the old image. Now everything is ready for a new analysis. Next, I'm going to create the function we will use and then check that our image exists.

If so, I create a task. Next, I create a configuration to tell the analyzer what it should be looking for; in this case, I'll go with text and machine-readable codes. Generating the analysis can throw, so handle that as appropriate. And now, finally, I'm ready to call the analyze(_:configuration:) method, which starts the analysis process. Once the analysis is complete, an indeterminate amount of time has passed and the state of the application may have changed, so I check both that the analysis was successful and that the displayed image has not changed. If these checks pass, I can simply set the analysis on the interaction and set the preferredInteractionTypes. I'm using .automatic here, which gives me the default system behavior.

I think this is ready for a test. Oh, look at that! The Live Text button has appeared, and yep, I can now select text. Notice how these interface elements are positioned for me automatically and keep their position inside both the image bounds and the visible area, with no work on my part. Tapping the Live Text button highlights any selectable items, underlines data detectors, and shows Quick Actions. I can easily tap this Quick Action to make a call, and even see more options by long-pressing. You have to admit, this is pretty cool. With just these few lines of code, I've taken an ordinary image and brought it to life. This simple application can now select text in images, activate data detectors and QR codes, look up and translate text, and more. Not too shabby for just a few lines of code, if you ask me.

Now that you've seen how to implement Live Text, I'm going to go over a few tips and tricks that may help with your adoption. I'll start by exploring interaction types. Most developers will want .automatic, which provides text selection but also highlights data detectors when the Live Text button is active.
This draws a line underneath any applicable detected items and allows one-tap access to activate them. This is the exact same behavior you would see in built-in applications. If it makes sense for your app to have only text selection without data detectors, you can set the type to .textSelection, and it will not change with the state of the Live Text button. If, however, it makes sense for your app to have only data detectors without text selection, set the type to .dataDetectors. Note that in this mode, since selection is disabled, you will not see a Live Text button, but data detectors will be underlined and ready for one-tap access. Setting preferredInteractionTypes to an empty set disables the interaction. One last note: in text selection or automatic mode, you can still activate data detectors by long-pressing. This is controlled by the allowLongPressForDataDetectorsInTextMode property, which is active when set to true, the default. Simply set it to false to disable this if necessary.

I would now like to take a moment to talk about these buttons at the bottom, collectively known as the supplementary interface. This consists of the Live Text button, which normally lives in the bottom right-hand corner, as well as Quick Actions, which appear on the bottom left. Quick Actions represent any data detectors from the analysis and are visible when the Live Text button is active. Their size, position, and visibility are controlled by the interaction. And while the default position and look match the system, your app may have custom interface elements that overlap them, or it may use different fonts and symbol weights. Let's look at how you can customize this interface. First off, the isSupplementaryInterfaceHidden property. If I want my app to still select text but don't want to show the Live Text button, I can set isSupplementaryInterfaceHidden to true, and you will not see any Live Text button or Quick Actions.
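
The interaction-type options and the two related switches described above can be gathered into one helper. This is a minimal sketch: `LiveTextMode`, `interactionTypes(for:)`, and `configure(_:mode:allowLongPressForDetectors:hideSupplementaryInterface:)` are hypothetical names; only the ImageAnalysisInteraction properties they set come from the session.

```swift
import VisionKit

// Hypothetical app-level setting; not part of VisionKit.
enum LiveTextMode {
    case standard        // system default: selection, plus detectors when the Live Text button is active
    case selectionOnly   // text selection only; unaffected by the Live Text button
    case detectorsOnly   // underlined data detectors only; no Live Text button
    case disabled        // no Live Text interaction at all
}

// Maps the hypothetical mode onto ImageAnalysisInteraction's option set.
func interactionTypes(for mode: LiveTextMode) -> ImageAnalysisInteraction.InteractionTypes {
    switch mode {
    case .standard: return .automatic
    case .selectionOnly: return .textSelection
    case .detectorsOnly: return .dataDetectors
    case .disabled: return []   // an empty set disables the interaction
    }
}

// Applies the mode plus the long-press and supplementary-interface switches.
@MainActor
func configure(_ interaction: ImageAnalysisInteraction,
               mode: LiveTextMode,
               allowLongPressForDetectors: Bool = true,
               hideSupplementaryInterface: Bool = false) {
    interaction.preferredInteractionTypes = interactionTypes(for: mode)
    // Long-press activation of data detectors in text selection or automatic mode (default: true).
    interaction.allowLongPressForDataDetectorsInTextMode = allowLongPressForDetectors
    // Hides the Live Text button and Quick Actions; text selection keeps working.
    interaction.isSupplementaryInterfaceHidden = hideSupplementaryInterface
}
```

Keeping the mode-to-option-set mapping in a plain function makes it easy to check without a live interaction or an analysis.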
We also have a content insets property available, supplementaryInterfaceContentInsets. If you have interface elements that would overlap the supplementary interface, you can adjust the content insets so the Live Text button and Quick Actions adapt nicely to your existing app content when visible. If your app uses a custom font you'd like the interface to adopt, setting supplementaryInterfaceFont causes the Live Text button and Quick Actions to use the specified font for text and its weight for symbols. Please note that, for button-sizing consistency, Live Text ignores the point size.

Switching gears for a moment: if you are not using UIImageView, you may discover that highlights do not match up with your image. This is because with UIImageView, VisionKit can use its contentMode property to calculate the contentsRect automatically for you. Here, the interaction's view has bounds that are bigger than its image content, but it is using the default contentsRect, which is a unit rectangle. This is easily solved by implementing the delegate method contentsRect(for:) and returning a rectangle in the unit coordinate space that describes how the image content relates to the interaction's bounds. For example, returning a rectangle with these values would correct the issue, but please return the correct normalized rectangle based on your app's current content and layout. contentsRect(for:) is called whenever the interaction's bounds change; however, if your contentsRect has changed but your interaction's bounds have not, you can ask the interaction to update by calling setContentsRectNeedsUpdate().

Another question you may have when adopting Live Text is: where is the best place to put this interaction? Ideally, Live Text interactions are placed directly on the view that hosts your image content. As mentioned before, UIImageView handles the contentsRect calculations for you and, while not necessary, is preferred.
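
For a custom view that draws its image aspect-fit and centered, the normalized rectangle that the delegate's contentsRect(for:) method should return can be computed from the image size and the view's bounds. The sketch below assumes exactly that layout; `aspectFitContentsRect(imageSize:boundsSize:)` is a hypothetical helper, and other layouts (aspect fill, top-aligned, custom zoom) need their own math.

```swift
import Foundation  // provides CGSize and CGRect

/// Hypothetical helper: the unit-coordinate rectangle occupied by an image
/// drawn aspect-fit and centered inside a view whose bounds have `boundsSize`.
func aspectFitContentsRect(imageSize: CGSize, boundsSize: CGSize) -> CGRect {
    // Degenerate sizes fall back to the unit rectangle, the default contentsRect.
    guard imageSize.width > 0, imageSize.height > 0,
          boundsSize.width > 0, boundsSize.height > 0 else {
        return CGRect(x: 0, y: 0, width: 1, height: 1)
    }
    // Aspect-fit uses the smaller of the two scale factors.
    let scale = min(boundsSize.width / imageSize.width,
                    boundsSize.height / imageSize.height)
    let drawnWidth = imageSize.width * scale
    let drawnHeight = imageSize.height * scale
    // Centering splits the leftover space evenly on both sides.
    let originX = (boundsSize.width - drawnWidth) / 2
    let originY = (boundsSize.height - drawnHeight) / 2
    // Normalize everything by the bounds to get unit coordinates.
    return CGRect(x: originX / boundsSize.width,
                  y: originY / boundsSize.height,
                  width: drawnWidth / boundsSize.width,
                  height: drawnHeight / boundsSize.height)
}
```

For example, a 100 × 50 image in a 200 × 200 view is drawn at 200 × 100 with 50 points of space above and below, giving a contents rect of x 0, y 0.25, width 1, height 0.5. In a delegate, contentsRect(for:) could return `aspectFitContentsRect(imageSize: image.size, boundsSize: interaction.view?.bounds.size ?? .zero)`, where `image` is whatever your view draws; if the image changes while the bounds stay the same, call setContentsRectNeedsUpdate() so the interaction asks again.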
If you are using UIImageView, just set the interaction on the image view and VisionKit will handle the rest. However, if your image view is inside a scroll view, you may be tempted to place the interaction on the scroll view. This is not recommended and could be difficult to manage, as the scroll view will have a continually changing contentsRect. The solution here is the same: place the interaction on the view that hosts your image content, even if it is inside a scroll view with magnification applied.

I'm going to talk about gestures for a moment. Live Text has a very, very rich set of gesture recognizers, to say the least. Depending on how your app is structured, you may find the interaction responding to gestures and events your app should really handle, or vice versa. Don't panic. Here are a few techniques you can use if you see these issues occur. One common fix is to implement the delegate method interaction(_:shouldBeginAt:for:). If you return false, the action will not be performed. A good place to start is to check whether the interaction has an interactive item at the given point or has an active text selection. The text selection check is there so you can still tap off of the text to deselect it. On the other hand, if your interaction doesn't seem to respond to gestures, it may be because a gesture recognizer in your app is handling them instead. In this case, you can craft a similar solution using your gesture recognizer delegate's gestureRecognizerShouldBegin(_:) method. Here, I perform a similar check and return false if there is an interactive item at the location or there's an active text selection. On a side note, in this example I first convert the gesture recognizer's location to the window's coordinate space by passing in nil, and then convert it to the interaction's view.
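
The two gesture-handling techniques described above can be sketched together in one view controller. This is a minimal sketch under stated assumptions: `GestureAwareViewController`, `appTapRecognizer`, `handleAppTap`, and `liveTextWantsTouch(at:)` are hypothetical names, and the app's competing recognizer is assumed to be a plain tap recognizer on the root view.

```swift
import UIKit
import VisionKit

final class GestureAwareViewController: UIViewController,
                                        ImageAnalysisInteractionDelegate,
                                        UIGestureRecognizerDelegate {
    let imageView = UIImageView()
    let interaction = ImageAnalysisInteraction()
    // Hypothetical app gesture that competes with Live Text for taps.
    lazy var appTapRecognizer = UITapGestureRecognizer(target: self, action: #selector(handleAppTap))

    override func viewDidLoad() {
        super.viewDidLoad()
        imageView.frame = view.bounds
        imageView.isUserInteractionEnabled = true
        view.addSubview(imageView)
        imageView.addInteraction(interaction)
        interaction.delegate = self
        appTapRecognizer.delegate = self
        view.addGestureRecognizer(appTapRecognizer)
    }

    @objc func handleAppTap() {
        // App-specific behavior, such as toggling toolbars.
    }

    // True when Live Text should own a touch at `point` (in the interaction's view):
    // there is an interactive item there, or text is selected so a tap can deselect it.
    private func liveTextWantsTouch(at point: CGPoint) -> Bool {
        interaction.hasInteractiveItem(at: point) || interaction.hasActiveTextSelection
    }

    // ImageAnalysisInteractionDelegate: let Live Text begin only where it has work to do.
    func interaction(_ interaction: ImageAnalysisInteraction,
                     shouldBeginAt point: CGPoint,
                     for interactionType: ImageAnalysisInteraction.InteractionTypes) -> Bool {
        liveTextWantsTouch(at: point)
    }

    // UIGestureRecognizerDelegate: the app's recognizer yields when Live Text should handle the touch.
    func gestureRecognizerShouldBegin(_ gestureRecognizer: UIGestureRecognizer) -> Bool {
        guard let interactionView = interaction.view else { return true }
        // Go through window coordinates (nil), then into the interaction's view;
        // this keeps points aligned when the image view sits in a magnified scroll view.
        let windowPoint = gestureRecognizer.location(in: nil)
        let point = interactionView.convert(windowPoint, from: nil)
        return !liveTextWantsTouch(at: point)
    }
}
```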
Converting through the window's coordinate space like this may be necessary if your interaction is inside a scroll view with magnification applied. If you find your points aren't matching up, give this technique a try. Another option I have found useful is to override UIView's hitTest(_:with:). Here, once again, it's a similar story: I perform the same types of checks as before and, in this case, return the appropriate view.

As always, we want your app to be as responsive as possible, and while the Neural Engine makes analysis extremely efficient, there are a few ImageAnalyzer tips I'd like to share for best performance. Ideally, you want only one ImageAnalyzer shared in your app. Also, we support several types of images. You should always minimize image conversions by passing in the native type that you have; however, if you do happen to have a CVPixelBuffer, that is the most efficient. Also, to best utilize system resources, you should begin your analysis only when, or just before, an image appears onscreen. If your app's content scrolls (for example, it has a timeline), begin analysis only once scrolling has stopped.

Now, this API isn't the only place you'll see Live Text; support is provided automatically in a few frameworks across the system that your app may already use. For example, UITextField and UITextView have Live Text support using the camera for keyboard input. And Live Text is also supported in WebKit and Quick Look. For more information, please check out these sessions. New this year in iOS 16, we've added Live Text support in AVKit. AVPlayerView and AVPlayerViewController support Live Text in paused frames automatically via the allowsVideoFrameAnalysis property, which is enabled by default. Please note, this is only available with non-FairPlay-protected content. If you're using AVPlayerLayer, then you are responsible for managing the analysis and the interaction, but it is very important to use the layer's displayedPixelBuffer() method to get the current frame.
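
The performance tips and the AVPlayerLayer guidance above can be combined in a small sketch: one shared analyzer, fed the native CVPixelBuffer of a paused frame. The `LiveTextAnalysis` namespace is hypothetical, and passing `.up` as the orientation assumes the decoded frame is already upright; pass the real orientation if your video is rotated.

```swift
import AVFoundation
import ImageIO
import VisionKit

// Hypothetical namespace; not a VisionKit type.
@MainActor
enum LiveTextAnalysis {
    // Ideally, a single ImageAnalyzer is shared across the whole app.
    static let analyzer = ImageAnalyzer()
    static let configuration = ImageAnalyzer.Configuration([.text, .machineReadableCode])

    /// Analyzes the frame currently shown by `playerLayer`, or returns nil when
    /// no frame is available (for example, playback is not paused or the
    /// content is FairPlay-protected).
    static func analyzePausedFrame(of playerLayer: AVPlayerLayer) async throws -> ImageAnalysis? {
        // Only returns a buffer while the playback rate is zero.
        // It is a shallow copy: read from it, never write to it.
        guard let pixelBuffer = playerLayer.displayedPixelBuffer() else { return nil }
        // Handing over the native CVPixelBuffer avoids an extra image conversion.
        return try await analyzer.analyze(pixelBuffer, orientation: .up, configuration: configuration)
    }
}
```

The resulting analysis would then be set on an ImageAnalysisInteraction attached to the view hosting the player layer, just as with a still image.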
Using displayedPixelBuffer() is the only way to guarantee the proper frame is being analyzed. It only returns a valid value when the video's playback rate is zero, and the buffer is a shallow copy that is absolutely not safe to write to. And once again, this is only available for non-FairPlay-protected content.

We are thrilled to help bring Live Text functionality to your app. On behalf of everybody on the Live Text team, thank you for joining us for this session. I am stoked to see how you use it for images in your app. And as always, have fun! ♪

2:37 - Live Text Sample Adoption

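```swift
import UIKit
import VisionKit

// BaseController comes from the sample project and provides `imageview` and an overridable `image`.
class LiveTextDemoController: BaseController, ImageAnalysisInteractionDelegate, UIGestureRecognizerDelegate {

    let analyzer = ImageAnalyzer()
    let interaction = ImageAnalysisInteraction()

    override func viewDidLoad() {
        super.viewDidLoad()
        // Attach the interaction to the view that displays the image.
        imageview.addInteraction(interaction)
    }

    override var image: UIImage? {
        didSet {
            // Clear state that belonged to the previous image, then analyze the new one.
            interaction.preferredInteractionTypes = []
            interaction.analysis = nil
            analyzeCurrentImage()
        }
    }

    func analyzeCurrentImage() {
        if let image = image {
            Task {
                // Look for both text and machine-readable codes such as QR codes.
                let configuration = ImageAnalyzer.Configuration([.text, .machineReadableCode])
                do {
                    // analyze(_:configuration:) returns a non-optional ImageAnalysis or throws.
                    let analysis = try await analyzer.analyze(image, configuration: configuration)
                    // The displayed image may have changed while the analysis was running.
                    if image == self.image {
                        interaction.analysis = analysis
                        interaction.preferredInteractionTypes = .automatic
                    }
                } catch {
                    // Handle error…
                }
            }
        }
    }
}
```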
