Retired Document

Important: OpenCL was deprecated in macOS 10.14. To create high-performance code on GPUs, use the Metal framework instead. See Metal.

OpenCL On OS X Basics

Tools provided on OS X let you include OpenCL kernels as resources in Xcode projects, compile them along with the rest of your application, invoke kernels by passing them parameters just as if they were typical functions, and use Grand Central Dispatch (GCD) as the queuing API for executing OpenCL commands and kernels on the CPU and GPU.

If you need to create OpenCL programs at runtime, with source loaded as a string or from a file, or if you want API-level control over queueing, see The OpenCL Specification, available from the Khronos Group at http://www.khronos.org/registry/cl/.

Concepts

In the OpenCL specification, computational processors are called devices. An OpenCL device has one or more compute units. A workgroup executes on a single compute unit. A compute unit is composed of one or more processing elements and local memory.

To OpenCL, a Mac always has a single CPU device. It may have no GPU that OpenCL can use, or it may have several. The CPU on a Mac has multiple compute units, which is why it is called a multicore CPU. The number of compute units in a CPU limits the number of workgroups that can execute concurrently on it.

CPUs usually contain between two and eight compute units, sometimes more. A graphics processing unit (GPU) typically contains many compute units: GPUs in current Mac systems feature tens of compute units, and future GPUs may contain hundreds. The number of compute units does not change how OpenCL counts devices. A CPU with eight compute units and a GPU with 100 compute units are each a single device.

The OS X v10.7 implementation of the OpenCL API makes it easier to design and code data-parallel programs that run on both CPU and GPU devices. In a data-parallel program, the same program (the kernel) runs concurrently on different pieces of data. Each invocation is called a work item and is given a work item ID. Work item IDs are organized in up to three dimensions, called an N-D range.

A kernel is essentially a function written in the OpenCL C language, which allows it to be compiled for execution on any device that supports OpenCL. However, a kernel differs from a function called from another programming language. When you invoke a kernel, many instances of it execute, and each instance processes a different chunk of data.

The program that calls OpenCL functions to set up the context in which kernels run and enqueue the kernels for execution is known as the host application. The host application is run by OS X on the CPU. The device on which the host application executes is known as the host device. Before it runs the kernels, the host application typically:

-

Determines what compute devices are available, if necessary.

-

Selects compute devices appropriate for the application.

-

Creates dispatch queues for selected compute devices.

-

Allocates the memory objects needed by the kernels for execution. (This step may occur earlier in the process, as convenient.)

The host application can enqueue commands to read from and write to memory objects that are also accessible by kernels. Memory objects are used to manipulate device memory. OpenCL uses two types of memory objects: buffer objects and image objects. Buffer objects can contain any type of data; image objects contain data organized into pixels in a given format. See Memory Objects in OS X OpenCL.

Although kernels are enqueued for execution by host applications written in C, C++, or Objective-C, a kernel must be compiled separately to be customized for the device on which it is going to run. You can write your OpenCL kernel source code in a separate file or include it inline in your host application source code.

OpenCL kernels can be:

-

Compiled when the application is built, then run when queued by the host application.

or

-

Compiled and then run at runtime when queued by the host application.

or

-

Run from a previously built binary.

A work item is one parallel execution of a kernel on some data. It is analogous to a thread. A single kernel launch is typically executed by many work items, often hundreds of thousands.

A workgroup is a set of work items that execute concurrently and can share data, for example through the compute unit's local memory. Each workgroup is executed on a single compute unit.

The dimensions of the N-D range and of each workgroup determine how a kernel's work is divided up and processed in parallel. The application usually chooses these dimensions based on the size of the input. There are constraints; for example, a device limits how many work items a single workgroup can contain for a given kernel.

Essential Development Tasks

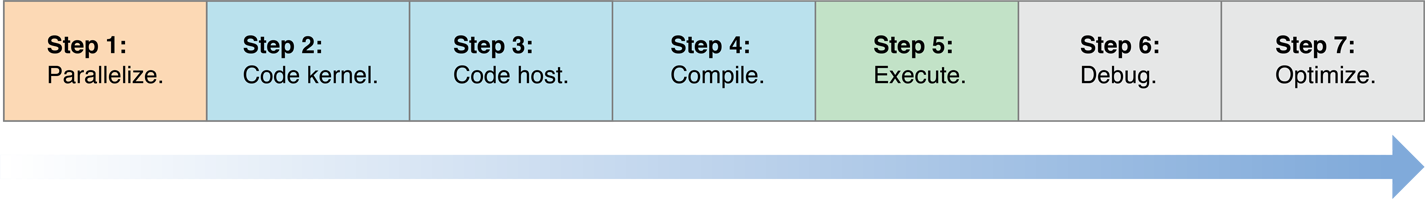

As of OS X v10.7, the OpenCL development process includes these major steps:

-

Identify the tasks to be parallelized.

Determining how to parallelize your program effectively is often the hardest part of developing an OpenCL program. See Identifying Parallelizable Routines.

-

Write your kernel functions.

The Basic Kernel Code Sample shows how you can store your kernel code in a file that can be compiled using Xcode.

-

Write the host code that will call the kernel(s).

See Using Grand Central Dispatch With OpenCL for information about how the host can use GCD to enqueue the kernel.

See Memory Objects in OS X OpenCL for information about how the host passes parameters to and retrieves results from the kernel.

See Sharing Data Between OpenCL and OpenGL for information about how the OpenCL host can share data with OpenGL applications.

See Controlling OpenCL / OpenGL Interoperation With GCD for information about how the OpenCL host can synchronize processing with OpenGL applications using GCD.

See Using IOSurfaces With OpenCL for information about how the OpenCL host can use IOSurfaces to exchange data with a kernel.

The Basic Host Code Sample shows how you can store your host code in a file that can be compiled with Xcode.

-

Compile using Xcode.

See Hello World!.

-

Execute.

-

Debug (if necessary).

See Debugging.

-

Improve performance (if necessary):

If your kernel(s) will be running on a CPU, see Autovectorizer and, for suggestions about additional optimizations, see Improving Performance On the CPU.

If your kernel(s) will be running on a GPU, see Tuning Performance On the GPU.

Copyright © 2018 Apple Inc. All Rights Reserved. Terms of Use | Privacy Policy | Updated: 2018-06-04