
Render Metal with passthrough in visionOS

Get ready to extend your Metal experiences for visionOS. Learn best practices for integrating your rendered content with people's physical environments with passthrough. Find out how to position rendered content to match the physical world, reduce latency with trackable anchor prediction, and more.

Chapters

- 0:00 - Introduction

- 1:49 - Mix rendered content with surroundings

- 9:20 - Position rendered content

- 14:49 - Trackable anchor prediction

- 19:22 - Wrap-up

Resources

- Interacting with virtual content blended with passthrough

- Improving rendering performance with vertex amplification

- Rendering a scene with deferred lighting in Swift

- How to start designing assets in Display P3

- Forum: Graphics & Games

- Metal Developer Resources

- Rendering at different rasterization rates

Related Videos

WWDC24

WWDC23



Hello, and welcome. I'm Pooya, and I'm an engineer at Apple. Today, I'll show you how you can leverage the full power of visionOS by extending Metal beyond the full immersion style into mixed immersion. Best of all, the tools and frameworks you'll use to bring Metal to mixed immersion are likely ones you're already familiar with.

Last year, we showed you how to use ARKit, Metal, and Compositor Services to provide an experience in full immersion using your own rendering engine.

And now this year, I am excited to talk about how, with Metal, you can blur the line between the real world and people's imaginations, by rendering in mixed immersion style.

You might already be using Compositor Services to implement your rendering engine with Metal and ARKit as an alternative to rendering in RealityKit. You can use Compositor Services to create a rendering session, Metal APIs to render your beautiful frames, and ARKit to get access to world and hand tracking.

In today's session, I'll share how to use the Metal, ARKit, and Compositor Services APIs to create an application that provides a mixed immersive experience. The first step is to seamlessly blend your app's rendered content with the physical surroundings. Next, I'll show you how to improve the positioning of your rendered content in relation to the physical environment. Finally, I'll explain how to acquire and use the correct prediction time for trackable anchor poses, for example when you're tracking a person's hands and the virtual objects they're interacting with.

Now let's cover the first step: mixing rendered content with the surroundings.

You'll get the most out of today's session if you have previous experience with the Compositor Services APIs, Metal APIs, and Metal rendering techniques. If you haven’t used these before, you can check out some of our previous videos.

You can also access code samples and documentation at developer.apple.com. Now let's dive in.

In mixed immersion style, both rendered content and the person's physical surroundings are visible. There are a few steps you can take to make sure this effect is as realistic as it can be.

First, you'll clear your drawable texture to the correct value. That value is going to be different from the one you may have used in full immersion style.

Second, ensure that your rendering pipeline produces correct color and depth values, like premultiplied alpha and the P3 color space, which is what visionOS expects.

Then you'll use the scene understanding provided by ARKit to anchor your rendered content in the real world and perform physics simulations.

Finally, you'll designate the type of upper limb visibility that fits your app's experience.

The first step in the journey to bring your app to mixed immersion style is very simple.

Just add mixed as one of the options when you set your immersionStyle. By default, SwiftUI creates a window scene, even if the first scene in your app is an ImmersiveSpace.

You can add the UIApplicationPreferredDefaultSceneSessionRole key to your application scene manifest to change the default scene behavior. If you're using an ImmersiveSpace with Compositor Services content, you'll want to use CPSceneSessionRoleImmersiveSpaceApplication. You can also add the UISceneInitialImmersionStyle key to your application's scene configuration. If you intend to launch into mixed immersion, you'll want to use UIImmersionStyleMixed.

At the beginning of your render loop, you should clear your drawable textures. The clear color value changes based on which immersion style you're rendering in. Here's a typical render loop: the app acquires a new drawable, configures the render pass with load and store actions, encodes its GPU workload, presents the drawable, and finally commits.

During the Encode stage, you will first clear the color and depth textures, since your render might not touch every pixel in the textures.

The depth texture should always be cleared to zero. However, the correct clear value for your color texture depends on which immersion style you're using. In full immersion style, clear the color texture to (0, 0, 0, 1). In mixed immersion style, use all zeros.

Here's the code. In this example, I've created a renderPassDescriptor and set its color and depth textures.

Then I have adjusted the load and store actions to ensure the texture is cleared before rendering starts. I have cleared the depth and color attachments to all zeros. Because this example is for mixed immersion style, the alpha value in the color texture is set to zero.
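To make the difference between the two styles explicit, here's a minimal sketch that picks the clear values per immersion style. The `RenderImmersion` enum and `ClearColor` struct are hypothetical types for illustration, not part of Compositor Services or Metal; `ClearColor` simply mirrors the four channels of a Metal clear color.

```swift
/// The two immersion styles this sketch distinguishes (hypothetical type, not a framework enum).
enum RenderImmersion {
    case full
    case mixed
}

/// Mirrors the four channels of a Metal clear color.
struct ClearColor: Equatable {
    var red: Double
    var green: Double
    var blue: Double
    var alpha: Double
}

extension RenderImmersion {
    /// Full immersion clears to opaque black; mixed immersion clears to
    /// fully transparent black, so untouched pixels carry zero alpha.
    var clearColor: ClearColor {
        switch self {
        case .full:
            return ClearColor(red: 0, green: 0, blue: 0, alpha: 1)
        case .mixed:
            return ClearColor(red: 0, green: 0, blue: 0, alpha: 0)
        }
    }

    /// Depth is cleared to zero in both styles.
    var clearDepth: Double { 0 }
}
```

In a real renderer you would copy these values into `MTLClearColor(red:green:blue:alpha:)` for `colorAttachments[0].clearColor` and into `depthAttachment.clearDepth`.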

Next, confirm that your app renders color values in the supported convention. You're likely already familiar with alpha blending, which combines two textures, background and foreground, in a realistic way. The color pipeline on visionOS uses the premultiplied alpha convention. This means you multiply the color channels in your shaders by the alpha value before you pass the result to Compositor Services.
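Here's a small CPU-side sketch of the premultiplied convention, written as a plain Swift struct rather than shader code. It also shows the standard Porter-Duff "over" operator for premultiplied colors, which is one common way to see why the convention is convenient; it's an illustration, not a description of how the compositor blends internally.

```swift
/// A linear RGBA color (a plain struct for this sketch; a shader would use float4).
struct RGBA: Equatable {
    var r: Double
    var g: Double
    var b: Double
    var a: Double

    /// Converts straight (non-premultiplied) alpha to premultiplied alpha by
    /// scaling each color channel by alpha. Alpha itself is unchanged.
    func premultiplied() -> RGBA {
        RGBA(r: r * a, g: g * a, b: b * a, a: a)
    }

    /// Porter-Duff "over" for premultiplied colors: src + dst * (1 - src.a).
    /// Both `self` and `background` must already be premultiplied.
    func over(_ background: RGBA) -> RGBA {
        let k = 1 - a
        return RGBA(r: r + background.r * k,
                    g: g + background.g * k,
                    b: b + background.b * k,
                    a: a + background.a * k)
    }
}
```

In a Metal fragment shader, the equivalent step is multiplying the color's RGB by its alpha before returning it. If your own passes blend premultiplied colors, setting `sourceRGBBlendFactor = .one` and `destinationRGBBlendFactor = .oneMinusSourceAlpha` on the pipeline's color attachment matches the formula above.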

The visionOS color pipeline operates in the Display P3 color space. For better color consistency between your app's rendered content and passthrough, your assets should be in the Display P3 color space. For more information, please refer to the documentation on developer.apple.com.

Compositor Services uses both the color and depth textures from the renderer to perform compositing. It's worth noting that Compositor Services expects the depth texture to use the reverse-Z convention.
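As an illustration of the reverse-Z convention, here's a sketch of how a perspective depth maps into it: the near plane lands at 1 and the far plane at 0, so closer surfaces get larger values and the cleared depth of 0 means "nothing here". The near and far parameters are assumptions for illustration; the scene-aware projection matrix from Compositor Services defines the actual mapping.

```swift
/// Reverse-Z depth for a perspective projection with a finite far plane:
/// the near plane maps to 1 and the far plane maps to 0.
/// `distance` is the positive distance from the eye along the view direction.
func reverseZDepth(distance: Double, near: Double, far: Double) -> Double {
    near * (far - distance) / (distance * (far - near))
}

/// The same mapping with the far plane pushed to infinity: depth = near / distance.
/// A depth of 0 (the clear value) then corresponds to "infinitely far away".
func reverseZDepthInfinite(distance: Double, near: Double) -> Double {
    near / distance
}
```

Because closer surfaces have larger values, a reverse-Z depth test typically uses `.greater` (or `.greaterEqual`) as the `depthCompareFunction` of its `MTLDepthStencilDescriptor`.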

Rendered content for a given pixel shows up on the display if it has an alpha value larger than 0 and a valid perspective depth value. To avoid a parallax effect, it's the renderer's responsibility to provide the correct depth value across all pixels.
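A minimal per-pixel sketch of this visibility rule, together with the zero-depth recommendation for fully transparent pixels in the next paragraph, might look like this. `DrawablePixel` is a hypothetical type, and treating a "valid perspective depth" as a reverse-Z value in (0, 1] is an assumption; in practice this logic belongs in your shader output rather than on the CPU.

```swift
/// One pixel of the drawable: premultiplied alpha and reverse-Z depth (hypothetical type).
struct DrawablePixel: Equatable {
    var alpha: Double
    var depth: Double
}

/// Rendered content shows only when alpha is above zero and depth is a valid
/// perspective value. "Valid" is assumed here to mean a reverse-Z value in (0, 1],
/// since 0 is the cleared (far) value.
func contributesToComposite(_ p: DrawablePixel) -> Bool {
    p.alpha > 0 && p.depth > 0 && p.depth <= 1
}

/// Applies the performance recommendation: fully transparent pixels get zero depth.
func sanitized(_ p: DrawablePixel) -> DrawablePixel {
    p.alpha == 0 ? DrawablePixel(alpha: 0, depth: 0) : p
}
```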

Also, for better system performance, the renderer should pass a zero depth value for any pixel that has a zero alpha value. Next, you'll use data provided by ARKit to incorporate scene understanding into your rendered content.

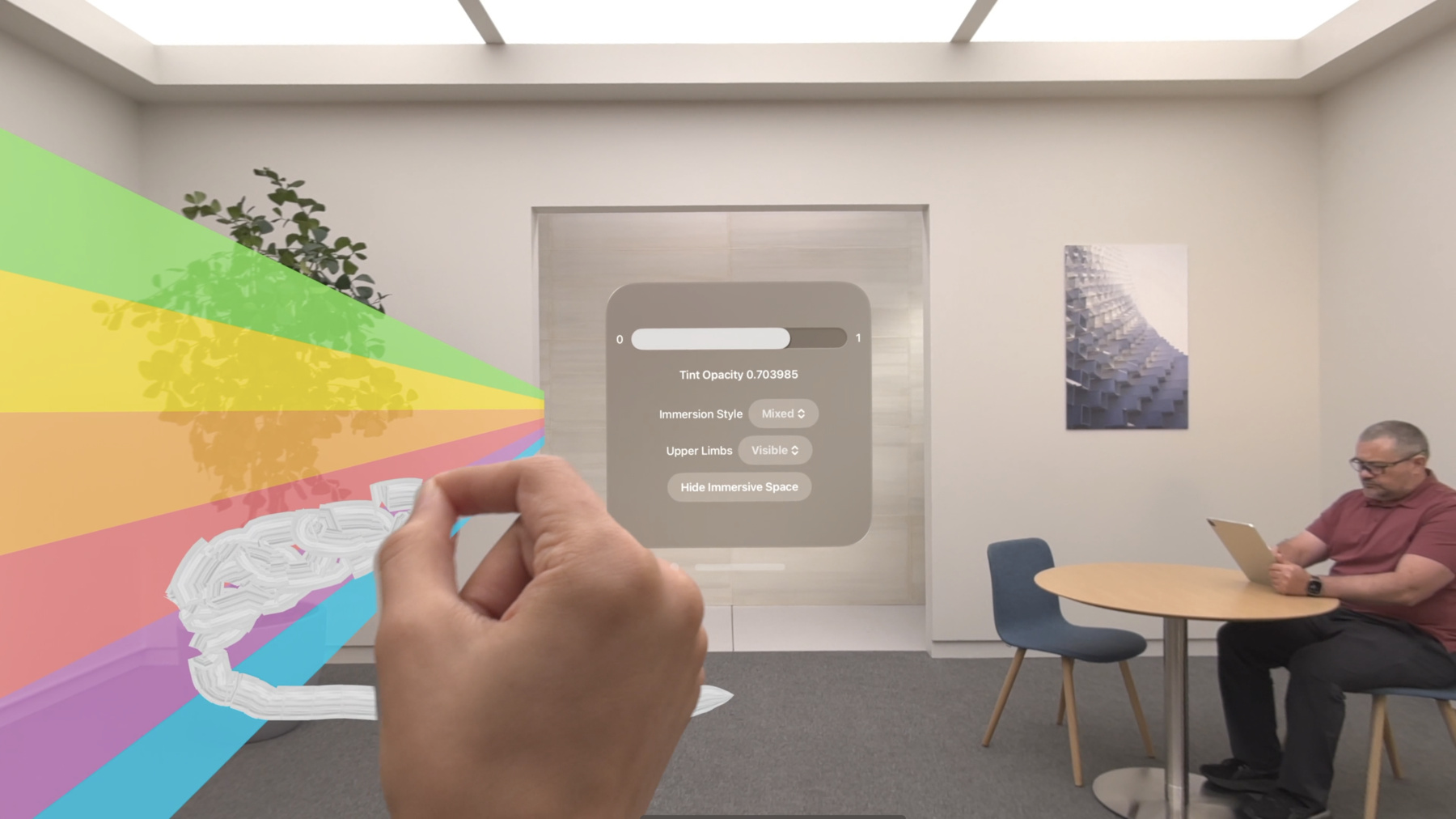

In mixed immersion style, rendered content is visible alongside the user's physical surroundings. So, to ensure a more realistic experience, you'll integrate scene understanding into your app's rendering logic. With ARKit, you can anchor your rendered content to objects and surfaces in the real world, perform any physics simulation you need for realistic interactions between your rendered content and physical objects in the environment, or occlude any rendered content that appears behind a physical object. To learn more about ARKit on visionOS, check out "Meet ARKit for spatial computing".

We're almost done! The last step is to designate how visionOS should handle the visibility of the person's hands and arms, known as "upper limb visibility".

You've got three choices when it comes to upper limb visibility in mixed immersion style. The first one is Visible. If you choose Visible mode, the hands always appear on top of rendered content, regardless of the relative depth of the object.

The second mode is Hidden. In this mode, the hand will always be occluded by the rendered content. Finally, you can choose Automatic mode.

Here, the hand's visibility will change based on the depth of the rendered content. The hand will appear visible if it's in front of the object, or fade out as its depth increases. The system does the work for you.

Let's dive into exactly how this works. Imagine the person wearing the headset is in mixed immersion style. There are two rendered objects in the scene: the red circle and the yellow cube. The person's hand is also within the field of view.

After rendering the scene, this is how the drawable depth and color textures would look. It's also worth highlighting that Compositor Services expects the renderer's depth texture to contain reverse-Z values.

If you've designated Automatic for upper limb visibility, Compositor Services uses the depth values you've written to the depth texture to determine whether the hand should be fully visible, because it's in front of the object, or partially hidden, because it's behind or within the object.
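To make the reverse-Z comparison concrete, here's a deliberately simplified sketch of the per-pixel decision. It's hypothetical and binary, whereas the system also fades the hand out gradually; the point is that in reverse Z a larger depth value is nearer, and that pixels where you rendered nothing keep the cleared depth of 0.

```swift
/// In reverse Z, larger depth values are closer to the viewer.
/// Returns true when a surface at `candidate` depth is nearer than one at `reference`.
func isNearer(_ candidate: Double, than reference: Double) -> Bool {
    candidate > reference
}

/// Simplified, hypothetical per-pixel decision for Automatic mode: the hand is
/// shown where it is nearer than the rendered content and hidden where it is behind.
/// The real system also fades the hand gradually; this sketch does not model that.
func handVisible(handDepth: Double, renderedDepth: Double) -> Bool {
    isNearer(handDepth, than: renderedDepth)
}
```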

Here's how it's done in code.

Using upperLimbVisibility, you'll let the system know which mode your app is requesting for upper limb visibility. Here, I've requested Automatic mode.

After you've visually mixed your rendered content with the physical surroundings, it's important to position your content so it appears to belong in the environment. To do that, you'll first transform your content from world coordinate space to normalized device coordinate space. I'll show you how to use a scene-aware projection matrix to improve content placement. Then, I'll talk about which normalized device coordinate convention Compositor Services expects for its inputs. And lastly, I'll mention some alternative intermediate conventions you can use in your rendering engine.

When you're rendering objects with respect to the location and orientation of Vision Pro, you'll transform your content from 3D world space to a 2.5D space, also known as normalized device coordinate space. This transform is called the ProjectionViewMatrix, and it's a combination of the ProjectionMatrix and the ViewMatrix. The ViewMatrix can be expanded into two transforms. First, there's a transform that brings the origin space to the device space, called deviceFromOrigin. Second, there's a transform that brings the device space to the view space, called viewFromDevice.

To match the visionOS APIs, the projection view matrix equals the projection matrix times the inverse of originFromDevice multiplied by deviceFromView: projectionView = projection × (originFromDevice × deviceFromView)⁻¹. The deviceFromView transform is acquired by calling cp_view_get_transform in the Compositor Services API; it returns the matrix that brings the rendered view to device space. For originFromDevice, the ARKit API provides this data when you call ar_anchor_get_origin_from_anchor.
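The composition above can be sketched with a tiny matrix type in plain Swift. The real code uses `simd_float4x4` and its general `.inverse`; this sketch substitutes a hypothetical `Mat4` with a rigid-transform inverse so it runs anywhere, and uses made-up poses and an identity projection in place of the matrices ARKit and Compositor Services return.

```swift
/// A minimal 4x4 matrix stored as m[row][column], used to keep this sketch free of simd.
/// Points are treated as column vectors: p' = M * p.
struct Mat4: Equatable {
    var m: [[Double]]

    static let identity = Mat4(m: (0..<4).map { r in
        (0..<4).map { c in r == c ? 1.0 : 0.0 }
    })

    /// A pure translation, enough to exercise the composition below.
    static func translation(_ x: Double, _ y: Double, _ z: Double) -> Mat4 {
        var t = Mat4.identity
        t.m[0][3] = x
        t.m[1][3] = y
        t.m[2][3] = z
        return t
    }

    static func * (a: Mat4, b: Mat4) -> Mat4 {
        var out = Mat4(m: Array(repeating: Array(repeating: 0.0, count: 4), count: 4))
        for r in 0..<4 {
            for c in 0..<4 {
                out.m[r][c] = (0..<4).reduce(0.0) { $0 + a.m[r][$1] * b.m[$1][c] }
            }
        }
        return out
    }

    /// Inverse of a rigid transform [R | t], computed as [Rᵀ | -Rᵀt].
    /// Only valid when the upper-left 3x3 block is a pure rotation.
    var rigidInverse: Mat4 {
        var inv = Mat4.identity
        for r in 0..<3 {
            for c in 0..<3 {
                inv.m[r][c] = m[c][r]  // transpose the rotation
            }
        }
        for r in 0..<3 {
            // Row r of Rᵀ dotted with the translation column.
            let rotatedT = (0..<3).reduce(0.0) { $0 + m[$1][r] * m[$1][3] }
            inv.m[r][3] = -rotatedT
        }
        return inv
    }

    /// Transforms a 3D point (w = 1) and returns the homogeneous result.
    func apply(_ p: [Double]) -> [Double] {
        let v = p + [1.0]
        return (0..<4).map { r in (0..<4).reduce(0.0) { $0 + m[r][$1] * v[$1] } }
    }
}

// Hypothetical poses: the device 1.5 m above the origin, one view 3 cm to its left.
let originFromDevice = Mat4.translation(0, 1.5, 0)   // stands in for ARKit's device anchor
let deviceFromView = Mat4.translation(-0.03, 0, 0)   // stands in for the drawable's view transform
let viewMatrix = (originFromDevice * deviceFromView).rigidInverse   // viewFromOrigin
// A real projection would come from the drawable; identity keeps the sketch small.
let projectionViewMatrix = Mat4.identity * viewMatrix
```

With these poses, the view's own position in origin space, (-0.03, 1.5, 0), maps to the view-space origin, which is a quick sanity check that the product is inverted in the right order.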

Now this year, for better positioning of rendered content relative to real-world objects, you can acquire a scene-aware projection matrix. This matrix combines camera intrinsics with real-time, per-frame scene understanding to place rendered content more accurately relative to real-world objects in mixed immersion style. If an application requests mixed immersion style through Compositor Services, it must use this new API.

Once you've transformed your content from world space to texture coordinate space, this is how the app's drawable color and depth texture would look after rendering.

In texture space, the X axis goes from left to right, and the Y axis goes from top to bottom. Notice that the expected depth values are in reverse Z convention.

To dive in further, this is how the drawable textures look in screen space and in physical space, given that visionOS uses a foveated rendering pipeline. This means the physical texture is smaller than its screen-space dimensions.

Screen space is where people perceive the values, and physical space is where the actual values are stored in memory.

To learn more about how foveated rendering works, check out "Rendering at different rasterization rates" at developer.apple.com.

Let's go a bit deeper into the drawable normalized device coordinate space that Compositor Services expects. Normalized device coordinate space has three axes: horizontal X, vertical Y, and the perpendicular winding order, all of which affect the orientation of the rendered output.

Compositor Services expects the normalized device coordinate space used for rendering both the color and depth textures to have the X axis from left to right, the Y axis from bottom to top, and the winding order as front to back.

However, your rendering engine might render its intermediate textures in a different normalized device coordinate space. Compositor Services can also provide the scene-aware projection matrix in a variety of conventions, flipping the Y axis, or the winding order. Ultimately it is the responsibility of your rendering engine to ensure that the final drawable texture is in the expected Compositor Services convention.
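As a sketch of what converting between these conventions involves, the functions below map Compositor Services' NDC (X right, Y up) to texture coordinates (X right, Y down), and flip the Y axis of a projection matrix by negating its second row. Both are hypothetical helpers for illustration; the matrix is assumed to be row-major and applied to column vectors.

```swift
/// Maps a point from NDC (x right, y up, both in -1...1)
/// to texture coordinates (u right, v down, both in 0...1).
func textureCoordinates(ndcX x: Double, ndcY y: Double) -> (u: Double, v: Double) {
    ((x + 1) / 2, (1 - y) / 2)
}

/// Flips the Y axis of a row-major 4x4 projection matrix by negating its second row,
/// so the clip-space y of every transformed point changes sign.
func flippingY(_ projection: [[Double]]) -> [[Double]] {
    var result = projection
    result[1] = projection[1].map { -$0 }
    return result
}
```

Flipping Y mirrors the image, which also reverses the on-screen winding of triangles, so an engine that flips Y typically also switches `setFrontFacing(_:)` between `.clockwise` and `.counterClockwise` on its render command encoder.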

Now let's dive into a code sample that shows how to compose a projection view matrix from visionOS APIs.

After getting the deviceAnchor for the given presentation time, pass it to Compositor Services by assigning it to the drawable.

With the given deviceAnchor, now you can iterate through the views on the drawable to compute a projectionViewMatrix per view.

First, acquire the corresponding view. Then with ARKit APIs, obtain the originFromDevice transform. Then with Compositor Services APIs, obtain the deviceFromView transform.

Compose a viewMatrix from those two by inverting their product, which gives the view transform relative to the origin. Call the computeProjection API to acquire the projection matrix for the given view in the convention Compositor Services expects, .rightUpBack. Finally, compose a projectionViewMatrix by multiplying the projection matrix by the view matrix. Note that your application should acquire these transforms every frame, and should not reuse either of them from older frames.

After you've positioned your rendered content, it's time to think about what happens when people interact with it.

An anchor represents a position and an orientation in the real world. Trackable anchors are entities whose tracking state the system can gain and lose over the course of a session. You can use trackable anchors to position your rendered content; for example, a person's hand is a trackable anchor entity.

To set up a trackable anchor, you'll first authorize your connection to ARKit. Using your session, you'll request authorization for the type of data you would like to access. Next, set up a provider. A data provider allows you to poll for or observe data updates, like an anchor change, and ultimately acquire a trackable anchor from ARKit APIs. On a per-frame cadence, inside the render loop, first update the logic in your renderer, and then submit your data to Compositor Services.

Let's look at the render loop in a bit more detail. It consists of two stages. First is the update stage, which includes simulation-side logic, like interactions and physics simulation. This stage usually happens on the CPU side.

You'll need both trackable anchor and device pose to correctly calculate your simulation logic.

Second is the submission stage, where the final result is rendered into a texture. This stage happens on the GPU side. Anchor pose predictions become more accurate the closer the prediction query is made to the frame's presentation time.

For a better anchoring result, you'll query the anchor and device pose again to ensure your result is as accurate as possible.

This diagram defines how the timing affects the different frame sections. There are four timings you can obtain from Compositor Services. The frame timeline represents the work that is being done by your application.

Optimal Input Time is the time by which your app should finish non-critical tasks like interactions and any physics simulations. At the beginning of the Update stage, the app queries both the trackable anchor and the device anchor.

Just after that optimal input time, is the best time to query the latency-critical input and start rendering your frame.

The rendering deadline is the time by which your CPU and GPU work to render a frame should be finished. Trackable anchor time is when the cameras see the surroundings; use this time for trackable anchor prediction. Lastly, presentation time is when your frame will be presented on the display.

This time should be used for your device anchor prediction.

Imagine a scene within the device's field of view, where there is a red sphere that is being held in the person's hand. There's also a yellow cube sitting in the environment.

After rendering, this is how the scene would look in the device display.

Now, for any rendered content that is not attached to a trackable anchor, like this yellow cube, by querying the device's pose at presentation time, you can calculate its transform relative to the device transform.

However, for any rendered content that is attached to a trackable anchor, like the red sphere, you'll use both the trackable anchor position and the device position. For trackable anchor prediction, use the trackable anchor time.
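The following sketch shows which timestamp feeds which prediction. Positions are translation-only `Vec3` values, and the pose sources are hypothetical closures standing in for `queryDeviceAnchor(atTimestamp:)` and `handAnchors(at:)`; a real app would compose full transforms from the anchors instead.

```swift
/// Translation-only stand-in for a pose; a real app would compose full 4x4 transforms.
struct Vec3: Equatable {
    var x: Double, y: Double, z: Double
    static func + (a: Vec3, b: Vec3) -> Vec3 { Vec3(x: a.x + b.x, y: a.y + b.y, z: a.z + b.z) }
    static func - (a: Vec3, b: Vec3) -> Vec3 { Vec3(x: a.x - b.x, y: a.y - b.y, z: a.z - b.z) }
}

/// The frame's two prediction timestamps, already converted to seconds.
struct PredictionTimes {
    var presentation: Double
    var trackableAnchor: Double
}

/// Hypothetical pose sources standing in for ARKit's device and hand queries.
struct PoseSources {
    var devicePosition: (Double) -> Vec3    // device position predicted at a time
    var handPosition: (Double) -> Vec3?     // nil when the hand is not tracked
}

/// Content fixed in the world (like the yellow cube): only the device pose matters,
/// predicted for presentation time.
func worldLockedOffset(_ contentInOrigin: Vec3, times: PredictionTimes, poses: PoseSources) -> Vec3 {
    contentInOrigin - poses.devicePosition(times.presentation)
}

/// Content held in the hand (like the red sphere): the hand pose is predicted for
/// trackable anchor time, and the device pose for presentation time.
func handHeldOffset(_ offsetFromHand: Vec3, times: PredictionTimes, poses: PoseSources) -> Vec3? {
    guard let hand = poses.handPosition(times.trackableAnchor) else { return nil }
    return hand + offsetFromHand - poses.devicePosition(times.presentation)
}
```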

Now let's dive into a code sample.

In your render loop, before acquiring the head pose and trackable anchor pose, your application should perform its non-critical workload. This improves prediction accuracy.

After the non-critical work is finished, the application starts its anchor-dependent workload after the optimal input time. At the start of that workload, you should first acquire both presentationTime and trackableAnchorTime from the frame timing data.

Then convert these timestamps into seconds.
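If you need to do this conversion yourself, here's one way to turn a Swift `Duration` into seconds. It assumes that the value returned by `duration(to:)` is a standard Swift `Duration`; the `timeInterval` property in the code sample may be a helper extension in your project, and a function like this could play the same role.

```swift
/// Converts a Swift `Duration` to seconds as a Double by combining its
/// whole-second and attosecond (1e-18 s) components.
func durationInSeconds(_ duration: Duration) -> Double {
    let (wholeSeconds, attoseconds) = duration.components
    return Double(wholeSeconds) + Double(attoseconds) / 1e18
}
```

Under that assumption, `durationInSeconds(LayerRenderer.Clock.Instant.epoch.duration(to: presentationTime))` would produce the device prediction time.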

With the presentationTime, query the deviceAnchor. And with the trackableAnchorTime, query the trackable anchor. Then if the trackable anchor is tracked, perform logic relative to its position.

You can learn more about ARKit APIs in the video "Create enhanced spatial computing experiences with ARKit."

You now have all the tools you need to create incredible mixed immersion experiences on visionOS. Using Compositor Services and Metal, you can set up a render loop and display 3D content. And finally, you can use ARKit to make your experience interactive. Please refer to these videos for more information.

Thank you for watching!


3:07 - Add mixed immersion

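```swift
import SwiftUI
import CompositorServices

@main
struct MyApp: App {
    // Currently selected immersion style; the scene allows both mixed and full.
    @State private var style: ImmersionStyle = .mixed

    var body: some Scene {
        ImmersiveSpace {
            // MyConfiguration is your CompositorLayerConfiguration type.
            CompositorLayer(configuration: MyConfiguration()) { layerRenderer in
                // my_engine_create / my_engine_render_loop stand in for your own engine.
                let engine = my_engine_create(layerRenderer)
                let renderThread = Thread {
                    my_engine_render_loop(engine)
                }
                renderThread.name = "Render Thread"
                renderThread.start()
            }
        }
        // Scene modifier: applied to the ImmersiveSpace, not to the layer.
        .immersionStyle(selection: $style, in: .mixed, .full)
    }
}
```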

4:43 - Create a renderPassDescriptor

```swift
import Metal

// Clear both attachments before rendering, since the frame might not touch every pixel.
let renderPassDescriptor = MTLRenderPassDescriptor()
renderPassDescriptor.colorAttachments[0].texture = drawable.colorTextures[0]
renderPassDescriptor.colorAttachments[0].loadAction = .clear
renderPassDescriptor.colorAttachments[0].storeAction = .store
// Mixed immersion: clear to all zeros, including alpha.
renderPassDescriptor.colorAttachments[0].clearColor = .init(red: 0.0, green: 0.0, blue: 0.0, alpha: 0.0)
renderPassDescriptor.depthAttachment.texture = drawable.depthTextures[0]
renderPassDescriptor.depthAttachment.loadAction = .clear
renderPassDescriptor.depthAttachment.storeAction = .store
// Reverse-Z depth: always clear to zero.
renderPassDescriptor.depthAttachment.clearDepth = 0.0
```

9:08 - Set Upper Limb Visibility

```swift
import SwiftUI
import CompositorServices

@main
struct MyApp: App {
    @State private var style: ImmersionStyle = .mixed

    var body: some Scene {
        ImmersiveSpace {
            CompositorLayer(configuration: MyConfiguration()) { layerRenderer in
                let engine = my_engine_create(layerRenderer)
                let renderThread = Thread {
                    my_engine_render_loop(engine)
                }
                renderThread.name = "Render Thread"
                renderThread.start()
            }
        }
        .immersionStyle(selection: $style, in: .mixed, .full)
        // Let the system show or hide the hands based on the depth texture.
        .upperLimbVisibility(.automatic)
    }
}
```

13:37 - Compose a projection view matrix

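```swift
func renderLoop() {
    //...
    // Predict the device pose for the frame's presentation time (in seconds).
    guard let deviceAnchor = worldTracking.queryDeviceAnchor(atTimestamp: presentationTime) else {
        return
    }
    // Pass the pose used for rendering to Compositor Services.
    drawable.deviceAnchor = deviceAnchor

    for viewIndex in drawable.views.indices {
        let view = drawable.views[viewIndex]
        let originFromDevice = deviceAnchor.originFromAnchorTransform   // from ARKit
        let deviceFromView = view.transform                             // from Compositor Services
        // viewFromOrigin is the inverse of originFromView.
        let viewMatrix = (originFromDevice * deviceFromView).inverse
        // Scene-aware projection in the convention Compositor Services expects.
        let projection = drawable.computeProjection(normalizedDeviceCoordinatesConvention: .rightUpBack,
                                                    viewIndex: viewIndex)
        let projectionViewMatrix = projection * viewMatrix
        //...
    }
}
```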

18:27 - Trackable anchor prediction

```swift
func renderFrame() {
    //...
    // Get the trackable anchor and presentation times.
    let presentationTime = drawable.frameTiming.presentationTime
    let trackableAnchorTime = drawable.frameTiming.trackableAnchorTime

    // Convert the timestamps into units of seconds.
    let devicePredictionTime = LayerRenderer.Clock.Instant.epoch.duration(to: presentationTime).timeInterval
    let anchorPredictionTime = LayerRenderer.Clock.Instant.epoch.duration(to: trackableAnchorTime).timeInterval

    // Device pose at presentation time; hand pose at trackable anchor time.
    let deviceAnchor = worldTracking.queryDeviceAnchor(atTimestamp: devicePredictionTime)
    let (leftAnchor, _) = handTracking.handAnchors(at: anchorPredictionTime)

    if let leftAnchor, leftAnchor.isTracked {
        //...
    }
}
```
