
Optimize for the spatial web

Discover how to make the most of visionOS capabilities on the web. Explore recent updates like improvements to selection highlighting, and the ability to present spatial photos and panorama images in fullscreen. Learn to take advantage of existing web standards for dictation and text-to-speech with WebSpeech, spatial soundscapes with WebAudio, and immersive experiences with WebXR.

Chapters

- 0:00 - Introduction

- 1:44 - Interaction

- 8:34 - Media content

- 17:13 - Inspect and debug

Resources

- Web Speech API - Web APIs | MDN

- Web Audio API - Web APIs | MDN

- Forum: Safari & Web

- WebKit.org – Bug tracking for WebKit open source project

- w3.org – Model element

- Adding a web development tool to Safari Web Inspector

- Safari Release Notes

- WebKit Open Source Project

- Web Inspector Reference



Hi! I'm Brandel from the Safari team, and I want to talk to you about how to make the most compelling experiences possible for Safari in visionOS 2.0.

Now, the web can be viewed on just about any platform or device you could imagine. It's the result of many decades of effort by many groups around the world to put the philosophy of web standards into practice, making it easy to author content that runs everywhere. But when a truly new way of computing comes along, there is an opportunity, and a responsibility, to extend those existing web capabilities with the abilities that a new platform gives.

Apple Vision Pro calls for that kind of moment.

Some of the features I'm going to tell you about today are new, and some have been around for a while, but all of them are given new significance because of the capabilities of spatial computing. First, I'll cover ways to interact on the platform. In visionOS, Natural Input involves the combination of eyes, hands, and voice. Next, we'll cover the immersive, visual and audio content you'll be able to provide.

You've got some new ways to offer Spatial and Panorama photos, as well as rich 3D models, spatial soundscapes, and immersive Virtual Reality.

Finally we'll come full circle with inspection and debugging, to help you dial things in exactly the way you want for this exciting new platform.

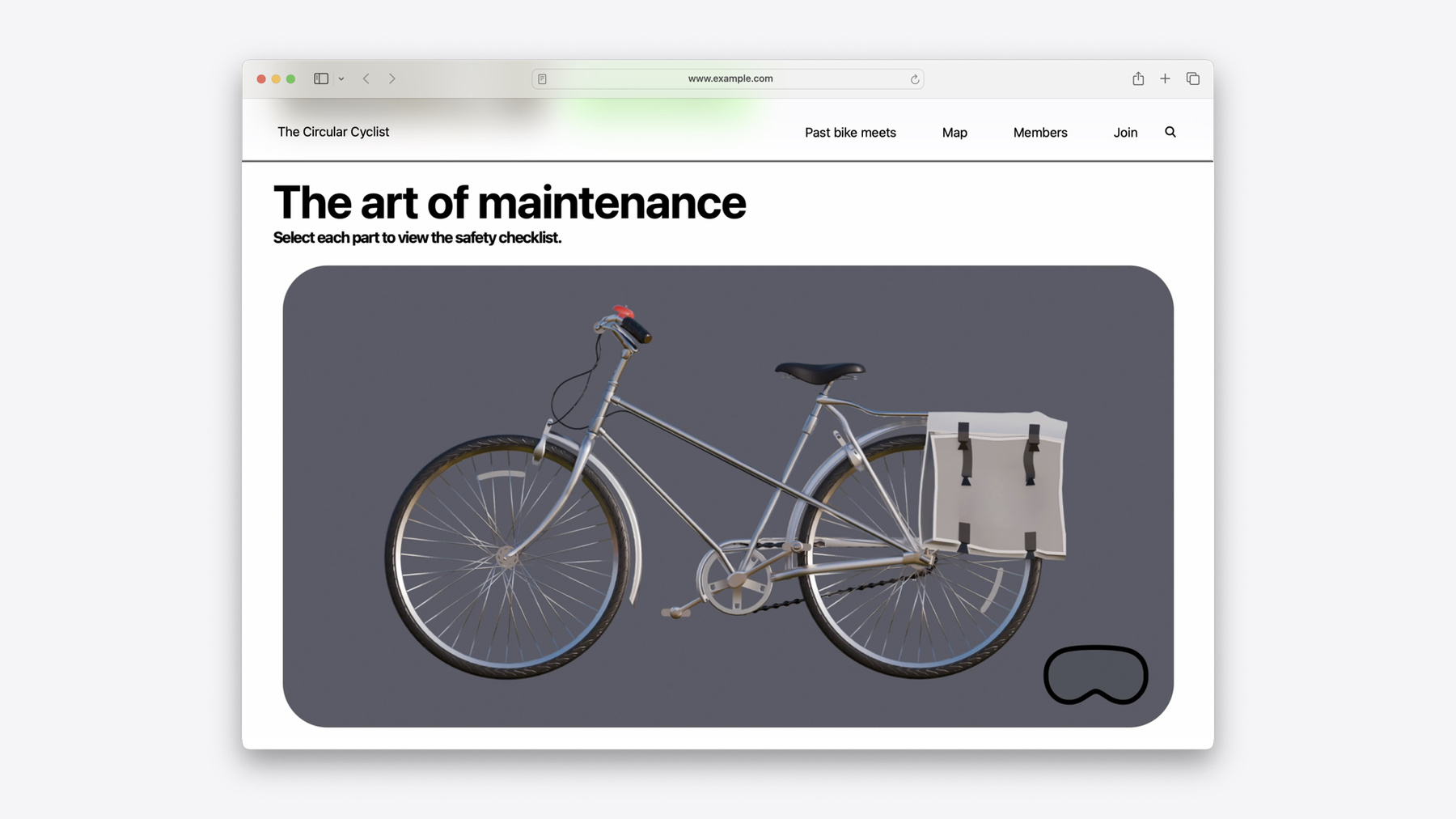

To drive the point home, I'll be adding these features to a fan site I maintain, The Circular Cyclist, all about the bikes and bike paths around Apple Park, but rest assured that everything I cover today applies just as much to a large, commercial website as it does to a small, community-based one like mine.

So, let's begin the first turn with capabilities for interaction.

As you might remember, visionOS 1 introduced Natural Input, using your eyes to target the interaction regions in an app or a web page and tapping your fingers together to interact with them. These regions are given a gentle highlight when the user is looking at them.

These interaction regions aren't a new concept to the web; they've been part of it since the very beginning. When you move a cursor over a link, the shape that you're dealing with is the interaction region. But while they've been important, the regions themselves haven't normally been displayed; changes from the cursor and hover events give enough feedback for users to navigate with confidence.

To help with targeting, visionOS shows the shape of the interaction regions directly, drawing a highlight only when a user's eyes are directed at the element.

But to ensure everyone's privacy on Apple Vision Pro, where you look stays private. The highlights are drawn by a process outside of Safari; not even the browser knows where the user is looking.

The web has come a long way since those early days, so let's bring this up to date.

With the majority of web content being seen on mobile devices these days, we know that users are tapping more than they're clicking, but that also means making tap targets, or interaction regions, that are big enough to select properly. Even without a different background color, consider giving any links you have a little padding inside.

And for Vision Pro, consider rounding the corners for a highlight shape that matches the look of your site. This will ensure large regions for the eyes to dwell on, without being distracted by sharp corners or other features. Note that these don't even have to be visible in your DOM with a background color or border thickness; they're hints to highlight a region that works with the content.

And while that covers most of the cases on the web, it doesn't get everything. I have a slightly unconventional navigation scheme on Circular Cyclist for our bike safety checklist, showing the outlines of each bicycle part on hover to show what to look out for.

And, in visionOS 2, you can build complex interaction regions, specified by those same SVG paths, for more fine-grained control. Let me show you how.

The same SVG that drives my hover effect on Mac can set the interaction regions in visionOS, drawing it over the top. Remember that the cursor in Simulator is standing in for where you're looking in visionOS. So when we move it over parts of the bike, that's not a hover effect in Safari, but the privacy-preserving highlight that the rest of the operating system works with. And while the visual effect can seem subtle, it happens exactly where you look, so it's much more noticeable.

The next improvement to interaction regions is for links that also contain large media content, like thumbnails to a video or a gallery. Because a persistent highlight could be distracting, media content gets a subtler version of the highlight, which fades down after a few seconds to let your users see content the way it's meant to be seen. Here's my gallery with large image thumbnails. After dwelling a moment, the highlight settles down and lets the content shine through.

To get the best out of media interaction regions, make sure your anchor tag or other interactive element actually contains the media and you should be all set.

Next, you can use your voice for input on the platform in a couple of different ways: Just like on iOS and iPadOS, you and your users can use voice input for any text field just by tapping the microphone icon that pops up with the keyboard.

But you can get more flexibility when you want it through the WebSpeech API.

It's a proposed web standard that lets you respond to voice in real time through the SpeechRecognition interface, and even respond back with speech of your own, through the SpeechSynthesis interface.

Because Safari does this processing locally, no data needs to be sent from the device to make it happen. Even if you're familiar with Web Speech, I think you'll really enjoy it on Vision Pro.

And I think it's just the thing for a game I've been wanting to make: Bike! Color! Shout! Players can call out their guesses about the latest bicycle color trends on an 8-bit version of campus, trying to get ahead of the bike fashion around the loop. Rather than using a text field to get voice input, I want the player to keep their eyes on the action. I can use SpeechRecognition as a totally separate channel for input without anyone needing to tap or click on anything.

Let's take a look at the code: To get started, I create a new SpeechRecognition object. On Safari that's prefixed with webkit, but it follows a standard implementation. It's what does most of my work.

To read results from it, I register a handler to listen for result events. When I get the event back, it has a result list of all the snippets the recognizer has picked up so far; I'll take the latest one.

Inside that result are a number of speech recognition alternatives. I'll grab the first one, and inside each alternative is the transcript. That's what I'll use for the game.

I'm not looking to do any complicated logic for this game, so a basic string search against the names of the colors I'm looking for is going to be fine.

Once I'm listening to the right events, I just start the recognizer. This needs to be done on a user event like a tap or click, and there's a permission prompt the user will need to agree to, so it's important that they know why they're being asked for their microphone input. But after that, it's all up and running. Next, let's hook up the feedback.
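The recognition steps described above can be sketched roughly as follows. This is not the code shown in the session: the color list, the `findColors`, `latestTranscript` and `startListening` names, the small `...Like` interfaces and the `continuous` setting are all assumptions made for illustration. Only the `SpeechRecognition` / `webkitSpeechRecognition` constructor, the `result` event and the `transcript` property come from the Web Speech API.

```typescript
// Hypothetical color names; the session does not list the game's actual colors.
const GAME_COLORS: string[] = ["pink", "orange", "green", "purple", "silver"];

// Minimal structural types so the sketch type-checks without DOM typings.
interface AlternativeLike { transcript: string }
interface ResultEventLike { results: ArrayLike<ArrayLike<AlternativeLike>> }
interface RecognizerLike {
  continuous: boolean;
  addEventListener(type: "result", handler: (event: ResultEventLike) => void): void;
  start(): void;
}

// Basic string search: keep the known color names, in the order they were spoken.
function findColors(transcript: string): string[] {
  return transcript
    .toLowerCase()
    .split(/[^a-z]+/)
    .filter((word) => GAME_COLORS.indexOf(word) !== -1);
}

// Latest result -> first alternative -> transcript.
function latestTranscript(event: ResultEventLike): string {
  const latest = event.results[event.results.length - 1];
  return latest && latest[0] ? latest[0].transcript : "";
}

// Call this from a tap or click handler; the browser then asks for microphone permission.
function startListening(onGuess: (colors: string[]) => void): RecognizerLike | null {
  const scope = globalThis as any;
  const Recognition = scope.SpeechRecognition ?? scope.webkitSpeechRecognition;
  if (!Recognition) return null; // speech recognition is not available here
  const recognizer: RecognizerLike = new Recognition();
  recognizer.continuous = true; // assumption: keep listening for the whole round
  recognizer.addEventListener("result", (event) => {
    onGuess(findColors(latestTranscript(event)));
  });
  recognizer.start();
  return recognizer;
}
```

A click handler could call `startListening((colors) => { /* compare with the bikes */ })`; the recognizer is returned so the caller can stop it when the round ends.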

I want to be able to announce the final score to the user, out loud, once the game's over. I can do that through the SpeechSynthesis API.

Build SpeechSynthesisUtterance objects with the text you want to be spoken, and pass them in to the speechSynthesis object to speak them. Just like the speech recognition API, no data leaves the device and it all happens for free. There are no API keys or accounts to wrangle.

Let's see it all in action! So we're given a countdown and have to guess the color sequence of bikes before they roll past. Once I grant the microphone permissions, I lodge my guess and see how I do: I'm gonna say: pink, orange, green, purple.

Oh, right. All the bikes at Apple Park were silver. While that makes the game accurate to campus today, and every day, maybe I'll have to go back to the drawing board on the game design. Again, this works on Safari everywhere: on Mac, on iPhone and in visionOS. But I really enjoy working with speech on the platform, and both these APIs pair extremely well with some more immersive capabilities we'll get to later. There's a lot more to discover about web speech, so take a look at the "Web Speech API" documentation on MDN for more details and inspiration.
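For the spoken score announcement described earlier, a minimal sketch might look like this. The `scoreMessage` wording and the `announce` helper are hypothetical; the only API calls used are the `SpeechSynthesisUtterance` constructor and `speechSynthesis.speak`.

```typescript
// Hypothetical wording for the end-of-game announcement.
function scoreMessage(correct: number, total: number): string {
  return `Game over! You guessed ${correct} of ${total} bike colors.`;
}

// Speak a message out loud; returns false where speech synthesis is unavailable.
function announce(message: string): boolean {
  const scope = globalThis as any;
  if (!scope.speechSynthesis || !scope.SpeechSynthesisUtterance) return false;
  // Build an utterance with the text to be spoken, then hand it to speechSynthesis.
  const utterance = new scope.SpeechSynthesisUtterance(message);
  scope.speechSynthesis.speak(utterance);
  return true;
}
```

At the end of a round, something like `announce(scoreMessage(0, 4))` would read the result aloud.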

So now you're up to speed with Natural Input. Interaction regions are now more flexible and content-aware, and speech input is a great way to add something extra to your experience. Let's switch gears now, and look at what you and your users on Apple Vision Pro will be able to see and hear on the web: Immersive media. Between Vision Pro's detailed display and spatial truth, it's what makes the platform into a tool for thought, a bicycle for the mind, if you will.

Let's start with Spatial photos. While seeing a spatial photo in the right setting can be amazing, seeing it in the wrong setting can feel pretty weird and disorienting. While developing the platform, we found that spatial photos need to be displayed carefully for the effect to work for you, rather than against you.

To understand more, let's take a look at the Photos app in visionOS.

When we're looking at a whole grid of photos, even spatial ones, they're shown as 2D. We only see the spatial view when it's 1-up and centered. When I move, you can also see that the photo is shown a little behind the portal, with a feathered border blending between the photo content and the rest of my scene. And when I enlarge the photo to full view, the feathering effect is still there, and we see a lot more of the photo, really big. Spatial photos look the best when they're displayed at the true scale they were captured at, and this is the scale it was captured at on Vision Pro.

All of which is to say, that while Spatial photos are a stunning experience, we feel it's better to see them on their own, at their true size, and centered in your view.

Because your users can put their Safari windows wherever they want at any size and position, it's hard to make that a good experience inside a page. We want you to be able to share your Spatial and Panorama photos in a way that's most impactful for your users.

Fortunately, the web already has a standards-based solution that we think is one good way to address this situation: the Element Fullscreen API.

Going fullscreen on a display like a Mac or an iPhone means that your app is the only thing being drawn to the screen. And Element Fullscreen means that the whole display is being used for just that element.

Developers often use it for custom controls for video playback, but we think it's a good fit for the demands of Spatial photos as well.

Element Fullscreen takes your media from Safari into the same exclusive-mode view that you'd get in the Files app or Photos app, without needing to download the photo and open it separately.

Because the whole space can be used, visionOS is able to show the picture at the correct visual scale for the photo and in the center of the user's view.

You kick it off using the requestFullscreen method on the element. Because fullscreen is such a big change to the user's environment, it'll need to be invoked by a user event like a tap or a click. The user will also be able to cancel fullscreen at any time, using the home gesture or pressing the crown button, and you can also exit it from code with the document.exitFullscreen method. Let's get that into our page, and then check the results.

I use the spatial image file directly in the page, where it'll be displayed monoscopically. By using a source set (srcset) that specifies the correct file type, I can also support a fallback image for any browsers that don't yet support the format.

Next, I'll take the click event input on the image, and use that event chain to request fullscreen on the element, making sure I handle any errors gracefully if they come up.
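The click-to-fullscreen step described above could be sketched like this. It is an illustration under assumptions rather than the session's code: the `spatial-photo` element id and the `presentFullscreen` and `wireSpatialPhoto` helpers are hypothetical, and "handling errors gracefully" is taken here to mean leaving the inline image in place when the request is rejected.

```typescript
// Anything with a requestFullscreen method, such as an <img> element.
interface FullscreenTarget {
  requestFullscreen(): Promise<void>;
}

// Request fullscreen and report whether it succeeded instead of throwing.
async function presentFullscreen(target: FullscreenTarget): Promise<boolean> {
  try {
    await target.requestFullscreen();
    return true;
  } catch {
    // The request was rejected (for example, no user gesture): keep the inline image.
    return false;
  }
}

// Wire the image's click event to the fullscreen request.
// "spatial-photo" is a hypothetical element id.
function wireSpatialPhoto(elementId: string = "spatial-photo"): void {
  const doc = (globalThis as any).document;
  const image = doc?.getElementById(elementId);
  image?.addEventListener("click", () => {
    void presentFullscreen(image);
  });
}
```

Because the request is made inside the click handler, it runs as part of the user event that the fullscreen API requires.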

And here's how it looks. Once I have the image I want, I just tap and I'm shown that feathered, portal view that we saw in Photos, and tapping the immersive button puts me into the exclusive view where I get to see it at full scale, without needing to accept any permissions or download any other apps.

The story is the same with Panorama photos. Let's take a look at how they work in the Photos App.

We see the panoramas in a flat view, in their window. We select a single panorama to view larger, and then tap the immersive button to see it at full scale.

Panoramas can be launched from any file format with large enough dimensions. Just follow the same pattern of using a click event to request fullscreen on the element and you're all set! We get the same process: tap to go into the fullscreen view, and then tap to get the immersive view with the wrap-around display of the panorama photo. Without any downloads, and without any additional user permissions required, it's a great way to get this content out to the widest audience you can.

And while we're looking at spatial media, you can also show off full 3D objects to your visitors straight out of Safari, using Quick Look to view 3D model files. 3D assets are a world unto themselves, but they all have a few things in common: from big-budget movies all the way to mobile games, a model defines the 3D position of a lot of surfaces, and then what material is on each of those surfaces, so they can be drawn in or rendered into whatever scene you need.

And while making 3D assets is a little more complicated than taking a photo, you don't need to be an expert in any of this to use them. You can make beautiful 3D captures using just your phone, running apps like Reality Composer to do Object Capture. You can also use models you buy or find online, and if they happen to be in another format, the conversion tools are now built right into Preview on your Mac.

Circular Cyclist has a Quick Look model of the Apple Commuter bike that I can keep in my room as a reminder to stay active. Seeing it at real scale is a tangible reminder of what it feels like to get out and ride, without annoying my coworkers and adding a potential tripping hazard to my office space.

And it's less than a line of code, just a single attribute, to let users open a model with Quick Look in visionOS. Use exactly the same syntax as iOS and iPadOS for AR Quick Look. By using rel=ar for links pointing to a USDZ model file, visitors to your website can open these objects and put them anywhere in their scene, and in visionOS, like we saw, the website remains open in Safari along with the 3D models in Quick Look.

And, if you want to make sure your users will be able to see models through Quick Look before showing them a link, look at the relList of an anchor tag to see if it supports AR.
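The relList check mentioned above can be sketched as a small feature test. The helper names and the `...Like` interfaces are hypothetical; the underlying call is `relList.supports("ar")` on an anchor element.

```typescript
// Minimal structural types so the sketch type-checks without DOM typings.
interface RelListLike { supports?(token: string): boolean }
interface AnchorLike { relList?: RelListLike }

// True when this anchor reports support for rel="ar" links.
function supportsQuickLook(anchor: AnchorLike): boolean {
  return anchor.relList?.supports?.("ar") ?? false;
}

// In a page, test a freshly created anchor before showing the model link.
function pageSupportsQuickLook(): boolean {
  const doc = (globalThis as any).document;
  return doc ? supportsQuickLook(doc.createElement("a")) : false;
}
```

A page could call `pageSupportsQuickLook()` once at load and only reveal its USDZ links when it returns true.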

Next, we have immersive audio and you'll love what you hear.

Immersive audio in Safari starts with the Web Audio API, and Web Audio starts with creating an Audio context.

It lets you create new components, called nodes, including sound generators and effects that let you assemble a soundscape, just like guitar pedals at a concert, or effect machines in an audio mixing booth.

These nodes can be connected together, any way you want, to build an effects chain that you connect back to your audio context, resulting in a sonic environment with adjustable handles for all the generators and effects you create.

And the specific effect, or node that gives you the ability to spatialize audio is called the PannerNode. It lets you control properties like the position, orientation, cone angle and reference distance of the audio that you're sending through it.

Using PannerNodes along with a range of sources lets you position them in space or even move them around dynamically to create the perfect soundscape for whatever you're doing in your experience.

And here's how it works. From an audio context, build your nodes.

Then set the parameters. You can do some pretty sophisticated stuff by driving generator values with the output from other nodes, but I'm just going to set the values directly here.

Finally, connect them together and start playing! And MDN has great coverage of the "Web Audio API" as well. It's another example of being able to reuse existing, robust web standards in a new context that makes it really special. Even if you're familiar with WebAudio, it's worth checking out in visionOS to see and hear what it can do for you.
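The three steps above (build the nodes, set the parameters, connect and play) can be sketched as follows. This is an illustrative sketch, not the session's code: the oscillator source, the 440 Hz tone, the HRTF panning model and the `orbitPosition` helper are all assumptions. The Web Audio calls themselves (`createOscillator`, `createPanner`, `positionX`/`positionY`/`positionZ`, `refDistance`, `connect`, `start`) are standard.

```typescript
type Vec3 = { x: number; y: number; z: number };

// Hypothetical helper: a point on a horizontal circle around the listener.
// Angle 0 is straight ahead (the default listener faces negative Z).
function orbitPosition(angle: number, radius: number): Vec3 {
  return { x: radius * Math.sin(angle), y: 0, z: -radius * Math.cos(angle) };
}

// Play a test tone from a position in space. Call from a tap or click handler,
// since browsers generally require a user gesture before audio can start.
function playSpatialTone(position: Vec3): unknown {
  const scope = globalThis as any;
  const Context = scope.AudioContext ?? scope.webkitAudioContext;
  if (!Context) return null; // Web Audio is not available here
  const context = new Context();

  // 1. From the audio context, build the nodes.
  const oscillator = context.createOscillator();
  const panner = context.createPanner();

  // 2. Set the parameters directly.
  oscillator.frequency.value = 440;
  panner.panningModel = "HRTF";
  panner.refDistance = 1;
  panner.positionX.value = position.x;
  panner.positionY.value = position.y;
  panner.positionZ.value = position.z;

  // 3. Connect the chain back to the context's output and start playing.
  oscillator.connect(panner);
  panner.connect(context.destination);
  oscillator.start();
  return context;
}
```

For example, `playSpatialTone(orbitPosition(Math.PI / 2, 2))` would place the tone two units to the listener's right; updating the panner's position values over time moves the source around.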

And, along with those speech capabilities we covered earlier, spatial audio is the ideal counterpart to our next feature: Immersive Virtual reality, through WebXR.

Now, Virtual Reality is a deep topic, which is why we've got a whole other session on it, but in a nutshell: on the web, you can build it with WebXR. It's the W3C standard to make a cross-platform immersive Virtual Reality experience that runs right inside Safari, without the need for up-front downloads. It's perfect for offering quick, bite-sized moments of something truly immersive that anyone can share with a link, and you can make experiences that flex from mobile phone to high-end desktop and everything in between.
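The session does not walk through WebXR code, but as a hedged starting point, a page can check for immersive VR support before offering a VR mode. The `canOfferVR` and `enterVR` helpers and the `XRSystemLike` interface are hypothetical names; `navigator.xr.isSessionSupported` and `requestSession` with the `"immersive-vr"` mode are part of the WebXR Device API.

```typescript
// Minimal structural type for the part of navigator.xr used here.
interface XRSystemLike {
  isSessionSupported(mode: string): Promise<boolean>;
}

// Resolve to true only when immersive VR sessions are available.
async function canOfferVR(xr: XRSystemLike | undefined): Promise<boolean> {
  if (!xr) return false;
  try {
    return await xr.isSessionSupported("immersive-vr");
  } catch {
    return false; // treat any failure as "not supported"
  }
}

// Call from a tap or click handler; resolves to the XR session, or null if unsupported.
async function enterVR(): Promise<unknown> {
  const xr = (globalThis as any).navigator?.xr;
  if (!(await canOfferVR(xr))) return null;
  return xr.requestSession("immersive-vr");
}
```

A page might show its "Enter VR" button only after `canOfferVR` resolves to true, and call `enterVR` from that button's click handler.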

My interactive bike safety checklist has a VR mode powered by WebXR and it's the perfect way to brush up on the art of bicycle maintenance. Being prepared is half the journey, though, unfortunately, not the half that gets me out for a ride.

And that's immersive media: Spatial photos and panoramic images with the fullscreen API, immersive audio with WebAudio PannerNodes, and WebXR. There's a lot to play with!

And whenever you're developing anything, you often need to get in and inspect how it's working, or figure out why it isn't. Just like on iOS and iPadOS, you can connect Apple Vision Pro to Web Inspector on your Mac to see all the details. Here's how to get started.

First, ensure your Mac and Apple Vision Pro are on the same network. You'll also want to enable Web Inspector while you're there, though on Simulator it's enabled by default. On the physical device, find it under Apps > Safari > Advanced > Web Inspector. Next, you'll need to open your Apple Vision Pro to remote devices. That's at Settings > General > Remote Devices. Then, in Safari on your Mac, go to the Develop menu and you should see an item for connecting your Apple Vision Pro, labeled Use for Development. Your Vision Pro will generate a six-digit number that you'll need to enter on your Mac. And then you're done! You'll only need to do this the first time you connect the two machines; after that, the visionOS device will turn up in your Develop menu in Safari whenever the two devices are running and connected to the same network.

From there you can inspect DOM content and CSS rules, use the JavaScript console for inspecting code-intensive experiences like WebXR, and apply any one-off exemptions you need for debugging, like relaxing cross-origin policies on your page. Even better, you can have Web Inspector open and up on screen in your virtual Mac; it's a great way to see it all at once.

Wow! What a ride. We've covered a lot today, from interaction with eyes, hands and voice, to experiences with immersive media: Panorama and Spatial photos, Quick Look, immersive audio, and WebXR. You also saw how to get started with inspecting web content in visionOS, to get it exactly the way you want. We're just getting started with visionOS and feeling out the course, but it's an exciting trip with more to come down the road!

On the off-chance that it's not a perfectly smooth ride while you build with these features, please file any issues you encounter at bugs.webkit.org. WebKit is an open-source project, so any contribution you make, from filing the smallest bug report all the way to making a code submission, improves the project in all the places it's running.

And as always, if you've got any feedback to share about Safari or anything else in visionOS, please use the Feedback Assistant to let us know what's going on. And as I close the final turn, it's time for you to embark on your own tour! Enable Web Inspector, if you haven't already.

Make sure you get the visionOS simulator in Xcode to take a look at your web content today.

For the deeper dive on WebXR I mentioned, be sure to catch "Build Immersive Web Experiences with WebXR", and for more on how to work with 3D around the Apple ecosystem with USD, check out "What's new in USD and MaterialX". Thank you for watching, and I'll see you around!
