-

Lift subjects from images in your app

Discover how you can easily pull the subject of an image from its background in your apps. Learn how to lift the primary subject or to access the subject at a given point with VisionKit. We'll also share how you can lift subjects using Vision and combine that with lower-level frameworks like Core Image to create fun image effects and more complex compositing pipelines.

For more information about the latest updates to VisionKit, check out “What's new in VisionKit." And for more information about person segmentation in images, watch "Explore 3D body pose and person segmentation in Vision" from WWDC23.Resources

Related Videos

WWDC23

-

Search this video…

♪ ♪ Lizzy: Hi! I'm Lizzy, and I'm an engineer working on VisionKit here at Apple. I'm excited to talk to you today about how to bring subject lifting into your apps. Subject lifting was introduced in iOS 16, allowing users to select, lift, and share image subjects. First, I'll go over the basics of what subject lifting is. Then, I'll walk you through how to add subject lifting using new VisionKit API. Finally, my colleague Saumitro will dive deeper and introduce new underlying Vision API.

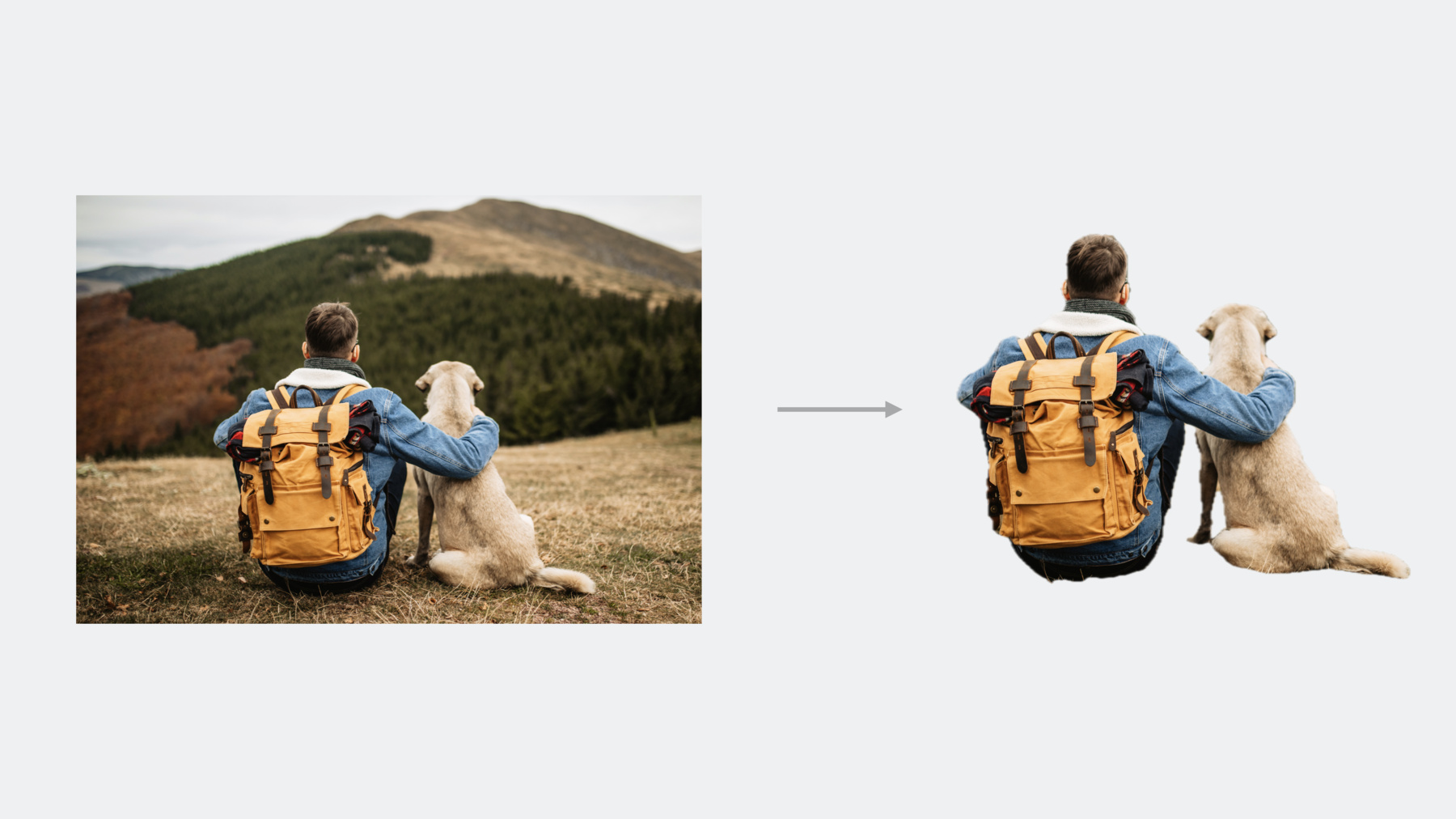

So what exactly is a subject? A subject is the foreground object, or objects, of a photo. This is not always a person or a pet. It can be anything from a building, a plate of food, or some pairs of shoes.

Images can have multiple subjects, like these three cups of coffee here. It's important to note that subjects are not always an individual object. In this example, the man and his dog together are the focal point of the image, making them one combined subject. So how can you get this into an app? There are two separate APIs available to help you add subject lifting to your apps. VisionKit. And Vision. VisionKit allows you to very easily adopt system-like subject lifting behavior, right out of the box. You can easily recreate the subject lifting UI that we all know and love, with just a few lines of code. VisionKit also exposes some basic information about these subjects, so you can give people new ways to interact with image subjects.

This all happens out-of-process, which has performance benefits but means the image size is limited. Vision is a lower-level framework and doesn't have out-of-the-box UI. This means it's not tied to a view, giving you more flexibility.

Image analysis happens in-process, and isn't as limited in image resolution as VisionKit. Finally, this API can be part of more advanced image editing pipelines, such as those using CoreImage. First, let's dive into subject lifting API in VisionKit. To add subject lifting with VisionKit, simply initialize an ImageAnalysisInteraction and add it to a view containing the image. This can be a UIImageView, but doesn't need to be. It's that simple. Now, your image will have system subject lifting interactions. Similarly, on macOS, create a ImageAnalysisOverlayView, and add it as a subview of the NSView that contains your image. You can set the preferred interaction types of your ImageAnalysisInteraction or ImageAnalysisOverlayView to choose which types of VisionKit interactions to support. The default interaction type is .automatic, which mirrors system behavior. Use this type if you want subject lifting, live text, and data detectors. The new imageSubject type only includes subject lifting, for cases when you don't want the text to be interactive. In addition to these UI interactions, VisionKit also allows you to programmatically access the subjects of an image using an ImageAnalysis. To generate an image analysis, simply create an ImageAnalyzer, then call the analyze function. Pass in the desired image and analyzer configuration. You can asynchronously access a list of all of the image's subjects using the subjects property of an ImageAnalysis. This uses the new Subject struct, which contains an image and its bounds. The highlighted subjects property returns a set of the highlighted subjects. In this example, the bottom two subjects have been highlighted. Users can highlight a subject by long-pressing on it, but you can also change the selection state by updating the highlightedSubjects set in code. You can look up a subject by point, using the async subject(at:) method. In this example, tapping here would return the middle subject. If there is no subject at that point, this method will return nil. Finally, you can generate subject images in two ways. For a single subject, just access the subject's image property. If you need an image composed of multiple subjects, use the async image(for:) method, and pass along the subjects that you'd like to include. In this example, if I want an image of just the bottom two subjects, I can use this method to generate this image. Let's see this all come together in a demo. I'm working on a puzzle app. I want to drag the pieces to the puzzle, but I can't lift any of them yet. Let's fix that. First, I'll need to enable subject lifting interactions in this image, so the pieces can be interacted with. I can do this by creating an ImageAnalysisInteraction... ...and simply adding it to my view.

I used the imageSubject interaction type here, since I don't need to include live text.

Awesome! Now I can select puzzle pieces and interact with them like this. This image wasn't pre-processed in any way. This is done with just subject lifting. I added some code to handle dropping puzzle pieces into the puzzle, and even adjusts them into place.

It's looking pretty cool, but I want to make my app feel even more engaging. I'm thinking of adding a drop shadow below each puzzle piece as I hover over it, to give a slight 3D effect. I already have a hover gesture handler here, I just need to add the shadow. I can't easily edit the image, so instead, I'm going to do it with an image layering trick. First, I check that I'm hovering over a subject by calling imageAnalysis.subject(at point:). I have a method addShadow(for subject:), which inserts a copy of the subject image, turns it grey, and offsets it slightly from the original subject position. Then, I add a copy of the subject image on top of the shadow so it looks three dimensional. Finally, if the hover point didn't intersect with a subject, I clear the shadow.

Let's try it out.

Awesome. The pieces now get a shadow effect when I hover over them.

Using VisionKit, I was able to set up subject lifting in my app, and even add a fun subject effect with just a few lines of code.

Next, I'll pass things along to my colleague Saumitro, who will talk about some new Vision API and how to integrate it into your apps. Saumitro: Thanks, Lizzy! Hi, I'm Saumitro, and I'm an engineer on the Vision Team. VisionKit's API is the easiest way to get started with subject lifting. For applications that need more advanced features, Vision has you covered. Subject lifting joins Vision's existing collection of segmentation APIs like saliency and person segmentation. Let's quickly review each of their strengths and see how subject lifting fits in. Saliency requests, like the ones for attention and objectness, are best used for coarse, region-based analysis. Notice that the generated saliency maps are at a fairly low resolution and as such, not suitable for segmentation. Instead, you could use the salient regions for tasks like auto-cropping an image. The person segmentation API shines at producing detailed segmentation masks for people in the scene. Use this if you specifically want to focus on segmenting people. The new person instance segmentation API takes things further by providing a separate mask for each person in the scene. To learn more, check out this session on person segmentation. In contrast to person segmentation, the newly introduced subject lifting API is "class agnostic". Any foreground object, regardless of its semantic class, can be potentially segmented. For instance, notice how it picks up the car in addition to the people in this image. Now let's take a look at some of the key concepts involved. You start with an input image. The subject lifting request processes this image and produces a soft segmentation mask at the same resolution. Taking this mask and applying it to the source image results in the masked image. Each distinct segmented object is referred to as an instance. Vision also provides you with pixelwise information about these instances. This instance mask maps pixels in the source image to their instance index. The zero index is reserved for the background, and then each foreground instance is labeled sequentially, starting at 1. Beyond being contiguously labeled, the ordering of these IDs is not guaranteed. You can use these indices to segment a subset of the foreground objects in the source image. If you're designing an interactive app, this instance mask is also useful for hit testing. I'll demonstrate how to do both of these tasks in a bit. Let's dive into the API. Subject lifting follows the familiar pattern of image-based requests in Vision. You start by instantiating the foreground instance mask request, followed by an image request handler with your input image. You then perform the request. Under the hood, this is when Vision analyzes the image to figure out the subject. While it's optimized to take advantage of Apple's hardware for efficiency, it's still a resource intensive task and best deferred to a background thread so as not to block the UI. One common way to do this is to perform this step asynchronously on a separate DispatchQueue. If one or more subjects are detected in the input image, the results array will be populated with a single observation. From here on, you can query the observation for masks and segmented images. Let's take a closer look at two of the parameters that control which instances are segmented and how the results are cropped. The instances parameter is an IndexSet that controls which objects are extracted in the final segmented image or mask. As an example, this image contains two foreground instances, not including the background instance. Since segmenting all detected foreground instances is a very common operation, Vision provides a handy allInstances property that returns an IndexSet containing all foreground instance indices. For the image here, this includes indices 1 and 2. Note that the background instance 0 is not included. You can also provide just a subset of those indices. Here's just instance 1. And just instance 2. You can also control how the final masked image is cropped. If this parameter is set to false, the output image resolution matches the input image. This is nice when you want to preserve the relative location of the segmented objects, say, for downstream compositing operations. If you set it to true, you get a tight crop for the selected instances. In the examples so far, I've been working with the fully masked image outputs. However, for some operations, like applying masked effects, it can be more convenient to work with just the segmentation masks instead. You can generate these masks by invoking the createScaledMask method on the observation. The parameters behave the same as before. The output is a single channel floating point pixel buffer containing the soft segmentation mask. The mask I just generated is perfectly suited for use with CoreImage. Vision, much like VisionKit, produces SDR outputs. Performing the masking in CoreImage, however, preserves the high dynamic range of the input. To learn more about this, consider checking out the session on adding HDR to your apps. One way to perform this masking is to use the CIBlendWithMask filter. I will start with the source image that needs to be masked. This will typically be the same image you passed in to Vision. The mask obtained from Vision's createScaledMask call. And finally, the new background image that the subject will be composited on top of. Using an empty image for this will result in a transparent background. Alternatively, if you plan to composite the result on top of a new background, you can directly pass it in here. And that's pretty much it. The output will be an HDR-preserved masked and composited image. Now let's put everything together to build a cool subject lifting visual effects app. You can remove the background and show the views underneath it, or replace it with something else. On top of that, you can apply one of the preset effects. And the effects compose with the selected background. You can even tap on a foreground instance to selectively lift it. Let's look at an outline of how I will approach the creation of this app. The core of our app relies on an effects pipeline that accepts inputs from the UI and performs all the work necessary for generating the final output. I'll start by performing subject lifting on the source image. An optional tap will allow the selection of individual instances. The resulting mask will be applied to the source image. And finally, the selected background and visual effects will be applied and composited to produce the final output image. These last two steps will be accomplished using CoreImage. Our top level function takes in the input image, the selected background image and effect, and potentially a tap position from the user for selecting one of the instances. The Effect type here is just a simple enum for our presets. Its output is the final composited image, ready for display in the UI. This task can be deconstructed into two stages. First, generating the subject mask for the selected instances. And second, using that mask to apply the selected effect. Let's start with the first stage. The input to this stage is the source image and the optional tap position. We have already encountered most of the code here, which simply performs the Vision request and returns the mask. The interesting bit is this line which maps the tap position to a set of indices using the label mask. Let's take a closer look. If the tap is missing, I'll default to using all instances. I want to map the tap position to a pixel in the instance mask. There are two pieces of information that are relevant here. First, the UI normalizes the tap position to be in 0, 1 before passing it in. This is nice because I don't have to worry about details like display resolutions and scaling factors. Second, it uses the default UIKit coordinate system which has the origin at the top-left. This is aligned with our image-space coordinate of the pixel buffer. So I can perform this transformation using this existing Vision helper function. I now have all the information necessary to look up the tapped instance label. This involves directly accessing the pixel buffer's data, and I'll show you how to do that next. Once I have the label, I check to see if it's zero. Recall that a zero label implies that the user tapped on a background pixel. In this case, I'll fall back to selecting all instances. Otherwise, I'll return a singleton set with just the selected label. This bit of code fills in how the instance label lookup is performed. As with any pixel buffer, I first need to lock it before accessing its data. Read-only access is sufficient for our purposes. The rows of a pixel buffer may be padded for alignment, so the most robust way to compute the byte offset for a pixel is to use its bytesPerRow value. Since the instanceMask is a single channel UInt8 buffer, I don't have to worry about any further scaling. I'm done reading from the instance mask, so I can unlock the buffer. And with that wrapped up, I have the mask with the selected instance isolated. I can now move on to applying the effects. The first step here is to apply the selected effect to the background. Once that's done, I will use CoreImage to composite the masked subject on top of the transformed background. The first few effects are quite straightforward and direct applications of existing CoreImage filters. For instance, for highlighting the subject, I used the exposure adjustment filter to dim the background. The bokeh effect is slightly more involved. In addition to blurring the background, I want a halo highlighting our selected subject. A white cut out for the subject before blurring would do the trick. A quick way to accomplish this is to reuse our current function and pass in a solid white image for the subject. And with that, I have the base layer for compositing. Finally, I will drop in the CoreImage blending snippet from earlier. This composites the lifted subject on top of the newly transformed background. And with that final piece of the effects pipeline in place, the app is now complete. I hope it gave you a taste of what's possible with the new subject lifting API. In summary, VisionKit is the fastest way to incorporate subject lifting into your apps. For more advanced applications, you can drop down to Vision's API. And finally, CoreImage is the perfect companion for performing HDR-enabled image processing with subject lifting. Lizzy and I hope you enjoyed this video, and we're very excited to see what you build. ♪ ♪

-