Visualize and optimize Swift concurrency

Learn how you can optimize your app with the Swift Concurrency template in Instruments. We'll discuss common performance issues and show you how to use Instruments to find and resolve these problems. Learn how you can keep your UI responsive, maximize parallel performance, and analyze Swift concurrency activity within your app.

To get the most out of this session, we recommend familiarity with Swift concurrency (including tasks and actors).

Resources

Related Videos

WWDC23

WWDC22

- Eliminate data races using Swift Concurrency

- Track down hangs with Xcode and on-device detection

- What's new in Swift

- What's new in UIKit

WWDC21


♪ ♪

Mike: Welcome to Visualize and Optimize Swift Concurrency. My name is Mike, and I work on the Swift runtime library.

Harjas: Hi, I'm Harjas, and I work on Instruments.

Mike: Together, we're going to discuss ways to better understand your Swift Concurrency code and make it go faster, including a new visualization tool available in Instruments 14. Let's start off with a really quick recap of the various parts of Swift Concurrency and how they work together, to make sure you're up to speed. After that, we'll demo the new concurrency instrument. We'll show you how we use it to solve some real performance issues with an app using Swift Concurrency. Finally, we'll discuss the potential problems of thread pool exhaustion and continuation misuse and how to avoid them.

Last year, we introduced Swift Concurrency. This was a new language feature that includes async/await, structured concurrency, and Actors. We've been pleased to see a great deal of adoption of these features since then, both inside and outside Apple. Swift concurrency adds several new features to the language which work together to make concurrent programming easier and safer. Async/await are basic syntactic building blocks for concurrent code. They allow you to create and call functions that can suspend their work in the middle of execution, then resume that work later, without blocking an execution thread.

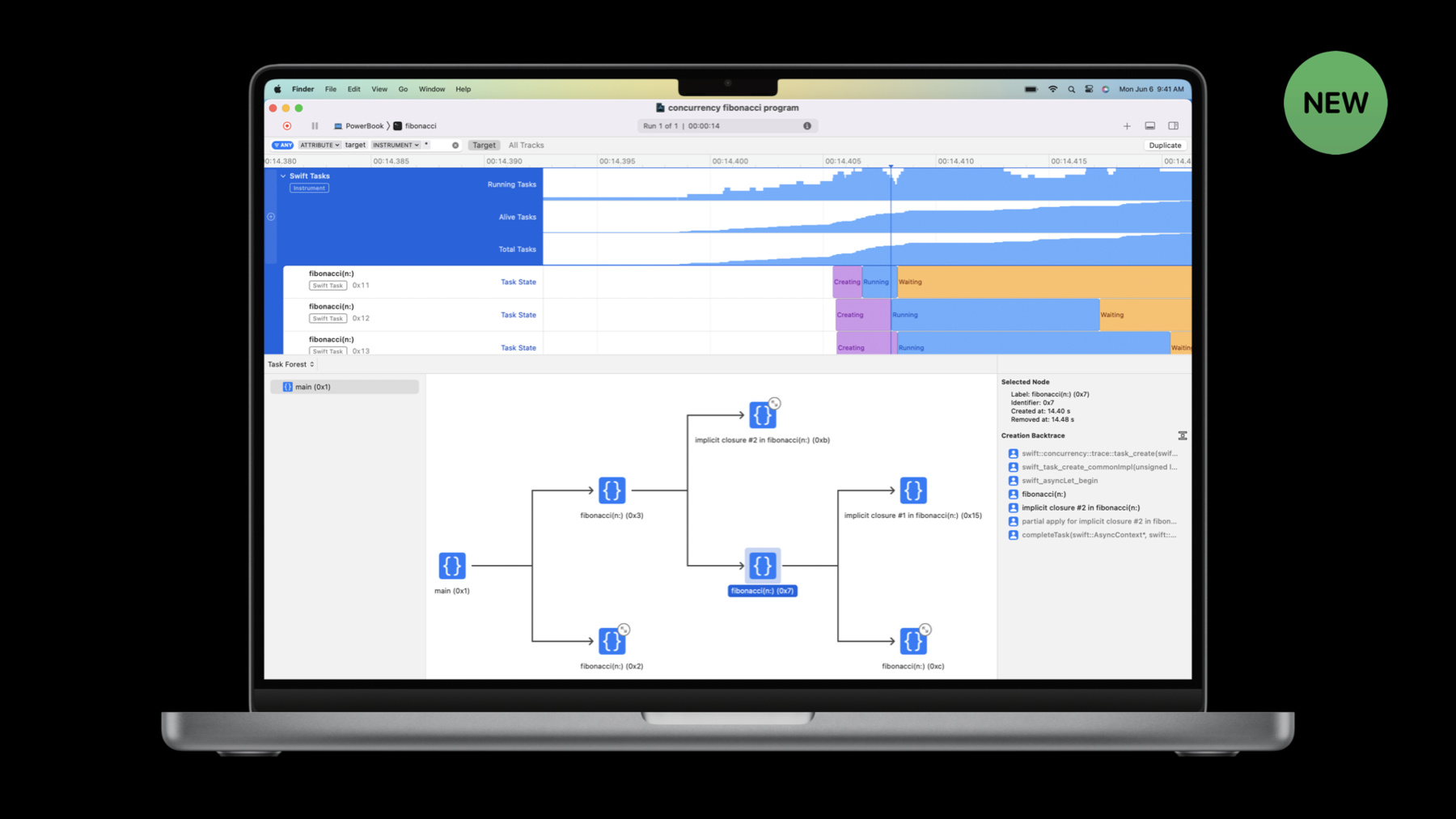

Tasks are the basic unit of work in concurrent code. Tasks execute concurrent code and manage its state and associated data. They contain local variables, handle cancellation, and begin and suspend execution of async code. Structured concurrency makes it easy to spawn child tasks to run in parallel and wait for them to complete. The language provides syntax which keeps the work grouped together and ensures that tasks are awaited or automatically canceled if not used. Actors coordinate multiple tasks that need to access shared data. They isolate data from the outside, and allow only one task at a time to manipulate their internal state, avoiding data races from concurrent mutation. New in Instruments 14, we're introducing a set of instruments that can capture and visualize all of this activity in your app, helping you to understand what your app is doing, locate problems, and improve performance. For a more in-depth discussion of the fundamentals of Swift Concurrency, we have several videos about these features linked in the Related Videos section.
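As a minimal sketch of how the pieces in this recap fit together, the following example combines an async function, a task group (structured concurrency), and an Actor. The names `Counter`, `wordCount(of:)`, and `countAll(_:)` are hypothetical and chosen only for illustration; they do not come from the session.

```swift
// Hypothetical Actor: protects `total` so only one task at a time can mutate it.
actor Counter {
    private(set) var total = 0
    func add(_ n: Int) { total += n }
}

// An async function; callers use `await`, which marks a potential suspension point.
func wordCount(of text: String) async -> Int {
    text.split(separator: " ").count
}

// Structured concurrency: each child task runs independently, and the group
// does not return until every child task has finished.
func countAll(_ texts: [String]) async -> Int {
    let counter = Counter()
    await withTaskGroup(of: Void.self) { group in
        for text in texts {
            group.addTask {
                let n = await wordCount(of: text)
                await counter.add(n) // brief, serialized access to the Actor's state
            }
        }
    }
    return await counter.total
}
```

Calling `await countAll(["a b", "c d e"])` would add up the word counts of both strings through the Actor.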

Let's take a look at optimizing an app using Swift Concurrency code. Swift concurrency makes it easy to write correct concurrent and parallel code. However, it's still possible to write code that misuses concurrency constructs. It's also possible to use them correctly but in a way that doesn't get the performance benefits you were aiming for.

There are a few common problems that can arise when writing code using Swift concurrency that can cause poor performance or bugs. Main Actor blocking can cause your app to hang. Actor contention and thread pool exhaustion hurt performance by reducing parallel execution. Continuation misuse causes leaks or crashes. The new Swift Concurrency instrument can help you discover and fix these problems.

Let's take a look at each of these, starting with main Actor blocking. Main Actor blocking occurs when a long-running task runs on the main Actor. The main Actor is a special Actor which executes all of its work on the main thread. UI work must be done on the main thread, and the main Actor allows you to integrate UI code into Swift Concurrency. However, because the main thread is so important for UI, it needs to be available and can't be occupied by a long-running unit of work. When this happens, your app appears to lock up and becomes unresponsive. Code running on the main Actor must finish quickly, and either complete its work or move computation off of the main Actor and into the background. Work can be moved into the background by putting it in a normal Actor or in a detached task. Small units of work can be executed on the main Actor to update UI or perform other tasks that must be done on the main thread. Let's see a demo of this in action.

Harjas: Thanks, Mike. Here we have our File Squeezer application. We built this application to be able to quickly compress all the files in a folder. It seems to work alright for small files. However, when I use larger files, it takes much longer than expected and the UI is completely frozen and does not respond to any interactions. This behavior is very off-putting to users and may make them think that the application has crashed or will never finish. We should strive to ensure that our UI is always responsive for the best user experience.

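
The advice above about keeping main Actor work short can be sketched roughly as follows. This is an illustration under assumptions, not the File Squeezer code: `ProgressModel` and `expensiveChecksum(upTo:)` are hypothetical names, and the checksum merely stands in for any long-running computation.

```swift
// Hypothetical CPU-heavy function. It is not isolated to any Actor,
// so it can run on any thread in the concurrency thread pool.
func expensiveChecksum(upTo n: Int) -> Int {
    (1...n).reduce(0) { ($0 &+ $1) % 1_000_003 }
}

@MainActor
final class ProgressModel {
    var status = "idle"

    // The heavy work runs in a detached task, off the main Actor.
    // Only the small state update afterwards runs on the main Actor.
    func refresh() async {
        let result = await Task.detached {
            expensiveChecksum(upTo: 1_000_000)
        }.value
        status = "done: \(result)"
    }
}
```

While `refresh()` is suspended at the `await`, the main Actor is free to handle other work such as UI events.
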
To investigate this performance problem, we can use the new Swift Concurrency template in Instruments. The Swift Tasks and Swift Actors instruments provide a full suite of tools to help you visualize and optimize your concurrency code. When you're just starting to investigate a performance problem, you should first take a look at the top-level statistics provided to you by the Swift Tasks instrument. The first of these is Running Tasks, which shows you how many tasks are executing simultaneously. Next, we have Alive Tasks, which shows how many tasks are present at a given point in time. And finally, Total Tasks, which graphs the total number of tasks that have been created up until that point in time. When you're attempting to reduce your application's memory footprint, you should take a close look at the Alive and Total Tasks statistics. The combination of all of these statistics gives you a good picture of how well your code is parallelizing and how many resources you are consuming.

One of the many detail views for this instrument is the Task Forest. Shown in the bottom half of this window, it provides a graphical representation of the parent-child relationships between Tasks in structured concurrency code. Next, we have our Task Summary view. This shows how much time each Task spends in different states. We have super-charged the view by allowing you to right-click on a Task to be able to pin a Track containing all the information about the selected Task to the timeline. This allows you to quickly find and learn about Tasks of interest that may be running for a very long time or stuck waiting to get access to an Actor.

Once you pin a Swift Task to the timeline, you get four key features. First is the track that shows you what state your Swift Task is in. Second is the Task creation backtrace in the extended detail view. Third is the narrative view that provides more context about the state a Swift Task is in. For example, if it's waiting on a Task, it tells you which Task it is waiting on. Lastly, you have access to the same pin action in the narrative view as you did in the summary view. So, you can pin a child Task, a thread, or even a Swift Actor to the timeline. This narrative view will be instrumental in finding how a Swift Task is related to your other concurrency primitives and the CPU.

Now that we have seen a brief overview of some of the features in the new instrument, let's profile our application and optimize our code. We can do this by pulling up our project in Xcode and pressing Command-I. This will compile our application, open up Instruments, and pre-select the target to the File Squeezer application. From here you can pick the Swift Concurrency option in the template picker and start recording.

Once again, I'll drop the large files onto the app.

Again, we see that the app starts spinning and the UI is not responsive. We will let this run for a few more seconds so that Instruments can capture all the information about our application.

Now that we have a trace, we can start investigating. I'm going to fullscreen this trace to better see all the information.

We can use option-drag to zoom in on our area of interest.

In the process track, Instruments shows us exactly where this UI hang was occurring. This can be useful for cases when it is not clear when the hang occurred or how long it lasted. As I mentioned earlier, a good place to start is the top-level Swift Task statistics. What catches my eye right away is the Running Tasks count. For most of the time, only one Task is running. This tells us part of the problem is that all of our work is being forced to serialize. We can use the Task State summary to find our longest running Task and use the pin action to pin it to the timeline.

The narrative view for this Task tells us that it ran on a background thread for a short amount of time, and then ran on the Main Thread for a long time. To further investigate, we can pin the Main Thread to the timeline.

The Main Thread is being blocked by several long running Tasks. This demonstrates the issue of Main Actor blocking that Mike spoke about. So the questions we have to ask ourselves are, "What is this Task doing?" and "Where did this Task come from?" We can switch back to the narrative view to answer both of these questions. The creation backtrace in the extended detail view shows that the task was created in the compressAllFiles function. The narrative shows that the Task is executing closure number one in compressAllFiles. By right-clicking on this symbol, we can open this in the source viewer.

Closure number one inside this function is calling our compression work. Now that we know where this Task was created and what it is doing, we can open our code in Xcode and adapt it so that we don't run these heavy computations on the Main Thread. The compressFile function is located within the CompressionState class. The entire CompressionState class is annotated to run on the @MainActor. This explains why the Task also ran on the Main Thread. We need this entire class to be on the MainActor because the @Published property here must only be updated from the Main Thread; otherwise, we could run into runtime issues.

So, instead we could try to convert this class into its own Actor. However, the compiler will tell us that we can't do this because essentially we would be saying that this shared mutable state needs to be protected by two different Actors. But that does give us a hint for what the real solution is. We have two different pieces of mutable state here within this class. One piece of state, the 'files' property, needs to be isolated to the MainActor because it is observed by SwiftUI. The other piece of state, the logs, needs to be protected from concurrent access, but it doesn't matter which thread accesses the logs at any given point. Thus, it doesn't actually need to be on the Main Actor. We still want to protect it from concurrent access, though, so we wrap it in its own Actor. All we need now is to add a way for Tasks to hop between the two as needed. We can create a new Actor and call it ParallelCompressor.

We can then copy the log state into the new Actor, and add some extra setup code.

From here, we need to make these Actors communicate with each other. First, let's remove the code that referred to the logs variable from the CompressionState class, and add it to our ParallelCompressor Actor.

Then finally, we need to update CompressionState to invoke compressFile on the ParallelCompressor.

With these changes, let's test our application again. Once again, I'll drop the large files onto our application.

The UI is no longer hung, which is a great improvement, but we aren't getting the speed that we would expect. We really want to take full advantage of all the cores in the machine to do this work as fast as possible. Mike, what else should we be watching out for?

Mike: We've solved our hang by moving work off of the Main Actor, but we're still not getting the performance we want. To see why, we need to take a closer look at Actors. Actors make it safe for multiple tasks to manipulate shared state. However, they do this by serializing access to that shared state. Only one task at a time is allowed to occupy the Actor, and other tasks that need to use that Actor will wait. Swift concurrency allows for parallel computation using unstructured tasks, task groups, and async let. Ideally, these constructs are able to use many CPU cores simultaneously. When using Actors from such code, beware of performing large amounts of work on an Actor that's shared among these tasks. When multiple tasks attempt to use the same Actor simultaneously, the Actor serializes execution of those tasks. Because of this, we lose the performance benefits of parallel computation.

This is because each task must wait for the Actor to become available. To fix this, we need to make sure that tasks only run on the Actor when they really need exclusive access to the Actor's data. Everything else should run off of the Actor. We divide the task into chunks. Some chunks must run on the Actor, and the others don't need to. The non-Actor-isolated chunks can be executed in parallel, which means the computer can finish the work much faster. Let's see a demo of this in action.

Harjas: Thanks, Mike. Let's take a look at the trace of our updated "File Squeezer" application and keep in mind what Mike has just taught us. The Task Summary view shows us that our concurrency code is spending an alarming amount of time in the Enqueued state. This means we have a lot of Tasks waiting to get exclusive access to an Actor. Let's pin one of these Tasks to learn why.

This Task spends quite a while waiting to get onto the ParallelCompressor Actor before it runs the compression work. Let's go ahead and pin the Actor to our timeline.

Here we have some top-level data for the ParallelCompressor Actor. This Actor Queue seems to be getting blocked by some long running Tasks. Tasks should really only stay on an Actor for as long as needed. Let's go back to the Task narrative.

After the enqueue on ParallelCompressor, the Task runs in closure number one in compressAllFiles. So let's start our investigation there. The source code shows us that this closure is primarily running our compression work. Since the compressFile function is part of the ParallelCompressor Actor, the entire execution of this function happens on the Actor, blocking all other compression work. To resolve this issue, we need to pull the compressFile function out of Actor-isolation and into a detached task.

By doing this, we can keep the detached task on an Actor only for as long as needed to update the relevant mutable state. So now the compress function can be executed freely, on any thread in the thread pool, until it needs to access Actor-protected state. For example, when it needs to access the 'files' property, it'll move onto the Main Actor. But as soon as it's done there, it moves into the "sea of concurrency" again, until it needs to access the logs property, for which it moves on to the ParallelCompressor Actor. But again, as soon as it's done there, it leaves the Actor again to be executed on the thread pool. But of course, we don't have just one task doing compression work; we have many. And by not being constrained to an Actor, they can all be executed concurrently, only limited by the number of threads.

Of course, each Actor can only execute one task at a time, but most of the time, our Tasks don't need to be on an Actor. So like Mike explained, this allows our compression tasks to be executed in parallel and utilize all available CPU cores. So let's make this change now.

We can mark the compressFile function as nonisolated.

This does result in a few compiler errors. By marking it as nonisolated, we told the Swift compiler that we don't need access to the shared state of this Actor. But that isn't entirely true. This log function is Actor-isolated and it needs access to the shared mutable state. In order to fix this, we need to make this function async and then mark all of our log invocations with the await keyword.

Now we need to update our task creation to create a detached task.

We do this to ensure the Task does not inherit the Actor-context that it was created in. For detached tasks, we need to explicitly capture self.
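The pattern from this part of the demo (do the heavy work outside the Actor, and hop onto it only briefly to touch shared state) can be sketched in isolation as follows. This is a simplified illustration, not the File Squeezer code: `Log`, `countRuns(in:)`, and `processAll(_:log:)` are hypothetical names, and counting runs of equal bytes merely stands in for real compression work.

```swift
// Hypothetical Actor that owns the shared log, like the logs in the demo.
actor Log {
    private(set) var entries: [String] = []
    func append(_ entry: String) { entries.append(entry) }
}

// Stand-in for CPU-heavy work. It is not Actor-isolated,
// so many tasks can execute it at the same time.
func countRuns(in bytes: [UInt8]) -> Int {
    var runs = 0
    var previous: UInt8?
    for byte in bytes {
        if byte != previous { runs += 1 }
        previous = byte
    }
    return runs
}

func processAll(_ inputs: [[UInt8]], log: Log) async -> [Int] {
    await withTaskGroup(of: (Int, Int).self) { group in
        for (index, input) in inputs.enumerated() {
            group.addTask {
                let runs = countRuns(in: input)                  // runs off the Actor
                await log.append("input \(index): \(runs) runs") // brief hop onto the Actor
                return (index, runs)
            }
        }
        // Collect results in input order; child tasks may finish in any order.
        var results = [Int](repeating: 0, count: inputs.count)
        for await (index, runs) in group { results[index] = runs }
        return results
    }
}
```

Each child task occupies the `Log` Actor only for the duration of a single `append`, so the Actor's queue stays short even when many tasks run at once.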

Let's test our application again.

The app is able to compress all the files simultaneously and the UI remains responsive. To verify our improvements, we can check the Swift Actors instrument. Looking at the ParallelCompressor Actor, most of the work executed on the Actor is only for a short amount of time and the queue size never gets out of hand. To recap, we used the instrument to isolate the cause of a UI hang, we restructured our concurrency code for better parallelism, and verified performance improvements using data. Now Mike is gonna tell us about some other potential performance issues.

Mike: There are two common problems I'd like to cover beyond what we've seen in the demo. First, let's talk about thread pool exhaustion. Thread pool exhaustion can hurt performance or even deadlock an application. Swift concurrency requires tasks to make forward progress when they're running. When a task waits for something, it normally does so by suspending. However, it's possible for code within a task to perform a blocking call, such as blocking file or network IO, or acquiring locks, without suspending. This breaks the requirement for tasks to make forward progress. When this happens, the task continues to occupy the thread where it's executing, but it isn't actually using a CPU core. Because the pool of threads is limited and some of them are blocked, the concurrency runtime is unable to fully use all CPU cores. This reduces the amount of parallel computation that can be done and the maximum performance of your app. In extreme cases, when the entire thread pool is occupied by blocked tasks, and they're waiting on something that requires a new task to run on the thread pool, the concurrency runtime can deadlock.

Be sure to avoid blocking calls in tasks. File and network IO must be performed using async APIs. Avoid waiting on condition variables or semaphores. Fine-grained, briefly-held locks are acceptable if necessary, but avoid locks that have a lot of contention or are held for long periods of time. If you have code that needs to do these things, move that code outside of the concurrency thread pool (for example, by running it on a Dispatch queue) and bridge it to the concurrency world using continuations. Whenever possible, use async APIs for blocking operations to keep the system operating smoothly.

When you're using continuations, you must be careful to use them correctly. Continuations are the bridge between Swift concurrency and other forms of async code. A continuation suspends the current task and provides a callback which resumes the task when called. This can then be used with callback-based async APIs. From the perspective of Swift concurrency, the task suspends, and then it resumes when the continuation is resumed. From the perspective of the callback-based async API, the work begins, and then the callback is called when the work completes. The Swift Concurrency instrument knows about continuations and will mark the time interval accordingly, showing you that the task was waiting on a continuation to be called.

Continuation callbacks have a special requirement: they must be called exactly once, no more, no less. This is a common requirement in callback-based APIs, but it tends to be an informal one and is not enforced by the language, and oversights are common. Swift concurrency makes this a hard requirement. If the callback is called twice, the program will crash or misbehave. If the callback is never called, the task will leak. In this code snippet we use withCheckedContinuation to get a continuation. We then invoke a callback-based API. In the callback, we resume the continuation. This meets the requirement of calling it exactly once. It's important to be careful when the code is more complex. On the left, we've modified the callback to only resume the continuation on success. This is a bug. On failure, the continuation will not be resumed, and the task will be suspended forever. On the right, we're resuming the continuation twice. This is also a bug, and the app will misbehave or crash. Both of these snippets violate the requirement to resume the continuation exactly once.

Two kinds of continuations are available: checked and unsafe. Always use the withCheckedContinuation API for continuations unless performance is absolutely critical. Checked continuations automatically detect misuse and flag an error. When a checked continuation is called twice, the continuation traps. When the continuation is never called at all, a message is printed to the console when the continuation is destroyed, warning you that the continuation leaked. The Swift Concurrency instrument will show the corresponding task stuck indefinitely in the continuation state.

There is much more to look into for the new Swift Concurrency template in Instruments. You can get graphic visualization of structured concurrency, view task creation calltrees, and inspect the exact assembly instructions to get a full picture of the Swift Concurrency runtime. To learn more about how Swift Concurrency works under the hood, watch last year's session on "Swift Concurrency: Behind the Scenes." And to learn more about data races, watch "Eliminate data races using Swift Concurrency." Thanks for watching! And have fun debugging your concurrency code.
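The slides referred to in the transcript are not reproduced on this page. As a hedged sketch of the two recommendations above (run blocking work on a Dispatch queue, and resume a checked continuation exactly once), the example below wraps a callback-based function. `legacyLoad(id:completion:)`, `LoadError`, and `load(id:)` are hypothetical names invented for illustration, and the throwing variant `withCheckedThrowingContinuation` is used here so that the failure path also resumes the continuation.

```swift
import Dispatch

enum LoadError: Error { case invalidID }

// Hypothetical callback-based API. Its work runs on a Dispatch queue,
// outside the Swift concurrency thread pool, so blocking calls here
// would not tie up a concurrency thread.
func legacyLoad(id: Int, completion: @escaping @Sendable (Result<String, Error>) -> Void) {
    DispatchQueue.global().async {
        if id >= 0 {
            completion(.success("item \(id)"))
        } else {
            completion(.failure(LoadError.invalidID))
        }
    }
}

// Bridge into Swift concurrency. The calling task suspends until the callback fires.
func load(id: Int) async throws -> String {
    try await withCheckedThrowingContinuation { continuation in
        legacyLoad(id: id) { result in
            // A single resume call covers both success and failure,
            // so the continuation is resumed exactly once on every path.
            continuation.resume(with: result)
        }
    }
}
```

Passing the whole `Result` to `resume(with:)` is one way to avoid the "only resumed on success" bug described above, because there is no branch on which the resume call can be forgotten.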


10:24 - CompressionState class

```swift
@MainActor
class CompressionState: ObservableObject {
    @Published var files: [FileStatus] = []
    var logs: [String] = []

    func update(url: URL, progress: Double) {
        if let loc = files.firstIndex(where: {$0.url == url}) {
            files[loc].progress = progress
        }
    }

    func update(url: URL, uncompressedSize: Int) {
        if let loc = files.firstIndex(where: {$0.url == url}) {
            files[loc].uncompressedSize = uncompressedSize
        }
    }

    func update(url: URL, compressedSize: Int) {
        if let loc = files.firstIndex(where: {$0.url == url}) {
            files[loc].compressedSize = compressedSize
        }
    }

    func compressAllFiles() {
        for file in files {
            // This Task inherits the main Actor, so the compression runs on the main thread.
            Task {
                let compressedData = compressFile(url: file.url)
                await save(compressedData, to: file.url)
            }
        }
    }

    func compressFile(url: URL) -> Data {
        log(update: "Starting for \(url)")
        let compressedData = CompressionUtils.compressDataInFile(at: url) { uncompressedSize in
            update(url: url, uncompressedSize: uncompressedSize)
        } progressNotification: { progress in
            update(url: url, progress: progress)
            log(update: "Progress for \(url): \(progress)")
        } finalNotificaton: { compressedSize in
            update(url: url, compressedSize: compressedSize)
        }
        log(update: "Ending for \(url)")
        return compressedData
    }

    func log(update: String) {
        logs.append(update)
    }
}
```

11:49 - CompressionState class using ParallelCompressor actor

```swift
actor ParallelCompressor {
    var logs: [String] = []
    unowned let status: CompressionState

    init(status: CompressionState) {
        self.status = status
    }

    func compressFile(url: URL) -> Data {
        log(update: "Starting for \(url)")
        let compressedData = CompressionUtils.compressDataInFile(at: url) { uncompressedSize in
            Task { @MainActor in
                status.update(url: url, uncompressedSize: uncompressedSize)
            }
        } progressNotification: { progress in
            Task { @MainActor in
                status.update(url: url, progress: progress)
                await log(update: "Progress for \(url): \(progress)")
            }
        } finalNotificaton: { compressedSize in
            Task { @MainActor in
                status.update(url: url, compressedSize: compressedSize)
            }
        }
        log(update: "Ending for \(url)")
        return compressedData
    }

    func log(update: String) {
        logs.append(update)
    }
}

@MainActor
class CompressionState: ObservableObject {
    @Published var files: [FileStatus] = []
    var compressor: ParallelCompressor!

    init() {
        self.compressor = ParallelCompressor(status: self)
    }

    func update(url: URL, progress: Double) {
        if let loc = files.firstIndex(where: {$0.url == url}) {
            files[loc].progress = progress
        }
    }

    func update(url: URL, uncompressedSize: Int) {
        if let loc = files.firstIndex(where: {$0.url == url}) {
            files[loc].uncompressedSize = uncompressedSize
        }
    }

    func update(url: URL, compressedSize: Int) {
        if let loc = files.firstIndex(where: {$0.url == url}) {
            files[loc].compressedSize = compressedSize
        }
    }

    func compressAllFiles() {
        for file in files {
            Task {
                let compressedData = await compressor.compressFile(url: file.url)
                await save(compressedData, to: file.url)
            }
        }
    }
}
```

17:46 - CompressionState class using ParallelCompressor with minimal actor-isolation and detached tasks

```swift
actor ParallelCompressor {
    var logs: [String] = []
    unowned let status: CompressionState

    init(status: CompressionState) {
        self.status = status
    }

    nonisolated func compressFile(url: URL) async -> Data {
        await log(update: "Starting for \(url)")
        let compressedData = CompressionUtils.compressDataInFile(at: url) { uncompressedSize in
            Task { @MainActor in
                status.update(url: url, uncompressedSize: uncompressedSize)
            }
        } progressNotification: { progress in
            Task { @MainActor in
                status.update(url: url, progress: progress)
                await log(update: "Progress for \(url): \(progress)")
            }
        } finalNotificaton: { compressedSize in
            Task { @MainActor in
                status.update(url: url, compressedSize: compressedSize)
            }
        }
        await log(update: "Ending for \(url)")
        return compressedData
    }

    func log(update: String) {
        logs.append(update)
    }
}

@MainActor
class CompressionState: ObservableObject {
    @Published var files: [FileStatus] = []
    var compressor: ParallelCompressor!

    init() {
        self.compressor = ParallelCompressor(status: self)
    }

    func update(url: URL, progress: Double) {
        if let loc = files.firstIndex(where: {$0.url == url}) {
            files[loc].progress = progress
        }
    }

    func update(url: URL, uncompressedSize: Int) {
        if let loc = files.firstIndex(where: {$0.url == url}) {
            files[loc].uncompressedSize = uncompressedSize
        }
    }

    func update(url: URL, compressedSize: Int) {
        if let loc = files.firstIndex(where: {$0.url == url}) {
            files[loc].compressedSize = compressedSize
        }
    }

    func compressAllFiles() {
        for file in files {
            Task.detached {
                let compressedData = await self.compressor.compressFile(url: file.url)
                await save(compressedData, to: file.url)
            }
        }
    }
}
```