
Bring your world into augmented reality

Follow along as we demonstrate how you can use Object Capture and RealityKit to bring real-world objects into an augmented reality game. We'll show you how to capture detailed items using the Object Capture framework, add them to a RealityKit project in Xcode, apply stylized shaders and animations, and use them as part of an AR experience. We'll also share best practices when working with ARKit, RealityKit, and Object Capture.

To get the most out of this session, we recommend first watching "Dive into RealityKit 2" and "Create 3D models with Object Capture" from WWDC21.

Resources

- Using object capture assets in RealityKit

- Creating a photogrammetry command-line app

- Capturing photographs for RealityKit Object Capture


- Building an immersive experience with RealityKit

- RealityKit

Related Videos

WWDC22

WWDC21

-

Search this video…

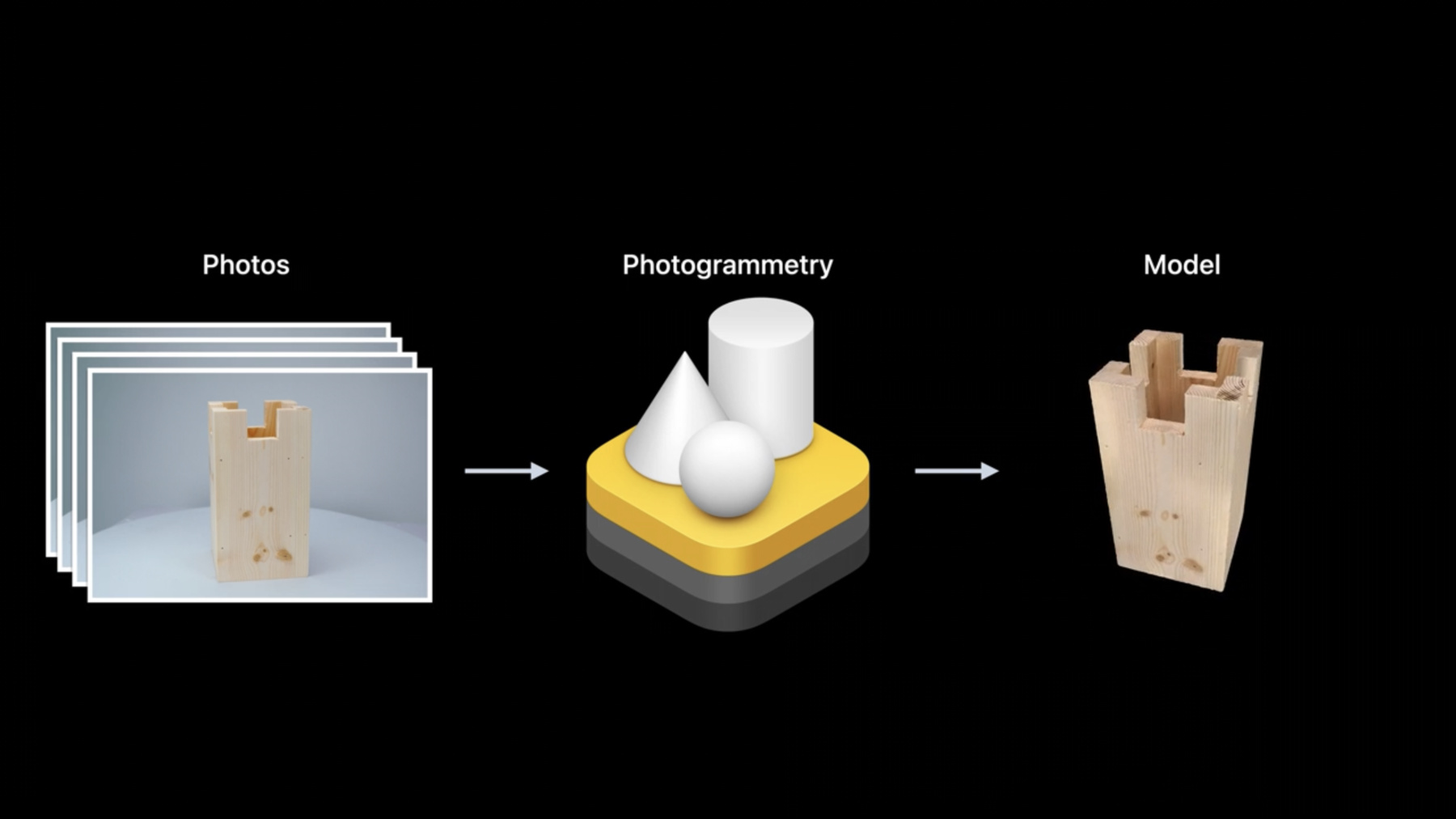

♪ Mellow instrumental hip-hop music ♪ ♪ Hao Tang: Hi, my name is Hao. I'm an engineer on the Object Capture team. Today, my colleague Risa and I will be showing you how to use the Object Capture API and RealityKit to create 3D models of real-world objects and bring them into AR. Let's get started. First, I'll give you a recap of Object Capture, which we launched as a RealityKit API on macOS last year. Then, I'll introduce you to a couple of camera enhancements in ARKit, which allow you to capture high-res photos of your object and can help you better integrate Object Capture into your AR applications. After that, I will go through the best practice guidelines for Object Capture so you can continue to get the most out of this technology. In the last section, Risa will take you through an end-to-end workflow with Object Capture in RealityKit and demonstrate how you can bring real-world objects into an AR experience. Let's start with a quick recap of Object Capture. Object Capture is a computer vision technology that you can leverage to easily turn images of real-world objects into detailed 3D models. You begin by taking photos of your object from various angles with an iPhone, iPad, or DSLR. Then, you copy those photos to a Mac that supports Object Capture. Using the Photogrammetry API, RealityKit can transform your photos into a 3D model in just a few minutes. The output model includes both a geometric mesh and various material maps, including textures, that are automatically applied to your model. For more details on the Object Capture API, I would highly recommend that you watch last year's WWDC session on Object Capture. Many developers have created amazing 3D capture apps using Object Capture: Unity, Cinema4D, Qlone, PolyCam, PhotoCatch, just to name a few. We have also seen beautiful models created using this API.
Here are a few models that Ethan Saadia created using the power of Object Capture within the PhotoCatch app. And our friend Mikko Haapoja from Shopify also generated a bunch of great-looking 3D models using this API. The detailed quality of the output 3D models you get with Object Capture is highly beneficial in e-commerce. Here's the GOAT app, for example, which lets you virtually try on a variety of shoes. All of these shoe models have been created with the Object Capture API, which is designed to capture the finest level of detail. This can go a long way in helping you make a purchase decision, or even check that an object fits accurately in your space. For example, the Plant Story app lets you preview real-looking 3D models of various plants in your space, all of which have been created with Object Capture. This can help you get a sense of how much space you may need for a plant, or simply see it in your space in realistic detail. Speaking of realism, were you able to spot the real plant in this video? Yes, it's the one in the white planter in the left-most corner of the table. We are thrilled to see such stunning and widespread use of the Object Capture API since its launch in 2021. Now, let's talk about some camera enhancements in ARKit, which will greatly improve the quality of reconstruction with Object Capture. A great Object Capture experience starts with taking good photos of objects from all sides. To this end, you can use any high-resolution camera, like the iPhone or iPad, or even a DSLR or mirrorless camera. If you use the Camera app on your iPhone or iPad, you can take high-quality photos with depth and gravity information, which lets the Object Capture API automatically recover the real-world scale and orientation of the object.
In addition to that, if you use an iPhone or iPad, you can take advantage of ARKit's tracking capabilities to overlay a 3D guidance UI on top of the object to get good coverage of it from all sides. Another important thing to note is that the higher the image resolution from your capture, the better the quality of the 3D model that Object Capture can produce. To that end, with this year's ARKit release, we are introducing a brand-new high-resolution background photos API. This API lets you capture photos at native camera resolution while you are still running an ARSession. It allows you to use your 3D UI overlays on top of the object while taking full advantage of the camera sensor on device. On an iPhone 13, that means the full 12-megapixel native resolution of the Wide camera. This API is nonintrusive. It does not interrupt the continuous video stream of the current ARSession, so your app will continue to provide a smooth AR experience for your users. In addition, ARKit makes EXIF metadata available in the photos, which allows your app to read useful information about white balance, exposure, and other settings that can be valuable for post-processing. ARKit makes it extremely easy to use this new API in your app. You can simply query a video format that supports high-resolution frame capturing on ARWorldTrackingConfiguration and, if successful, set the new video format and run the ARSession. When it comes to capturing a high-res photo, simply call ARSession's new captureHighResolutionFrame function, which asynchronously returns a high-res photo via a completion handler. It is that simple. We have also recognized that there are use cases where you may prefer manual control over camera settings such as focus, exposure, or white balance. So we are providing you with a convenient way to access the underlying AVCaptureDevice directly and change its properties for fine-grained camera control.
As shown in this code example, simply access configurableCaptureDeviceForPrimaryCamera on ARWorldTrackingConfiguration to get the underlying AVCaptureDevice. For more details on these enhancements, I highly recommend checking out the "Discover ARKit 6" session from this year's WWDC. Now, let's go through some best practice guidelines for Object Capture. First things first: we need to choose an object with the right characteristics for Object Capture. A good object has adequate texture on its surface. If some regions of the object are textureless or transparent, the details in those regions may not be reconstructed well. A good object should also be free of glare and reflections. If the object does not have a matte surface, you can try to reduce specular highlights on it by using diffuse lighting. If you would like to flip the object over to capture its bottom, please ensure that your object stays rigid. In other words, it should not change its shape when flipped. And lastly, a good object can contain fine structure to some degree, but you will need to use a high-resolution camera and take close-up photos to recover the fine detail of the object. The next important thing is setting up an ideal capture environment. You will want to make sure that your capture environment has good, even, and diffuse lighting. It is important to ensure a stable background and have sufficient space around the object. If your room is dark, you can make use of a well-lit turntable. Next, we'll look at some guidelines for capturing good photos of your object, which, in turn, will help ensure that you get a good-quality 3D model from Object Capture. As an example, I'll show you how my colleague Maunesh used his iPhone to capture images of a beautiful pirate ship that was created by our beloved ARKit engineer, Christian Lipski. Maunesh begins by placing the pirate ship in the middle of a clean table. This makes the ship clearly stand out in the photos.
He holds his iPhone steadily with two hands. As he circles slowly around the ship, he captures photos at various heights. He makes sure that the ship appears large in the center of the camera's field of view so that he can capture the maximum amount of detail. He also makes sure that he always maintains a high degree of overlap between any two adjacent photos. After he takes a good number of photos (about 80 in this case), he flips the ship on its side so that he can also reconstruct its bottom. He continues to capture about 20 more photos of the ship in a flipped orientation. One thing to note is that he is holding the iPhone in landscape mode. This is because he is capturing a long object, and in this case, landscape mode helps him capture the maximum amount of detail of the object. However, he would need to use the iPhone in portrait mode if he were capturing a tall object instead.
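
The circling pattern described above (shots at several heights around the object, with high overlap between neighbors) can be sketched as a simple plan of camera positions. This is a hypothetical helper, not part of ARKit or Object Capture; `CapturePose`, `capturePlan(radius:ringHeights:photosPerRing:)`, the 0.6 m radius, the three ring heights, and the 27 shots per ring are all illustrative assumptions, chosen so the upright pass lands near the roughly 80 photos mentioned above.

```swift
import Foundation

/// One planned camera position on a horizontal ring around the object.
/// Hypothetical helper type, not part of ARKit or RealityKit.
struct CapturePose {
    /// Camera position in meters, with the object at the origin and +y up.
    var position: SIMD3<Double>
    /// Horizontal angle around the object, in degrees.
    var azimuthDegrees: Double
}

/// Spaces `photosPerRing` shots evenly around each ring. A smaller angular
/// step between neighbors gives more overlap between adjacent photos.
func capturePlan(radius: Double, ringHeights: [Double], photosPerRing: Int) -> [CapturePose] {
    precondition(photosPerRing > 0, "need at least one photo per ring")
    let step = 360.0 / Double(photosPerRing)
    var poses: [CapturePose] = []
    for height in ringHeights {
        for index in 0..<photosPerRing {
            let degrees = Double(index) * step
            let radians = degrees * Double.pi / 180
            poses.append(CapturePose(
                position: SIMD3<Double>(radius * cos(radians), height, radius * sin(radians)),
                azimuthDegrees: degrees))
        }
    }
    return poses
}

// Three heights with 27 shots each: 81 upright photos, about 13.3 degrees apart.
let uprightPlan = capturePlan(radius: 0.6, ringHeights: [0.1, 0.3, 0.5], photosPerRing: 27)
```

Raising `photosPerRing` shrinks the angular step and increases overlap at the cost of more images to process; the flipped pass would be a second, smaller plan.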

That's it! The final step in the process is to copy those photos onto a Mac and process them using the Object Capture API. You can choose from four different detail levels, which are optimized for different use cases. The reduced and medium detail levels are optimized for use in web-based and mobile experiences, such as viewing 3D content in AR Quick Look. The reconstructed models for those detail levels have fewer triangles and material channels, so they consume less memory. The full and raw detail levels are intended for high-end interactive use cases, such as computer games or post-production workflows. These models contain the highest geometric detail and give you the flexibility to choose between baked and unbaked materials, but they require more memory to reconstruct. It is important to select the right detail level for your use case. For our pirate ship, we chose the medium detail level, which took only a few minutes to process on an M1 Mac. The output 3D model looked so stunning that we actually put together an animated clip of the pirate ship sailing on the high seas. And that's the power of Object Capture for you! Ahoy! Now I'll hand it off to Risa, who will walk you through an end-to-end workflow with Object Capture in RealityKit. Risa Yoneyama: Thanks, Hao. Now that we have gone over the Object Capture API, I am excited to go over an end-to-end developer workflow to bring your real-life objects into AR using RealityKit. We'll walk through each step in detail with an example workflow, so let's dive straight into a demo. My colleague Zach is an occasional woodworker and recently built six oversized wooden chess pieces, one for each unique piece. Looking at these chess pieces, I'm inspired to create an interactive AR chess game. Previously, you'd need a 3D modeler and a material specialist to create high-quality 3D models of real-world objects.
Now, with the Object Capture API, we can simply capture these chess pieces directly and bring them into augmented reality. Let's start off by capturing the rook. My colleague Bryan will be using this professional setup, keeping in mind the best practices we covered in the previous section. In this case, Bryan is placing the rook on a turntable with some professional lighting to help avoid harsh shadows in the final output. You can also use the iPhone camera with a turntable, which provides automatic scale estimation and gravity vector information in your output USDZ. Please refer to the Object Capture session from 2021 for more details on this. Of course, if you do not have an elaborate setup like Bryan's, you can also simply hold your iOS device and walk around your object to capture the images. Now that we have all the photos of our rook piece, I'm going to transfer them to the Mac. I'll process these photos using the PhotogrammetrySession API that was introduced in 2021. Per the best practice guidelines, I'll use the reduced detail level to reconstruct, as we want to make sure our AR app performs well. The API outputs the final model as a USDZ file. Here is the final output of the rook chess piece I just reconstructed. To save us some time, I've already captured the other five pieces. You may be wondering how we will create a chess game with only one color scheme for the chess pieces. Let's duplicate our six unique pieces and drag them into Reality Converter. I have inverted the colors in the original texture and replaced the texture of the duplicated set with this new inverted one. This way, we have a lighter version and a darker version of the chess pieces, one for each player. I'll export the models with the compressed textures option turned on in the Export menu. This will help decrease the memory footprint of the textures.
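
The session doesn't say which tool was used to invert the texture colors before swapping them in with Reality Converter. As a minimal sketch of the operation itself, assuming the texture has already been decoded into tightly packed 8-bit RGBA pixels (decoding and re-encoding the image file are left out), each color channel `c` becomes `255 - c` while alpha is kept. `invertRGB(_:)` is a hypothetical helper name.

```swift
/// Inverts the RGB channels of tightly packed 8-bit RGBA pixels and keeps alpha.
func invertRGB(_ rgba: [UInt8]) -> [UInt8] {
    precondition(rgba.count % 4 == 0, "expected whole RGBA pixels")
    var inverted = rgba
    for base in stride(from: 0, to: inverted.count, by: 4) {
        inverted[base] = 255 - inverted[base]         // red
        inverted[base + 1] = 255 - inverted[base + 1] // green
        inverted[base + 2] = 255 - inverted[base + 2] // blue
        // inverted[base + 3] is alpha and stays unchanged.
    }
    return inverted
}

// Two pixels: a light wood tone (opaque) and a dark one (half transparent).
let lightPixels: [UInt8] = [200, 180, 150, 255, 10, 20, 30, 128]
let darkPixels = invertRGB(lightPixels) // [55, 75, 105, 255, 245, 235, 225, 128]
```

Because inversion is its own inverse, applying it twice returns the original texture, so the light and dark sets stay exact color complements.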

Now that we have our full set of chess pieces, we are ready to bring the models into our Xcode project. I've created a chessboard using RealityKit by scaling down primitive cubes on the y-axis and alternating the colors between black and white. Here are all the chess pieces I reconstructed, laid out on the chessboard. It's already exciting to see our real-life objects in our application, but let's start adding some features to make it an actual interactive game. Throughout this part of the demo, I would like to showcase several existing technologies to give examples of how you might combine them to accomplish your desired output. As we'll be going over some practical use cases of advanced topics in RealityKit, I recommend checking out the RealityKit sessions from 2021 if you are not already familiar with the APIs. I want to start by adding a startup animation when we first launch the application. I am imagining an animation where the checker tiles slowly fall into place from slightly above their final positions, while the chess pieces also translate in together. To replicate this effect in code, all it takes is two steps. The first step is to translate both our entities up along the y-axis, while also uniformly scaling down the chess pieces. The second and final step is to animate both entities back to their original transforms. The code for this is quite simple. I'll start by iterating through the checker tile entities. For each entity, I'll save the current transform of the checker tile, as this will be the final position it lands on. Then, I'll move each square up 10 cm on the y-axis. We can now take advantage of the move function to animate it back to its original transform. I also happen to know that the border USDZ that outlines the checkerboard has a built-in animation. We can use the playAnimation API to start that animation simultaneously.
I've added the exact same animation to the chess pieces, but also modified the scale as they translate. And here we have it: a simple startup animation with just a few lines of code. However, we won't actually be able to play chess without the ability to move the chess pieces. Let's work on that next. Before we can start moving the chess pieces, we'll need to be able to select one. I've already added a UITapGestureRecognizer to my ARView. When the user taps a specific location, we define a ray that starts from the camera origin and goes through that 2D point. We can then perform a raycast with that ray into the 3D scene to see if we hit any entities. I've specified my chess piece collision group as a mask, as I only want to be able to select the chess pieces in my scene. Be mindful that the raycast function ignores all entities that do not have a CollisionComponent. If we do find a chess piece, we can finally select it. Now that we know which piece is selected, I want to add an effect that makes the piece look like it is glowing. We can leverage custom materials to achieve this; more specifically, surface shaders. Surface shaders are Metal functions that calculate or specify material parameters, and RealityKit's fragment shader calls them once per pixel. We can write a surface shader in Metal that produces this fire-like effect, then apply a custom material with that surface shader to our rectangular prism so the shader determines how the entity looks. Let's write some code to achieve our desired effect. I've already added a noise texture to the project to use in this surface shader. We'll sample the texture twice, once for the overall shape of the effect and once for detail. We then take the RGB value and remap it to look just the way we want. Then, with the processed value we just extracted, we'll calculate the opacity of the sample point by comparing its y-position with the image value.
To give the effect some movement, we'll move through the y-axis of the texture as a function of time. We'll also use the facing angle of each sample point in conjunction with the viewing direction of the camera to fade the effect at the sides. This softens the edges and hides the regular shape of the underlying model. Finally, we'll set the color and opacity we just calculated using the surface parameter functions. And here are the chess pieces with the selection shader applied to them. They really do look like they are glowing from the inside. Now, if we combine that with three separate translation animations, we get something that looks like this. With the functionality to move chess pieces implemented, we'll also be able to capture the opponent's pieces. Just like surface shaders, geometry modifiers can be implemented using custom materials. They are a very powerful tool, as you can change vertex data such as position, normals, texture coordinates, and more. Each of these Metal functions is called once per vertex by RealityKit's vertex shader. These modifications are purely transient and do not affect the vertex information of the actual entity. I'm thinking we could add a fun geometry modifier to squash the pieces when they are captured. I have a property on my chess piece called capturedProgress that represents the progress of the capturing animation from 0 to 1. Since capturing is a user-initiated action, we need some way to tell the geometry modifier when to start its animation. The good news is that you can do this by setting the custom property on a CustomMaterial. This allows data to be shared between the CPU and the GPU. We will specifically use the custom value property here and pass the animation progress to the geometry modifier. To read the animation progress on the Metal side, we can use the custom parameter on uniforms.
Since I want to scale the object vertically, as if it is being squashed by another piece, we will set the scale axis to the y-direction. To add some complexity to the animation, we will also change the geometry along the x-axis to create a wave effect. The offset of each vertex can be set using the set_model_position_offset function. Here is the final product of our geometry modifier. You can see that the piece scales up a bit before collapsing down, while the wave distorts it along the x-axis. As a chess novice myself, I thought it might be helpful to add a feature that indicates where the selected piece can move, to help me learn the game. Since the checker tiles are each individual entities with their own ModelComponent, I can apply a pulsing effect to the potential moves using a surface shader to distinguish them from the other tiles. Next, I'll add a post-processing effect called "bloom" to accentuate the effect even more. Again, we're using the custom parameter, this time in the checker's surface shader. In this case, we are passing a Boolean from the CPU side to our Metal surface shader. If this checker is a possible move, I want to add a pulsing effect by changing the color. We'll specifically add the pulse to the emissive color here. Lastly, I'll add the bloom effect to the entire view. Bloom is a post-processing effect that produces feathers of light from the borders of bright areas. We can accomplish this effect by taking advantage of the renderCallbacks property on ARView. We will write the bloom effect using the built-in Metal Performance Shaders functions. Next, we simply assign our bloom function to the renderCallbacks.postProcess closure. When we pulse our checkers, we are pulsing to a white color, which is now further emphasized by the bloom effect. With the surface shader and bloom effect together, we can see exactly where we can move our pieces.
Finally, let's combine everything we have to see our real-life chess pieces come to life in our AR app. We can see how all the features we added look in our environment. For your convenience, we have linked the Capture Chess sample project in the session resources. Please download it and try it out for yourself to see it in your environment. And it's that simple: from capture to reconstruction of the oversized chess pieces, then into our augmented reality app. We've covered a lot in this session today, so let's summarize some of the key points. We started off by recapping the Object Capture API that we announced in 2021. We then went over a new API in ARKit that enables capturing photos on demand at native camera resolution during an active ARSession. To help you get the most out of the Object Capture technology, we listed types of objects that are suited for reconstruction, ideal environments to get high-quality images, and the recommended flow to follow while capturing your object. For the latter part of this session, we walked through an example end-to-end developer workflow. We captured photos of the oversized chess pieces and used the images as input to the PhotogrammetrySession API to create 3D models of them. Then, we imported the models into Reality Converter to replace some textures. And finally, we slowly built up our chess game to see our chess pieces in action in AR. And that's it for our session today. Thank you so much for watching. Ahoy! ♪
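
The glow selection shader is described above only in prose. To make those steps concrete, here is a CPU-side Swift port of the same idea that can be checked outside a shader. It is a sketch under assumptions, not the sample's Metal code: `sampleNoise(u:v:)` is a procedural stand-in for the project's noise texture, and the 0.7/0.3 sample weights, the scroll speed, and the smoothstep width are illustrative values.

```swift
import Foundation

/// Hypothetical stand-in for sampling a tiling noise texture; returns 0...1.
/// `v` wraps like a repeating sampler so the pattern can scroll indefinitely.
func sampleNoise(u: Float, v: Float) -> Float {
    let wrappedV = v - v.rounded(.down)
    return 0.5 + 0.5 * sin(2 * Float.pi * (u + 2 * wrappedV))
}

/// Classic smoothstep: 0 below `edge0`, 1 above `edge1`, smooth in between.
func smoothstep(_ edge0: Float, _ edge1: Float, _ x: Float) -> Float {
    let t = min(max((x - edge0) / (edge1 - edge0), 0), 1)
    return t * t * (3 - 2 * t)
}

/// Glow opacity for one surface point.
/// - height: the point's height in the prism, 0 at the base and 1 at the top.
/// - normal, toCamera: unit vectors (surface normal and direction to the camera).
func glowOpacity(u: Float, height: Float, time: Float,
                 normal: SIMD3<Float>, toCamera: SIMD3<Float>,
                 scrollSpeed: Float = 0.5) -> Float {
    // Move through the texture's y-axis over time so the flames rise.
    let v = height - time * scrollSpeed
    // Two samples: a coarse one for the overall shape, a finer one for detail.
    let shape = sampleNoise(u: u, v: v)
    let detail = sampleNoise(u: u * 4, v: v * 4)
    // Remap: weight and combine the samples into a single value in 0...1.
    let value = 0.7 * shape + 0.3 * detail
    // Opaque where the noise value exceeds the point's height, soft at the boundary.
    let flame = smoothstep(height - 0.1, height + 0.1, value)
    // Fade where the surface turns away from the camera to soften the sides.
    let facing = abs((normal * toCamera).sum())
    return flame * facing
}
```

In the real surface shader, the resulting color and opacity would be written with the surface parameter functions instead of being returned.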


6:20 - HighRes capturing

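```swift
import ARKit

// `session` is the app's ARSession (for example, arView.session in a RealityKit app).
let session = ARSession()
let config = ARWorldTrackingConfiguration()

if let hiResCaptureVideoFormat = ARWorldTrackingConfiguration.recommendedVideoFormatForHighResolutionFrameCapturing {
    // Assign the video format that supports hi-res capturing.
    config.videoFormat = hiResCaptureVideoFormat
}
// Run the session.
session.run(config)

// Later, while the session is running, request a frame at native camera resolution.
session.captureHighResolutionFrame { (frame, error) in
    if let frame = frame {
        // save frame.capturedImage
        // …
    }
}
```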

17:00 - Chessboard animation

```swift
// Board Animation
// `checkers`, `border`, and `BoardGame.startupAnimationDuration` are defined elsewhere in the sample.
class Chessboard: Entity {
    func playAnimation() {
        checkers.forEach { entity in
            let currentTransform = entity.transform
            // Move checker square 10cm up
            entity.transform.translation += SIMD3<Float>(0, 0.1, 0)
            // Animate back down to the saved transform.
            entity.move(to: currentTransform,
                        relativeTo: entity.parent,
                        duration: BoardGame.startupAnimationDuration)
        }
        // Play built-in animation for board border
        border.availableAnimations.forEach { border.playAnimation($0) }
    }
}
```
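
The two steps behind this startup animation (raise and shrink, then return to the saved transform) can be checked with a small, self-contained model of the math. This sketch is not RealityKit code: `SimpleTransform` is a hypothetical stand-in for RealityKit's `Transform` that drops rotation, and it interpolates linearly for simplicity, whereas `move(to:relativeTo:duration:)` applies its own timing curve.

```swift
/// Minimal stand-in for RealityKit's Transform: translation in meters plus a
/// uniform scale (rotation omitted because the startup animation doesn't change it).
struct SimpleTransform: Equatable {
    var translation: SIMD3<Float>
    var scale: Float
}

/// Step 1: the pose an entity starts from. It is raised `lift` meters on the
/// y-axis and, for chess pieces, uniformly scaled down by `startScale`.
func startupPose(landingAt target: SimpleTransform, lift: Float = 0.1, startScale: Float = 1) -> SimpleTransform {
    SimpleTransform(translation: target.translation + SIMD3<Float>(0, lift, 0),
                    scale: target.scale * startScale)
}

/// Step 2: interpolates linearly from the start pose back to the saved transform.
/// `t` runs from 0 (animation start) to 1 (animation end).
func interpolate(from start: SimpleTransform, to end: SimpleTransform, t: Float) -> SimpleTransform {
    let clamped = min(max(t, 0), 1)
    return SimpleTransform(
        translation: start.translation + (end.translation - start.translation) * clamped,
        scale: start.scale + (end.scale - start.scale) * clamped)
}

// A rook that lands at (0.05, 0, 0.05) starts 10 cm higher at half size.
let landed = SimpleTransform(translation: SIMD3<Float>(0.05, 0, 0.05), scale: 1)
let rookStart = startupPose(landingAt: landed, startScale: 0.5)
```

Checker tiles use the default `startScale` of 1, so only their height changes, while the pieces also grow back to full size.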

18:00 - select chess piece

```swift
// Select chess piece
class ChessViewport: ARView {
    @objc func handleTap(sender: UITapGestureRecognizer) {
        // Build a ray from the camera through the tapped 2D point.
        guard let ray = ray(through: sender.location(in: self)) else { return }
        // No piece is selected yet, we want to select one.
        // The .piece mask limits hits to chess pieces; entities without a
        // CollisionComponent are ignored by the raycast.
        guard let raycastResult = scene.raycast(origin: ray.origin,
                                                direction: ray.direction,
                                                length: 5,
                                                query: .nearest,
                                                mask: .piece).first,
              let piece = raycastResult.entity.parentChessPiece else { return }
        boardGame.select(piece)
        gameManager.selectedPiece = piece
    }
}
```
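
The `.piece` mask in this snippet means only entities in the chess piece collision group can be hit, and entities without a CollisionComponent are skipped entirely. The pure-Swift model below illustrates that filtering for a `.nearest` query. It is a sketch, not RealityKit API: `CollisionMask`, `RayCandidate`, and `nearestHit(in:mask:length:)` are hypothetical names, and RealityKit's exact group and mask matching rules may differ in detail.

```swift
/// Stand-in for a collision-group bitmask such as RealityKit's CollisionGroup.
struct CollisionMask: OptionSet {
    let rawValue: UInt32
    static let piece = CollisionMask(rawValue: 1 << 0)
    static let board = CollisionMask(rawValue: 1 << 1)
}

/// A hypothetical entity crossed by the tap ray.
struct RayCandidate {
    let name: String
    /// Distance from the ray origin, in meters.
    let distance: Float
    /// nil models an entity without a CollisionComponent.
    let group: CollisionMask?
}

/// Mimics a `.nearest` query: drops entities with no collision shape, entities
/// outside the mask, and hits beyond `length`, then returns the closest one.
func nearestHit(in candidates: [RayCandidate], mask: CollisionMask, length: Float) -> RayCandidate? {
    candidates
        .filter { candidate in
            guard let group = candidate.group else { return false }
            return !group.isDisjoint(with: mask) && candidate.distance <= length
        }
        .min { $0.distance < $1.distance }
}

let alongRay = [
    RayCandidate(name: "decoration", distance: 0.2, group: nil),
    RayCandidate(name: "rook", distance: 0.3, group: .piece),
    RayCandidate(name: "checker", distance: 0.5, group: .board),
    RayCandidate(name: "distant pawn", distance: 6, group: .piece),
]
let tapped = nearestHit(in: alongRay, mask: .piece, length: 5)
```

Here the closest entity along the ray has no collision shape, so it is ignored, and the rook is selected even though a checker tile lies behind it on the same ray.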

21:16 - capture geometry modifier

```swift
// Capture Geometry Modifier
class ChessPiece: Entity, HasChessPiece {
    // Capture animation progress (0 to 1), stored in the first component of the
    // CustomMaterial's custom value so the geometry modifier can read it.
    var capturedProgress: Float {
        get { (pieceEntity?.model?.materials.first as? CustomMaterial)?.custom.value[0] ?? 0 }
        set {
            pieceEntity?.modifyMaterials { material in
                guard var customMaterial = material as? CustomMaterial else { return material }
                customMaterial.custom.value = SIMD4<Float>(newValue, 0, 0, 0)
                return customMaterial
            }
        }
    }
}
```
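
This snippet only covers the CPU side, which stores `capturedProgress` in the material's custom value; the Metal geometry modifier that reads it is not shown. As a rough, hypothetical sketch of the per-vertex math such a modifier could run, here is a Swift port that can be tested on the CPU. The curve shape, `overshoot`, and wave parameters are assumptions chosen to reproduce the described behavior (a brief grow, then a collapse, plus a wave along x). It also assumes the model's origin sits at its base. In the real shader, the progress would come from the custom parameter on uniforms, and the result would be passed to `set_model_position_offset`.

```swift
import Foundation

/// Offset to add to one vertex during the capture squash animation.
/// Assumes the model's origin sits at its base, so y grows upward from 0.
func squashOffset(vertex: SIMD3<Float>, progress: Float,
                  overshoot: Float = 0.3,
                  waveAmplitude: Float = 0.01,
                  waveFrequency: Float = 40) -> SIMD3<Float> {
    let t = min(max(progress, 0), 1)
    // Vertical scale: 1 at t = 0, briefly above 1, then down to 0 at t = 1.
    let scaleY = 1 - t * t + overshoot * sin(Float.pi * t)
    // Sideways wave along x that fades in and out with the animation.
    let wave = waveAmplitude * sin(Float.pi * t) * sin(waveFrequency * vertex.y)
    // Return an offset, not a new position, matching how the modifier applies it.
    return SIMD3<Float>(wave, vertex.y * (scaleY - 1), 0)
}
```

Because the function returns an offset rather than a new position, a progress of 0 leaves every vertex where it is, so the modifier can stay attached to the material at all times.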

23:00 - highlight potential moves using bloom

```metal
// Checker animation to show potential moves
void checkerSurface(realitykit::surface_parameters params, float amplitude, bool isBlack = false) {
    // ...
    // custom_parameter() carries the Boolean set on the CPU side.
    bool isPossibleMove = params.uniforms().custom_parameter()[0];
    if (isPossibleMove) {
        // Pulse between 0 and 2 * amplitude over time.
        const float a = amplitude * sin(params.uniforms().time() * M_PI_F) + amplitude;
        params.surface().set_emissive_color(half3(a));
        if (isBlack) {
            params.surface().set_base_color(half3(a));
        }
    }
}
```
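
The pulse in `checkerSurface` computes `a = amplitude * sin(time * M_PI_F) + amplitude`, so the emissive value swings between 0 and `2 * amplitude`, and because `sin(πt)` repeats every 2 units of time, one full pulse takes 2 seconds (assuming `time()` is in seconds). A small Swift port makes that easy to check; `pulse(amplitude:time:)` is a hypothetical helper, not part of RealityKit.

```swift
import Foundation

/// Swift port of the checker pulse: a = amplitude * sin(time * pi) + amplitude.
func pulse(amplitude: Float, time: Float) -> Float {
    amplitude * sin(time * Float.pi) + amplitude
}

// Sample one 2-second cycle every half second.
let cycle = stride(from: Float(0), through: 2, by: 0.5).map { pulse(amplitude: 0.5, time: $0) }
// cycle is approximately [0.5, 1.0, 0.5, 0.0, 0.5]
```

With `amplitude` set to 0.5, as in this sketch, the emissive color peaks at `half3(1.0)`, which is white, so the bloom pass has a bright area to spread.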

23:20 - Import MetalPerformanceShaders

```swift
import MetalPerformanceShaders

class ChessViewport: ARView {
    init(gameManager: GameManager) {
        /// ...
        renderCallbacks.postProcess = postEffectBloom
    }

    func postEffectBloom(context: ARView.PostProcessContext) {
        // Bright pass: keep only pixels brighter than 0.85, zero out the rest.
        let brightness = MPSImageThresholdToZero(device: context.device,
                                                 thresholdValue: 0.85,
                                                 linearGrayColorTransform: nil)
        brightness.encode(commandBuffer: context.commandBuffer,
                          sourceTexture: context.sourceColorTexture,
                          destinationTexture: bloomTexture!)
        /// ...
    }
}
```
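
This snippet shows only the bright pass of the bloom, and the remaining steps are elided. A common way to finish a bloom is to blur the bright pass and add it back on top of the original image. The sketch below demonstrates that general technique on a single row of linear grayscale values so the result can be inspected by hand. It is not the sample's implementation: the 3-tap blur and the function names are illustrative assumptions, and a GPU version would use image filters instead.

```swift
/// Bright pass, like a threshold-to-zero filter: values above `threshold`
/// are kept, everything else becomes 0.
func thresholdToZero(_ row: [Float], threshold: Float) -> [Float] {
    row.map { $0 > threshold ? $0 : 0 }
}

/// 3-tap blur (weights 1/4, 1/2, 1/4) that clamps at the edges.
func blur(_ row: [Float]) -> [Float] {
    guard !row.isEmpty else { return [] }
    let last = row.count - 1
    return row.indices.map { (i: Int) -> Float in
        0.25 * row[max(i - 1, 0)] + 0.5 * row[i] + 0.25 * row[min(i + 1, last)]
    }
}

/// Bloom: isolate bright pixels, spread them out, and add them back on top.
func bloom(_ row: [Float], threshold: Float = 0.85) -> [Float] {
    let glow = blur(thresholdToZero(row, threshold: threshold))
    return zip(row, glow).map { pair in pair.0 + pair.1 }
}

let glowingRow = bloom([0.2, 0.2, 1.0, 0.2, 0.2])
// glowingRow is approximately [0.2, 0.45, 1.5, 0.45, 0.2]
```

The bright pixel leaks light into its neighbors, which is the "feathering" around bright areas described in the session. A row with nothing above the threshold passes through unchanged.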
