
Create 3D models with Object Capture

Object Capture provides a quick and easy way to create lifelike 3D models of real-world objects using just a few images. Learn how you can get started and bring your assets to life with Photogrammetry for macOS. And discover best practices with object selection and image capture to help you achieve the highest-quality results.

Resources

- Creating a photogrammetry command-line app

- Capturing photographs for RealityKit Object Capture

- Creating 3D objects from photographs


- PhotogrammetrySample

- PhotogrammetrySession

- Explore the RealityKit Developer Forums


♪ Bass music playing ♪

Michael Patrick Johnson: Hi! My name is Michael Patrick Johnson, and I am an engineer on the Object Capture team.

Today, my colleague Dave McKinnon and I will be showing you how to turn real-world objects into 3D models using our new photogrammetry API on macOS.

You may already be familiar with creating augmented reality apps using our ARKit and RealityKit frameworks.

You may have also used Reality Composer and Reality Converter to produce 3D models for AR.

And now, with the Object Capture API, you can easily turn images of real-world objects into detailed 3D models.

Let's say you have some freshly baked pizza in front of you on the kitchen table.

Looks delicious, right? Suppose we want to capture the pizza in the foreground as a 3D model.

Normally, you'd need to hire a professional artist for many hours to model the shape and texture.

But, wait, it took you only minutes to bake in your own oven! With Object Capture, you start by taking photos of your object from every angle.

Next, you copy the images to a Mac which supports the new Object Capture API.

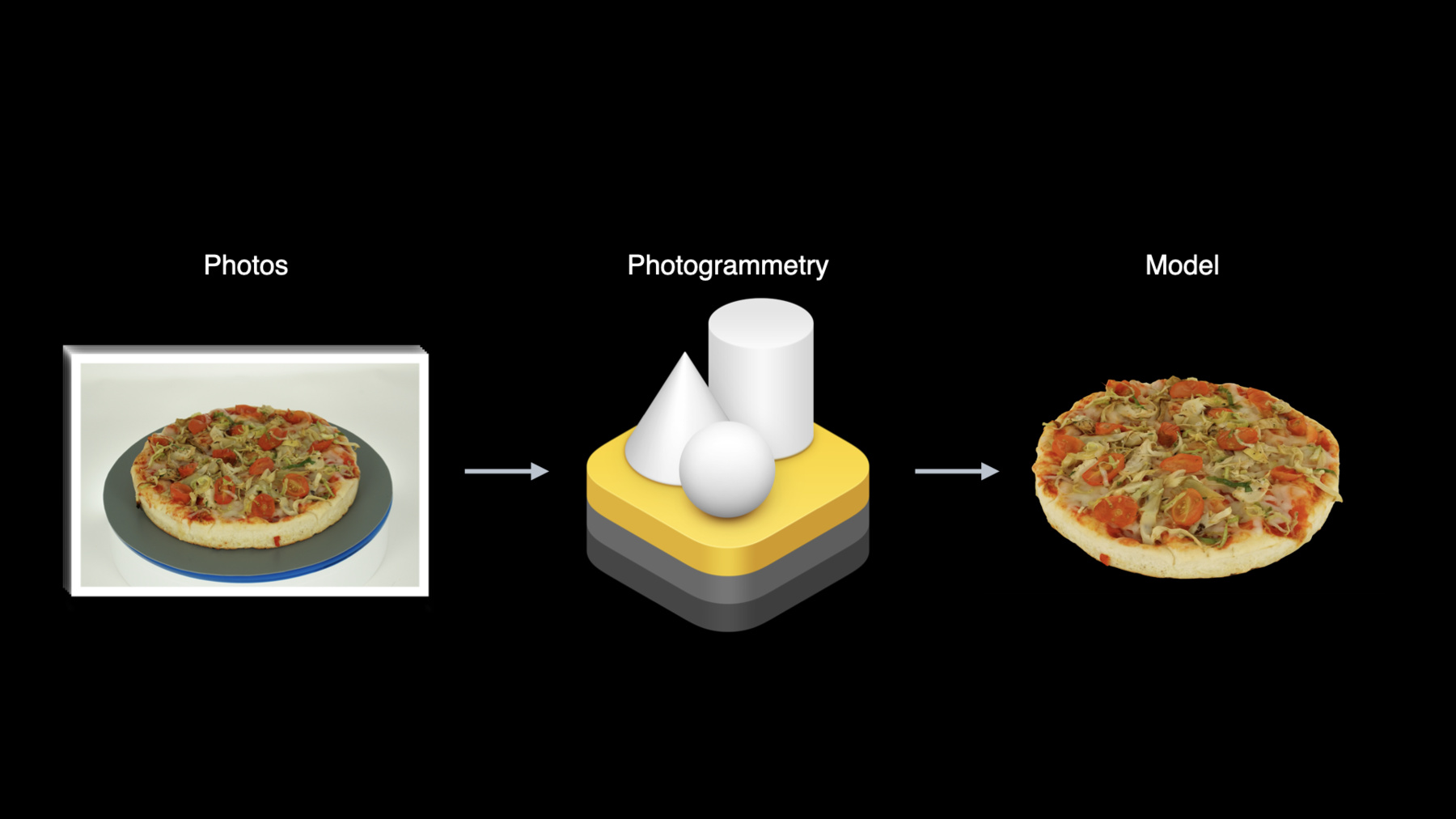

Using a computer vision technique called "photogrammetry", the stack of 2D images is turned into a 3D model in just minutes.

The output model includes both a geometric mesh as well as various material maps, and is ready to be dropped right into your app or viewed in AR Quick Look.

Now let's look at each of these steps in slightly more detail.

First, you capture photos of your object from all sides.

Images can be taken with your iPhone or iPad, a DSLR, or even a drone.

You just need to make sure you get clear photos from all angles around the object.

We will provide best practices for capture later in the session.

If you capture on iPhone or iPad, we can use stereo depth data from supported devices to allow the recovery of the actual object size, as well as the gravity vector so your model is automatically created right-side up.

Once you have captured a folder of images, you need to copy them to your Mac where you can use the Object Capture API to turn them into a 3D model in just minutes.

The API is supported on recent Intel-based Macs, but will run fastest on all the newest Apple silicon Macs since we can utilize the Apple Neural Engine to speed up our computer vision algorithms.

We also provide HelloPhotogrammetry, a sample command-line app to help you get started.

You can also use it directly on your folder of images to try building a model for yourself before writing any code.
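A command-line tool like HelloPhotogrammetry needs three things from its arguments: the input image folder, where to write the model, and which detail level to produce. The following is a minimal sketch in plain Swift of parsing those arguments. The argument order, the `CLIOptions` type, and `parseOptions(_:)` are hypothetical and are not the sample app's actual interface; the fallback to "medium" is a choice made only for this sketch.

```swift
/// Hypothetical options for a HelloPhotogrammetry-style tool.
struct CLIOptions {
    var inputFolder: String
    var outputFile: String
    var detail: String
}

/// Detail-level names mirroring the levels discussed in this session.
let knownDetailLevels: Set<String> = ["preview", "reduced", "medium", "full", "raw"]

/// Parses `[program, inputFolder, outputFile, detail?]`.
/// Returns nil if arguments are missing or the detail level is unknown.
/// This sketch falls back to "medium" when no detail level is given.
func parseOptions(_ arguments: [String]) -> CLIOptions? {
    guard arguments.count == 3 || arguments.count == 4 else { return nil }
    let detail = arguments.count == 4 ? arguments[3].lowercased() : "medium"
    guard knownDetailLevels.contains(detail) else { return nil }
    return CLIOptions(inputFolder: arguments[1], outputFile: arguments[2], detail: detail)
}
```

In a real tool you would pass `CommandLine.arguments` and print a usage message when `parseOptions` returns nil.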

Finally, you can preview the USDZ output models right on your Mac.

We can provide models at four detail levels optimized for your different use cases, which we'll discuss in more detail later.

Reduced, Medium, and Full details are ready to use right out of the box, like the pizza shown here.

Raw is intended for custom workflows.

By selecting USDZ output at the Medium detail level, you can view the new model in AR Quick Look right on your iPhone or iPad.

And that's all there is to getting lifelike objects that are optimized for AR! Oh wait, remember the pizzas from before? We have to come clean.

This image wasn't really a photo, but was actually created using Object Capture on several pizzas.

These models were then combined into this scene in a post-production tool and rendered using a ray tracer with the advanced material maps.

So you see, Object Capture can support a variety of target use cases, from AR apps on an iPhone or iPad to film-ready production assets.

In the remainder of this session, we'll show you how to get started using the Object Capture API and then offer our best practices to achieve the highest-quality results.

In the getting started section, we'll go into more details about the Object Capture API and introduce the essential code concepts for creating an app.

Next we will discuss best practices for image capture, object selection, and detail-level selection.

Let's begin by working through the essential steps in using the API on macOS.

In this section, you will learn the basic components of the Object Capture API and how to put them together.

Let's say we have this cool new sneaker we want to turn into a 3D model to view in AR.

Here we see a graphical diagram of the basic workflow we will explore in this section.

There are two main steps in the process: Setup, where we point to our set of images of an object; and then Process, where we request generation of the models we want to be constructed.

First, we will focus on the Setup block, which consists of two substeps: creating a session and then connecting up its associated output stream.

Once we have a valid session, we can use it to generate our models.

The first thing we need to do is to create a PhotogrammetrySession.

To create a session, we will assume you already have a folder of images of an object.

We have provided some sample image capture folders in the API documentation for you to get started quickly.

A PhotogrammetrySession is the primary top-level class in the API and is the main point of control.

A session can be thought of as a container for a fixed set of images to which photogrammetry algorithms will be applied to produce the resulting 3D model.

Here we have 123 HEIC images of the sneaker taken using an iPhone 12 Pro Max.

Currently there are several ways to specify the set of images to use.

The simplest is just a file URL to a directory of images.

The session will ingest these one by one and report on any problems encountered.

If there is embedded depth data in HEIC images, it will automatically be used to recover the actual scale of the object.
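Because the session reports problems with images only after ingesting them, a quick pre-flight check on the folder can catch an empty or mistyped path early. This is a minimal Foundation-only sketch; `countCandidateImages(in:extensions:)` is a hypothetical helper, and the list of file extensions is an assumption you should adjust to match your capture format.

```swift
import Foundation

/// Hypothetical pre-flight check: counts files in `folder` whose extension
/// looks like a photo. The extension list is an assumption for this sketch.
func countCandidateImages(in folder: URL,
                          extensions: Set<String> = ["heic", "jpg", "jpeg", "png"]) throws -> Int {
    // Throws if the folder doesn't exist or can't be read.
    let contents = try FileManager.default.contentsOfDirectory(at: folder,
                                                              includingPropertiesForKeys: nil)
    // Compare extensions case-insensitively, so IMG_0001.HEIC counts too.
    return contents.filter { extensions.contains($0.pathExtension.lowercased()) }.count
}
```

A tool might refuse to start, or print a warning, when the count is zero or far below the number of photos you expected to capture.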

Although we expect most people will prefer folder inputs, we also offer an interface for advanced workflows to provide a sequence of custom samples.

A PhotogrammetrySample includes the image plus other optional data such as a depth map, gravity vector, or custom segmentation mask.
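For the custom-sample path, each photo becomes a `PhotogrammetrySample`. This is a sketch that assumes your own pipeline has already decoded each image into a `CVPixelBuffer` and, optionally, produced a depth map, a gravity vector, and a mask; `CapturedFrame`, `makeSamples(from:)`, and `makeSampleSession(from:)` are hypothetical names. The code is wrapped in `#if canImport(RealityKit)` so the file still compiles where RealityKit is unavailable (the API requires macOS 12 or later).

```swift
#if canImport(RealityKit)
import CoreVideo
import Foundation
import RealityKit
import simd

/// Hypothetical container for the data your own pipeline produced per photo.
struct CapturedFrame {
    var image: CVPixelBuffer
    var depth: CVPixelBuffer?      // optional depth map
    var gravity: simd_double3?     // optional gravity vector
    var mask: CVPixelBuffer?       // optional segmentation mask
}

/// Converts frames into samples with sequential ids.
func makeSamples(from frames: [CapturedFrame]) -> [PhotogrammetrySample] {
    frames.enumerated().map { index, frame in
        var sample = PhotogrammetrySample(id: index, image: frame.image)
        sample.depthDataMap = frame.depth
        sample.gravity = frame.gravity
        sample.objectMask = frame.mask
        return sample
    }
}

/// Creates a session from the sample sequence instead of a folder URL.
func makeSampleSession(from frames: [CapturedFrame]) throws -> PhotogrammetrySession {
    try PhotogrammetrySession(input: makeSamples(from: frames),
                              configuration: PhotogrammetrySession.Configuration())
}
#endif
```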

Once you have created a session from an input source, you will make requests on it for model reconstruction.

The session will output the resulting models as well as status messages on its output message stream.

Now that we've seen what a session is, let's see how to create one using the API.

Here we see the code to perform the initial setup of a session from a folder of images.

The PhotogrammetrySession lives within the RealityKit framework.

First, we specify the input folder as a file URL.

Here, we assume that we already have a folder on the local disk containing the images of our sneaker.

Finally, we create the session by passing the URL as our input source.

The initializer will throw an error if the path doesn't exist or can't be read.

You can optionally provide advanced configuration parameters, but here we'll just use the defaults.
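If you do want to change the defaults, you set properties on a `PhotogrammetrySession.Configuration` before passing it to the initializer. This sketch uses two options from RealityKit's configuration type, `sampleOrdering` and `featureSensitivity`. The values chosen here are illustrative, not recommendations from this session, and `makeConfiguredSession(inputFolder:)` is a hypothetical helper. It also uses `do`/`catch`-friendly `try` instead of `try!`, so a bad path surfaces as an error rather than a crash.

```swift
import Foundation
#if canImport(RealityKit)
import RealityKit

/// A sketch of creating a session with non-default configuration values.
func makeConfiguredSession(inputFolder: URL) throws -> PhotogrammetrySession {
    var configuration = PhotogrammetrySession.Configuration()
    // Hint that the images were taken one after another (e.g. walking around the object).
    configuration.sampleOrdering = .sequential
    // .high may help with low-texture objects, at the cost of processing time.
    configuration.featureSensitivity = .normal
    // Throws if the folder doesn't exist or can't be read.
    return try PhotogrammetrySession(input: inputFolder, configuration: configuration)
}
#endif
```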

That's all it takes to create a session! Now that we've successfully created a session object, we need to connect the session's output stream so that we can handle messages as they arrive.

After the message stream is connected, we will see how to request models that will then arrive on that stream.

We use an AsyncSequence, a new Swift feature this year, to provide the stream of outputs.

Output messages include the results of requests, as well as status messages such as progress updates.

Once we make the first process call, messages will begin to flow on the output message stream.

The output message sequence will not end while the session is alive.

It will keep producing messages until either the session is deinitialized or in the case of a fatal error.

Now, let's take a closer look at the types of messages we will receive.

After a request is made, we expect to receive periodic requestProgress messages with the fraction completed estimate for each request.

If you're building an app that calls the Object Capture API, you can use these to drive a progress bar for each request to indicate status.
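In a command-line tool, the fraction from a requestProgress message can be turned into a simple text bar. This is a minimal sketch; `progressBar(fraction:width:)` is a hypothetical helper, and a SwiftUI app would more likely bind the same fraction to a `ProgressView`.

```swift
/// Renders a text progress bar for one request's fraction-complete value.
/// Values outside 0...1 are clamped.
func progressBar(fraction: Double, width: Int = 20) -> String {
    let clamped = min(max(fraction, 0), 1)
    // Number of filled cells, rounded down so 100% is only shown when complete.
    let filled = Int((clamped * Double(width)).rounded(.down))
    let bar = String(repeating: "#", count: filled)
        + String(repeating: "-", count: width - filled)
    let percent = Int((clamped * 100).rounded())
    return "[\(bar)] \(percent)%"
}
```

For example, `progressBar(fraction: 0.5, width: 10)` produces `[#####-----] 50%`.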

Once the request is done processing, we receive a requestComplete message containing the resulting payload, such as a model or a bounding box.

If something went wrong during processing, a requestError will be output for that request instead.

For convenience, a processingComplete message is output when all queued requests have finished processing.

Now that we've been introduced to the concept of the session output stream and seen the primary output messages, let's take a look at some example code that processes the message stream.

Once we have this, we'll see how to request a model.

Here is some code that creates an async task that handles messages as they arrive.

It may seem like a lot of code, but most of it is simply message dispatching as we will see.

We use a "for try await" loop to asynchronously iterate over the messages in session.outputs as they arrive.

The bulk of the code is a message dispatcher which switches on the output message.

Output is an enum with different message types and payloads.

Each case statement will handle a different message.

Let's walk through them.

First, if we get a progress message, we'll just print out the value.

Notice that we get progress messages for each request.

For our example, when the request is complete, we expect the result payload to be a modelFile with the URL to where the model was saved.

We will see how to make such a request momentarily.

If the request failed due to a photogrammetry error, we will instead get an error message for it.

After the entire set of requests from a process call has finished, a processingComplete message is generated.

For a command-line app, you might exit the app here.

Finally there are other status messages that you can read about in the documentation, such as warnings about images in a folder that couldn't be loaded.

And that's it for the message handling! This message-handling task will keep iterating and handling messages asynchronously for as long as the session is alive.

OK, let's see where we are in our workflow.

We've fully completed the Setup phase and have a session ready to go.

We're now ready to make requests to process the models.

Before we jump into the code, let's take a closer look at the various types of requests we can make.

There are three different data types you can receive from a session: a ModelFile, a ModelEntity, and a BoundingBox.

These types have an associated case in the Request enum: modelFile, modelEntity, and bounds; each with different parameters.

The modelFile request is the most common and the one we will use in our basic workflow.

You simply create a modelFile request specifying a file URL with a USDZ extension, as well as a detail level.

There is an optional geometry parameter for use in the interactive workflow, but we won't use that here.

For more involved postprocessing pipelines where you may need USDA or OBJ output formats, you can provide an output directory URL instead, along with a detail level.

The session will then write USDA and OBJ files into that folder, along with all the referenced assets such as textures and materials.
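In code, the only difference from a USDZ request is that the URL points at a directory. This sketch assumes `session` was created as shown earlier; `requestFolderOutput(from:outputFolder:)` is a hypothetical helper, and choosing `.raw` here simply follows the idea that folder output feeds a custom post-processing pipeline.

```swift
import Foundation
#if canImport(RealityKit)
import RealityKit

/// Asks for USDA/OBJ output plus referenced textures and materials by passing
/// a directory URL (rather than a .usdz file URL) to a modelFile request.
func requestFolderOutput(from session: PhotogrammetrySession, outputFolder: URL) throws {
    // Make sure the destination exists; process throws if it can't be written.
    try FileManager.default.createDirectory(at: outputFolder, withIntermediateDirectories: true)
    try session.process(requests: [
        .modelFile(url: outputFolder, detail: .raw)
    ])
}
#endif
```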

A GUI app is also able to request a RealityKit ModelEntity and BoundingBox for interactive preview and refinement.

A modelEntity request also takes a detail level and optional geometry.

A bounds request will return an estimated capture volume BoundingBox for the object.

This box can be adjusted in a UI and then passed in the geometry argument of a subsequent request to adjust the reconstruction volume.

We'll see how this works a bit later in the session.

Most requests also take a detail level.

The preview level is intended only for interactive workflows.

It is very low visual quality but is created the fastest.

The primary detail levels in order of increasing quality and size are Reduced, Medium, and Full.

These levels are all ready to use out of the box.

Additionally, the Raw level is provided for professional use and will need a post-production workflow to be used properly.

We will discuss these in more detail in the best practices section.

OK, now that we've seen what kinds of requests we can make, let's see how to do this in code.

We will now see how to generate two models simultaneously in one call, each with a different output filename and detail level.

Here we see the first call to process on the session.

Notice that it takes an array of requests.

This is how we can request two models at once.

We will request one model at Reduced detail level and one at Medium, each saving to a different USDZ file.

Requesting all desired detail levels for an object capture simultaneously in one call allows the engine to share computation and will produce all the models faster than requesting them sequentially.

You can even ask for all detail levels at once.

Process may immediately throw an error if a request is invalid, such as if the output location can't be written.

This call returns immediately and soon messages will begin to appear on the output stream.
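Extending the two-model example to every ready-to-use and raw level is mostly a matter of naming the output files consistently. This sketch splits that into a portable naming helper and a RealityKit part that builds all the requests for one `process` call. `outputURLs(in:baseName:detailNames:)` and `requestAllDetailLevels(from:into:)` are hypothetical names, and the `model-<level>.usdz` naming scheme is an assumption.

```swift
import Foundation

/// Hypothetical naming helper: one USDZ output URL per detail-level name,
/// e.g. model-reduced.usdz, model-medium.usdz.
func outputURLs(in folder: URL, baseName: String, detailNames: [String]) -> [String: URL] {
    var urls: [String: URL] = [:]
    for name in detailNames {
        urls[name] = folder.appendingPathComponent("\(baseName)-\(name).usdz")
    }
    return urls
}

#if canImport(RealityKit)
import RealityKit

/// Requests several levels in a single process call so the engine can share computation.
func requestAllDetailLevels(from session: PhotogrammetrySession, into folder: URL) throws {
    let levels: [(name: String, detail: PhotogrammetrySession.Request.Detail)] = [
        ("reduced", .reduced), ("medium", .medium), ("full", .full), ("raw", .raw)
    ]
    let urls = outputURLs(in: folder, baseName: "model", detailNames: levels.map { $0.name })
    var requests: [PhotogrammetrySession.Request] = []
    for level in levels {
        // Force unwrap is safe: every name was passed to outputURLs above.
        requests.append(.modelFile(url: urls[level.name]!, detail: level.detail))
    }
    try session.process(requests: requests)
}
#endif
```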

And that's the end of the basic workflow! You create the session with your images, connect the output stream, and then request models.

The processing time for each of your models will depend on the number of images and quality level.

Once the processing is complete, you will receive the output message that the model is available.

You can open the resulting USDZ file of the sneaker you created right on your Mac and inspect the results in 3D from any angle, including the bottom.

Later in this session, we'll show you how to achieve coverage for all sides of your object in one capture session, avoiding the need to combine multiple captures together.

It's looking great! Now that you've seen the basic workflow, we will give a high-level overview of a more advanced interactive workflow that the Object Capture API also supports.

The interactive workflow is designed to allow several adjustments to be made on a preview model before the final reconstruction, which can eliminate the need for post-production model edits and optimize the use of memory.

First, note that the Setup step and the Process step on both ends of this workflow are the same as before.

You will still create a session and connect the output stream.

You will also request final models as before.

However, notice that we've added a block in the middle where a 3D UI is presented for interactive editing of a preview model.

This process is iterated until you are happy with the preview.

You can then continue to make the final model requests as before.

You first request a preview model by making a model request with the preview detail level.

A preview model is of low visual quality and is generated as quickly as possible.

You can ask for a model file and load it yourself or directly request a RealityKit ModelEntity to display.

Typically, a bounds request is also made at the same time to preview and edit the capture volume as well.

You can adjust the capture volume to remove any unwanted geometry in the capture, such as a pedestal needed to hold the object upright during capture.

You can also adjust the root transform to scale, translate, and rotate the model.

The geometry property of the request we saw earlier allows a capture volume and relative root transform to be provided before the model is generated.
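The two halves of the interactive loop can be sketched as two `process` calls on the same session: one for the preview entity and the estimated bounds, and one for the final model restricted by a `Geometry` built from the user's edits. This assumes `session` exists and its output stream is already being handled; the function names are hypothetical, and the UI that edits the box and transform is left out.

```swift
import Foundation
#if canImport(RealityKit)
import RealityKit

/// Step 1: ask for a fast, low-quality preview entity and the estimated
/// capture volume in one call.
func requestPreview(from session: PhotogrammetrySession) throws {
    try session.process(requests: [
        .modelEntity(detail: .preview),
        .bounds
    ])
}

/// The estimated volume arrives as a requestComplete message whose result is
/// .bounds; this helper extracts it from one output message.
func boundingBox(from output: PhotogrammetrySession.Output) -> BoundingBox? {
    if case .requestComplete(_, .bounds(let box)) = output {
        return box
    }
    return nil
}

/// Step 2: after the user edits the box and root transform in your UI,
/// request the final model restricted to that volume.
func requestFinalModel(from session: PhotogrammetrySession,
                       editedBounds: BoundingBox,
                       rootTransform: Transform,
                       outputFile: URL) throws {
    let geometry = PhotogrammetrySession.Request.Geometry(bounds: editedBounds,
                                                          transform: rootTransform)
    try session.process(requests: [
        .modelFile(url: outputFile, detail: .full, geometry: geometry)
    ])
}
#endif
```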

This outputs a 3D model that's ready to use.

Let's look at this process in action.

Here we see an example interactive Object Capture app we created using the API to demonstrate this interactive workflow.

First, we select the Images folder containing images of a decorative rock, as well as an output folder where the final USDZ will be written.

Then we hit Preview to request the preview model and estimated capture volume.

After some time has passed, the preview model of our rock and its capture volume appear.

But let's say that we only want the top part of the rock in the output as if the bottom were underground.

We can adjust the bounding box to avoid reconstructing the bottom of the model.

Once we are happy, we hit Refine Model to produce a new preview restricted to this modified capture volume.

This also optimizes the output model for just this portion.

Once the refined model is ready, the new preview appears.

You can see the new model's geometry has been clipped to stay inside the box.

This is useful for removing unwanted items in a capture such as a pedestal holding up an object.

Once we are happy with the cropped preview, we can select a Full detail final render which starts the creation process.

After some time, the Full detail model is complete and replaces the preview model.

Now we can see the Full detail of the actual model, which looks great.

The model is saved in the output directory and ready to use without the need for any additional post-processing.

And that's all there is to getting started with the new Object Capture API.

We saw how to create a session from an input source such as a folder of images.

We saw how to connect the async output stream to dispatch messages.

We then saw how to request models at two different detail levels simultaneously.

Finally, we described the interactive workflow with an example RealityKit GUI app for Object Capture.

Now I will hand it off to my colleague Dave McKinnon, who will discuss best practices with Object Capture.

Dave McKinnon: Thanks, Michael.

Hi, I'm Dave McKinnon, and I am an engineer working on the Object Capture team.

In the next section we’ll be covering best practices to help you achieve the highest-quality results.

First, we'll look into tips and tricks for selecting an object that has the right characteristics.

This is followed by a discussion of how to control the environmental conditions and camera to get the best results.

Next, we'll walk through how to use the CaptureSample App.

This app allows you to capture images in addition to depth data and gravity information to recover true scale and orientation of your object.

We illustrate the use of this app for both in-hand and turntable capture.

Finally, we will discuss how to select the right output detail level for your use case and provide some links for further reading.

The first thing to consider when doing a scan is picking an object that has the right characteristics.

For the best results, pick an object that has adequate texture detail.

If the object contains textureless or transparent regions, the resulting scan may lack detail.

Additionally, try to avoid objects that contain highly reflective regions.

If the object is reflective, you will get the best results by diffusing the lighting when you scan.

If you plan to flip the object throughout the capture, make sure it is rigid so that it doesn't change shape.

Lastly, if you want to scan an object that contains fine surface detail, you'll need to use a high-resolution camera in addition to having many close-up photos of the surface to recover the detail.

We will now demonstrate the typical scanning process.

Firstly, for best results, place your object on an uncluttered background so the object clearly stands out.

The basic process involves moving slowly around the object being sure to capture it uniformly from all sides.

If you would like to reconstruct the bottom of the object, flip it and continue to capture images.

When taking the images, try to maximize the portion of the field of view capturing the object.

This helps the API to recover as much detail as possible.

One way to do this is to use portrait or landscape mode depending on the object's dimensions and orientation.

Also, try to maintain a high degree of overlap between the images.

Depending on the object, 20 to 200 close-up images should be enough to get good results.

To help you get started capturing high-quality photos with depth and gravity on iOS, we provide the CaptureSample App.

This can be used as a starting point for your own apps.

It is written in SwiftUI and is part of the developer documentation.

This app demonstrates how to take high-quality photos for Object Capture.

It has manual and timed shutter modes.

You could also modify the app to sync with your turntable.

It demonstrates how to use iPhone and iPad devices with dual cameras to capture depth data and embed it right into the output HEIC files.

The app also shows you how to save gravity data.
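On iOS, depth and gravity come from AVFoundation and Core Motion respectively. This is a rough sketch, not the CaptureSample App's code: it assumes an `AVCaptureSession` already configured with a depth-capable (for example dual-camera) input and an `AVCapturePhotoOutput`, and a `CMMotionManager` on which `startDeviceMotionUpdates()` has been called. The function names are hypothetical, and how you store the gravity vector next to each photo is up to your app.

```swift
#if os(iOS)
import AVFoundation
import CoreMotion
import simd

/// Call once while configuring the capture session, after adding a
/// depth-capable input. Returns whether depth delivery is now enabled.
func enableDepthIfSupported(on output: AVCapturePhotoOutput) -> Bool {
    guard output.isDepthDataDeliverySupported else { return false }
    output.isDepthDataDeliveryEnabled = true
    return true
}

/// Per-shot HEIC settings that embed depth in the file when the output allows it.
func makePhotoSettings(for output: AVCapturePhotoOutput) -> AVCapturePhotoSettings {
    let settings = AVCapturePhotoSettings(format: [AVVideoCodecKey: AVVideoCodecType.hevc])
    if output.isDepthDataDeliveryEnabled {
        settings.isDepthDataDeliveryEnabled = true
        settings.embedsDepthDataInPhoto = true
    }
    return settings
}

/// Reads the current gravity vector, or nil if no motion data is available yet.
func currentGravity(from manager: CMMotionManager) -> simd_double3? {
    guard let gravity = manager.deviceMotion?.gravity else { return nil }
    return simd_double3(gravity.x, gravity.y, gravity.z)
}
#endif
```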

You can view your gallery to quickly verify that all your photos are of good quality and include depth and gravity, and delete bad shots.

Capture folders are saved in the app's Documents folder, from which they are easy to copy to your Mac using iCloud or AirDrop.

There are also help screens that summarize some of the best practice guidelines to get a good capture that we discuss in this section.

You can also find this information in developer documentation.

We recommend turntable capture to get the best results possible.

In order to get started, you'll need a setup like we have here.

It consists of an iOS device for capture (you can also use a digital SLR), a mechanical turntable to rotate the object, and some lighting panels in addition to a light tent.

The goal is to have uniform lighting and avoid any hard shadows.

A light tent is a good way to achieve this.

In this case, the CaptureSample App captures images using the timed shutter mode synced with the motion of the turntable.

We can also flip the object and do multiple turntable passes to capture the object from all sides.
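Syncing a timed shutter with a turntable comes down to simple arithmetic: the shutter interval is the time per revolution divided by the shots you want per pass. This sketch assumes a turntable rotating at a constant speed; `TurntablePlan` is a hypothetical helper, and the numbers in the usage line are placeholders rather than recommendations. It also checks the total against the 20 to 200 image range suggested earlier in this session.

```swift
/// Hypothetical planning helper for a timed-shutter turntable capture.
struct TurntablePlan {
    var secondsPerRevolution: Double
    var shotsPerRevolution: Int
    var passes: Int  // e.g. one pass upright plus one per flip

    /// Timer interval that spaces shots evenly over one revolution.
    var shutterInterval: Double { secondsPerRevolution / Double(shotsPerRevolution) }
    /// Rotation between consecutive shots, in degrees.
    var degreesBetweenShots: Double { 360.0 / Double(shotsPerRevolution) }
    var totalImages: Int { shotsPerRevolution * passes }
    /// 20 to 200 close-up images are usually enough, per the guidance above.
    var isInSuggestedRange: Bool { (20...200).contains(totalImages) }
}
```

For instance, `TurntablePlan(secondsPerRevolution: 72, shotsPerRevolution: 24, passes: 3)` gives a 3-second interval, 15 degrees between shots, and 72 images in total.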

Here is the resulting USDZ file from the turntable capture shown in Preview on macOS.

Now that we've covered tips and tricks for capturing images, let's move to our last section on how to select the right output.

There's a variety of different output detail settings available for a scan.

Let's take a look.

Here is the table showing the detail levels.

The supported levels are shown along the left side.

Reduced and Medium are optimized for use in web-based and mobile experiences, such as viewing 3D content in AR Quick Look.

They have fewer triangles and material channels and consequently consume less memory.

Full and Raw are intended for high-end interactive use, such as computer games, or for post-production workflows.

They contain the highest geometric detail and give you the flexibility to choose between baked and unbaked materials.

Reduced and Medium detail levels are best for content that you wish to display on the internet or mobile device.

In this instance, Object Capture will compress the geometric and material information from the Raw result down to a level that will be appropriate for display in AR apps or through AR Quick Look.

Both detail levels, Reduced and Medium, contain the diffuse, normal, and ambient occlusion PBR material channels.

If you would like to display a single scan in high detail, Medium will maximize the quality against the file size to give you both more geometric and material detail.

However, if you would like to display multiple scans in the same scene, you should use the Reduced detail setting.

If you want to learn more about how to use Object Capture to create mobile or web AR experiences, please see the "AR Quick Look, meet Object Capture" session.

Exporting with Full output level is a great choice for pro workflows.

In this instance, you are getting the maximum detail available for your scan.

Full will optimize the geometry of the scan and bake the detail into a PBR material containing Diffuse, Normal, Ambient Occlusion, Roughness, and Displacement information.

We think that this output level will give you everything you need for the most challenging renders.

Lastly, if you don't need material baking or you have your own pipeline for this, the Raw level will return the maximum poly count along with the maximum diffuse texture detail for further processing.

If you want to learn more about how to use Object Capture for pro workflows on macOS, please see the "Create 3D Workflows with USD" session.

Finally, and most importantly, if you plan to use your scan on both iOS, as well as macOS, you can select multiple detail levels to make sure you have all the right outputs for current and future use cases.
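The guidance in this section can be summarized as a small lookup from intended uses to the levels worth requesting together in one call. This is a sketch; `TargetUse` and `suggestedDetailLevels(for:)` are hypothetical names, and the mapping simply restates the recommendations above (Medium for a single scan on mobile, Reduced for many scans in one scene, Full for pro renders, Raw for your own material pipeline).

```swift
/// Hypothetical categories of where a scan will be used.
enum TargetUse {
    case singleObjectInAR
    case manyObjectsInOneScene
    case proRendering
    case ownMaterialPipeline
}

/// Returns the detail-level names to request, ordered from smallest to largest.
func suggestedDetailLevels(for uses: Set<TargetUse>) -> [String] {
    var levels = Set<String>()
    for use in uses {
        switch use {
        case .singleObjectInAR: levels.insert("medium")
        case .manyObjectsInOneScene: levels.insert("reduced")
        case .proRendering: levels.insert("full")
        case .ownMaterialPipeline: levels.insert("raw")
        }
    }
    let order = ["reduced", "medium", "full", "raw"]
    return order.filter { levels.contains($0) }
}
```

A scan meant for both AR Quick Look and film-quality renders would yield `["medium", "full"]`, and both could then go into a single `process` call.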

And that's a wrap.

Let's recap what we have learned.

First, we covered, through example, the main concepts behind the Object Capture API.

We showed you how to create an Object Capture session and to use this session to process your collection of images to produce a 3D model.

We showed you an example of how the API can support an interactive preview application to let you adjust the capture volume and model transform.

Next, we covered best practices for scanning.

We discussed what type of objects to use as well as the environment, lighting, and camera settings that give the best results.

Lastly, we discussed how to choose the right output detail settings for your application.

If you want to learn how to bring Object Capture to your own app, check out both the iOS capture and macOS CLI processing apps to get started.

Along with these apps comes a variety of sample data that embodies best practice and can help when planning on how to capture your own scans.

Additionally, please check out the detailed documentation on best practices online at developer.apple.com, as well as these related WWDC sessions.

The only thing that remains is for you to go out and use Object Capture for your own scans.

We are excited to see what objects you will scan and share.

♪


6:56 - Creating a PhotogrammetrySession with a folder of images

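```swift
import Foundation
import RealityKit

// Folder containing the capture images (HEIC files may carry embedded depth).
let inputFolderUrl = URL(fileURLWithPath: "/tmp/Sneakers/", isDirectory: true)

// Throws if the folder doesn't exist or can't be read; try! crashes in that case.
let session = try! PhotogrammetrySession(input: inputFolderUrl,
                                         configuration: PhotogrammetrySession.Configuration())
```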

9:26 - Creating the async message stream dispatcher

```swift
// Create an async message stream dispatcher task
Task {
    do {
        for try await output in session.outputs {
            switch output {
            case .requestProgress(_, let fraction):
                print("Request progress: \(fraction)")
            case .requestComplete(_, let result):
                if case .modelFile(let url) = result {
                    print("Request result output at \(url).")
                }
            case .requestError(let request, let error):
                print("Error: \(request) error=\(error)")
            case .processingComplete:
                print("Completed!")
                handleComplete()  // app-defined; a command-line tool might exit here
            default:
                // Or handle other messages...
                break
            }
        }
    } catch {
        print("Fatal session error! \(error)")
    }
}
```

13:44 - Calling process on two models simultaneously

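```swift
// Request two detail levels in one call so the engine can share computation.
// modelFile takes a file URL, not a String path.
try! session.process(requests: [
    .modelFile(url: URL(fileURLWithPath: "/tmp/Outputs/model-reduced.usdz"), detail: .reduced),
    .modelFile(url: URL(fileURLWithPath: "/tmp/Outputs/model-medium.usdz"), detail: .medium)
])
```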
