
Explore the machine learning development experience

Learn how to bring great machine learning (ML) based experiences to your app. We'll take you through model discovery, conversion, and training and provide tips and best practices for ML. We'll share considerations to take into account as you begin your ML journey, demonstrate techniques for evaluating model performance, and explore how you can tune models to achieve real-time performance on device.

To learn more about the techniques covered in this session, watch "Optimize your Core ML usage" and "Accelerate machine learning with Metal" from WWDC22.

Resources

- Core ML Converters

- Core ML Tools PyTorch Conversion Documentation

- Integrating a Core ML Model into Your App

- Core ML

Related Videos

WWDC22

- Accelerate machine learning with Metal

- Display EDR content with Core Image, Metal, and SwiftUI

- Optimize your Core ML usage


♪ ♪ Ciao, my name is Geppy Parziale, and I am a machine learning engineer here at Apple. Today, I want to walk you through the journey of building an app that uses machine learning to solve a problem that would usually require an expert to perform some very specialized work.

This journey gives me the opportunity to show you how to add open source machine learning models to your apps and create fantastic new experiences. During the journey, I will also highlight a few of the many tools, frameworks, and APIs available for you in the Apple development ecosystem to build apps using machine learning.

When building an app, you, the developer, go through a series of decisions that hopefully will bring the best experience to your users. And this is also true when adding machine learning functionality to applications.

During development, you could ask: Should I use machine learning to build this feature? How can I get a machine learning model? How do I make that model compatible with Apple platforms? Will that model work for my specific use case? Does it run on the Apple Neural Engine?

So let's take this journey together. I want to build an app that allows me to add realistic colors to my family's black-and-white photos that I found in an old box in my basement.

Of course, a professional photographer could do this with some manual work, spending some time in a photo editing tool. Instead, what if I want to automate this process and apply the colorization in just a few seconds? This seems to be a perfect task for machine learning.

Apple offers a tremendous number of frameworks and tools that can help you build and integrate ML functionality in your apps. They provide everything from data processing to model training and inference. For this journey, I am going to use a few of them. But remember, you have many to choose from depending on the specific machine learning task that you are developing.

The process I use when developing a machine learning feature in my apps goes through a set of stages.

First, I search for the right machine learning model in either scientific publications or specialized websites.

I searched for photo colorization, and I found a model called Colorizer that may work for what I need. Here is an example of the colorization I can get using this model.

Here is another one.

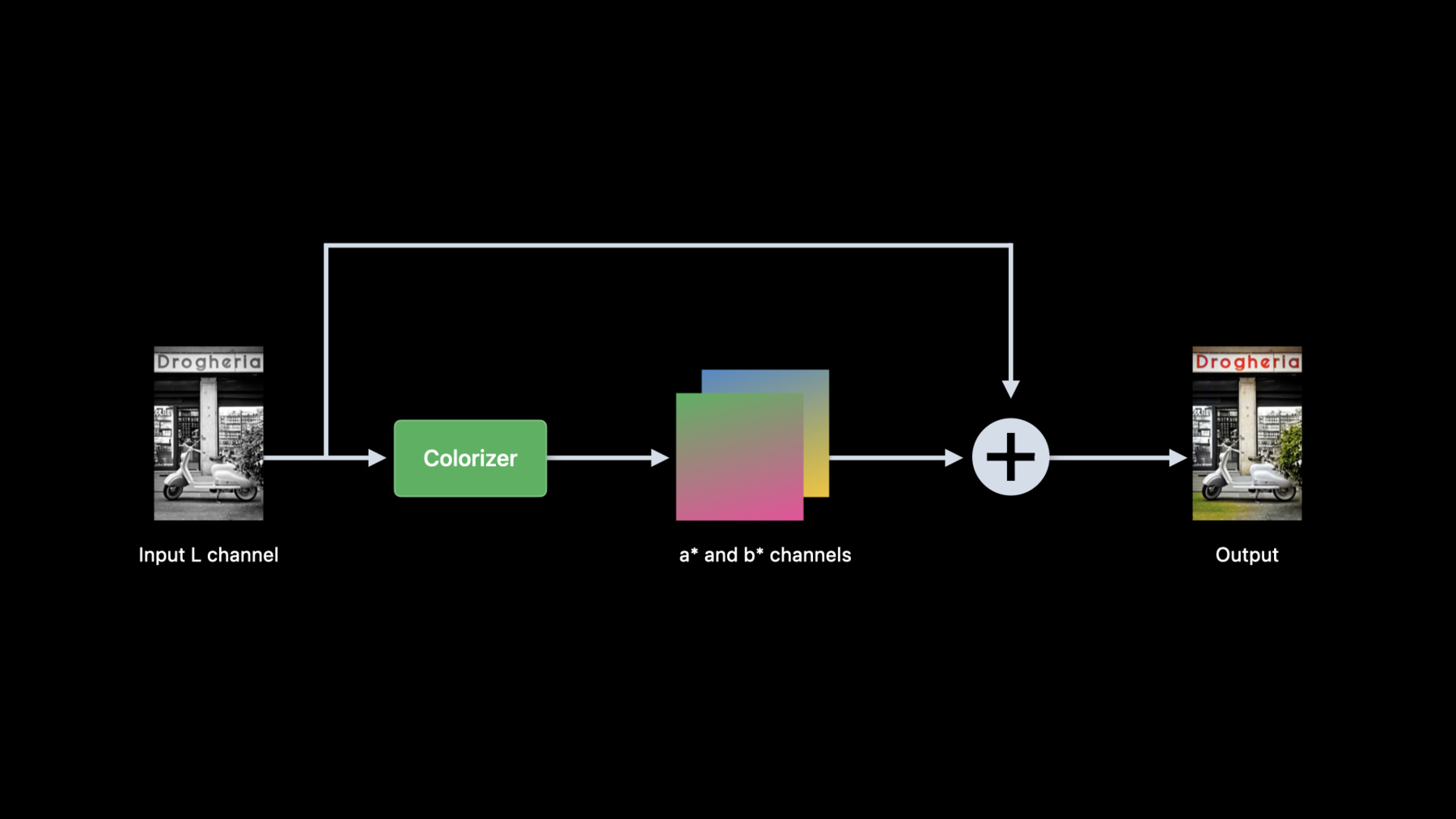

And here is another one. Really great. Let me show you how it works.

The Colorizer model expects a black-and-white image as input. The Python source code I found converts any RGB image to a Lab color space image.

This color space has 3 channels: one represents the image lightness or L channel, and the other two represent the color components. The color components are discarded while the lightness becomes the input of the colorizer model.

The model then estimates two new color components that, combined with the input L channel, provide the resulting image with color.
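To make the Lab split concrete, here is a minimal pure-Python sketch of a single-pixel sRGB to Lab conversion, assuming the standard CIE formulas with a D65 reference white. The function names `srgb_to_lab` and `model_input_lightness` are illustrative and do not come from the session; the session's own Python code relies on scikit-image instead.

```python
def srgb_to_lab(r, g, b):
    """Convert one sRGB pixel (components in 0..1) to CIE Lab (D65 white)."""

    def linearize(c):
        # Undo the sRGB gamma curve.
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    def f(t):
        # Cube root with a linear segment near zero, as in the CIE definition.
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    rl, gl, bl = linearize(r), linearize(g), linearize(b)

    # Linear sRGB to CIE XYZ.
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl

    # Normalize by the D65 reference white.
    fx, fy, fz = f(x / 0.95047), f(y / 1.0), f(z / 1.08883)

    lightness = 116 * fy - 16   # 0 (black) to 100 (white)
    a_star = 500 * (fx - fy)    # green (negative) to red (positive)
    b_star = 200 * (fy - fz)    # blue (negative) to yellow (positive)
    return lightness, a_star, b_star


def model_input_lightness(pixel):
    """Keep only L and discard the two color components."""
    lightness, _a, _b = srgb_to_lab(*pixel)
    return lightness
```

In this sketch, `model_input_lightness` plays the role of the pre-processing step: only the L value of each pixel would be handed to the model, which then has to estimate the two color components that were thrown away.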

It's time now to make this model compatible with my app. To achieve this, I can convert the original PyTorch model to Core ML format using coremltools. Here is the simple Python script I used to convert the PyTorch model to Core ML.

First, I import the PyTorch model architecture and weights.

Then I trace the imported model. Finally, I convert the PyTorch model to Core ML and save it.

Once the model is in the Core ML format, I need to verify that the conversion worked correctly. I can do that directly in Python again using coremltools. And this is easy. I import the image in RGB color space and convert it to Lab color space.

Then I separate the lightness from the color channels and discard the color channels.

I run the prediction using the Core ML model.

And finally, compose the input lightness with the estimated color components and convert to RGB.

This allows me to verify that the functionality of the converted model matches that of the original PyTorch model. I call this stage Model Verification.

However, there is another important check to be done. I need to understand whether this model can run fast enough on my target device. So I need to assess the model on device and make sure it provides the best user experience. The new Core ML Performance Report, available now in Xcode 14, performs a time-based analysis of a Core ML model. I just need to drag and drop the model into Xcode and create a performance report in a few seconds.

Using this tool, I can see that the estimated prediction time is almost 90 milliseconds on an iPad Pro with M1 and iPadOS 16.

And this is perfect for my photo colorization app. If you want to know more about Performance Report in Xcode, I suggest you watch this year's session "Optimize your Core ML usage." So Performance Report can help you make an assessment of the model and make sure it provides the best on-device user experience.

Now that I am sure about the functionality and performance of my model, let me integrate it into my app.

The integration process is identical to what I have done until now in Python, but this time I can do it seamlessly in Swift using Xcode and all the other tools you are familiar with.

Remember the model, now in Core ML format, expects a single channel image representing its lightness.

So similarly to what I did previously in Python, I need to convert any RGB input image to an image using the Lab color space.

I could write this transformation in multiple ways: directly in Swift with vImage, or using Metal.

Exploring deeper in the documentation, I found that the Core Image framework provides something that can help me with this.

So let me show you how to achieve the RGB to LAB conversion and run the prediction using the Core ML model.

Here is the Swift code to extract the lightness from an RGB image and pass it to the Core ML model. First, I convert the RGB image into LAB and extract the lightness.

Then, I convert lightness to a CGImage and prepare the input for the Core ML model.

Finally, I run the prediction.

To extract the L channel from the input RGB image, I first convert the RGB image into a Lab image, using the new CIFilter convertRGBtoLab. The values of the lightness are set between 0 and 100.

Then, I multiply the Lab image by a color matrix that discards the color channels, and return the lightness to the caller.

Let's now analyze what happens at the output of the model.
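The effect of that color matrix on a single pixel can be sketched in a few lines of Python. This is an illustration only, assuming each output channel is the dot product of the input pixel with the corresponding vector, and leaving out the alpha and bias terms for brevity. The helper names are hypothetical.

```python
def dot(u, v):
    """Dot product of two equal-length tuples."""
    return sum(ui * vi for ui, vi in zip(u, v))


def apply_color_matrix(pixel, r_vector, g_vector, b_vector):
    """Each output channel is the dot product of the pixel with one vector."""
    return (dot(pixel, r_vector), dot(pixel, g_vector), dot(pixel, b_vector))


# Selecting only the first component for every output channel copies L
# into all three channels and drops a* and b*.
L_ONLY = (1.0, 0.0, 0.0)

lab_pixel = (0.62, 0.10, -0.25)  # made-up (L, a*, b*) values for illustration
lightness_pixel = apply_color_matrix(lab_pixel, L_ONLY, L_ONLY, L_ONLY)
```

With all three vectors set to (1, 0, 0), the result holds the lightness in every channel, which is why the filtered image can be treated as a grayscale lightness image.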

The Core ML model returns two MLShapedArrays containing the estimated color components.

So after the prediction, I convert the two MLShapedArrays into two CIImages.

Finally, I combine them with the model input lightness. This generates a new Lab image that I convert to RGB and return.

To convert the two MLShapedArrays into two CIImages, I first extract the values from each shaped array. Then, I create two CIImages representing the two color channels and return them.

To combine the lightness with the estimated color channels, I use a custom CIKernel that takes the three channels as input and returns a CIImage.
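The step from a flat array of scalars to a single-channel bitmap comes down to byte layout. The following Python sketch, which is not part of the session, packs 256 x 256 values as 32-bit floats and shows where the row stride of 4 * 256 bytes comes from. The names `pack_channel` and `read_pixel` are hypothetical, and little-endian byte order is an assumption.

```python
import struct

WIDTH, HEIGHT = 256, 256
BYTES_PER_FLOAT = 4  # one 32-bit float per pixel


def pack_channel(values, width=WIDTH, height=HEIGHT):
    """Pack row-major scalars into raw bytes and report the row stride."""
    if len(values) != width * height:
        raise ValueError("expected exactly width * height scalars")
    data = struct.pack(f"<{len(values)}f", *values)
    bytes_per_row = BYTES_PER_FLOAT * width
    return data, bytes_per_row


def read_pixel(data, bytes_per_row, x, y):
    """Read back the value at column x, row y from the packed buffer."""
    offset = y * bytes_per_row + x * BYTES_PER_FLOAT
    return struct.unpack_from("<f", data, offset)[0]
```

A bitmap built on such a buffer needs a pixel format whose element size matches the 4 bytes per value used here; otherwise the row stride and the format disagree and the rows are read at the wrong offsets.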

Then, I use the new CIFilter convertLabToRGB to convert the Lab image to RGB and return it to the caller. This is the source code for the custom CIKernel I use to combine the lightness with the two estimated color channels in a single CIImage.

For more information about the new CI filters to convert RGB images to Lab images and vice versa, please refer to the session "Display EDR content with Core Image, Metal, and SwiftUI."

Now that I completed the integration of this ML feature in my app, let's see it in action. But wait. How will I colorize my old family photos in real time with my application? I could spend some time digitizing each of them and importing them into my app.

I think I have a better idea. What if I use my iPad camera to scan these photos and colorize them live? I think it would be really fun, and I have everything I need to accomplish this. But first, I have to solve a problem.

My model needs 90 milliseconds to process an image. If I want to process a video, I would need something faster.

For a smooth user experience, I'd like to run the device camera at a minimum of 30 fps.

That means the camera will produce a frame about every 33 milliseconds.

But since the model needs about 90 milliseconds to colorize a video frame, I am going to lose 2 or 3 frames during each colorization.
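The arithmetic behind this can be checked with a short Python sketch. It assumes frames arrive at perfectly regular intervals (1000 / 30, roughly 33 ms at 30 fps) and that a prediction starts exactly when a frame arrives; the function names are illustrative.

```python
import math


def frame_interval_ms(fps):
    """Time between two consecutive camera frames, in milliseconds."""
    return 1000.0 / fps


def frames_missed_per_prediction(prediction_ms, fps):
    """Frames that arrive while one prediction is still running."""
    interval = frame_interval_ms(fps)
    return max(0, math.ceil(prediction_ms / interval) - 1)


missed = frames_missed_per_prediction(prediction_ms=90, fps=30)
```

Under these idealized assumptions, a 90 ms prediction spans about 2.7 frame intervals, so two frames arrive before it finishes; with less regular timing a third can be lost as well. A prediction that fits within one interval misses none.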

The total prediction time of a model is a function of both its architecture and the compute units its operations get mapped to. Looking at the performance report again, I notice that my model has a total of 61 operations running on a combination of the Neural Engine and CPU.

If I want a faster prediction time, I will have to change the model.

I decided to experiment with the model's architecture and explore some alternatives that may be faster. However, a change in the architecture means I need to retrain the network.

Apple offers different solutions that allow me to train machine learning models directly on my Mac.

In my case, since the original model was developed in PyTorch, I decided to use the new PyTorch on Metal, so I can take advantage of the tremendous hardware acceleration provided by Apple Silicon.

If you are interested in knowing more about PyTorch accelerated with Metal, please check the session "Accelerate machine learning with Metal."

Because of this change, our journey needs to take a step back.

After retraining, I will have to convert the result to Core ML format and run the verification again.

This time, model integration consists of simply swapping the old model with the new one. After retraining a few candidate alternative models, I have verified one that will meet my requirements. Here it is with the corresponding performance report. It runs entirely on the neural engine and the prediction time is now around 16 milliseconds, which works for video.

But Performance Report tells me only one aspect of the performance of my app.

Indeed, after running my app, I notice immediately that colorization is not as smooth as I expect. So what happens in my app at runtime? In order to understand that, I can use the new Core ML template in Instruments.

Analyzing the initial portion of the Core ML trace, after loading the model, I notice that the app accumulates predictions. And this is unexpected. Instead, I'd expect to have a single prediction per frame.

Zooming into the trace and checking the very first predictions, I observe that the app requests a second Core ML prediction before the first one is finished.

Here, the Neural Engine is still working on the first request when the second is given to Core ML.

Similarly, the third prediction starts while still processing the second one. Even after four predictions, the lag between the request and execution is already about 20 milliseconds. Instead, I need to make sure that a new prediction starts only if the previous one is finished to avoid cascading these lags.

While fixing this problem, I also found out that I accidentally set the camera frame rate to 60 fps instead of the desired 30fps.

After making sure that the app processes a new frame only after the previous prediction completes, and setting the camera frame rate to 30 fps, I can see that Core ML correctly dispatches a single prediction to the Apple Neural Engine, and now the app runs smoothly.
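The difference between queuing every frame and skipping frames while a prediction is in flight can be illustrated with a small simulation. This is a sketch with made-up timings (a frame every 17 ms and a 22 ms prediction), not the app's actual code or measurements, and `simulate` is a hypothetical helper.

```python
def simulate(num_frames, frame_interval_ms, prediction_ms, skip_when_busy):
    """Return (arrival_ms, lag_ms) for every frame that gets a prediction."""
    results = []
    busy_until = 0.0  # time at which the current prediction finishes
    for i in range(num_frames):
        arrival = i * frame_interval_ms
        if arrival < busy_until:
            if skip_when_busy:
                continue  # drop the frame instead of queuing it
            start = busy_until  # queued: wait for the previous prediction
        else:
            start = arrival
        results.append((arrival, start - arrival))
        busy_until = start + prediction_ms
    return results


# Hypothetical timings chosen only to make the effect visible.
queued = simulate(4, 17.0, 22.0, skip_when_busy=False)
skipped = simulate(4, 17.0, 22.0, skip_when_busy=True)
```

In the queued case the lag between request and execution grows with every frame, which is the cascading behavior seen in the trace. In the skipping case fewer frames are colorized, but each prediction starts as soon as its frame arrives.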

So we reached the end of our journey.

Let's test the app on my old family photos.

Here are my black and white photos that I found in my basement. They capture some of the places in Italy I visited a long time ago.

Here is a great photo of the Colosseum in Rome.

The color of the walls and the sky are so realistic.

Let's check this one.

This is Castel del Monte in the South of Italy. Really nice.

And this is my hometown, Grottaglie. Adding colors to these images triggered so many memories.

Notice that I am applying the colorization only to the photo while keeping the rest of the scene black and white.

Here, I am taking advantage of the rectangle detection algorithm available in the Vision framework. Using VNDetectRectanglesRequest, I can isolate the photo in the scene and use it as input to the Colorizer model.

And now let me recap.

During our journey, I explored the enormous number of frameworks, APIs, and tools Apple provides to prepare, integrate, and evaluate machine learning functionality for your apps. I started this journey by identifying a problem that required an open source machine learning model to solve it.

I found an open source model with desired functionality and made it compatible with Apple platforms. I assessed the model performance directly on the device using the new Performance Report. I integrated the model in my app using the tools and the frameworks you are familiar with.

I optimized the model using the new Core ML template in Instruments. With Apple tools and frameworks, I can take care of each phase of the development process directly on Apple devices and platforms, from data preparation and training to integration and optimization.

Today, we just scratched the surface of what you, the developer, can achieve with the frameworks and tools Apple provides you. Please refer to the previous sessions linked to this one for additional inspiring ideas to bring machine learning to your apps. Explore and try frameworks and tools. Take advantage of the great synergy between software and hardware to accelerate your machine learning features and enrich the user experience of your apps. Have a great WWDC, and arrivederci. ♪ ♪


3:06 - Colorization pre-processing

    from skimage import color

    in_lab = color.rgb2lab(in_rgb)
    in_l = in_lab[:, :, 0]

3:39 - Colorization post-processing

    from skimage import color
    import numpy as np
    import torch

    out_lab = torch.cat((in_l, out_ab), dim=1)
    out_rgb = color.lab2rgb(out_lab.data.numpy()[0, ...].transpose((1, 2, 0)))

3:56 - Convert colorizer model to Core ML

    import coremltools as ct
    import torch
    import Colorizer

    torch_model = Colorizer().eval()
    example_input = torch.rand([1, 1, 256, 256])
    traced_model = torch.jit.trace(torch_model, example_input)

    coreml_model = ct.convert(traced_model,
                              inputs=[ct.TensorType(name="input", shape=example_input.shape)])
    coreml_model.save("Colorizer.mlpackage")

4:26 - Core ML model verification using Core ML Tools

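    import coremltools as ct
    import numpy as np
    from PIL import Image
    from skimage import color

    # Load the converted model saved by the conversion script.
    coreml_model = ct.models.MLModel("Colorizer.mlpackage")

    # Load the image as RGB and convert it to Lab.
    # The image is assumed to already match the model input size (256 x 256).
    in_img = Image.open("image.png").convert("RGB")
    in_rgb = np.array(in_img)
    in_lab = color.rgb2lab(in_rgb, channel_axis=2)

    # Keep only the lightness channel, shaped (1, 1, H, W) as float32.
    lab_components = np.split(in_lab, indices_or_sections=3, axis=-1)
    (in_l, _, _) = [
        np.expand_dims(array.transpose((2, 0, 1)).astype(np.float32), 0)
        for array in lab_components
    ]

    # predict() returns a dictionary keyed by output name; this assumes the
    # model has a single output holding both color channels.
    out_ab = list(coreml_model.predict({"input": in_l}).values())[0]

    # Recombine with the lightness, convert to RGB, and scale [0, 1] to 8-bit.
    out_lab = np.squeeze(np.concatenate([in_l, out_ab], axis=1), axis=0).transpose((1, 2, 0))
    out_rgb = (color.lab2rgb(out_lab, channel_axis=2) * 255).astype(np.uint8)
    out_img = Image.fromarray(out_rgb)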

7:11 - Colorization in Swift

    import CoreImage
    import CoreML

    func colorize(image inputImage: CIImage) throws -> CIImage {

        let lightness: CIImage = extractLightness(from: inputImage)

        let modelInput = try ColorizerInput(inputWith: lightness.cgImage!)

        let modelOutput: ColorizerOutput = try colorizer.prediction(input: modelInput)

        let (aChannel, bChannel): (CIImage, CIImage) = extractColorChannels(from: modelOutput)

        let colorizedImage = reconstructRGBImage(l: lightness, a: aChannel, b: bChannel)
        return colorizedImage
    }

7:41 - Extract lightness from RGB image using Core Image

    import CoreImage.CIFilterBuiltins

    func extractLightness(from inputImage: CIImage) -> CIImage {

        let rgbToLabFilter = CIFilter.convertRGBtoLab()
        rgbToLabFilter.inputImage = inputImage
        rgbToLabFilter.normalize = true
        let labImage = rgbToLabFilter.outputImage

        let matrixFilter = CIFilter.colorMatrix()
        matrixFilter.inputImage = labImage
        matrixFilter.rVector = CIVector(x: 1, y: 0, z: 0)
        matrixFilter.gVector = CIVector(x: 1, y: 0, z: 0)
        matrixFilter.bVector = CIVector(x: 1, y: 0, z: 0)
        let lightness = matrixFilter.outputImage!

        return lightness
    }

8:31 - Create two color channel CIImages from model output

    func extractColorChannels(from output: ColorizerOutput) -> (CIImage, CIImage) {

        let outA: [Float] = output.output_aShapedArray.scalars
        let outB: [Float] = output.output_bShapedArray.scalars

        let dataA = Data(bytes: outA, count: outA.count * MemoryLayout<Float>.stride)
        let dataB = Data(bytes: outB, count: outB.count * MemoryLayout<Float>.stride)

        // The scalars are 32-bit floats (4 bytes per pixel), so the
        // single-channel float format Lf matches the 4 * 256 row stride.
        let outImageA = CIImage(bitmapData: dataA,
                                bytesPerRow: 4 * 256,
                                size: CGSize(width: 256, height: 256),
                                format: CIFormat.Lf,
                                colorSpace: CGColorSpaceCreateDeviceGray())
        let outImageB = CIImage(bitmapData: dataB,
                                bytesPerRow: 4 * 256,
                                size: CGSize(width: 256, height: 256),
                                format: CIFormat.Lf,
                                colorSpace: CGColorSpaceCreateDeviceGray())

        return (outImageA, outImageB)
    }

8:51 - Reconstruct RGB image from Lab images

    func reconstructRGBImage(l lightness: CIImage,
                             a aChannel: CIImage,
                             b bChannel: CIImage) -> CIImage {

        guard
            let kernel = try? CIKernel.kernels(withMetalString: source)[0] as? CIColorKernel,
            let kernelOutputImage = kernel.apply(extent: lightness.extent,
                                                 arguments: [lightness, aChannel, bChannel])
        else { fatalError() }

        let labToRGBFilter = CIFilter.convertLabToRGB()
        labToRGBFilter.inputImage = kernelOutputImage
        labToRGBFilter.normalize = true
        let rgbImage = labToRGBFilter.outputImage!

        return rgbImage
    }

9:08 - Custom CIKernel to combine L, a* and b* channels.

    let source = """
    #include <CoreImage/CoreImage.h>

    [[stitchable]] float4 labCombine(coreimage::sample_t imL, coreimage::sample_t imA, coreimage::sample_t imB)
    {
        return float4(imL.r, imA.r, imB.r, imL.a);
    }
    """
