
Capture and process ProRAW images

When you support ProRAW in your app, you can help photographers easily capture and edit images by combining standard RAW information with Apple's advanced computational photography techniques. We'll take you through an overview of the format, including the look and feel of ProRAW images, quality metrics, and compatibility with your app. From there, we'll explore how you can incorporate ProRAW into your app at every stage of the production pipeline, including capturing imagery with AVFoundation, storage using PhotoKit, and editing with Core Image.

Resources

- Capturing still and Live Photos

- Capturing photos in RAW and Apple ProRAW formats

- PhotoKit

- Core Image

- Photos

- Capture setup

Related Videos

WWDC21

- Explore Core Image kernel improvements

- Improve access to Photos in your app

- What’s new in camera capture

WWDC20

-


♪ Bass music playing ♪

David Hayward: Hi, my name is David Hayward, and I'm an engineer with the Core Image team.

Today, my colleagues Tuomas Viitanen, Matt Dickoff, and I will be giving a detailed presentation that will show you everything you need to know about the ProRAW image format and how to add support for it to your application.

Here’s what we will discuss today.

First, I will describe what makes up a ProRAW file.

Next, we will discuss how to capture these files, how to store them in a photo library, and how to edit and display them.

Let’s start with the question: What is ProRAW? For some time, Apple devices have been able to fully leverage our Image Signal Processor to capture beautiful HEIC or JPEG images that are ready for display.

Then starting in iOS 10, we supported capturing Bayer-pattern RAW sensor data and storing that in a DNG file.

The differences and advantages of these two approaches were well described in the WWDC 2016 session titled “Advances in iOS photography”.

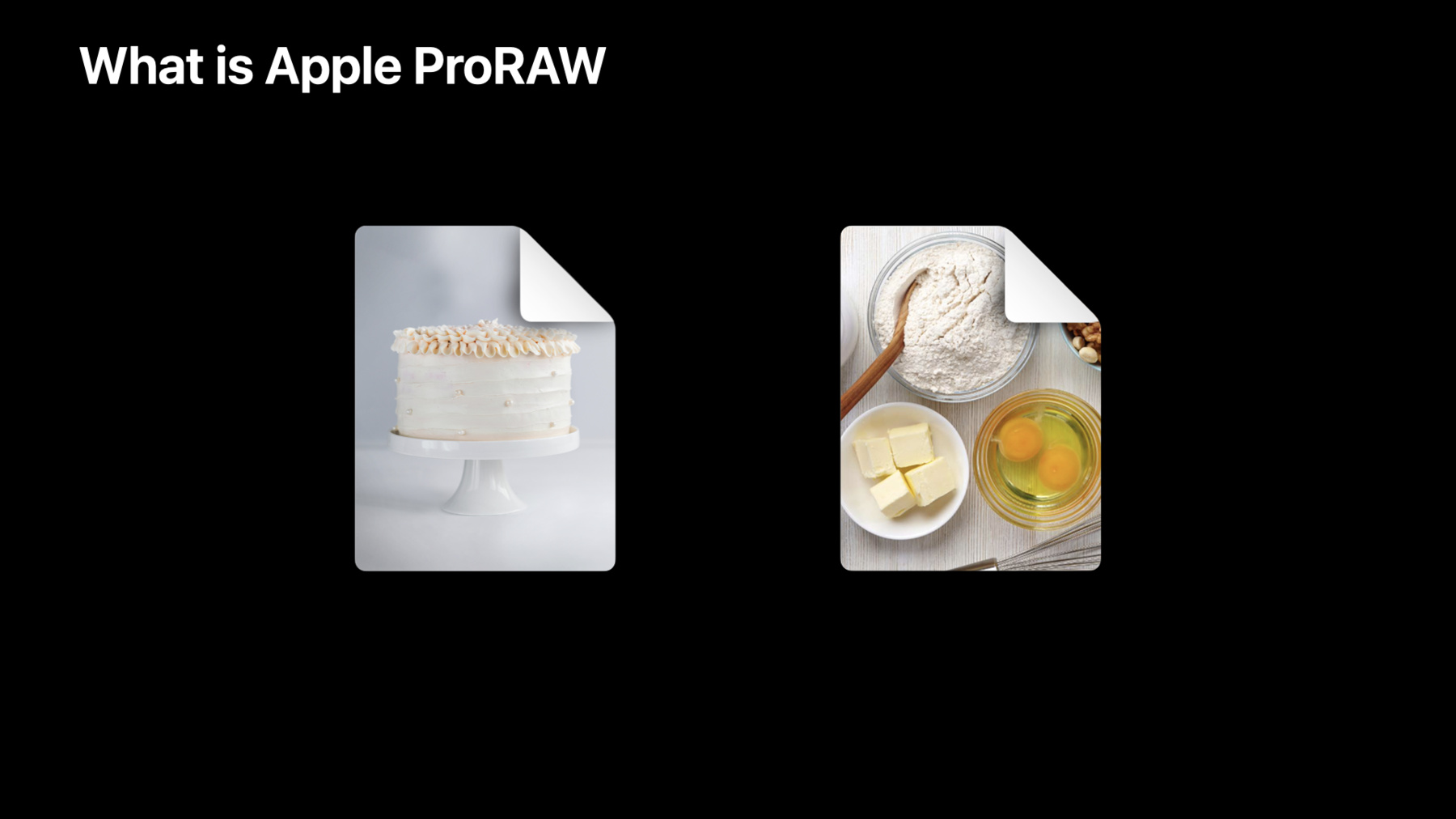

That presentation used a great analogy to describe the two formats.

A processed HEIC or JPEG is like going to a bakery and ordering a multilayer cake.

Whereas a Bayer-pattern RAW file is like going to the grocer and getting the raw ingredients to make a cake.

The advantage of processed HEIC or JPEG images is that you get a final image baked by Apple.

It is fast to display and has great quality because it is made by fusing multiple capture frames using Smart HDR, Deep Fusion, or Night mode.

Also, it has a small file size because it uses advanced lossy compression.

The advantage of Bayer RAW is that you get an image that has much greater flexibility for editing.

It has not been saved using lossy compression, and it has more bits of precision.

Our goal with ProRAW was to establish an image format with the best features of both.

The advantage of ProRAW is that it has a similar look to HEIC but uses lossless compression.

Also, depending on the scene, it is fused from multiple exposures, so it has great dynamic range for editing.

Because ProRAW images are low-noise and already demosaiced, they are reasonably fast to display.

The ProRAW format is designed to maximize three properties: compatibility, quality, and look.

Let's discuss each of these in some more detail.

In order to ensure compatibility, the ProRAW files are contained in a standard Adobe DNG file.

The linearized DNG file format is supported by Apple apps such as Photos, Adobe apps such as Lightroom, and many others.

Many apps get support automatically via the system frameworks ImageIO and Core Image.

Earlier versions of iOS and macOS also have basic support for the format.

And it is also worth mentioning that the files can contain full resolution, JPEG-quality prerendered previews.

All ProRAWs captured by the Camera app contain these previews, which look identical to the image as it would have been captured without ProRAW mode enabled.

The quality of a ProRAW file is quite impressive.

The pixels in the DNG are scene-referred and linearizable. They may be generated from multiple demosaiced exposures combined with image fusion, and they are stored as losslessly compressed 12-bit RGB. We create these bits through an adaptive companding curve, which lets us achieve up to 14 stops of dynamic range.
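To put those numbers in perspective, dynamic range in stops is the base-2 logarithm of the ratio between the brightest and darkest values an encoding can represent. The arithmetic below is only an illustration of that definition; it does not describe Apple's companding curve, which is adaptive and not specified here.

$$
D = \log_2\!\left(\frac{L_{\max}}{L_{\min}}\right), \qquad D = 14 \;\Rightarrow\; \frac{L_{\max}}{L_{\min}} = 2^{14} = 16384
$$

$$
\text{linear 12-bit codes } 1 \ldots 4095: \quad \log_2\!\left(\frac{4095}{1}\right) \approx 11.9996 < 14
$$

A plain linear 12-bit encoding therefore tops out just short of 12 stops, which is why a companding curve is needed to fit up to 14 stops into 12 stored bits.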

The resulting files can range from 10 to 40 megabytes, with the exact size depending on the content of each scene.

Apple goes to great effort tuning the quality of our images.

ProRAW images have a default look that is consistent with the look of our HEICs and JPEGs.

This is achieved by embedding special tags in the DNG file.

These tags are documented in the DNG spec and provide the recipe for how to render the default look of each image.

The first tag applied is the LinearizationTable which decompands the 12-bit stored data to linear scene values.

We use the BaselineExposure tag because ProRAW images adapt to the dynamic range of the scene.

The BaselineSharpness tag allows us to specify how much edge sharpening to apply by default.

The ProfileGainTableMap tag, which is new in the DNG 1.6 spec, allows us to describe how to adjust the bright and shadow regions.

Lastly, the ProfileToneCurve tag specifies the global output tone curve.
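The LinearizationTable step is conceptually a per-sample table lookup: each stored, companded value is used as an index into the table, and the entry found there is the linear value. The Swift sketch below illustrates only that lookup. The function name `decompand`, the toy table, and the choice to clamp out-of-range samples to the last entry are assumptions made for illustration; in practice, Core Image and ImageIO read and apply the real table from the DNG for you.

```swift
/// Hypothetical helper: maps companded stored samples to linear values
/// through a DNG-style LinearizationTable lookup.
func decompand(_ stored: [UInt16], table: [UInt16]) -> [UInt16] {
    precondition(!table.isEmpty, "LinearizationTable must not be empty")
    return stored.map { sample in
        // Samples past the end of the table are clamped to the last entry
        // (an assumption of this sketch, not a statement about ProRAW files).
        let index = min(Int(sample), table.count - 1)
        return table[index]
    }
}

// Usage with a toy table that squares its index:
let linear = decompand([0, 2, 3, 7], table: [0, 1, 4, 9])
```

Because the table is applied per sample, the stored data can use fewer, more perceptually uniform code values while the decoder still recovers linear scene values.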

All of these tags are unique to each image.

Because they provide the recipe and not the cake, the image remains highly editable.

For example, the default sharpness, or tone curves, can be altered at any time.

Lastly, when present in the scene, we store semantic masks in the ProRAW for regions such as people, skin, and sky.

So now that we know what makes up a ProRAW, I would like to introduce Tuomas who will describe how to capture them.

Tuomas Viitanen: Thank you, David.

My name is Tuomas Viitanen.

I'm an engineer in the Camera Software team, and I will guide you through how you can capture Apple ProRAWs with your application.

AVFoundation Capture APIs provide you with access to the camera on iOS and macOS.

They allow you to stream live preview and video, capture photos, and record movies, among many other things.

And as a recent addition, we added support to capture photos in the new Apple ProRAW DNG format.

Good sources of information on the basic use of the AVFoundation Capture APIs are the “Advances in iOS Photography” talk from WWDC 2016, which explains how to use AVCapturePhotoOutput, and the AVCam sample code, which is an example of a simple camera capture application.

Next, we'll look at the Apple ProRAW capture in detail.

But first, let's take a quick look at where Apple ProRAW is supported.

A good starting point for that is to do a comparison with the existing Bayer RAW support.

Bayer RAW was introduced in iOS 10 and is supported on a wide range of devices.

Apple ProRAW was introduced in iOS 14.3 and is supported on iPhone 12 Pro and iPhone 12 Pro Max.

Apple's own Camera application supports Apple ProRAW capture with all fusion captures, like Deep Fusion and Night mode, as well as with flash captures.

Apple ProRAW allows photo-quality prioritization to be set to .balanced and .quality, which lets your RAW captures benefit from Apple image fusion.

Bayer RAW is only supported on single-camera AVCaptureDevices, like wide and tele, whereas Apple ProRAW is supported on all devices, including dual wide-, dual-, and triple-camera devices that seamlessly switch between cameras when zooming.

Similar to Bayer RAW, Apple ProRAW supports neither depth data delivery nor content-aware distortion correction.

Live Photo capture is supported only with Bayer RAW, whereas portrait effects mattes and semantic segmentation skin and sky mattes are supported only with ProRAW.

So, let's go through how you can capture Apple ProRAWs.

The items we cover are: how to set up your capture device and capture session, how to set up your photo output, how to prepare your photo settings for the ProRAW capture, and what options you have after you capture a ProRAW photo.

Let's start with setting up the session.

Apple ProRAW is only supported on the formats that support the highest quality photos.

You get such a format by setting the capture session's sessionPreset to .photo.

Or if you would like to select the device format manually, you can find the formats that support the highest quality stills.

Note that this is different from high quality stills, which is supported on a broader set of formats.

And then simply configure the desired format to be the activeFormat of the device.

In this example and later, I'm simply using the first index from the list of supported items, but you'll want to replace this with a selection that's based on criteria that works best for your application.

Now, let's look at how you can configure the photo output for ProRAW capture.

First, you need to enable ProRAW on the photoOutput.

Be sure to do that before starting the session; otherwise, it will cause a lengthy pipeline reconfiguration.

Setting this property to true prepares the capture pipeline for ProRAW captures, and adds the ProRAW pixel formats to the list of supported RAW photo pixel format types.

If you like, you can also indicate to the capture pipeline whether you prefer speed or quality with your captures, or if you just like a balance between the two.

More information on how photo-quality prioritization affects captured photos can be found in the “Capture high-quality photos using video formats” talk.
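Putting the session and photo output steps together, here is a minimal sketch of the ordering described above: configure the session, attach the output, enable ProRAW, and only then start running. The function name `startProRAWSession` and its parameters are hypothetical, error handling is reduced to comments, and it assumes iOS 14.3 or later on a device that supports ProRAW.

```swift
import AVFoundation

/// Hypothetical helper: attaches `device` and `photoOutput` to `session`,
/// enables ProRAW, and only then starts the session.
func startProRAWSession(_ session: AVCaptureSession,
                        device: AVCaptureDevice,
                        photoOutput: AVCapturePhotoOutput) throws {
    session.beginConfiguration()
    session.sessionPreset = .photo

    let input = try AVCaptureDeviceInput(device: device)
    guard session.canAddInput(input), session.canAddOutput(photoOutput) else {
        // handle failure to add the input or output
        session.commitConfiguration()
        return
    }
    session.addInput(input)
    session.addOutput(photoOutput)

    // ProRAW support depends on the session configuration,
    // so it is queried after the output has been added.
    photoOutput.isHighResolutionCaptureEnabled = true
    photoOutput.isAppleProRAWEnabled = photoOutput.isAppleProRAWSupported
    photoOutput.maxPhotoQualityPrioritization = .quality
    session.commitConfiguration()

    // Start only after ProRAW is enabled, to avoid a pipeline reconfiguration.
    // startRunning() blocks, so call this function off the main thread.
    session.startRunning()
}
```

Keeping all configuration inside one beginConfiguration()/commitConfiguration() pair means the pipeline is built once, already prepared for ProRAW.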

Next, we'll look at how you can prepare for the capture.

To be able to create photo settings for your ProRAW capture, you need to select a correct RAW pixel format.

You get the supported RAW pixel formats from the same availableRawPhotoPixelFormatTypes property that gives you the supported Bayer RAW formats.

You can distinguish the ProRAW formats from the Bayer RAW formats using the new isAppleProRAWPixelFormat class method on AVCapturePhotoOutput.

An example of a ProRAW pixel format is 'l64r', a 16-bit full-range RGBA pixel format.

For reference, you can also query whether a format is a Bayer RAW format using isBayerRAWPixelFormat class method.
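Pixel formats such as 'l64r' are four-character codes packed into a 32-bit integer (an OSType), which is the kind of value you find in availableRawPhotoPixelFormatTypes. If you want to log or compare these values in readable form, a small helper like the following can convert in both directions. The helper names are hypothetical and not part of AVFoundation.

```swift
/// Hypothetical helper: packs a four-character code such as "l64r"
/// into a 32-bit value, most significant byte first.
func fourCharCode(_ code: String) -> UInt32? {
    let bytes = Array(code.utf8)
    guard bytes.count == 4 else { return nil }
    return bytes.reduce(UInt32(0)) { ($0 << 8) | UInt32($1) }
}

/// Hypothetical helper: unpacks a 32-bit four-character code into a string.
func fourCharString(_ value: UInt32) -> String {
    let bytes = [24, 16, 8, 0].map { shift in
        UInt8(truncatingIfNeeded: value >> UInt32(shift))
    }
    return String(decoding: bytes, as: UTF8.self)
}
```

For example, passing the proRawPixelFormat selected in the snippet for this step to `fourCharString` would give you a readable name to log.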

Next, we'll go through some of the options you have for capturing Apple ProRAW and, optionally, a fully rendered processed photo along with it.

The first and simplest option of capturing ProRAW is to request only the ProRAW photo.

To do that, you can simply specify the rawPixelFormatType when creating the photoSettings.

This gives you a single asset that is simple to work with and to store in the photo library, as Matt will show you later.

It's also possible to capture a pair consisting of a processed photo and a ProRAW, similar to what you can do with Bayer RAW, by also providing a processedFormat for the photoSettings.

But this requires you to work with multiple assets, and it's also harder to keep track of them in the photo library.

Luckily, Apple ProRAW supports an embedded JPEG thumbnail of up to full resolution, and in many cases this gives you the best of the previous two options.

To request a thumbnail, select the desired codec type from availableRawEmbeddedThumbnailPhotoCodecTypes and specify the desired thumbnail dimensions.

Now you're almost ready to capture a ProRAW photo.

But before that, if you like, you can specify the photo-quality prioritization for this capture.

Note that the value you specify here must be less than or equal to the maxPhotoQualityPrioritization you specified previously on the photoOutput.
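One way to respect that constraint is to clamp the per-capture value against the output's maximum before assigning it. This is a sketch that assumes the QualityPrioritization raw values increase from .speed through .balanced to .quality; the helper name `clampedPrioritization` is hypothetical.

```swift
import AVFoundation

/// Hypothetical helper: lowers `requested` to `maximum` when it is higher,
/// so photoQualityPrioritization never exceeds maxPhotoQualityPrioritization.
func clampedPrioritization(
    _ requested: AVCapturePhotoOutput.QualityPrioritization,
    maximum: AVCapturePhotoOutput.QualityPrioritization
) -> AVCapturePhotoOutput.QualityPrioritization {
    // Raw values grow with quality: .speed < .balanced < .quality
    requested.rawValue <= maximum.rawValue ? requested : maximum
}
```

In the capture code, you could then write `photoSettings.photoQualityPrioritization = clampedPrioritization(.quality, maximum: photoOutput.maxPhotoQualityPrioritization)`.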

You can also request a preview image of up to display size if you would like to have something to show users quickly after the capture.

And then you can capture the ProRAW photo using the same capturePhoto method that's used for all other still captures as well.

Now that the still capture is on its way, let's take a look at what you can do when the requested photo is ready.

When the photo is fully processed, your didFinishProcessingPhoto delegate will be invoked with an AVCapturePhoto representing the ProRAW photo.

If you requested a preview image for the capture, you can now get a pixel buffer containing the uncompressed preview image, or a CGImage representation of it, to show on the display.

And in case you requested a processed photo along with the ProRAW, your didFinishProcessingPhoto delegate will be invoked twice.

You can distinguish the ProRAW from the processed photo using isRawPhoto property on the received AVCapturePhoto.

And then you can simply request the fileDataRepresentation for the ProRAW to save the DNG to the photo library.

Or optionally, get a pixel buffer with the RAW pixel data if you prefer to work with that.

Like David mentioned earlier, the ProRAW DNG may include semantic segmentation mattes.

The inclusion of these mattes is automatic and scene dependent.

While the mattes are currently not available through the AVFoundation Capture APIs, you can retrieve them through the Core Image and ImageIO APIs, as David will show you later.

And finally, we'll look at some customization options you have with the ProRAW DNG.

You can customize the ProRAW by implementing the methods of the AVCapturePhotoFileDataRepresentationCustomizer protocol.

By implementing replacementAppleProRAWCompressionSettings, you can change the compression bit depth and quality of the ProRAW photo.

The Apple ProRAW is encoded losslessly by default using 12 bits with a companding curve.

You can change the bit depth of the losslessly compressed ProRAW by keeping the quality level at 1 and providing the desired bit depth with the customizer.

This saves storage space but still keeps the high quality of the ProRAW photo.

In case you prefer a lossy compression, you can set the quality level to below 1, in which case ProRAW is compressed automatically using 8-bit lossy compression.

This gives you a small file size, but does have a clear impact on the quality of the ProRAW photo.
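To see why a lower bit depth saves space, consider the size of the pixel payload before compression. The sketch below assumes an example 4032 × 3024 frame with three channels. Real ProRAW files are losslessly compressed, so their actual sizes are smaller and scene dependent, but the bit-depth saving scales the same way. The function name is hypothetical.

```swift
/// Hypothetical helper: size in bytes of an uncompressed pixel payload,
/// before any lossless or lossy compression is applied.
func uncompressedPayloadBytes(width: Int, height: Int,
                              channels: Int, bitsPerSample: Int) -> Int {
    // Round up to whole bytes
    (width * height * channels * bitsPerSample + 7) / 8
}

let twelveBit = uncompressedPayloadBytes(width: 4032, height: 3024, channels: 3, bitsPerSample: 12)
let tenBit = uncompressedPayloadBytes(width: 4032, height: 3024, channels: 3, bitsPerSample: 10)
```

With these assumptions, 12 bits per sample gives 54,867,456 bytes before compression, and 10 bits gives 45,722,880 bytes, which is 10/12 of the original payload.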

Now that you have implemented the customizer, you can request customization for the captured ProRAW DNG in your didFinishProcessingPhoto delegate.

By creating the customizer and providing that for fileDataRepresentation, you will get the customized ProRAW back with the compression settings you just provided.

That was the introduction to the Apple ProRAW capture using AVFoundation Capture APIs.

I'll hand it over to Matt who will tell you more about Apple ProRAW and photo library.

Matt?

Matt Dickoff: Thanks, Tuomas! Hello! My name's Matt Dickoff, and I'm an engineer on the Photos team.

I'll be walking you through how to first save the ProRAW file you've just captured as a complete asset in the photo library.

Then, we'll step through how to fetch RAWs that already exist in the photo library.

PhotoKit is a pair of frameworks used to interact with the photo library on Apple platforms.

It was first introduced in these releases and has seen improvements and additions since then.

Notably, it has support for RAW image formats like Apple ProRAW.

Today I'll only be covering the specific APIs that deal with RAWs in the photo library.

If you still have questions about PhotoKit after this presentation, I encourage you to take a look at the online developer documentation.

In order to save a new asset, we'll need to perform changes on the shared PHPhotoLibrary.

As with saving any asset, the change we'll be making is a PHAssetCreationRequest, and we'll be adding our Apple ProRAW file to this asset as the primary .photo PHAssetResource.

So let's see what the code for that looks like.

First, we start by performing changes on the shared photo library.

We'll simply make a PHAssetCreationRequest and then add the Apple ProRAW file to it as the primary photo resource.

And that's it! Just handle the success and error as your application sees fit.

Now that we know how to add assets to the photo library, let's take a look at how we can fetch them back, including RAW assets that the user may already have in their library.

I'm happy to say that in iOS 15, we've provided a new PHAssetCollectionSubtype, .smartAlbumRAW.

With this smart album, you can easily get a collection that contains all the assets in the photo library that have a RAW resource.

This album is the same RAW album that you see in the Apple Photos app.
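Fetching the smart album gives you the collection itself; to get the assets inside it, you can fetch from that collection. This is a minimal sketch that assumes the app already has read access to the photo library. The function name `fetchRAWAlbumAssets` and the newest-first sort order are illustrative choices, not requirements.

```swift
import Photos

/// Hypothetical helper: returns the assets in the RAW smart album (iOS 15 or later),
/// newest first, or an empty array if the album is unavailable.
func fetchRAWAlbumAssets() -> [PHAsset] {
    let collections = PHAssetCollection.fetchAssetCollections(
        with: .smartAlbum, subtype: .smartAlbumRAW, options: nil)
    guard let rawAlbum = collections.firstObject else { return [] }

    // Sort by creation date, most recent first
    let options = PHFetchOptions()
    options.sortDescriptors = [NSSortDescriptor(key: "creationDate", ascending: false)]
    let fetchResult = PHAsset.fetchAssets(in: rawAlbum, options: options)

    var assets: [PHAsset] = []
    fetchResult.enumerateObjects { asset, _, _ in assets.append(asset) }
    return assets
}
```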

Now that we have some PHAssets with RAW resources, let's take a look at what it takes to actually get at those resources.

First, we'll want to get all the PHAssetResources for a PHAsset.

Next, we'll be looking for two different types of resource: the .photo we talked about previously, and additionally, .alternatePhoto.

I want to take a brief moment to explain what .alternatePhoto is.

Some photo libraries will contain assets that follow the old RAW plus JPEG storage model that some DSLR cameras use.

This requires storing the RAW and a JPEG as two distinct resources; the JPEG as the photo and the RAW as the alternate photo.

This leads to an increased file footprint in the photo library, less portability, and often a confusing user experience.

This model is not recommended for Apple ProRAW.

If your intent is to save a ProRAW file to the photo library, you should use the capture settings to embed a full-size JPEG preview in the file.

Now, back to our resources.

We'll be checking the uniformTypeIdentifier of the resource to see if it conforms to a RAW type that we are interested in.

And finally, once we have a RAW resource, we'll use the PHAssetResourceManager to retrieve the actual data.

Now let's take a look at that in code.

First, we iterate through all the PHAssetResources on an asset, look for any that are .photo or .alternatePhoto type, check that they conform to the RAW image UTType, and finally use the PHAssetResourceManager to request the data for that resource.

And that's it! You now know how to use PhotoKit to both save and retrieve assets with RAW resources from the photo library.

Now I'm going to hand it back to David who will talk about how best to edit and display Apple ProRAW images.

David: Thank you, Matt.

The last section of our talk today will be about how to use the Core Image framework to edit and display ProRAW images.

I will describe how to create CIImages from a ProRAW file; how to apply the most common user adjustments; how you can turn off the default look to get linear, scene-referred values; how to render edits to output-referred formats such as HEIC; and finally, how to display ProRAWs on a Mac with an Extended Dynamic Range display.

Getting a preview image from a ProRAW is possible using the CGImageSourceCreateWithURL and CGImageSourceCreateThumbnailAtIndex APIs.

New in iOS 15 and macOS 12, Core Image has added a new convenience API to access the preview.

Getting semantic segmentation mattes such as person, skin, and sky is supported by passing the desired option key when initializing the CIImage.

This too has a new and more convenient API to access the optional matte images.

Most importantly, your application will want to get the primary ProRAW image.

If your application just wants to show the default rendering look, all you need to do is create an immutable CIImage from a URL or data.

However, if your application wants to unlock the full editability of ProRAWs, then create a CIFilter from the URL.

If you just ask that filter for its outputImage, you will get a CIImage with a default look.

The key advantage of this API is that the filter can be easily modified.

The RAW CIFilter has several inputs that your application can change to produce a new outputImage.

The basic code pattern is to set a value object for one of the documented key strings.

This setValue(_:forKey:) API was due for an update, though.

So new in iOS 15 and macOS 12, we have created a more discoverable and Swift-friendly API.

To use the new API, create a CIRAWFilter instance and then just set the desired property to a new value.

Next, let’s discuss what properties you might want to change.

Given the dynamic range of ProRAW images, one of the most important controls to provide is exposure.

You can set this value to any float.

For example, set it to positive 1 to make the image twice as bright or negative 1 to make it half as bright.
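In linear, scene-referred terms, an exposure change of EV stops is a multiplication by 2^EV, which is where "twice as bright" and "half as bright" come from. The sketch below shows only that arithmetic on an array of linear values; it is not how CIRAWFilter implements its exposure property internally, and `applyExposure` is a hypothetical name.

```swift
import Foundation

/// Hypothetical helper: scales linear, scene-referred values by 2^ev.
/// ev = +1 doubles each value; ev = -1 halves it.
func applyExposure(_ linear: [Double], ev: Double) -> [Double] {
    let gain = pow(2.0, ev)
    return linear.map { $0 * gain }
}

let brighter = applyExposure([0.25, 0.5], ev: 1)   // one stop up
let darker = applyExposure([0.25, 0.5], ev: -1)    // one stop down
```

Because the adjustment is a single multiplication in linear space, it behaves like changing the exposure at capture time, as long as the values were not clipped.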

Because ProRAWs are edited in a linear scene-referred space, adjusting the image white balance is more effective.

The scene neutral can be specified as a temperature in kelvins, or as an x/y chromaticity coordinate.
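Both ways of specifying the neutral correspond to CIRAWFilter properties. A minimal sketch, assuming iOS 15 or macOS 12; the helper names are hypothetical, and the CIE D65 white point (x = 0.3127, y = 0.3290) is used only as an example value.

```swift
import CoreImage

/// Hypothetical helper: sets the scene neutral as a color temperature plus tint.
func setNeutral(_ rawFilter: CIRAWFilter, temperature: Float, tint: Float) {
    rawFilter.neutralTemperature = temperature  // in kelvins
    rawFilter.neutralTint = tint
}

/// Hypothetical helper: sets the scene neutral as a CIE 1931 x/y chromaticity.
func setNeutral(_ rawFilter: CIRAWFilter, chromaticity: CGPoint) {
    rawFilter.neutralChromaticity = chromaticity
}

// Example value: the D65 white point
let d65 = CGPoint(x: 0.3127, y: 0.3290)
```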

The default sharpness of a ProRAW look can be adjusted to any value from zero to one.

And the strength of the ProRAW local tone map can be adjusted to any value from zero to one.

The local tone map allows for each region of the image to have its own optimized tone curve.

Here is an example of a ProRAW image with the local tone map turned fully off...

and at half strength...

and fully on.

In this image, the local tone curves bring up the darker regions and bring down the brighter areas so as to present the best possible result on a Standard Dynamic Range display.

Next, let’s discuss how your application can get direct access to the 14 stops of linear scene-referred data.

Most users will prefer the common adjustments I described earlier, but access to linear data can be very useful for certain application cases.

To get a linear image, you just need to turn off the filter inputs that apply the default look to the image.

Set baselineExposure, shadowBias, boostAmount, and localToneMapAmount to 0, and disable gamut mapping before getting the outputImage.

Once you have the linearRAWImage, you can use it as an input to other Core Image filters to perform scene-referred computations.

For example, you could use the built-in Core Image histogram filter to calculate statistics.

Or you may want to render the linear image to get its pixel data.

To do this, create a CIRenderDestination for mutable RGBA half-float data, tell the render destination to use a linear colorSpace of your choice, and then have a CIContext start a task to render the rawFilter’s output image.

Here is an example of ProRAW with the default look in comparison to the as-captured linear scene-referred image.

The linear image will look flat and underexposed, but it still contains the full and unclipped 14 stops of data from the scene.

This pair of images also provides a good illustration of the difference between an output-referred image and a scene-referred image.

In the output-referred default look, the area of the image with the maximum luma is in the sunset on the left.

But this sky area on the right has a luma that is 80 percent as bright as that of the sunset.

While the default image looks great, the luma values do not represent the reality of the scene.

For the linear scene-referred image, the sky on the right of the image has a maximum radiance of just 12 percent of the radiance of the sunset, which is more physically logical.

This is why the linear scene-referred image is so important for image processing and analysis.
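Expressed in stops, the two ratios from this comparison show how much the default look compresses the scene. Using the percentages quoted above:

$$
\text{scene-referred: } \log_2(0.12) \approx -3.06 \text{ stops}, \qquad
\text{output-referred: } \log_2(0.80) \approx -0.32 \text{ stops}
$$

In other words, a difference of roughly three stops in the scene is rendered as about a third of a stop in the default look.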

After your application has made changes to the ProRAW CIFilter properties, you may want to save the image to an output-referred format such as HEIC or JPEG.

Here’s an example of how to save the rawFilter output image to an 8-bit deep HEIC file.

With this API, you can specify the output colorSpace to use, though we recommend displayP3.

It is also possible to use the options dictionary to save the semantic masks into the HEIC.

Also, because ProRAWs have extra precision, you might consider using this new Core Image API to save to 10-bit deep HEIC files.

So far, all of the adjustments I’ve described produce an output image that is tone-mapped and gamut-mapped to the Standard Dynamic Range.

This is commonly abbreviated as “SDR”.

The next topic I’d like to discuss is how you can present the dynamic range of ProRAW images in full glory on an Extended Dynamic Range display.

Many Mac displays -- ranging from the MacBook Pros to iMacs to the Pro Display XDR -- can present EDR content using a MetalKit View.

To display a ProRAW CIImage, we recommend using a MetalKit View subclass for best performance.

Your MetalKit View subclass should create a CIContext and an MTLCommandQueue for the view.

For important details on how to get the best performance with Core Image in a MetalKit View, please review our presentation from last year on the subject.

If your Mac display supports EDR, set the view’s colorPixelFormat to rgba16Float and set its Metal layer’s wantsExtendedDynamicRangeContent to true.

Then, when it is time for your view subclass to draw, set rawFilter.extendedDynamicRangeAmount to 1 and tell the Core Image context to start a task to render the outputImage.
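To decide whether to take the EDR path at all, your app needs to know whether the display can show values above SDR white. A minimal sketch, assuming macOS 10.15 or later and using NSScreen's maximumPotentialExtendedDynamicRangeColorComponentValue; the helper name is hypothetical.

```swift
import AppKit

/// Hypothetical helper: true if `screen` can display values brighter than SDR white.
func screenSupportsEDR(_ screen: NSScreen?) -> Bool {
    guard let screen = screen else { return false }
    // 1.0 corresponds to SDR white; a larger potential headroom means EDR is possible.
    return screen.maximumPotentialExtendedDynamicRangeColorComponentValue > 1.0
}
```

For example, a view could call `screenSupportsEDR(window?.screen)` before choosing rgba16Float and enabling wantsExtendedDynamicRangeContent, and fall back to the SDR rendering otherwise.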

Looking at a ProRAW image on an EDR display is really something one needs to see in person to fully appreciate.

But let me try to approximate what it looks like.

Here, the SDR image is shown, while this shows what that same ProRAW file will feel like in EDR.

The bright areas and specular highlights are no longer restricted by tone mapping, so they will be visible in full glory.

This concludes our presentation on Apple ProRAW.

Today we have described in detail the design and contents of the file format as well as how you can capture, store, and edit these images.

I look forward to seeing how your applications can unlock the full potential of these images.

♪


7:52 - Setting up device and session

```swift
import AVFoundation

// Use the .photo preset
private let session = AVCaptureSession()

private func configureSession() {
    session.beginConfiguration()
    session.sessionPreset = .photo
    //...
}
```

8:03 - Setting up device and session

```swift
// Or optionally find a format that supports highest quality photos
// (`device` is the AVCaptureDevice being configured)
guard let format = device.formats.first(where: { $0.isHighestPhotoQualitySupported }) else {
    // handle failure to find a format that supports highest quality stills
    return
}
do {
    try device.lockForConfiguration()
    device.activeFormat = format
    // ...
    device.unlockForConfiguration()
} catch {
    // handle the exception
}
```

8:39 - Setting up photo output 1

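```swift
// Enable ProRAW on the photo output
private let photoOutput = AVCapturePhotoOutput()

private func configurePhotoOutput() {
    photoOutput.isHighResolutionCaptureEnabled = true
    // isAppleProRAWSupported depends on the session configuration,
    // so set this after the output has been added to the session
    photoOutput.isAppleProRAWEnabled = photoOutput.isAppleProRAWSupported
    //...
}
```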

8:59 - Setting up photo output 2

```swift
// Select the desired photo quality prioritization
private let photoOutput = AVCapturePhotoOutput()

private func configurePhotoOutput() {
    photoOutput.isHighResolutionCaptureEnabled = true
    photoOutput.isAppleProRAWEnabled = photoOutput.isAppleProRAWSupported
    photoOutput.maxPhotoQualityPrioritization = .quality // or .speed, .balanced
    //...
}
```

9:26 - Prepare for ProRAW capture 1

```swift
// Find a supported ProRAW pixel format
guard let proRawPixelFormat = photoOutput.availableRawPhotoPixelFormatTypes.first(
    where: { AVCapturePhotoOutput.isAppleProRAWPixelFormat($0) }) else {
    // Apple ProRAW is not supported with this device / format
    return
}
// For Bayer RAW pixel formats, use AVCapturePhotoOutput.isBayerRAWPixelFormat()
```

10:09 - Prepare for ProRAW capture 2

```swift
// Option A: photo settings for a ProRAW-only capture
let photoSettings = AVCapturePhotoSettings(rawPixelFormatType: proRawPixelFormat)

// Option B (use instead of option A): photo settings for a processed photo + ProRAW capture.
// It has a different name here only so both options can sit side by side.
guard let processedPhotoCodecType = photoOutput.availablePhotoCodecTypes.first else {
    // handle failure to find a processed photo codec type
    return
}
let pairedPhotoSettings = AVCapturePhotoSettings(rawPixelFormatType: proRawPixelFormat,
                                                 processedFormat: [AVVideoCodecKey: processedPhotoCodecType])
```

10:53 - Prepare for ProRAW capture 3

```swift
// Select a supported thumbnail codec type and thumbnail dimensions
guard let thumbnailPhotoCodecType =
        photoSettings.availableRawEmbeddedThumbnailPhotoCodecTypes.first else {
    // handle failure to find an available thumbnail photo codec type
    return
}
let dimensions = device.activeFormat.highResolutionStillImageDimensions
photoSettings.rawEmbeddedThumbnailPhotoFormat = [
    AVVideoCodecKey: thumbnailPhotoCodecType,
    AVVideoWidthKey: dimensions.width,
    AVVideoHeightKey: dimensions.height]
```

11:08 - Prepare for ProRAW capture 4

```swift
// Select the desired quality prioritization for the capture
photoSettings.photoQualityPrioritization = .quality // or .speed, .balanced

// Optionally, request a preview image
if let previewPixelFormat = photoSettings.availablePreviewPhotoPixelFormatTypes.first {
    photoSettings.previewPhotoFormat = [kCVPixelBufferPixelFormatTypeKey as String: previewPixelFormat]
}

// Capture!
photoOutput.capturePhoto(with: photoSettings, delegate: delegate)
```

11:44 - Consuming captured ProRAW 1

```swift
func photoOutput(_ output: AVCapturePhotoOutput,
                 didFinishProcessingPhoto photo: AVCapturePhoto,
                 error: Error?) {
    guard error == nil else {
        // handle failure from the photo capture
        return
    }
    if let preview = photo.previewPixelBuffer {
        // or use photo.previewCGImageRepresentation()
        // display the preview
    }
    if photo.isRawPhoto {
        guard let proRAWFileDataRepresentation = photo.fileDataRepresentation() else {
            // handle failure to get ProRAW DNG file data representation
            return
        }
        guard let proRAWPixelBuffer = photo.pixelBuffer else {
            // handle failure to get ProRAW pixel data
            return
        }
        // use the file or pixel data
    }
}
```

12:52 - Consuming captured ProRAW 2

```swift
import AVFoundation

// Provide settings for lossless compression with fewer bits
class AppleProRAWCustomizer: NSObject, AVCapturePhotoFileDataRepresentationCustomizer {
    func replacementAppleProRAWCompressionSettings(for photo: AVCapturePhoto,
                                                   defaultSettings: [String: Any],
                                                   maximumBitDepth: Int) -> [String: Any] {
        // A quality of 1.0 keeps the compression lossless; only the bit depth is reduced
        return [AVVideoAppleProRAWBitDepthKey: min(10, maximumBitDepth),
                AVVideoQualityKey: 1.00]
    }
}
```

13:35 - Consuming captured ProRAW 3

```swift
import AVFoundation

// Provide settings for lossy compression
class AppleProRAWCustomizer: NSObject, AVCapturePhotoFileDataRepresentationCustomizer {
    func replacementAppleProRAWCompressionSettings(for photo: AVCapturePhoto,
                                                   defaultSettings: [String: Any],
                                                   maximumBitDepth: Int) -> [String: Any] {
        // A quality below 1.0 selects 8-bit lossy compression
        return [AVVideoAppleProRAWBitDepthKey: min(8, maximumBitDepth),
                AVVideoQualityKey: 0.90]
    }
}
```

13:51 - Consuming captured ProRAW 4

```swift
// Customizing the compression settings for the captured ProRAW photo
func photoOutput(_ output: AVCapturePhotoOutput,
                 didFinishProcessingPhoto photo: AVCapturePhoto,
                 error: Error?) {
    guard error == nil else {
        // handle failure from the photo capture
        return
    }
    if photo.isRawPhoto {
        let customizer = AppleProRAWCustomizer()
        guard let customizedFileData = photo.fileDataRepresentation(with: customizer) else {
            // handle failure to get customized ProRAW DNG file data representation
            return
        }
        // use the file data
    }
}
```

15:19 - Saving a ProRAW asset with PhotoKit

```swift
import Photos

PHPhotoLibrary.shared().performChanges {
    let creationRequest = PHAssetCreationRequest.forAsset()
    creationRequest.addResource(with: .photo, fileURL: proRawFileURL, options: nil)
} completionHandler: { success, error in
    // handle the success and possible error
}
```

15:45 - Fetching RAW assets from the photo library

```swift
// New enum case PHAssetCollectionSubtype.smartAlbumRAW
let rawAlbums = PHAssetCollection.fetchAssetCollections(with: .smartAlbum,
                                                        subtype: .smartAlbumRAW,
                                                        options: nil)
```

17:16 - Retrieving RAW resources from a PHAsset

```swift
import Photos
import UniformTypeIdentifiers

let resources = PHAssetResource.assetResources(for: asset)
for resource in resources {
    if resource.type == .photo || resource.type == .alternatePhoto {
        if let resourceUTType = UTType(resource.uniformTypeIdentifier) {
            if resourceUTType.conforms(to: UTType.rawImage) {
                let resourceManager = PHAssetResourceManager.default()
                resourceManager.requestData(for: resource, options: nil) { data in
                    // use the data (it may be delivered in several chunks)
                } completionHandler: { error in
                    // handle any error
                }
            }
        }
    }
}
```

18:28 - Getting CIImages from a ProRAW

```swift
import CoreImage
import ImageIO

// Getting the preview image
guard let isrc = CGImageSourceCreateWithURL(url as CFURL, nil),
      let cgimg = CGImageSourceCreateThumbnailAtIndex(isrc, 0, nil) else {
    return nil
}
return CIImage(cgImage: cgimg)
```

18:36 - Getting CIImages from a ProRAW 2 (New in iOS 15 and macOS 12)

```swift
// Getting the preview image
let rawFilter = CIRAWFilter(imageURL: url)
return rawFilter?.previewImage
```

18:44 - Getting CIImages from a ProRAW 3

```swift
// Getting the preview image
let previewImage = CIRAWFilter(imageURL: url)?.previewImage

// Getting segmentation matte images
let skinMatte = CIImage(contentsOf: url,
                        options: [.auxiliarySemanticSegmentationSkinMatte: true])
```

18:56 - Getting CIImages from a ProRAW 4

```swift
// Getting the preview image
let rawFilter = CIRAWFilter(imageURL: url)
let previewImage = rawFilter?.previewImage

// Getting segmentation matte images
let skinMatte = rawFilter?.semanticSegmentationSkinMatte
```

19:09 - Getting CIImages from a ProRAW 5

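```swift
// Getting the primary image with the default look
let defaultImage = CIImage(contentsOf: url, options: nil)

// Or create a RAW filter to get an editable image
let rawFilter = CIFilter(imageURL: url, options: nil)
let editableImage = rawFilter?.outputImage
```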

19:31 - Applying common user adjustments

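```swift
import CoreImage

// `key` and `value` are parameters added so the sketch compiles;
// pass any documented CIRAWFilterOption key with a matching value
func get_adjusted_raw_image(url: URL, key: CIRAWFilterOption, value: Any) -> CIImage? {
    // Load the image
    guard let rawFilter = CIFilter(imageURL: url, options: nil) else { return nil }
    // Change one or more filter inputs
    rawFilter.setValue(value, forKey: key.rawValue)
    // Get the adjusted image
    return rawFilter.outputImage
}
```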

19:54 - Applying common user adjustments 2

```swift
import CoreImage

func get_adjusted_raw_image(url: URL) -> CIImage? {
    // Load the image
    guard let rawFilter = CIRAWFilter(imageURL: url) else { return nil }
    // Change one or more filter inputs (exposure is just one example property)
    rawFilter.exposure = 1.0
    // Get the adjusted image
    return rawFilter.outputImage
}
```

20:17 - Applying common user adjustments 3

```swift
// Exposure
rawFilter.exposure = -1.0
// Temperature and tint
rawFilter.neutralTemperature = 6500 // in kelvins
rawFilter.neutralTint = 0.0
// Sharpness
rawFilter.sharpnessAmount = 0.5
// Local tone map strength
rawFilter.localToneMapAmount = 0.5
```

21:40 - Getting linear scene-referred output 1

```swift
// Turn off the filter inputs that apply the default look to the RAW
rawFilter.baselineExposure = 0.0
rawFilter.shadowBias = 0.0
rawFilter.boostAmount = 0.0
rawFilter.localToneMapAmount = 0.0
rawFilter.isGamutMappingEnabled = false
let linearRawImage = rawFilter.outputImage
```

22:00 - Getting linear scene-referred output 2

```swift
import CoreImage
import CoreImage.CIFilterBuiltins

// `linearRawImage` is assumed to be an unwrapped CIImage; `data` (NSMutableData),
// `imageWidth`, `imageHeight`, `rowBytes`, `context`, `rect`, and `point` come from your app

// Use the linear image with other filters
let histogram = CIFilter.areaHistogram()
histogram.inputImage = linearRawImage
histogram.extent = linearRawImage.extent

// Or render it to an RGBAh (half-float) buffer
let rd = CIRenderDestination(bitmapData: data.mutableBytes,
                             width: imageWidth, height: imageHeight,
                             bytesPerRow: rowBytes, format: .RGBAh)
rd.colorSpace = CGColorSpace(name: CGColorSpace.extendedLinearITUR_2020)
let task = try context.startTask(toRender: linearRawImage, from: rect, to: rd, at: point)
_ = try task.waitUntilCompleted()
```

23:54 - Saving edits to other file formats 1 (8-bit HEIC)

```swift
// Saving to 8-bit HEIC
try ciContext.writeHEIFRepresentation(of: rawFilter!.outputImage!,
                                      to: theURL,
                                      format: .RGBA8,
                                      colorSpace: CGColorSpace(name: CGColorSpace.displayP3)!,
                                      options: [:])
```

24:12 - Saving edits to other file formats 2 (10-bit HEIC)

```swift
// Saving to 10-bit HEIC (this API takes no pixel-format argument)
try ciContext.writeHEIF10Representation(of: rawFilter!.outputImage!,
                                        to: theURL,
                                        colorSpace: CGColorSpace(name: CGColorSpace.displayP3)!,
                                        options: [:])
```

24:51 - Displaying to a Metal Kit View in EDR on Mac

```swift
import MetalKit

class MyView: MTKView {
    var context: CIContext
    var commandQueue: MTLCommandQueue
    //... (initializers omitted)
}
```

25:13 - Displaying to a Metal Kit View in EDR on Mac

```swift
// Create a Metal Kit View subclass
class MyView: MTKView {
    var context: CIContext
    var commandQueue: MTLCommandQueue
    //...
}

// Init your Metal Kit View for EDR (inside the view's initializer)
colorPixelFormat = MTLPixelFormat.rgba16Float
if let caml = layer as? CAMetalLayer {
    caml.wantsExtendedDynamicRangeContent = true
    //...
}

// Ask the filter for an image designed for EDR and render it (inside draw)
rawFilter.extendedDynamicRangeAmount = 1.0
_ = try context.startTask(toRender: rawFilter.outputImage!, from: rect, to: rd, at: point)
```

-