
What’s new in camera capture

Learn how you can interact with Video Effects in Control Center including Center Stage, Portrait mode, and Mic modes. We'll show you how to detect when these features have been enabled for your app and explore ways to adopt custom interfaces to make them controllable from within your app. Discover how to enable 10-bit HDR video capture and take advantage of minimum-focus-distance reporting for improved camera capture experiences. Explore support for IOSurface compression and delivering optimal performance in camera capture.

To learn more about camera capture, we also recommend watching "Capture high-quality photos using video formats" from WWDC21.

Related Videos

WWDC22

- Bring Continuity Camera to your macOS app

- Discover advancements in iOS camera capture: Depth, focus, and multitasking


♪ Bass music playing ♪ Brad Ford: Hello and welcome to "What's new in camera capture." I'm Brad Ford from the Camera Software team. I'll be presenting a host of new camera features, beginning with minimum focus distance reporting; how to capture 10-bit HDR video; the main course, Video Effects in Control Center; and then -- a brief respite from new features -- I'll review strategies to get the best performance out of your camera app. And lastly, I'll introduce a brand-new performance trick for your bag, IOSurface compression.

All of the features I'll be describing today are found in the AVFoundation framework -- specifically, the classes prefixed with AVCapture. To briefly review, the main objects are AVCaptureDevices, which represent cameras or microphones; AVCaptureDeviceInputs, which wrap devices, allowing them to be plugged into an AVCaptureSession, which is the central control object of the AVCapture graph. AVCaptureOutputs render data from inputs in various ways. The MovieFileOutput records QuickTime movies. The PhotoOutput captures high-quality stills and Live Photos. Data outputs, such as the VideoDataOutput or AudioDataOutput, deliver video or audio buffers from the camera or mic to your app. There are several other kinds of data outputs, such as Metadata and Depth. For live camera preview, there's a special type of output, the AVCaptureVideoPreviewLayer, which is a subclass of CALayer. Data flows from the capture inputs to compatible capture outputs through AVCaptureConnections, represented here by these arrows. If you're new to AVFoundation camera capture, I invite you to learn more at the Cameras and Media Capture start page on developer.apple.com.

OK, let's dive right into the first of our new features. Minimum focus distance is the distance from the lens to the closest point where sharp focus can be attained. It's an attribute of all lenses, be they on DSLR cameras or smartphones. 
iPhone cameras have a minimum focus distance too; we've just never published it before... ...until now, that is. Beginning in iOS 15, minimum focus distance is a published property of iPhone autofocus cameras. Here's a sampling of recent iPhones. The chart illustrates how the minimum focus distance of the Wide and Telephoto cameras varies from model to model. There's a notable difference between the iPhone 12 Pro and 12 Pro Max Wide cameras, the Pro Max focusing at a minimum distance of 15 centimeters compared to 12 on the Pro. This is due to the sensor-shift stabilization technology in the iPhone 12 Pro Max. Likewise, the minimum focus distance of the Telephoto camera is farther on the 12 Pro Max than on the 12 Pro. This is due to the longer reach of its telephoto lens: it has a 2.5x versus a 2x zoom. Let me show you a quick demo of why minimum focus distance reporting is important.

Here's a sample app called AVCamBarcode. It showcases our AVFoundation barcode detection APIs. The UI guides the user to position an object inside of a rectangle for scanning. In this example, I've chosen a fairly small QR code on a piece of paper. The barcode is only 20 millimeters wide. By tapping on the Metadata button, I see a list of all the various object types supported by AVCaptureMetadataOutput. There are a lot of them. I'll choose QR codes and then position my iPhone 12 Pro Max camera to fill the rectangle with the QR code. Unfortunately, it's so small that I have to get very close to the page to fill the preview. That's closer than the camera's minimum focus distance. The code is blurry, so it doesn't scan. To guide the user to back away, I need to apply a zoom factor to the camera preview...

...like so. Seeing a zoomed image on the screen will prompt them to physically move the camera farther away from the paper. I can do that with a slider, but it would be much better if the app took care of the zoom automatically.

That's where the new minimumFocusDistance property of AVCaptureDevice comes in. It's new in iOS 15. Given the camera's horizontal field of view, the minimum barcode size you'd like to scan -- here I've set it to 20 millimeters -- and the width of the camera preview window as a percentage, we can do a little math to calculate the minimum subject distance needed to fill that preview width. Then, using the new minimumFocusDistance property of the camera, we can detect when our camera can't focus that close and calculate a zoom factor large enough to guide the user to back away. And finally, we apply it to the camera by locking it for configuration, setting the zoom factor, and then unlocking it. After recompiling our demo app, the UI now automatically applies the correct zoom amount.
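The zoom step can be isolated as a small pure function, which makes the arithmetic easy to check off-device. This is a minimal sketch, not the AVCamBarcode code: `requiredZoomFactor(minimumFocusDistanceMM:minimumSubjectDistanceMM:maxZoomFactor:)` and `applyMinimumFocusZoom(to:minimumSubjectDistanceMM:)` are hypothetical names. Both distances are assumed to be in millimeters (AVCaptureDevice reports minimumFocusDistance in millimeters, or -1 when it is unknown). The result is clamped because setting videoZoomFactor above the active format's videoMaxZoomFactor raises an exception.

```swift
import Foundation
#if os(iOS)
import AVFoundation
#endif

/// Hypothetical helper: the zoom factor that keeps the user from holding the
/// camera closer than it can focus. Both distances are in millimeters.
func requiredZoomFactor(minimumFocusDistanceMM: Float,
                        minimumSubjectDistanceMM: Float,
                        maxZoomFactor: Float) -> Float {
    // A non-positive focus distance (AVFoundation reports -1 for "unknown") means no zoom.
    guard minimumFocusDistanceMM > 0, minimumSubjectDistanceMM > 0 else { return 1 }
    // The camera can already focus at the subject distance, so no zoom is needed.
    guard minimumSubjectDistanceMM < minimumFocusDistanceMM else { return 1 }
    // Magnify by the ratio so the code only fills the preview once the user has
    // backed away to the minimum focus distance; clamp to the format's maximum.
    return min(minimumFocusDistanceMM / minimumSubjectDistanceMM, maxZoomFactor)
}

#if os(iOS)
/// Hypothetical wrapper that applies the zoom to a real device (compiled only on iOS here).
@available(iOS 15.0, *)
func applyMinimumFocusZoom(to device: AVCaptureDevice, minimumSubjectDistanceMM: Float) {
    let zoom = requiredZoomFactor(
        minimumFocusDistanceMM: Float(device.minimumFocusDistance),
        minimumSubjectDistanceMM: minimumSubjectDistanceMM,
        maxZoomFactor: Float(device.activeFormat.videoMaxZoomFactor))
    do {
        try device.lockForConfiguration()
        device.videoZoomFactor = CGFloat(zoom)
        device.unlockForConfiguration()
    } catch {
        print("Could not lock for configuration: \(error)")
    }
}
#endif
```

For example, with the 150 mm (15 cm) minimum focus distance quoted for the iPhone 12 Pro Max Wide camera and an illustrative computed subject distance of 50 mm, the helper returns a zoom factor of 3.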

As the app launches, it's already zoomed to the correct level. No more blurry barcodes! And if I tap on it, we can see where it takes me. Ah! "Capturing Depth in iPhone Photography" -- an oldie but a goodie. I deeply appreciate that session.

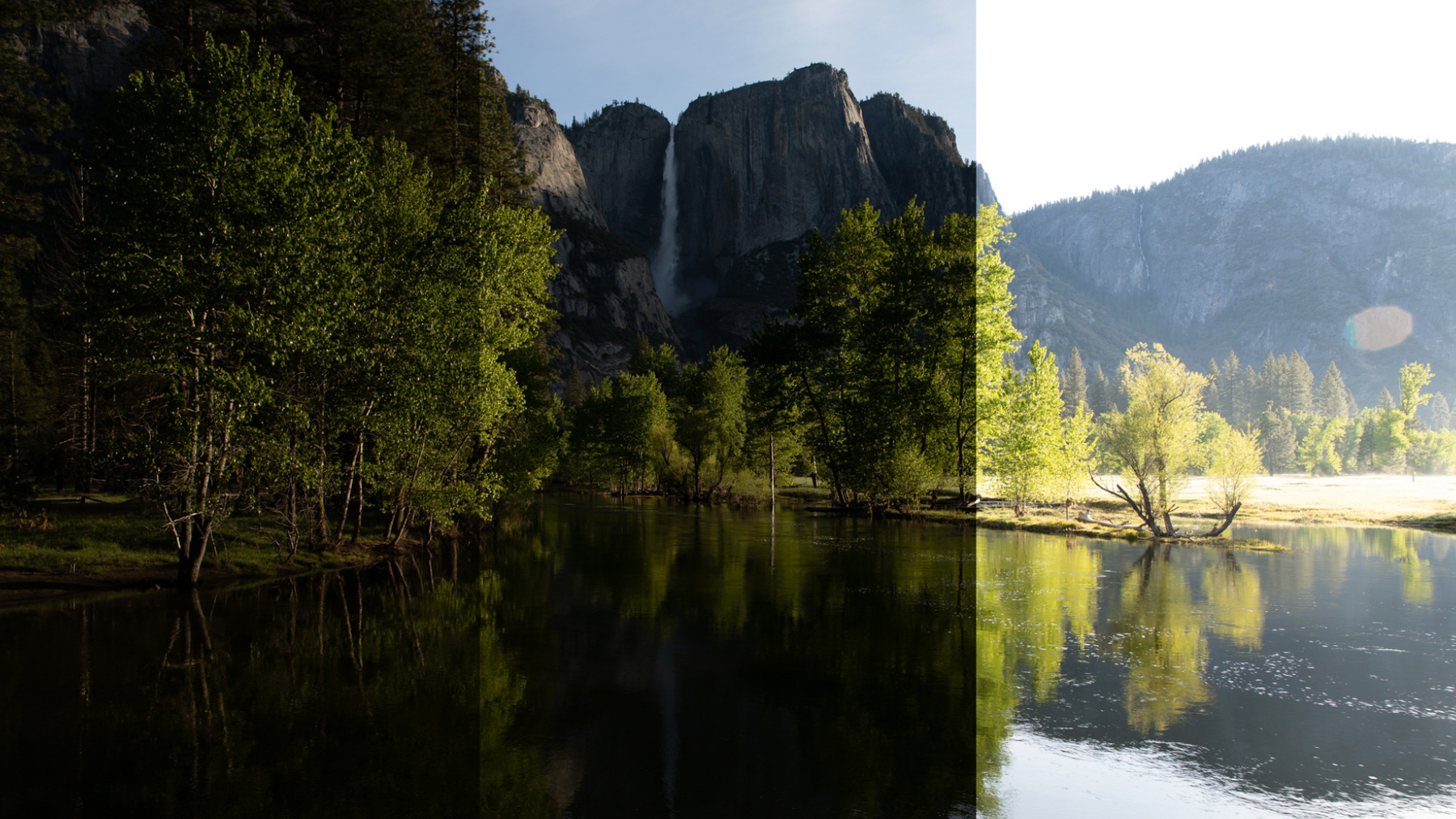

Please check out the new AVCamBarcode sample for best practices on how to incorporate minimum focus distance, as well as a lot of other best practices for scanning barcodes.

Next up is 10-bit HDR video. HDR stands for High Dynamic Range, and it's been around as a still image technology since way back in iOS 4.1. Preserving High Dynamic Range is usually accomplished by taking multiple exposures of a scene and then blending them to preserve both highlights and shadows. But what about video HDR? That's a challenge since you've got to deliver 30 or 60 frames a second. Not exactly video HDR -- but close -- in 2018, Apple introduced EDR, or Extended Dynamic Range, to the iPhone XS camera line. EDR is an HDR-like solution for video. It essentially doubles the capture frame rate, alternating between standard exposures and short exposures, but timed so there's virtually no vertical blanking between the captures. When nominally capturing 30 frames per second, EDR video is actually running the camera at 60 frames a second. When required by the scene, the tone map from the short (EV-) frame is dynamically applied to the standard (EV0) frame to recover clipped highlights, but without sacrificing detail in the shadows. It's not a full HDR solution, as its effectiveness diminishes in low light, but it provides amazing results in medium to good light.

Now here's the confusing part. EDR was presented as a suite of AVCaptureDevice properties under the moniker of videoHDR. Wherever you see videoHDRSupported or videoHDREnabled in the AVCapture API, you should mentally substitute EDR. That's what it is. AVCaptureDevice also has a property called automaticallyAdjustsVideoHDREnabled, which defaults to true. So EDR is enabled automatically whenever it's available. If for some reason you wish to disable it, you need to set automaticallyAdjustsVideoHDREnabled to false, and then set videoHDREnabled to false as well. Now the story gets even better. 
I needed to tell you about EDR so I could tell you about 10-bit HDR video. 10-bit HDR video is truly High Dynamic Range because it's got more bits! That means increased editability. It's got EDR for highlight recovery, and it's always on. It uses hybrid log-gamma (HLG) curves as well as the BT.2020 color space, allowing for an even wider range of colors, with brighter brights than Rec. 709. And whether you use AVCaptureMovieFileOutput or AVCaptureVideoDataOutput plus AVAssetWriter, we automatically insert per-frame Dolby Vision metadata into your movies, making them compatible with Dolby Vision displays. 10-bit HDR video was first introduced on the iPhone 12.

10-bit HDR video formats can be identified by their unique pixel format type. On older iPhone models, cameras have AVCaptureDeviceFormats that always come in pairs. For each resolution and frame rate range, there's a 420v and a 420f format. These are 8-bit, biplanar YUV formats. The v in 420v stands for video range -- or 16 to 235 -- and the f in 420f stands for full range -- or 0 to 255. On iPhone 12 models, some formats come in clusters of three. After the 420v and 420f formats comes an x420 format of the same resolution and frame rate range. Like 420v, x420 is a biplanar 420 format in video range, but the x in x420 stands for 10 bits instead of 8.

To find and select a 10-bit HDR video format in code, simply iterate through the AVCaptureDevice formats until you find one whose pixel format matches x420, or -- deep breath -- kCVPixelFormatType_420YpCbCr10BiPlanarVideoRange. You can of course include other search criteria, such as width, height, and max frame rate. We've updated our AVCam sample code to support 10-bit HDR video when available. There's a handy utility function in it called "tenBitVariantOfFormat" which can find the 10-bit HDR variant of the device's currently active format. Please take a look. 10-bit HDR video is supported in all the most popular video formats, including 720p, 1080p, and 4K. 
And we included a 4 by 3 format as well -- 1920 by 1440 -- which does support 12 megapixel, high-resolution photos.
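Here is a hedged sketch of the two format-level tasks just described: finding the 10-bit (x420) variant of the active format, and opting out of EDR. It is not the AVCam `tenBitVariantOfFormat` implementation; `FormatSummary`, `tenBitVariant(of:in:)`, `selectTenBitVariant(on:)`, and `disableEDR(on:)` are hypothetical names. Matching on width, height, and maximum frame rate is an assumption based on the "same resolution and frame rate range" pairing described above. The AVFoundation part is compiled only on iOS in this sketch.

```swift
import Foundation
#if os(iOS)
import AVFoundation
import CoreMedia
#endif

/// Packs a four-character code such as "x420" into its UInt32 (OSType) value.
func fourCharCode(_ code: String) -> UInt32 {
    precondition(code.utf8.count == 4, "four-character codes have exactly four bytes")
    return code.utf8.reduce(UInt32(0)) { ($0 << 8) | UInt32($1) }
}

/// Hypothetical, platform-neutral summary of a capture format.
struct FormatSummary: Equatable {
    let width: Int32
    let height: Int32
    let maxFrameRate: Double
    let pixelFormat: UInt32
}

/// Returns the x420 (10-bit HDR) format with the same dimensions and maximum
/// frame rate as `current`, if the list contains one.
func tenBitVariant(of current: FormatSummary, in formats: [FormatSummary]) -> FormatSummary? {
    let x420 = fourCharCode("x420")  // kCVPixelFormatType_420YpCbCr10BiPlanarVideoRange
    return formats.first {
        $0.pixelFormat == x420 &&
            $0.width == current.width &&
            $0.height == current.height &&
            $0.maxFrameRate == current.maxFrameRate
    }
}

#if os(iOS)
/// Builds a FormatSummary from a real AVCaptureDevice.Format.
func summary(of format: AVCaptureDevice.Format) -> FormatSummary {
    let dimensions = CMVideoFormatDescriptionGetDimensions(format.formatDescription)
    let maxRate = format.videoSupportedFrameRateRanges.map(\.maxFrameRate).max() ?? 0
    return FormatSummary(width: dimensions.width,
                         height: dimensions.height,
                         maxFrameRate: maxRate,
                         pixelFormat: CMFormatDescriptionGetMediaSubType(format.formatDescription))
}

/// Switches to the 10-bit variant of the active format. Returns false if none exists.
func selectTenBitVariant(on device: AVCaptureDevice) throws -> Bool {
    let summaries = device.formats.map(summary(of:))
    guard let match = tenBitVariant(of: summary(of: device.activeFormat), in: summaries),
          let index = summaries.firstIndex(of: match) else { return false }
    try device.lockForConfiguration()
    device.activeFormat = device.formats[index]
    device.unlockForConfiguration()
    return true
}

/// Opts out of EDR ("videoHDR" in the API), turning off automatic
/// adjustment first, in the order the session describes.
func disableEDR(on device: AVCaptureDevice) throws {
    try device.lockForConfiguration()
    defer { device.unlockForConfiguration() }
    device.automaticallyAdjustsVideoHDREnabled = false
    // Only touch isVideoHDREnabled when the active format supports EDR.
    if device.activeFormat.isVideoHDRSupported {
        device.isVideoHDREnabled = false
    }
}
#endif
```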

While capturing 10-bit HDR video is simple, editing and playing it back properly is tricky. I invite you to watch a companion session from 2020 entitled "Edit and play back HDR video with AVFoundation." All right, that's it for HDR video.

Now on to the main event: Video Effects in Control Center. Put simply, these are system-level camera features that are available in your apps with no code changes. And the user is in control. This is a bit of a departure for us. Traditionally, when we introduce a new camera feature in iOS or macOS, Apple's apps adopt it out of the box. We expose new AVCapture APIs, you learn about them -- just as you're doing now -- and then you adopt the feature at your own pace. This is a safe and conservative approach, but it often results in a long lead time in which users miss out on a great feature in their favorite camera apps. With Video Effects in Control Center, we are introducing system-level prepackaged camera features that are available to everyone right away with no code changes, and the user is in control. We do continue to expose new APIs for these features, so you can tailor the experience in your app as soon as your release schedule allows. Let's take a look at these effects. The first was announced at our April Spring Loaded Apple event and it's called "Center Stage". It's available on the recently released M1 iPad Pro models and it makes use of their incredible 12-megapixel Ultra Wide front-facing cameras. Center Stage really enhances the production value of your FaceTime video calls. It also works great out of the box in every other video conferencing app. Here, let me show you. I've just downloaded Skype from the App Store; this is a stock version of the app.

When I start a Skype call, you immediately see Center Stage kick into action. It's kind of like having your own personal camera operator. It frames you as you move around the scene to keep you perfectly framed, whether you come in tight or you move back and like to pace. It can even track you when you turn your face away from the camera. That's because it tracks bodies, not just faces. As a user, I can control Center Stage by simply swiping down for Control Center, tapping on the new Video Effects module, and making my selection. As I turn Center Stage off and go back to the app, I no longer get the Center Stage effect. No changes in the app. There's a companion new feature that all video conferencing apps get as well, and that's called "Portrait". Portrait mode offers me a beautifully rendered shallow depth-of-field effect. It's not just a simple privacy blur; it uses Apple's Neural Engine plus a trained monocular depth network to approximate a real camera with a wide-open lens. Now let's finally take a look at Mic Modes. By swiping down and picking the Mic Mode module, I can choose between Standard, Voice Isolation, or Wide Spectrum. Mic Modes enhance the audio quality in your video chats. More on these in a minute.

While Center Stage, Portrait, and Mic Modes share screen real estate in Control Center, they differ somewhat in API treatment. I'll introduce you to Center Stage APIs first and then to Portrait and Mic Modes. Center Stage is available on all the front cameras of the M1 iPad Pros. Whether you're using the new front-facing Ultra Wide camera, the Virtual Wide camera -- which presents a cropped, conventional field of view -- or the Virtual TrueDepth camera, Center Stage is available. The TrueDepth camera comes with some conditions, which I'll cover momentarily. The Control Center Video Effects module presents an on/off toggle per app. 
This allows you to default Center Stage to on in a conferencing app, while defaulting it to off in a pro photography app where you want to frame your shot manually. There's one state per app, not one state per camera. Because the Center Stage on/off toggle is per app, not per camera, it's presented in the API as a set of class properties on AVCaptureDevice. These are readable, writable, and key-value observable. isCenterStageEnabled matches the on/off state of the Center Stage UI in Control Center. The Center Stage control mode dictates who is allowed to toggle the enabled state. More on that in a minute. Not all cameras or formats support Center Stage. You can iterate through the formats array of any camera to find a format that supports the feature and set it as your activeFormat. Additionally, you can find out if Center Stage is currently active for a particular camera by querying or observing its isCenterStageActive property. You should be aware of Center Stage's limitations. Center Stage uses the full 12-megapixel format of the Ultra Wide camera, which is a 30 fps format, so the max frame rate is limited to 30 fps. Center Stage avoids upscaling to preserve image quality, so it's limited to a max output resolution of 1920 by 1440. Pan and zoom must remain under Center Stage control, so videoZoomFactor is locked at 1. Geometric distortion correction is integral to Center Stage's people framing, and depth delivery must be off, since depth generation requires matching full field of view images from the RGB and infrared cameras. Now let's get into the concept of control modes. Center Stage has three supported modes: user, app, and cooperative. User mode is the default Center Stage control mode for all apps. In this mode, only the user can turn the feature on and off. If your app tries to programmatically change the Center Stage enabled state, an exception is thrown. Next is the app mode, wherein only your app is allowed to control the feature. 
Users can't use Control Center because the toggle is grayed out there. Use of this mode is discouraged. You should only use it if Center Stage is incompatible with your app. If you do need to opt out, you can set the control mode to app, then set isCenterStageEnabled to false. The best possible user experience for Center Stage is the cooperative mode, in which the user can control the feature in Control Center, and your app can control it with your own UI. You need to do some extra work, though. You must observe the AVCaptureDevice.isCenterStageEnabled property and update your UI to make sure Center Stage is on when the user wants it on. After setting the control mode to cooperative, you can set isCenterStageEnabled to true or false -- based on a button in your app, for instance. The poster child for cooperative mode is FaceTime. While I'm in a FaceTime call, I can use a button right within the app to turn Center Stage on so that it tracks me, or I can use the conventional method of swiping down in Control Center and turning Center Stage on or off. FaceTime and Control Center cooperate on the state of Center Stage so that it always matches user intent. FaceTime is also smart enough to know when features are mutually incompatible. So if, for instance, I tried to turn on Animoji, which requires depth...

...it knows to turn Center Stage off, because those two features are mutually incompatible. If I tap to turn Center Stage back on, FaceTime knows to disable Animoji. That wraps up the Center Stage API.

Let's transition over to Center Stage's roommate in Control Center, Portrait. Put simply, it's a beautifully rendered shallow depth-of-field effect, designed to look like a wide-aperture lens. On iOS, Portrait is supported on all devices with the Apple Neural Engine -- that's 2018 and newer iPhones and iPads. Only the front-facing camera is supported. It's also supported on all M1 Macs, which likewise contain the Apple Neural Engine. Portrait is a computationally complex algorithm. Thus, to keep video rendering performance responsive, it's limited to a max resolution of 1920 by 1440 and a max frame rate of 30 frames per second.
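Returning briefly to the Center Stage control modes described earlier, the sketch below encodes who may toggle the feature in each mode and then opts an app into cooperative mode. `CenterStageControl`, `appMayToggle`, `userMayToggle`, and `enableCooperativeCenterStage(on:enabled:)` are hypothetical names; the AVCaptureDevice members used (the centerStageControlMode and isCenterStageEnabled class properties, and Format.isCenterStageSupported) are the ones the session describes. Cooperative mode still assumes your UI separately observes isCenterStageEnabled, which this sketch does not show.

```swift
import Foundation
#if os(iOS)
import AVFoundation
#endif

/// Hypothetical mirror of the three Center Stage control modes.
enum CenterStageControl {
    case user, app, cooperative
}

/// In user mode, an app's attempt to change the enabled state raises an exception.
func appMayToggle(_ mode: CenterStageControl) -> Bool { mode != .user }

/// In app mode, the Control Center toggle is grayed out.
func userMayToggle(_ mode: CenterStageControl) -> Bool { mode != .app }

#if os(iOS)
/// Hypothetical helper: picks a Center Stage-capable format if needed, then
/// opts the whole app (not one camera) into cooperative control.
@available(iOS 15.0, *)
func enableCooperativeCenterStage(on device: AVCaptureDevice, enabled: Bool) throws {
    if !device.activeFormat.isCenterStageSupported,
       let format = device.formats.first(where: { $0.isCenterStageSupported }) {
        try device.lockForConfiguration()
        device.activeFormat = format
        device.unlockForConfiguration()
    }
    // Class properties: one state per app, shared with Control Center.
    AVCaptureDevice.centerStageControlMode = .cooperative
    AVCaptureDevice.isCenterStageEnabled = enabled
    // Whether the effect is actually running on this camera is reported by
    // device.isCenterStageActive, which the UI should observe.
}
#endif
```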

Like Center Stage, the Portrait effect has a sticky on/off state per app. Its API is simpler than Center Stage's: the user is always in control through Control Center, and it's only available by default in certain classes of apps. On iOS, apps that use the VoIP UIBackgroundMode are automatically opted in -- users can turn the effect on or off in Control Center. All other iOS apps must opt in to declare themselves eligible for the Portrait effect by adding a new key to their app's Info.plist: NSCameraPortraitEffectEnabled. On macOS, all apps are automatically opted in and can use the effect out of the box. The Portrait effect is always under user control, through Control Center only. As with Center Stage, not all cameras or formats support Portrait. You can iterate through the formats array of any camera to find a format that supports the feature, and set it as your active format. You can also find out if Portrait is currently active for a particular camera by querying or observing its isPortraitEffectActive property. Mic Mode APIs are analogous to Portrait's.
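A minimal sketch of the Portrait rules above, assuming iOS 15 or macOS 12. `isPortraitEligible(platform:usesVoIPBackgroundMode:hasInfoPlistOptIn:)` is a hypothetical helper that only restates the opt-in rules, and `selectPortraitCapableFormat(on:)` and `portraitStatus(of:)` are hypothetical functions built on the format and device properties the session names. Note that the app only reads AVCaptureDevice.isPortraitEffectEnabled; it cannot set it.

```swift
import Foundation
#if os(iOS) || os(macOS)
import AVFoundation
#endif

/// Hypothetical platform tag for the opt-in rules.
enum Platform { case iOS, macOS }

/// Restates the Portrait eligibility rules: every macOS app is opted in; on iOS,
/// VoIP apps are opted in and all others need the Info.plist key.
func isPortraitEligible(platform: Platform,
                        usesVoIPBackgroundMode: Bool,
                        hasInfoPlistOptIn: Bool) -> Bool {
    switch platform {
    case .macOS: return true
    case .iOS: return usesVoIPBackgroundMode || hasInfoPlistOptIn
    }
}

#if os(iOS) || os(macOS)
/// Hypothetical helper: ensures the active format supports Portrait.
/// Returns false if this camera has no such format.
@available(iOS 15.0, macOS 12.0, *)
func selectPortraitCapableFormat(on device: AVCaptureDevice) throws -> Bool {
    if device.activeFormat.isPortraitEffectSupported { return true }
    guard let format = device.formats.first(where: { $0.isPortraitEffectSupported }) else {
        return false
    }
    try device.lockForConfiguration()
    device.activeFormat = format
    device.unlockForConfiguration()
    return true
}

/// The user's Control Center choice (read-only to the app) and whether the
/// effect is being applied on this camera right now.
@available(iOS 15.0, macOS 12.0, *)
func portraitStatus(of device: AVCaptureDevice) -> (userEnabled: Bool, active: Bool) {
    (AVCaptureDevice.isPortraitEffectEnabled, device.isPortraitEffectActive)
}
#endif
```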

User selection is sticky per app. Users are always in control; your app can't set the Mic Mode directly. Some apps need to opt in to use the feature. Mic Modes are presented in AVFoundation's AVCaptureDevice interface, and there are three flavors: standard, which uses standard audio DSP; wide spectrum, which minimizes processing to capture all sounds around the device while still including echo cancellation; and voice isolation, which enhances speech and removes unwanted background noise such as typing on keyboards, mouse clicks, or leaf blowers running somewhere in the neighborhood. These flavors can only be set by the user in Control Center, but you can read and observe their state using AVCaptureDevice's preferredMicrophoneMode -- which is the mode selected by the user -- and activeMicrophoneMode -- which is the mode now in use, taking into account the current audio route, which may not support the user's preferred Mic Mode. In order to use Mic Modes, your app must adopt the Core Audio AUVoiceIO audio unit. This is a popular interface in video conferencing apps, since it performs echo cancellation. And Mic Mode processing is only available on 2018 and later iOS and macOS devices. With Portrait and Mic Modes, the user is always in control, but you can prompt them to turn the feature on or off by calling the new AVCaptureDevice.showSystemUserInterface method, passing either videoEffects or microphoneModes. Calling this API opens Control Center and deep-links to the appropriate submodule. Here, we're drilling down to the Video Effects module, where the user can choose to turn Portrait off.
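The distinction between preferredMicrophoneMode and activeMicrophoneMode matters when deciding whether to prompt the user. This is a minimal sketch, assuming iOS 15 or macOS 12 and an app that already captures audio through AUVoiceIO. `MicMode`, `shouldPromptForMicMode(desired:preferred:active:)`, `micMode(from:)`, and `promptForVoiceIsolationIfNeeded()` are hypothetical names, and the policy of not prompting when the user's preferred mode already matches (because the audio route, not the user, is the limit) is an assumption of this sketch rather than guidance from the session.

```swift
import Foundation
#if os(iOS) || os(macOS)
import AVFoundation
#endif

/// Hypothetical mirror of AVCaptureDevice.MicrophoneMode.
enum MicMode { case standard, wideSpectrum, voiceIsolation }

/// Hypothetical policy: prompt only if the mode isn't in effect AND the user
/// hasn't already chosen it. If they have, the current audio route is the
/// limit, and opening Control Center won't help.
func shouldPromptForMicMode(desired: MicMode, preferred: MicMode, active: MicMode) -> Bool {
    if active == desired { return false }
    if preferred == desired { return false }
    return true
}

#if os(iOS) || os(macOS)
@available(iOS 15.0, macOS 12.0, *)
func micMode(from mode: AVCaptureDevice.MicrophoneMode) -> MicMode {
    switch mode {
    case .wideSpectrum: return .wideSpectrum
    case .voiceIsolation: return .voiceIsolation
    default: return .standard
    }
}

@available(iOS 15.0, macOS 12.0, *)
func promptForVoiceIsolationIfNeeded() {
    let preferred = micMode(from: AVCaptureDevice.preferredMicrophoneMode)
    let active = micMode(from: AVCaptureDevice.activeMicrophoneMode)
    if shouldPromptForMicMode(desired: .voiceIsolation, preferred: preferred, active: active) {
        // Opens Control Center at the Mic Mode module; the user makes the choice.
        AVCaptureDevice.showSystemUserInterface(.microphoneModes)
    }
}
#endif
```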

That wraps up Portrait, and wraps up Video Effects in Control Center. I've just shown you examples of system-level camera features injected into your app without you changing a line of code -- a pretty powerful concept! There's a companion session to this one called "Capture high-quality photos using video formats", where you'll learn about improvements we've made to still image quality in your apps without you changing a line of code. Please check it out.

We've covered a lot of new features. At this point in the session, I'd like to take a breather and talk about performance. Center Stage and Portrait are great new user features, but they do carry an added performance cost. So let's review performance best practices to make sure your camera apps are ready for new features like Portrait and Center Stage. Camera apps use AVCapture classes to deliver a wide array of features. The most popular interface is AVCaptureVideoDataOutput, which allows you to get video frames directly into your process for manipulating, displaying, encoding, recording... you name it. When using VideoDataOutput, it's important to ensure that your app is keeping up with real-time deadlines so there are no frame drops. By default, VideoDataOutput prevents you from getting behind by setting its alwaysDiscardsLateVideoFrames property to true. This enforces a buffer queue size of one at the end of video data output's processing pipeline and saves you from periodic or chronic slow processing by always giving you the freshest frame and dropping frames you weren't ready to process. It doesn't help you if you need to record the frames you're receiving, such as with AVAssetWriter. If you intend to record your processed results, you should turn alwaysDiscardsLateVideoFrames off and pay close attention to your processing times. VideoDataOutput tells you when frame drops are occurring by calling your captureOutput(_:didDrop:from:) delegate callback. 
When you receive a didDrop callback, you can inspect the included sample buffer's attachments for a droppedFrameReason. This can inform what to do to mitigate further frame drops. There are three reasons: FrameWasLate, which means your processing is taking too long; OutOfBuffers, which means you may be holding on to too many buffers; and Discontinuity, which means there is a system slowdown or hardware failure that's not your fault. Now let's talk about how to react to frame drops. One of the best ways is to lower the device frame rate dynamically. Doing so incurs no glitches in preview or output. You simply set a new activeVideoMinFrameDuration on your AVCaptureDevice at run time. A second way is to simplify your workload so you're not taking so much time.

Now let's talk about system pressure, another performance metric that's crucially important to a good user experience in your camera app. System pressure means just that: the system is subject to strain or pressure. AVCaptureDevice has a property called systemPressureState, which consists of factors and an overall level. The SystemPressureState contributing factors are a bit mask of three possible contributors. systemTemperature refers to how hot the device is getting. peakPower is all about battery aging, and whether the battery is capable of ramping up voltage quickly enough to meet peak power demands. And depthModuleTemperature refers to how hot the TrueDepth camera's infrared sensor is getting. The SystemPressureState's level is an indicator that can help you take action before your user experience is compromised. When it's nominal, everything is copacetic. Fair indicates that system pressure is slightly elevated. This might happen even if you're doing very little processing but the ambient temperature is high. At serious, system pressure is highly elevated; capture performance may be impacted. Frame rate throttling is advised. 
Once you reach critical, system pressure is critically elevated; capture quality and performance are significantly impacted. Frame rate throttling is highly advised. And you never want to let things escalate to shutdown, where system pressure is beyond critical. At this level, AVCaptureSession stops automatically to spare the device from thermal trap.
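A hedged sketch of frame rate throttling driven by the pressure level. `PressureLevel`, `targetFrameRate(for:nominalRate:)`, `pressureLevel(from:)`, and `throttle(_:to:)` are hypothetical names, and the 30 fps and 15 fps steps are illustrative choices; the session only advises throttling at serious and strongly advises it at critical. The iOS-only helper checks the active format's supported frame rate ranges before writing activeVideoMaxFrameDuration and activeVideoMinFrameDuration, since unsupported values raise an exception.

```swift
import Foundation
#if os(iOS)
import AVFoundation
import CoreMedia
#endif

/// Hypothetical mirror of AVCaptureDevice.SystemPressureState.Level.
enum PressureLevel { case nominal, fair, serious, critical, shutdown }

/// Hypothetical throttling policy: full rate through "fair", then step down.
/// The 30 and 15 fps steps are illustrative, not values from the session.
func targetFrameRate(for level: PressureLevel, nominalRate: Int32) -> Int32 {
    switch level {
    case .nominal, .fair:
        return nominalRate
    case .serious:
        return min(nominalRate, 30)
    case .critical, .shutdown:
        return min(nominalRate, 15)
    }
}

#if os(iOS)
func pressureLevel(from level: AVCaptureDevice.SystemPressureState.Level) -> PressureLevel {
    switch level {
    case .nominal: return .nominal
    case .fair: return .fair
    case .serious: return .serious
    case .critical: return .critical
    default: return .shutdown
    }
}

/// Locks the device to a fixed `fps` if the active format supports it.
/// Meant for throttling down: the max duration is written before the min
/// duration so the min never exceeds the max in between.
func throttle(_ device: AVCaptureDevice, to fps: Int32) throws {
    guard fps > 0 else { return }
    let supported = device.activeFormat.videoSupportedFrameRateRanges.contains {
        Double(fps) >= $0.minFrameRate && Double(fps) <= $0.maxFrameRate
    }
    guard supported else { return }
    let duration = CMTime(value: 1, timescale: fps)
    try device.lockForConfiguration()
    device.activeVideoMaxFrameDuration = duration
    device.activeVideoMinFrameDuration = duration
    device.unlockForConfiguration()
}
#endif
```

For example, a handler that observes `device.systemPressureState` could call `throttle(device, to: targetFrameRate(for: pressureLevel(from: device.systemPressureState.level), nominalRate: 30))`. Because AVCaptureSession never throttles on your behalf, where and how aggressively to step down remains an app decision.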

You can react to elevated pressure in a variety of ways. Lower the capture frame rate; this will always help system pressure. If lowering the frame rate isn't an option, consider lessening your workload on the CPU or GPU, such as turning off certain features. You might also keep features on but degrade the quality, perhaps by processing at a smaller resolution or less frequently. AVCaptureSession never throttles the frame rate on your behalf, since we don't know if that's an acceptable quality degradation strategy for your app. That wraps up performance best practices.

Now on to our dessert course, IOSurface compression. I carefully avoided talking about memory bandwidth in the performance section, since there's not much you can do about the overall memory bandwidth requirements of video flowing through the ISP and eventually to your photos, movies, preview, or buffers. But still, memory bandwidth can be a crucial limiter in determining which camera features can run simultaneously. When working with uncompressed video on iOS and macOS, there are a lot of layers involved. It's a little like a Russian nesting doll. At the top level is a CMSampleBuffer, which can wrap all kinds of media data, as well as timing and metadata. Down one level, there's the CVPixelBuffer, which specifically wraps pixel buffer data along with metadata attachments. Finally, you get to that lowest level, IOSurface, which allows the memory to be wired to the kernel and provides an interface for sharing large video buffers across processes. IOSurfaces are huge. They account for the great memory bandwidth requirements of uncompressed video. Now thankfully, IOSurface compression offers a solution for memory bandwidth problems. New in iOS 15, we're introducing support for a lossless in-memory video compression format. It's an optimization to lower total memory bandwidth for live video. It's an interchange format understood by the major hardware blocks on iOS devices and Macs. 
It's available on all iPhone 12 variants, Fall 2020 iPad Airs, and Spring 2021 M1 iPad Pros. Which major hardware blocks deal in compressed IOSurfaces? Well, there are a lot. All services listed here understand how to read or write compressed IOSurfaces. At this point you may be saying, "Great, how do I sign up?" Well, good news. If you're capturing video on supported hardware and the AVCaptureSession doesn't need to deliver any buffers to your process, congratulations, your session is already taking advantage of IOSurface compression whenever it can to reduce memory bandwidth. If you want compressed surfaces delivered to your video data output, you need to know about a few rules. Physical memory layout is opaque and may change, so don't write to disk, don't assume the same layout on all platforms, and don't read or write using the CPU. AVCaptureVideoDataOutput supports several flavors of IOSurface compression. Earlier in the talk, you learned that iOS cameras natively support 420v and 420f -- 8-bit YUV formats; one video range and one full range. And later, you learned about x420, a 10-bit HDR video format. Video data output can also internally expand to 32-bits-per-pixel BGRA if requested. Each of these has an IOSurface compressed equivalent which, beginning in iOS 15, you can request through AVCaptureVideoDataOutput. If you're a fan of ampersands in your four-character codes, this is your lucky day. Here it is again in eye chart format. These are the actual constants you should use in your code. Two years ago we released a piece of sample code called "AVMultiCamPiP". In this sample, the front and back cameras are streamed simultaneously to VideoDataOutputs using a multicam session, then composited as a Picture in Picture using a Metal shader, which then renders the composite to preview and writes to a movie using AVAssetWriter. This is the perfect candidate for IOSurface compression, as all of these operations are performed on hardware. 
Here's the existing VideoDataOutput setup code in AVMultiCamPiP. It likes to work with BGRA, so it configures the VideoDataOutput's videoSettings to produce that pixel format type. The new code just incorporates some checks. First it sees if the IOSurface compressed version of BGRA is available. And if so, it picks that; the else clause is just there as a fallback. And just like that, we've come to the end. You learned about minimum focus distance reporting, how to capture 10-bit HDR video, Video Effects and Mic Modes in Control Center, performance best practices, and IOSurface compression. I hope you enjoyed it! Thanks for watching. ♪
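The AVMultiCamPiP change described above amounts to an ordered preference check over the output's available pixel formats. A minimal sketch, assuming iOS 15: `firstAvailablePixelFormat(preferences:available:)` and `configureCompressedBGRA(_:)` are hypothetical names, while kCVPixelFormatType_Lossless_32BGRA is the CoreVideo constant for IOSurface-compressed BGRA. Buffers in that format should only be passed to hardware consumers such as Metal, never read or written on the CPU.

```swift
import Foundation
#if os(iOS)
import AVFoundation
import CoreVideo
#endif

/// Returns the first pixel format in `preferences` that also appears in `available`.
func firstAvailablePixelFormat(preferences: [UInt32], available: [UInt32]) -> UInt32? {
    preferences.first { available.contains($0) }
}

#if os(iOS)
/// Hypothetical helper: prefer IOSurface-compressed BGRA, fall back to plain BGRA.
@available(iOS 15.0, *)
func configureCompressedBGRA(_ output: AVCaptureVideoDataOutput) {
    let preferences: [OSType] = [kCVPixelFormatType_Lossless_32BGRA, kCVPixelFormatType_32BGRA]
    guard let format = firstAvailablePixelFormat(
        preferences: preferences,
        available: output.availableVideoPixelFormatTypes) else { return }
    // Compressed buffers have an opaque layout: hand them to Metal or other
    // hardware consumers only; never touch them on the CPU or write them to disk.
    output.videoSettings = [kCVPixelBufferPixelFormatTypeKey as String: Int(format)]
}
#endif
```

Keeping the uncompressed format as the second preference mirrors the fallback else clause described in the session, so the same setup code works on hardware without IOSurface compression.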


0:01 - Optimize QR code scanning

```swift
// Optimize the user experience for scanning QR codes down to sizes of 20mm x 20mm.
let deviceFieldOfView = self.videoDeviceInput.device.activeFormat.videoFieldOfView
// Distance (mm) at which a 20 mm code fills the preview's rect of interest.
let minimumSubjectDistanceForCode = minSubjectDistance(
    fieldOfView: deviceFieldOfView,
    minimumCodeSize: 20,
    previewFillPercentage: Float(rectOfInterestWidth))
```

0:02 - minSubjectDistance

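```swift
import Foundation

// Estimates the subject distance (mm) for a code of minimumCodeSize (mm), given the
// camera's horizontal field of view (degrees) and the fraction of the preview to fill.
private func minSubjectDistance(
    fieldOfView: Float,
    minimumCodeSize: Float,
    previewFillPercentage: Float) -> Float {
    let radians = degreesToRadians(fieldOfView / 2)
    let filledCodeSize = minimumCodeSize / previewFillPercentage
    return filledCodeSize / tan(radians)
}

// Converts degrees to radians for tan().
private func degreesToRadians(_ degrees: Float) -> Float {
    degrees * .pi / 180
}
```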

0:03 - Lock device for configuration

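```swift
// Zoom in when the camera can't focus as close as the code requires,
// prompting the user to back away.
let deviceMinimumFocusDistance = Float(self.videoDeviceInput.device.minimumFocusDistance)
if minimumSubjectDistanceForCode < deviceMinimumFocusDistance {
    let zoomFactor = deviceMinimumFocusDistance / minimumSubjectDistanceForCode
    do {
        try videoDeviceInput.device.lockForConfiguration()
        // Clamp to the active format's limit; larger values raise an exception.
        videoDeviceInput.device.videoZoomFactor = min(
            CGFloat(zoomFactor),
            videoDeviceInput.device.activeFormat.videoMaxZoomFactor)
        videoDeviceInput.device.unlockForConfiguration()
    } catch {
        print("Could not lock for configuration: \(error)")
    }
}
```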

0:04 - firstTenBitFormatOfDevice

```swift
import AVFoundation

// Returns the first 10-bit HDR ('x420') format the device offers, if any.
func firstTenBitFormatOfDevice(device: AVCaptureDevice) -> AVCaptureDevice.Format? {
    for format in device.formats {
        let pixelFormat = CMFormatDescriptionGetMediaSubType(format.formatDescription)
        if pixelFormat == kCVPixelFormatType_420YpCbCr10BiPlanarVideoRange /* 'x420' */ {
            return format
        }
    }
    return nil
}
```

0:05 - captureOutput

```swift
// AVCaptureVideoDataOutputSampleBufferDelegate callback for dropped frames.
func captureOutput(
    _ output: AVCaptureOutput,
    didDrop sampleBuffer: CMSampleBuffer,
    from connection: AVCaptureConnection) {
    guard let attachment = sampleBuffer.attachments[.droppedFrameReason],
          let reason = attachment.value as? String else { return }
    switch reason as CFString {
    case kCMSampleBufferDroppedFrameReason_FrameWasLate:
        // Processing is taking too long: lower the frame rate or simplify the workload.
        break
    case kCMSampleBufferDroppedFrameReason_OutOfBuffers:
        // Too many buffers are being held: release them sooner.
        break
    case kCMSampleBufferDroppedFrameReason_Discontinuity:
        // System slowdown or hardware failure outside the app's control.
        break
    default:
        // Unknown reason: log it rather than crashing.
        print("A frame dropped for an undefined reason: \(reason)")
    }
}
```
