Build dynamic iOS apps with the Create ML framework

Discover how your app can train Core ML models fully on device with the Create ML framework, enabling adaptive and customized app experiences, all while preserving data privacy. We'll explore the types of models that can be created on-the-fly for image-based tasks like Style Transfer and Image Classification, audio tasks like custom Sound Classification, or tasks that build on a rich set of Text Classification, Tabular Data Classification, and Tabular Regressors. And we'll take you through the many opportunities these models offer to make your app more personal and dynamic.

For even more inspiration, check out “Classify hand poses and actions with Create ML” and “Discover built-in sound classification in SoundAnalysis” from WWDC21.

Related Videos

WWDC22

WWDC20

-

Search this video…

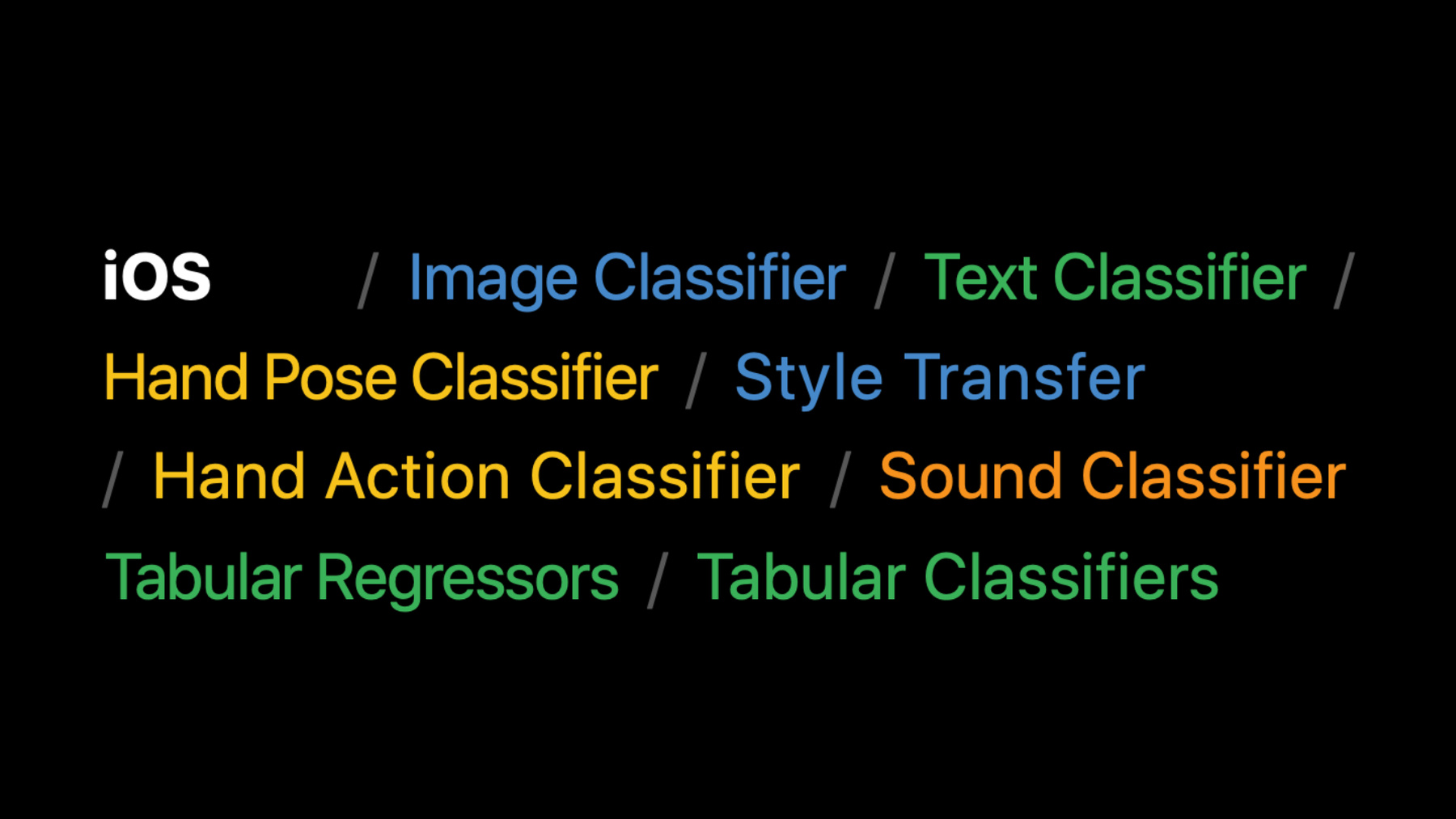

♪ Bass music playing ♪

Tao Jia: Hello, my name is Tao. Today, my colleague Jack and I are going to talk about building dynamic iOS apps with the Create ML framework.

So what do I mean by an app being dynamic? Dynamic apps are those that give their users the flexibility to customize certain elements of the app. Dynamic apps also tailor their content to best suit different user needs. These adaptable in-app features give your users more intelligent and personalized experiences tailored just for them. You can deliver some of these experiences with simple heuristics and predefined rules. But oftentimes, these approaches may not render the best experience for all of your app users, as their backgrounds and preferences can vary. On the other hand, machine learning techniques enable you to create models that learn directly from user data. This often generalizes better and is likely to suit more users than heuristics and predefined rules.

So what tools can you use to deliver such an experience? The Create ML app, available on your Mac, makes it easy to create models by simply selecting some training data and hitting the train button. It supports all sorts of model types through a rich set of templates. The app is built on top of the Create ML framework, which provides accelerated training of machine learning models. The Create ML framework was originally introduced in macOS Mojave, allowing models to be trained in Swift code and from within your macOS app. And now we're bringing that framework to iOS 15 and iPadOS 15.

With it available on device, your apps can do all sorts of new and dynamic things. In other words, you can access programmatic APIs directly from your application for on-device model creation. It gives your app the capability to learn from and thus adapt to your users. Last but most importantly, user data never has to leave the device; therefore, user privacy is preserved.

Now, let's dive in. There are different tasks available in Create ML. Here are all the ones available on macOS, and these are now available on iOS. Among them are popular tasks such as image, sound, and text classifiers. More recently added ones include style transfer as well as this year's new addition of hand pose and hand action classifiers.

With these tools available, there are many interesting ideas and use cases. Here are a few examples. With image classification, an app can learn what a child's favorite stuffed animals look like and then help find more photos of them in order to help create a story about their adventures together. A text classifier can be used to help a user quickly organize a note they just wrote with suggested tags and folders learned from their past actions. The hand action classifier, which is new this year, could give your user the power to trigger in-app visual effects with custom hand actions.

There are so many cool things you can do with Create ML on iOS, but it's better to just show you what I mean with a demo. I have an app that looks a bit like a photo booth app, but more dynamic, as it allows me to create a customized photo filter. Let me show you how that works. Here on my iPad, it shows at the top a list of existing filters I trained on my Mac using a Create ML style transfer task. Each one of them is represented by the particular style image used to create the filter. At the bottom, the app is waiting for a photo to try those filters on, either by taking or choosing a photo. Let me take a photo now.

How about a selfie? Let's see how these filters look. Let me first click this first picture of a waveform. Oh, I got all these water drops on my face and hair; reminds me of my vacation in Miami a long time ago. What about this picture of birdies? Look how colorful I am. How about this third picture that looks like cracked ice? Oh, I look cool and icy. Now these look really fun, but I feel I am still missing something. What if I could just create a filter using any photo of my choice? That would make this app really fun to use. Let me give it a shot.

I have this drawing from my daughter when she was 3 years old. I really love the textures and colors. I'm curious to see how I would look in my daughter's artistic style. Let me click this "+ Filter" button, select Camera, and take a picture. "Use Photo." Now the filter is being created. Under the hood, it is training a customized style transfer model.

Let me explain how that works. First, select a single style image. The style image I used is my daughter's drawing. Next, I need to provide a set of content images so the model can learn to apply the style while preserving the original content of these images. In this demo, I used a few dozen photos from my album, including scenic photos and selfies. If you want to apply the style to other photo types -- for example, photos of your pet -- you may also include a few such photos in your content set. Then, select your filter type as either image or video, depending on your application scenario. For this demo, I chose image, as I want to apply the style to static photos. You can also experiment with style strength and style density, as well as the number of iterations, to get your favorite combination of stylization and original content. For details on how to set these parameters, please refer to last year's WWDC session.

Now let's give the newly created filter a try on my photo. Wow, that's how I would look in my daughter's drawing. It actually picked up these textures and colors. How about trying a different photo? I have this bunny that my daughter really loves to play with. How about taking a selfie with the bunny and applying the filter? The bunny has gotten stylized by her drawing too. I can't wait to show this to her and try it on her other drawings. It'll be a lot of fun.

In this demo, I showed you how to leverage the style transfer model available in the Create ML framework on iOS to create a customized photo filter. So what does that look like in code? First, define a training data source that specifies one style image and a set of content images. Next, define your session parameters to specify things like where you want your checkpoints to be saved. Next, define your training job using these parameters. Finally, dispatch the training job, and once a successful completion is received, save out the trained model. To stylize images, compile and instantiate a Core ML model and start making predictions. And that's it. That's what I used to create a customized photo filter, using the style transfer API from the Create ML framework. Other tasks follow a similar API pattern.

So far I've talked about tasks that enable model creation from rich media data types, such as image, text, audio, and video. But what if your app does not interact with such data types? Let me invite my colleague Jack to talk about how you can make your app dynamic in these cases.

Jack Cackler: Thank you, Tao. In addition to the tasks we've covered so far, the Create ML framework on iOS also supports classifiers and regressors for structured tabular data. Let's walk through an example of how these could create a more dynamic app experience.

First, some background on classifiers and regressors. A classifier learns to predict particular classes from data in a training dataset. A regressor is similar, but learns to predict a numerical value instead of a discrete class label. Here are the APIs for training classifiers and regressors from general tabular data, allowing them to be used in many different scenarios. In particular, Create ML on iOS offers four different algorithms for each of these. Working with general tabular models can require a little more work, as you have to decide which features and target values you want to use in the model. However, this can often be straightforward.

Let's consider an app using a tabular regressor to add a personalized experience. Here's a simple app for ordering meals from restaurants.
The app has restaurants in the area. Here's a local Thai restaurant called Amazing Thai. If I select it, the app shows dishes I can order from the restaurant and information about each dish. It's a simple app, but what if I can make it better? What would be really neat is if, over time, my app learned my behavior and surfaced intelligent restaurant and dish suggestions I might like. This would turn a simple app into a really dynamic experience. I can achieve this by training a tabular regressor right in the app.

I'm going to take three kinds of information and combine them in a structured table to train the model and deliver a new dynamic experience. The first is the content, which is data I've loaded into the app. In the case of our restaurant app, it's information about the dishes. The second is the context. In this case, the time of day the user is ordering. Finally, I add the user's order history and create an experience that's tailored just for them, right on their device. By combining the content and the context, as well as past user interaction, I can predict future interaction; a great opportunity for personalization, in this case to help predict dishes a user might like in the future.

Let's go back to the app with the model added in. I'm ordering lunch today and I'm in the mood for pizza. I set the meal to Lunch and select the Pizza Parlor, pick a margherita pizza, and order it. There are a few pieces of information on this window that are really what's training our tabular regressor. The content is the keywords for this dish; things like the ingredients -- tomato, mozzarella -- as well as the restaurant itself -- Pizza Parlor -- and the genre of food -- pizza. The context for this model is the time of day. This is for lunch, and now I've taught my model that these are things I might like at lunch. Finally, my interaction is that I ordered this dish and not the others. The regressor I'm training is predicting a preference score for each dish that I might order, and today it learned that I did order this dish and not the others.

Going back to the main screen, the model has already trained and there's a new window that suggests dishes just for me. The dish I actually ordered -- the margherita pizza -- is the first recommendation, but the next recommendation is a caprese sandwich from a completely different restaurant. Several other pizzas are also near the top. Let's try another example. Say I'm ordering dinner now.

This time I order a yellow curry from Amazing Thai. Now the model has updated again. It's learned my new preference, based on the time of day I'm ordering. The yellow curry I just ordered is now the top recommendation and a similar curry is the second recommendation. The next recommendation is the vegetarian pizza. It has mushrooms and peppers just like the curry I just ordered, and the app knows I might like pizza even if it might not be my first choice for dinner. If I then go to order lunch the next day, the model has learned to distinguish what I might order for lunch versus what I might order for dinner. This gives a really personalized experience that helps me find exactly what I want at the time I want it, and that's with just two orders.

There are three real steps to adding a tabular classifier or regressor to an app: setting up the data, training, and prediction. The first function here creates the features leveraged in this regressor from the meal and keywords. I take the keywords associated with each dish and combine them with the current meal (the context) to create a new keyword that allows the model to capture the interaction between the content (dish) keywords and the context (meal) keywords. I put a value of 1.0 in the dictionary to simply indicate that a particular keyword is present in the data entry.

Next, to build the training data, for each dish the user ordered, I add an entry with the features generated previously and a positive target value of 1. However, if I just included this, the model wouldn't learn to discern which dishes I like and which dishes I don't. To do this, I also add an entry using all the keywords that were not present in the dish, with a negative target value of -1. This allows the model to learn which keywords best fit user preferences. I convert this combined information into a DataFrame, specifying both the keywords and the target.

Finally, I train the model, specifying that the column I'm trying to predict is the target column that I had set to 1 or -1. In this case, the model is a simple linear regressor which generates meaningful results I can use in the app. At prediction time, I simply take the data I want to run an inference on and call a prediction from the model I trained.

So far we've shown how to train a style transfer model and a tabular regressor in iPadOS and iOS apps. Let's talk about some best practices for integrating machine learning training into your app. Don't forget to follow best practices for machine learning in general. For example, always test how your model performs on data not in the training dataset. For long-running training tasks, leverage the asynchronous training control and checkpointing mechanism to customize your model creation workflow. Some aspects of model creation can be computationally intensive, consume additional memory, or require additional assets to be downloaded. Do take these into consideration when integrating into your app. Please refer to our APIs and documentation for more info. If you want to learn more about best practices, I highly encourage you to check out our other WWDC sessions from past years, "Designing Great ML Experiences" and "Control training in Create ML with Swift".

In this session, we have talked about how you can use the Create ML framework on iOS. We gave examples using style transfer and a tabular regressor, and most of the Create ML templates can now be trained directly on an iPhone or iPad. Through training on iOS, you can create dynamic apps that give users customized and personalized experiences while preserving user privacy. Because training and inference are entirely in-app, there's no model deployment to worry about either. We're really looking forward to seeing what you come up with. Thank you for listening and enjoy the rest of WWDC.

♪
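
The advice about testing on data outside the training set can be sketched in plain Swift. This is only an illustration under stated assumptions: `splitHoldout` and `rootMeanSquaredError` are hypothetical helpers, not Create ML APIs, and the targets and predictions are made-up numbers standing in for whatever a trained regressor would return on the held-out rows.

```swift
/// Hypothetical helper: keeps the last `holdoutFraction` of the rows out of training.
/// Rows should be shuffled beforehand; shuffling is omitted to keep the sketch deterministic.
func splitHoldout<Row>(_ rows: [Row], holdoutFraction: Double) -> (training: [Row], holdout: [Row]) {
    let clamped = min(max(holdoutFraction, 0.0), 1.0)
    let holdoutCount = Int((Double(rows.count) * clamped).rounded())
    let cut = rows.count - holdoutCount
    return (Array(rows[..<cut]), Array(rows[cut...]))
}

/// Hypothetical helper: root mean squared error between predictions and true targets.
func rootMeanSquaredError(predictions: [Double], targets: [Double]) -> Double {
    precondition(predictions.count == targets.count && !targets.isEmpty)
    let sumOfSquares = zip(predictions, targets).reduce(0.0) { sum, pair in
        let error = pair.0 - pair.1
        return sum + error * error
    }
    return (sumOfSquares / Double(targets.count)).squareRoot()
}

// Made-up targets in the 1 / -1 style used for the regressor.
let allTargets: [Double] = [1.0, -1.0, 1.0, -1.0, 1.0]
let split = splitHoldout(allTargets, holdoutFraction: 0.4)

// Stand-in for the scores a model trained on `split.training` would give the held-out rows.
let holdoutPredictions: [Double] = [-0.5, 0.5]
let rmse = rootMeanSquaredError(predictions: holdoutPredictions, targets: split.holdout)
print("Held-out RMSE:", rmse)
```

A lower held-out error than a trivial baseline (for example, always predicting 0) would suggest the model has learned something that carries over to orders it has not seen.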


7:58 - Training a style transfer model

    // define training data source
    let data = MLStyleTransfer.DataSource.images(styleImage: styleUrl, contentDirectory: contentUrl)

    // define session parameter
    let sessionParameters = MLTrainingSessionParameters(sessionDirectory: sessionUrl)

    // define training job
    let job = try MLStyleTransfer.train(trainingData: data, sessionParameters: sessionParameters)

    // dispatch training job
    // save out model upon receiving successful completion, compile for later use

    // make prediction with CoreML model
    try model.write(to: writeToUrl)
    let compiledURL = try MLModel.compileModel(at: writeToUrl)
    let mlModel = try MLModel(contentsOf: compiledURL)
    let inputImage = try MLDictionaryFeatureProvider(dictionary: ["image": image])
    let stylizedImage = try mlModel.prediction(from: inputImage)

13:39 - Collecting features for a regressor

    func featuresFromMealAndKeywords(meal: String, keywords: [String]) -> [String: Double] {
        // Capture interactions between content (the dish keywords) and context (meal) by
        // adding a copy of each keyword modified to include the meal.
        let featureNames = keywords + keywords.map { meal + ":" + $0 }

        // For each keyword, create an entry in a dictionary of features with a value of 1.0.
        return featureNames.reduce(into: [:]) { features, name in
            features[name] = 1.0
        }
    }
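
To make the output of this function concrete, here is a small worked call. The function body is repeated from the snippet above so the sketch runs on its own; the meal name and keywords are assumed sample values modeled on the margherita pizza order described in the session, not data from the demo app.

```swift
// Same logic as the session's snippet, repeated so this sketch is self-contained.
func featuresFromMealAndKeywords(meal: String, keywords: [String]) -> [String: Double] {
    let featureNames = keywords + keywords.map { meal + ":" + $0 }
    return featureNames.reduce(into: [:]) { features, name in
        features[name] = 1.0
    }
}

// Assumed sample input: a pizza ordered at lunch.
let features = featuresFromMealAndKeywords(
    meal: "lunch",
    keywords: ["tomato", "mozzarella", "pizza"])

// Print in sorted order, because dictionary iteration order is not fixed.
for name in features.keys.sorted() {
    print(name, features[name]!)
}
```

Each of the three keywords appears twice, once on its own and once prefixed with the meal (for example `tomato` and `lunch:tomato`), giving six features that all carry the value 1.0.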

14:08 - Preparing training data

    var trainingKeywords: [[String: Double]] = []
    var trainingTargets: [Double] = []

    for item in userPurchasedItems {
        // Add in the positive example.
        trainingKeywords.append(
            featuresFromMealAndKeywords(meal: item.meal, keywords: item.keywords))
        trainingTargets.append(1.0)

        // Add in the negative example.
        let negativeKeywords = allKeywords.subtracting(item.keywords)
        trainingKeywords.append(
            featuresFromMealAndKeywords(meal: item.meal, keywords: Array(negativeKeywords)))
        trainingTargets.append(-1.0)
    }
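
The snippet above relies on `userPurchasedItems` and `allKeywords`, which the session does not define. The sketch below fills them in with assumptions so the loop can be run end to end: `PurchasedItem` is a hypothetical type, `allKeywords` is assumed to be a `Set<String>`, and the keyword values are made up.

```swift
// Hypothetical record of one past order; the session does not show this type.
struct PurchasedItem {
    let meal: String
    let keywords: [String]
}

// Same feature function as in the session's snippet, repeated for self-containment.
func featuresFromMealAndKeywords(meal: String, keywords: [String]) -> [String: Double] {
    let featureNames = keywords + keywords.map { meal + ":" + $0 }
    return featureNames.reduce(into: [:]) { features, name in features[name] = 1.0 }
}

// Assumed sample data: every keyword known to the app, and a single lunch order.
let allKeywords: Set<String> = ["tomato", "mozzarella", "pizza", "curry", "coconut"]
let userPurchasedItems = [
    PurchasedItem(meal: "lunch", keywords: ["tomato", "mozzarella", "pizza"])
]

var trainingKeywords: [[String: Double]] = []
var trainingTargets: [Double] = []

for item in userPurchasedItems {
    // Positive example: the keywords of the dish that was ordered.
    trainingKeywords.append(
        featuresFromMealAndKeywords(meal: item.meal, keywords: item.keywords))
    trainingTargets.append(1.0)

    // Negative example: every known keyword that the ordered dish did not have.
    let negativeKeywords = allKeywords.subtracting(item.keywords)
    trainingKeywords.append(
        featuresFromMealAndKeywords(meal: item.meal, keywords: Array(negativeKeywords)))
    trainingTargets.append(-1.0)
}

print(trainingTargets)                    // [1.0, -1.0]
print(trainingKeywords[1].keys.sorted())  // ["coconut", "curry", "lunch:coconut", "lunch:curry"]
```

Each order therefore contributes two rows: one built from the dish's own keywords with target 1.0, and one built from the remaining keywords with target -1.0.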

14:37 - Training a linear regressor model

    import CreateML
    import TabularData

    // Create the training data.
    var trainingData = DataFrame()
    trainingData.append(column: Column(name: "keywords", contents: trainingKeywords))
    trainingData.append(column: Column(name: "target", contents: trainingTargets))

    // Create the model.
    let model = try MLLinearRegressor(trainingData: trainingData, targetColumn: "target")

14:58 - Making a prediction

    // Set up the data to run an inference on.
    var inputData = DataFrame()
    inputData.append(column: Column(name: "keywords", contents: dishKeywords))

    // Call predictions on the trained model with the data.
    let predictions = try model.predictions(from: inputData)
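
The session shows recommendations in ranked order but not how the predicted scores become that ranking. One plausible approach, sketched here as an assumption rather than the demo app's actual code, is to pair each dish with its predicted score and sort in descending order. `ScoredDish`, `topRecommendations`, and the score values are all hypothetical; in a real app the scores would be read out of the `predictions` column.

```swift
// Hypothetical pairing of a dish name with its predicted preference score.
struct ScoredDish {
    let name: String
    let score: Double
}

/// Hypothetical helper: returns the `limit` highest-scoring dishes, best first.
func topRecommendations(dishes: [String], scores: [Double], limit: Int) -> [ScoredDish] {
    precondition(dishes.count == scores.count, "one score per dish is expected")
    let ranked = zip(dishes, scores)
        .map { ScoredDish(name: $0.0, score: $0.1) }
        .sorted { $0.score > $1.score }
    return Array(ranked.prefix(max(0, limit)))
}

// Made-up scores standing in for the regressor's output.
let dishes = ["Yellow curry", "Margherita pizza", "Caprese sandwich"]
let scores = [-0.4, 0.9, 0.6]

for dish in topRecommendations(dishes: dishes, scores: scores, limit: 2) {
    print(dish.name, dish.score)
}
```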
