
Introducing enterprise APIs for visionOS

Find out how you can use new enterprise APIs for visionOS to create spatial experiences that enhance employee and customer productivity on Apple Vision Pro.

Chapters

- 0:00 - Introduction

- 2:36 - Enhanced sensor access

- 11:38 - Platform control

- 18:39 - Best practices

Resources

- Building spatial experiences for business apps with enterprise APIs for visionOS

- Forum: Business & Education

Related Videos

WWDC24


Hi, my name is Kyle McEachern. I’m an engineer on the Enterprise team for Vision Pro and I’m excited to talk to you today about a set of features we’re collectively calling Enterprise APIs for visionOS. Since the launch of Vision Pro, we’ve been hearing from our enterprise customers about how they'd love additional functionality to let them build the apps that would really change how they do business. Today, I'm happy to introduce the new functionality coming to visionOS with these Enterprise APIs. Now, there’s a wide range of enterprise environments where Vision Pro can help make work easier, more productive, and more accurate.

Including new spatial experiences for enhanced collaboration and communication.

Creating guided work activities to make complex tasks simpler.

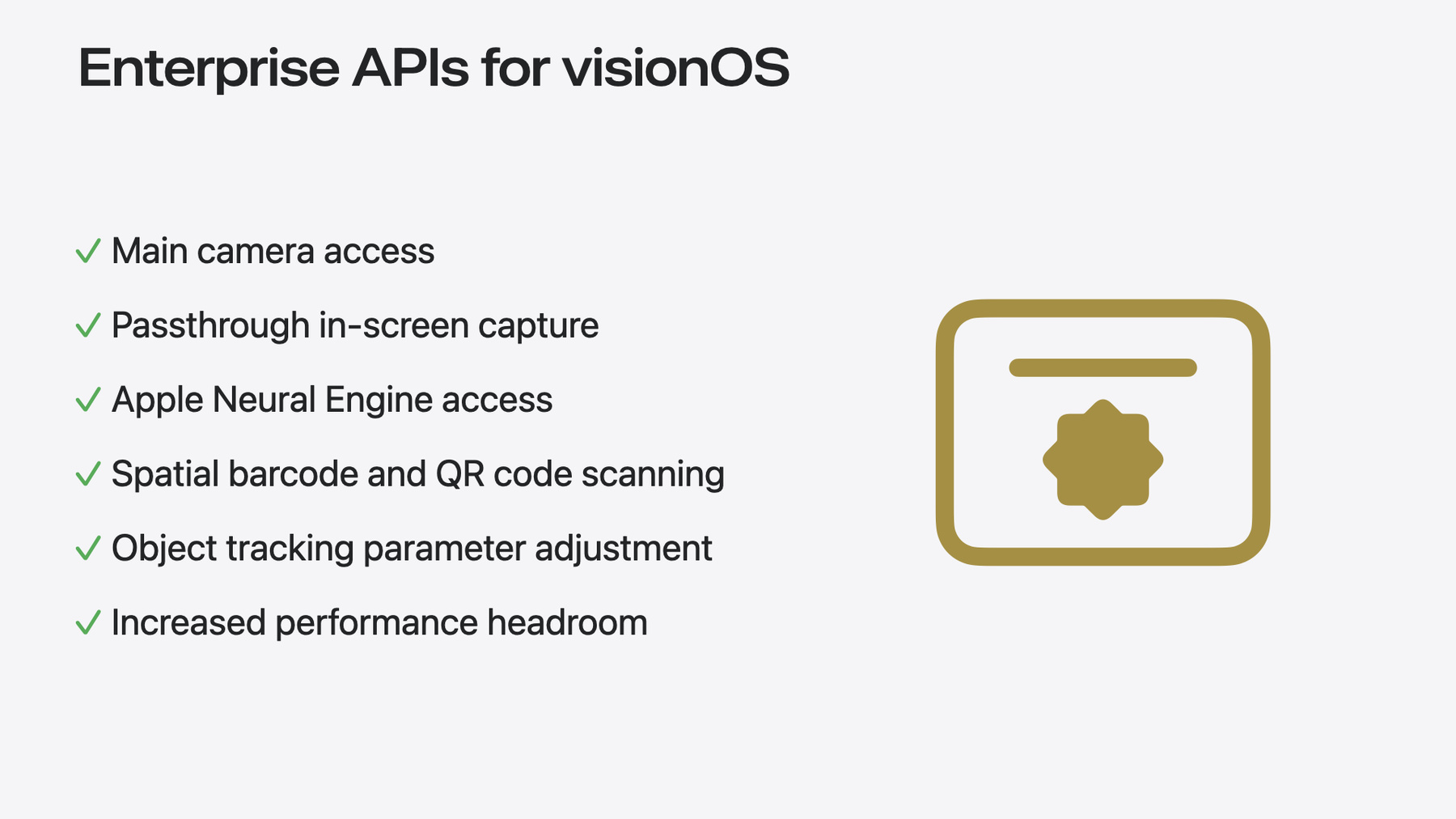

Or enabling entirely new spatial experiences that previously weren't possible. To support these and more, this year we’re providing a collection of six new APIs that will unlock exciting new capabilities for enterprise customers and their apps.

Sounds amazing, right? Now, before I go through the APIs in detail, it’s important to understand that while these APIs support a wide range of uses across many industries, they also provide a far greater level of access to device capabilities than existing APIs. And because at Apple we believe that privacy is a fundamental human right, we’ve designed them with privacy in mind.

To ensure they are only used in appropriate situations, a managed entitlement is required, along with a license file tied to your developer account that enables the entitlement for your apps.

And since these APIs are intended only for enterprise use, the entitlements are only available for proprietary in-house apps, or for apps custom-made for other businesses and distributed privately through Apple Business Manager to those organizations’ users.

These six APIs fall into two categories. The first is made up of APIs that provide enhanced sensor access and improve the visual capabilities of Vision Pro, including access to the main camera, improved capture and streaming, and enhanced functionality through the camera.

And the second category is focused on platform control, with advanced machine learning capabilities via Apple Neural Engine, enhanced object tracking capabilities, and the ability to tune the performance of your apps to get even more compute for intensive workloads. Today we're going to go over these categories to discuss the six new APIs, how they're implemented, and example use cases for them, then wrap up with some best practices. So let's start with that first category, enhanced sensor access.

One of the most common requests we get is for access to the main camera, so that's where I'm kicking things off. With this new API enabled, your app can now see the environment around Vision Pro. This API gives apps access to the device's main camera video feed for use in your app.

By analyzing and interpreting this feed, and then deciding what actions to take based on what's seen, apps can unlock many enhanced spatial capabilities.

For example, this could be used on a production line, using the main camera feed and a computer vision algorithm for anomaly detection to quickly identify parts that don't meet quality standards and highlight them so they can be replaced with good ones. So now, let's dive in and look at how to get access to the main camera feed and embed it in an app.

With this "main-camera-access.allow" entitlement and a valid Enterprise License file built with your app, we can utilize the API and get the video feed.

Once we're set up with our entitlement, we'll set up our core variables. We start off by getting the supported video formats for the "main" camera type, so we can get the feed from the main camera, and by creating a CameraFrameProvider, an ARKitSession, and a CVPixelBuffer so we can store and display the feed.

Then we make sure to request the user's authorization for main camera access, and then we pass our CameraFrameProvider into the ARKit session, and run it.

Once that's done, we can pull video frames from the CameraFrameProvider by requesting its CameraFrameUpdates for that "main" format, and store them in a variable to access. Finally, for each frame we receive, we take the sample for the ".left" position, since the main camera we're using is the one on the user's left side, and put it into our pixel buffer. At that point, we can use it however we'd like in our app! Simple as that. Access to the main camera unlocks so much potential for enterprise apps, only a few examples of which I've highlighted here, and it could be truly impactful across a wide variety of industries, so I'm really excited to be able to talk about it today.

Next, let's talk about including passthrough video in screen captures. Today, when you take a screen capture on Vision Pro, all of the windows the user has open are captured, but the background is removed instead of showing the passthrough video feed of the user's surroundings. Now, you can capture and share the user's entire view inside Vision Pro.

With this API, we are bringing that full, combined spatial view to screen capture in visionOS, including the passthrough camera's feed. It's all there, exactly what the user is seeing, to capture and save, or to stream via an app to other users on separate devices. To better protect user privacy when capturing the user's entire view, including open apps, this feature requires a Broadcast Upload Extension to receive the feed, along with visionOS’s built-in broadcast feature through ReplayKit. This means the user needs to explicitly use the system’s "Start Broadcast" button each time they start a screen capture or share.
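Because the captured view reaches your app through a Broadcast Upload Extension, the code that receives it lives in that extension's principal class. The following is a minimal sketch, assuming the standard ReplayKit RPBroadcastSampleHandler subclass that a Broadcast Upload Extension uses on Apple platforms; the class name FullViewSampleHandler and the send(_:) upload step are hypothetical placeholders, not part of the enterprise API.

```swift
import ReplayKit
import CoreMedia

// Principal class of a Broadcast Upload Extension (hypothetical name).
// ReplayKit only delivers buffers after the user taps "Start Broadcast".
final class FullViewSampleHandler: RPBroadcastSampleHandler {
    // Number of video frames received since the broadcast started.
    private(set) var videoFrameCount = 0

    override func broadcastStarted(withSetupInfo setupInfo: [String: NSObject]?) {
        videoFrameCount = 0
        // Open a connection to your own streaming backend here (app-specific).
    }

    override func processSampleBuffer(_ sampleBuffer: CMSampleBuffer,
                                      with sampleBufferType: RPSampleBufferType) {
        switch sampleBufferType {
        case .video:
            // Per the session, with the include-passthrough entitlement these
            // frames show the passthrough view with visionOS windows on top.
            videoFrameCount += 1
            send(sampleBuffer)
        case .audioApp, .audioMic:
            break
        @unknown default:
            break
        }
    }

    override func broadcastFinished() {
        // Close the streaming connection here (app-specific).
    }

    // Hypothetical placeholder for app-specific encoding and upload.
    private func send(_ sampleBuffer: CMSampleBuffer) { }
}
```

Keeping the upload logic in the extension, rather than in the app, matches the privacy model described above: nothing is captured until the user starts the broadcast from the system UI.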

For example, this could be used by a technician in the field who calls an expert back at the office. They can share their actual view, including both the passthrough camera and the visionOS windows on top of it, with ‘see what I see’ functionality. They can then work together with that expert, hands-free, to get feedback that matches exactly what they're experiencing. So how do we implement this? Let's walk through it. As with the Main Camera API, we add our "include-passthrough" entitlement, which gives us access to the combined spatial view with the passthrough camera when taking a screen capture. And that's it! When an app with this entitlement captures the system's screen, the system automatically replaces the normally black background with the passthrough camera's view, enabling this see-what-I-see capability in your captures. As simple as that! Gotta love a one-step change. With this API, apps can let users debug remotely, or provide expert guidance to and from workers in different locations, extending how much a knowledgeable individual can help in different scenarios. I wish I'd had this one a couple of months ago when I was trying to teach myself how to solder!

And finally, we have spatial barcode and QR code scanning. Vision Pro can now automatically detect and parse barcodes and QR codes, allowing for custom app functionality based on the detected codes.

When a QR code or barcode is detected, the app receives information about the type of code that was detected, its spatial position relative to the user, and the payload content of that code. This has countless potential use cases, just as there are countless potential applications of QR codes and barcodes.
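To make those three pieces of information concrete, here is a small app-side sketch that uses them for a warehouse-style check: is the code closest to the user carrying the item number we expect? The DetectedCode type, its fields, and the helper functions are hypothetical and independent of ARKit; in a real app the position would be derived from the detected anchor's transform and the user's position.

```swift
// Hypothetical app-side model of one detection: type, position, payload.
struct DetectedCode {
    enum Symbology { case code39, qr, upce }
    let symbology: Symbology
    let position: SIMD3<Float> // assumed: metres, in the same space as `user`
    let payload: String
}

// Straight-line distance between the user and a detected code.
func distance(from user: SIMD3<Float>, to code: DetectedCode) -> Float {
    let d = code.position - user
    return (d.x * d.x + d.y * d.y + d.z * d.z).squareRoot()
}

// True when the code nearest the user carries the expected payload.
func closestCodeMatches(_ codes: [DetectedCode],
                        user: SIMD3<Float>,
                        expectedPayload: String) -> Bool {
    guard let closest = codes.min(by: {
        distance(from: user, to: $0) < distance(from: user, to: $1)
    }) else {
        return false // nothing detected yet
    }
    return closest.payload == expectedPayload
}
```

Picking the nearest code is one simple way to decide which package the worker is looking at; an app could equally use gaze or a selection gesture.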

As one example, this could be used in a warehouse, allowing a worker who is retrieving a requested item to simply look at a package's barcode to verify that it's the correct item. No more identifying items by hand, or carrying around a scanner that makes moving items awkward. For this API, we use the "barcode-detection.allow" entitlement. Now, let's take a look at how we set up barcode detection in an app.

First, we set up our ARKitSession, and make sure we have the .worldSensing permission enabled, since it's needed to detect Barcodes.

Then we create a BarcodeDetectionProvider, which is new to visionOS. Just like the existing HandTrackingProvider, it handles the nuts and bolts of finding and tracking these barcodes. In this case, by passing in these three symbologies, we're looking for Code 39 barcodes, QR codes, and UPC-E barcodes. The documentation lists all the symbologies supported by this Vision Pro enterprise API.

Then we kick off our ARKit session, connected to this BarcodeDetectionProvider. And then we wait for updates to that provider, and handle them when they arrive.
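The updates arrive as three kinds of events: added, updated, and removed. The bookkeeping an app does in response can be sketched without ARKit. In this minimal sketch, CodeEvent and HighlightRegistry are hypothetical app types that mirror those events, keyed by an identifier such as an anchor's ID; real code would switch on the provider's anchor update events instead.

```swift
import Foundation

// Hypothetical mirror of the three barcode update events.
enum CodeEvent {
    case added(UUID, SIMD3<Float>)   // new code: identifier and position
    case updated(UUID, SIMD3<Float>) // code moved: identifier and new position
    case removed(UUID)               // code no longer visible
}

// Tracks where a highlight box should be drawn for each visible code.
struct HighlightRegistry {
    private(set) var highlights: [UUID: SIMD3<Float>] = [:]

    mutating func handle(_ event: CodeEvent) {
        switch event {
        case let .added(id, position), let .updated(id, position):
            // Create a highlight for a new code, or move an existing one.
            highlights[id] = position
        case let .removed(id):
            // Clean up the highlight once the code is out of view.
            highlights[id] = nil
        }
    }
}
```

Treating "added" and "updated" the same way keeps the UI state idempotent: whichever event arrives, the highlight ends up at the code's latest position.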

The API sends an update with the "added" event when a new code is detected, where we can, for instance, highlight the code; with the "updated" event when a code's location has moved, letting us do things like update our UI to track the code; and with the "removed" event when we can no longer see the code, allowing us to clean up our UI. And that's it! Spatial barcodes, auto-detected and available in our app. This could have so many uses in logistics, error checking, identifying parts, or providing contextual data to users at specific points.

So now I'd like to share a brief demo showing just one example of how these new APIs could be used to create an experience that previously wasn't possible. I'm trying, and failing, to put together a new electronic component I'm working on, and I need some help from my friend Naveen in our support center.

Here I am recently, trying valiantly to assemble some electronics that I'm supposed to have ready for a presentation at a meeting that's coming up later in the day. Unfortunately I'm just stuck. Thankfully however, I've got my enterprise's "Support Center" app, which lets me get live help in just these kinds of situations! When I open up the Support Center, I can connect to a support agent to get live help. Looks like it's my friend Naveen back at HQ! I'm sure he'll be able to help. Naveen wants to take a look at what I'm working on, and asks me to enable my camera to share with him. By clicking on "Enable Camera Sharing", I can share my Main Camera feed with Naveen. I get a preview of it here in the window, so I know what I'm looking at and sending to him.

Looks like it's not an easy kit to identify on sight, so Naveen wants to take a look at the instructions for the kit. There’s a code on the box, which should pull up the instructions for the right kit automatically. I click on "Enable Barcode Scanning" in the app, then grab the box and look at the code on the side, and there we go, the instructions are right there in the app with me! I let Naveen know I found the instructions, and he asks me to share my whole screen with him, so he can see the instructions and my view all at once, and really see what I'm seeing.

To do this, I click on "Share Full View", choose the app's name in the menu, and hit "Start Broadcast". Now, Naveen's getting my whole view. Let's check in on what he's seeing on his end.

Here we can see Naveen's view, with my whole view being shared with him in his Support Center agent view. He can take a look at my kit and compare it to the instructions, and he sees that I've missed the last step: I need to connect a wire to Pin 45 to finish the job. He lets me know.

Back on my end, I put the piece into the right spot, and bingo! My status light turns green, and our kit is good to go! Naveen says goodbye, and I can close the app now that I've gotten help and I'm ready for my big meeting later. Phew!

Now of course, this demo put these new features together into one app in a specific way, just to give a brief example of how they could be used. In your case, you may only need one or two of these features, and your usage may be entirely different. We’ve designed them to be flexible and powerful, ready for enterprise environments across industries.

And now let's move on to our second category, platform control. First up is access to Apple Neural Engine on Vision Pro. This allows machine learning tasks to be executed on-device through Apple Neural Engine, rather than only on the CPU and GPU as by default.

This enhances the existing Core ML capabilities of Vision Pro by allowing the device to run models that require, or are enhanced by, access to Apple Neural Engine.

For example, when implementing the feature we discussed for main camera access, with Vision Pro identifying products with defects, a customer may implement their custom machine learning model for anomaly detection, which has features that require Apple Neural Engine in order to fully function on the device so everything can be detected locally.

Apple silicon powers all of our machine learning platforms, with models running on our CPUs, GPUs, and Apple Neural Engine.

Without this entitlement, models in visionOS can run only on Vision Pro's CPU and GPU.

With this entitlement, Apple Neural Engine is unlocked as a compute device for your apps to run models on, enabling powerful Machine Learning capabilities.

When this is enabled, Core ML dynamically decides which processing unit a model will run on most efficiently, based on a variety of factors, including current resource usage on the device, which compute devices are available, and the specific needs of your model, and directs the model's calculations there.

Let's take a look at how you enable Apple Neural Engine for your models. It's a fairly simple process. As with all of these APIs, you will need your entitlement and license to be set up. For Apple Neural Engine, it's the "neural-engine-access" entitlement. That entitlement is all that's needed to allow your models to run on the Neural Engine. To verify in your code that you have access to Apple Neural Engine, you can use a snippet like this one, which looks at the "availableComputeDevices" property of the MLModel class and then takes actions based on the results.

Note that these are example method calls an app may use, and not a part of the API.

Then, when you invoke your model, the system will use the Neural Engine if appropriate. If it's unavailable, or the system state makes it less efficient to use, Core ML will fall back to the GPU and/or CPU for the calculations.
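One practical consequence: Core ML can only choose the Neural Engine if the model's configuration leaves it eligible. A minimal sketch, assuming you load models with an MLModelConfiguration; makeModelConfiguration is a hypothetical helper name. The `.all` compute-units setting (also the default) lets Core ML pick among CPU, GPU, and Neural Engine and fall back on its own, whereas `.cpuOnly` or `.cpuAndGPU` would exclude the Neural Engine even with the entitlement in place.

```swift
import CoreML

// Hypothetical helper: a configuration that keeps the Neural Engine eligible.
// `.all` lets Core ML decide per model and fall back to GPU/CPU when needed;
// `.cpuOnly` or `.cpuAndGPU` would rule the Neural Engine out entirely.
func makeModelConfiguration() -> MLModelConfiguration {
    let configuration = MLModelConfiguration()
    configuration.computeUnits = .all
    return configuration
}

// Usage (the model URL is a placeholder for your own compiled model):
// let model = try MLModel(contentsOf: compiledModelURL,
//                         configuration: makeModelConfiguration())
```

Setting the value explicitly mainly documents intent; the point is to avoid restricting compute units elsewhere in the app and silently losing the Neural Engine.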

With this dynamic decision-making system, any machine learning model running in an app with this entitlement will automatically be executed as efficiently as possible. Nice, right?

Next up, let's talk about enhanced known-object tracking through parameter adjustment. Known-object tracking is a new feature in visionOS 2.0 that allows apps to have specific reference objects built into their project, which they can then detect and track within their viewing area. For enterprise customers, we are enhancing this feature with configurable parameters that allow tuning and optimizing object tracking to best suit their use case. This includes changing the maximum number of objects tracked at once; changing the tracking rate of both static objects and dynamic objects, which are moving through the user's field of view; and modifying the detection rate for identifying and locating new instances of these known objects in Vision Pro's field of view.

However, this API works within the same baseline compute budget, so to get the full value from increasing the parameters that best suit your use case, you may need to reduce other parameters to balance out the compute requirements and maintain system health while delivering the functionality you're looking for in your app.

This could be used in an app built for a complex repair environment, with a wide variety of tools and parts needing to be tracked and used, allowing a technician to follow guided instructions on replacing parts on a machine, enhanced with the stored knowledge of this high number of relevant objects, highlighting them in-place as they are needed during the repair.

So what does this look like in practice? Pretty simple, really! Our entitlement for this API is "object-tracking-parameter-adjustment.allow". With that in place, we can set up our object tracking code! First, we set up our TrackingConfiguration.

Then, on that configuration variable, we set our new target values. In this case, we're setting our maximum tracked objects up to 15 from the default of 10.

You don't need to set all of the values each time, just the ones you're changing; we've included them all here to show the options you have.

Then we set up an ObjectTrackingProvider, passing in our reference objects we want to look for, along with our custom TrackingConfiguration we just set up.

These reference objects, just as with all known-object tracking in visionOS 2.0, are ".referenceobject" files. The process for creating those files is linked from the documentation for this session.
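The object tracking snippet for this session passes the values of a reference-object dictionary to the provider. One way to build such a dictionary is to gather the files by name first. A minimal sketch using only Foundation; referenceObjectURLs(in:) is a hypothetical helper, and the step of loading each URL into a ReferenceObject (ARKit offers an async ReferenceObject(from:) initializer on visionOS 2) is left out so the sketch runs anywhere.

```swift
import Foundation

// Hypothetical helper: maps each reference-object file in a folder to its base
// name, e.g. "Wrench.referenceobject" -> "Wrench". The extension check is
// case-insensitive, so ".referenceObject" and ".referenceobject" both match.
func referenceObjectURLs(in directory: URL) throws -> [String: URL] {
    let files = try FileManager.default.contentsOfDirectory(
        at: directory, includingPropertiesForKeys: nil)
    var result: [String: URL] = [:]
    for file in files where file.pathExtension.lowercased() == "referenceobject" {
        result[file.deletingPathExtension().lastPathComponent] = file
    }
    return result
}
```

Keying by file name makes it easy to map a tracked object back to the part or tool it represents when highlighting it during a repair.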

Then we just start our ARKit session with that ObjectTrackingProvider, and we're all set! Increased maximum objects tracked, just like that. Your use case may require tracking a large number of different objects at once, or detecting objects more often than the default detection rate allows, so we've designed this to be flexible to the needs of our customers, allowing tuning to create the best experiences possible in your app.

And finally, we have app compute settings for increased performance headroom. The new enterprise APIs we've talked about allow customers to push their apps and devices to their limits, and app compute settings go even further. With this entitlement, enterprise developers can get access to even more compute power on their Vision Pro devices, in exchange for a bit more ambient noise from letting the fans run a bit faster to keep the system running smoothly.

In any heavy workload, a developer needs to balance three things: compute power and thermal output, battery life, and user comfort. In its default settings, Vision Pro has been tuned to have the optimal balance of these three for most use cases.

However, this entitlement allows for increasing the compute power of the CPU and GPU, in exchange for slight reductions in the other two areas, if there is a specific use-case where that trade-off makes sense for an enterprise.

This way, customers can really get every ounce of performance out of Vision Pro and unlock all the potential of their enterprise apps. For example, a customer might want to review a highly complex, high-fidelity 3D design by loading it into their space and walking around it, and find that rendering and performance improve with app compute settings enabled. To enable this setting, you simply put the "app-compute-category" entitlement in place, and the system will automatically know that it can use the enhanced compute capabilities and run the fans as needed to keep the device running smoothly. This setting is app-wide and isn't turned on and off by app code. With this entitlement in place, the system automatically adjusts CPU and GPU power usage, and the fans, whenever the workload needs it.

Since this setting is applied app-wide, and is not controlled by invoking a specific method in your code, keep in mind that the system enables and disables it dynamically based on Vision Pro's status and workloads at any given time. Because of this dynamic behavior, your experience may vary between similar situations.

Lastly, let's talk about best practices when implementing these new enterprise APIs on visionOS.

Always be mindful of environmental safety, and ensure that people using Vision Pro are in an area where they can perform all their work safely while wearing the device.

Investigate the existing APIs, and make sure that what's needed for your specific use case isn't already covered. Quite a lot of great functionality to build amazing experiences is already included with the existing SDK! Only request the specific entitlements that you're going to need for your app. We want to ensure that developers have what they need to accomplish their enterprise app development goals, while keeping user privacy and purpose limitation in mind.

And finally, ensure employee privacy. This additional level of access, in particular through the APIs providing direct access to the main camera feed, creates scenarios that need to be considered in your workplace and with your employees. Ensure that you are building just what is needed for your enterprise needs, while respecting the privacy of those in the work environment.

So, that’s my brief overview of the enterprise APIs for visionOS. I hope you’ll find that one or more of these brand new APIs will help you close the gap between your idea of the perfect spatial app for your needs and its implementation.

These APIs unlock powerful new features for both enhanced sensor access and improved platform control. We designed them to be "building blocks" that can be used in a wide variety of ways, allowing for the creation of apps that are custom fit for your enterprise business needs, and I hope you're as excited as we are to see what you can build with them. But before I go, I’d like to leave you with some final guidance. First, take a look at the documentation for these APIs. You’ll find a link to the docs in the resources associated with this session. And when you have decided on the one or more that fit your needs, be sure to validate that you have a use case that meets the eligibility requirements: that is, an in-house enterprise app designed for your employees or for the employees of your customer. And with those items checked, apply for the appropriate entitlement associated with your desired API. The information for how and where to apply can also be found in this session's resources.

We’d also love to receive your feedback on these new APIs and on features you’re interested in seeing in subsequent versions of visionOS. And finally, be sure to check out this year's session titled "What’s new in device management", to learn the latest techniques for managing Apple Vision Pro in your enterprise. Thank you for joining me in exploring enterprise APIs for visionOS!


3:36 - Main Camera Feed Access Example

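```swift
// Main Camera Feed Access Example
import ARKit
import CoreVideo

final class MainCameraFeed {
    private let arKitSession = ARKitSession()
    private let cameraFrameProvider = CameraFrameProvider()
    // Latest frame from the main camera, ready to display or analyze
    private(set) var pixelBuffer: CVPixelBuffer?

    func start() async {
        // Video formats supported by the main camera on the left side
        let formats = CameraVideoFormat.supportedVideoFormats(for: .main, cameraPositions: [.left])

        // Request the user's authorization for main camera access
        let authorization = await arKitSession.requestAuthorization(for: [.cameraAccess])
        guard authorization[.cameraAccess] == .allowed else { return }

        do {
            try await arKitSession.run([cameraFrameProvider])
        } catch {
            return
        }

        guard let format = formats.first,
              let cameraFrameUpdates = cameraFrameProvider.cameraFrameUpdates(for: format) else { return }

        for await cameraFrame in cameraFrameUpdates {
            // The main camera is the one on the user's left side
            guard let mainCameraSample = cameraFrame.sample(for: .left) else { continue }
            self.pixelBuffer = mainCameraSample.pixelBuffer
        }
    }
}
```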

7:47 - Spatial barcode & QR code scanning example

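```swift
// Spatial barcode & QR code scanning example
import ARKit

final class BarcodeScanner {
    private let arkitSession = ARKitSession()

    func start() async {
        // Barcode detection needs world-sensing authorization
        let authorization = await arkitSession.requestAuthorization(for: [.worldSensing])
        guard authorization[.worldSensing] == .allowed else { return }

        // Look for Code 39 barcodes, QR codes, and UPC-E barcodes
        let barcodeDetection = BarcodeDetectionProvider(symbologies: [.code39, .qr, .upce])

        do {
            try await arkitSession.run([barcodeDetection])
        } catch {
            return
        }

        for await anchorUpdate in barcodeDetection.anchorUpdates {
            switch anchorUpdate.event {
            case .added:
                // Call our app method to add a box around a new barcode
                addEntity(for: anchorUpdate.anchor)
            case .updated:
                // Call our app method to move a barcode's box
                updateEntity(for: anchorUpdate.anchor)
            case .removed:
                // Call our app method to remove a barcode's box
                removeEntity(for: anchorUpdate.anchor)
            }
        }
    }

    // App-specific methods (placeholders for your own UI code)
    private func addEntity(for anchor: BarcodeAnchor) { }
    private func updateEntity(for anchor: BarcodeAnchor) { }
    private func removeEntity(for anchor: BarcodeAnchor) { }
}
```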

13:17 - Apple Neural Engine access example

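```swift
// Apple Neural Engine access example
import CoreML

// Example method names (app-defined, not part of the API)
func setCpuEnabledForML(_ enabled: Bool) { }
func setGpuEnabledForML(_ enabled: Bool) { }
func runMyMLModelWithNeuralEngineAvailable() { }

let availableComputeDevices = MLModel.availableComputeDevices
for computeDevice in availableComputeDevices {
    switch computeDevice {
    case .cpu:
        setCpuEnabledForML(true) // Example method name
    case .gpu:
        setGpuEnabledForML(true) // Example method name
    case .neuralEngine:
        runMyMLModelWithNeuralEngineAvailable() // Example method name
    default:
        continue
    }
}
```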

15:39 - Object tracking enhancements example

```swift
// Object tracking enhancements example
import ARKit

// `arkitSession` must be kept alive by the caller; reference objects could come
// from a dictionary, e.g. Array(referenceObjectDictionary.values)
func startObjectTracking(arkitSession: ARKitSession,
                         referenceObjects: [ReferenceObject]) async throws {
    var trackingParameters = ObjectTrackingProvider.TrackingConfiguration()
    // Increasing our maximum items tracked from 10 to 15
    trackingParameters.maximumTrackableInstances = 15
    // Leaving all our other parameters at their defaults
    trackingParameters.maximumInstancesPerReferenceObject = 1
    trackingParameters.detectionRate = 2.0
    trackingParameters.stationaryObjectTrackingRate = 5.0
    trackingParameters.movingObjectTrackingRate = 5.0

    let objectTracking = ObjectTrackingProvider(
        referenceObjects: referenceObjects,
        trackingConfiguration: trackingParameters)
    // run(_:) is async and can throw
    try await arkitSession.run([objectTracking])
}
```
