
Keep up with the keyboard

Each year, the keyboard evolves to support an increasing range of languages, sizes, and features. Discover how you can design your app to keep up with the keyboard, regardless of how it appears on a device. We'll show you how to create frictionless text entry and share important architectural changes to help you understand how the keyboard works within the system.

Chapters

- 0:00 - Introduction

- 1:29 - Out of process keyboard

- 3:47 - Design for the keyboard

- 14:06 - New text entry APIs

- 15:06 - Key takeaways

Resources

- Adding a search interface to your app

- FocusState

- SafeAreaRegions

- Human Interface Guidelines: Keyboards

- Adjusting your layout with keyboard layout guide


-

Search this video…

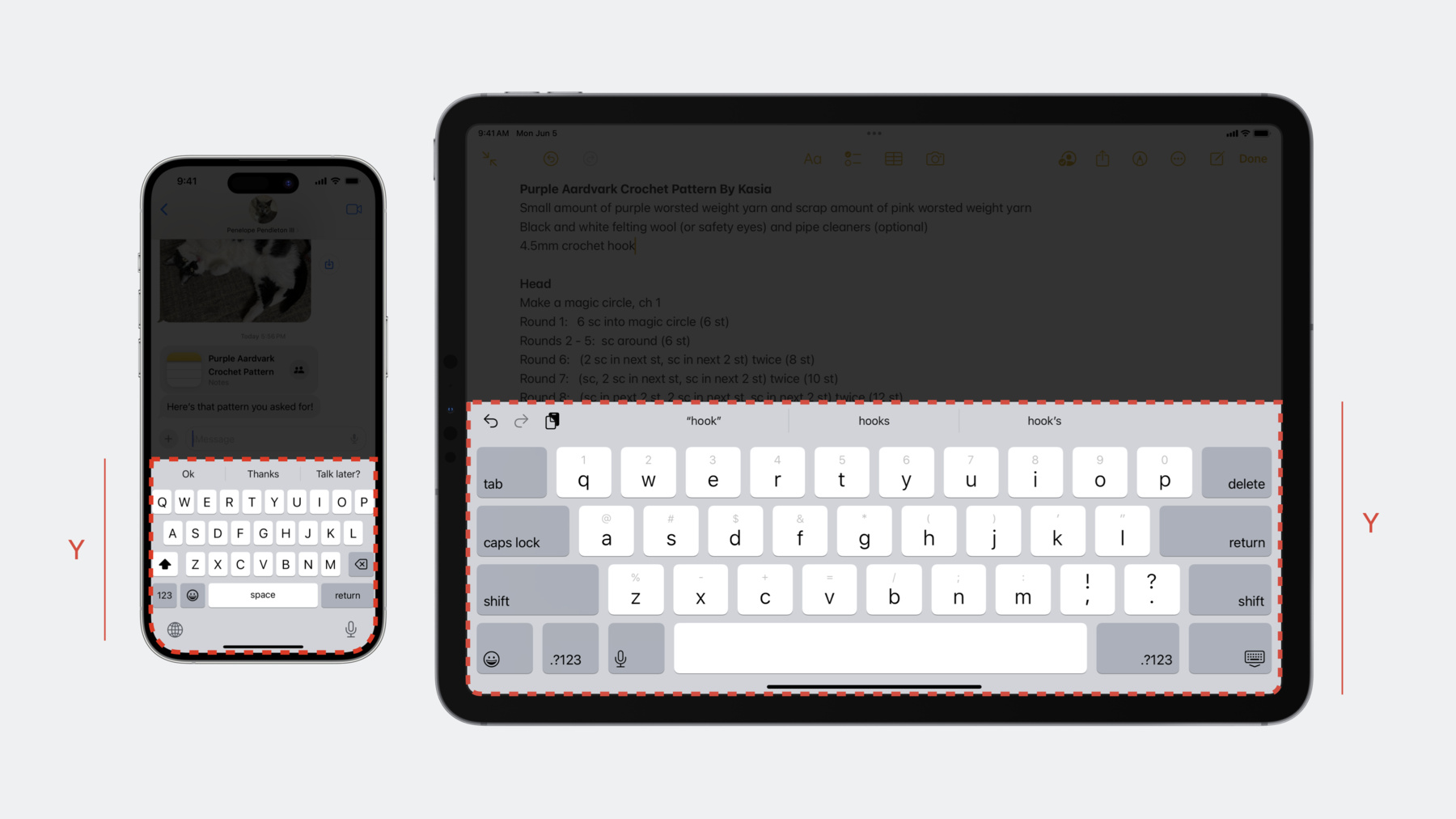

♪ ♪ Spencer: Hello, everyone. My name is Spencer Lewson. Today I'd like to tell you about how the keyboard has changed over the last few years and how you can design your app to "keep up with the keyboard." As you all know, the iPhone's keyboard was originally introduced back in 2007, and it's evolved substantially since then. It now supports many different languages, each of which may have a different size for its layout. And of course, the keyboard comes on many different devices as well. But just as the keyboard has evolved, so has the system, which has added exciting features like multitasking and the floating keyboard, allowing the keyboard to transcend the bounds of the app.

Last year, we introduced Stage Manager, which unlocks a whole new level of productivity on iPad. With multiple scenes running on multiple displays, the use of a hardware keyboard and mouse is now more appealing than ever. So that's why today we'd like to tell you about how we're making this wide variety of keyboard scenarios possible with a rearchitecture of the keyboard and how that may affect your app. We'd also like to share some tips and tricks for designing your app to work as seamlessly with the keyboard as possible, with as little effort as possible. And finally, we'd like to introduce some exciting new capabilities in the world of text entry. So let's talk about the new out of process keyboard.

Taking the keyboard out of your app process allows us to improve security and ensure the privacy of what users type. It also frees up memory in your apps and throughout the system, as there's now only one keyboard, instead of multiple instances running in multiple apps. And this new architecture allows us to design for the future and implement exciting new functionality. So let's talk a bit about how that works. Prior to iOS 17, the keyboard's views and logic were executing within your app's process. But new on iPhone in iOS 17, the keyboard has been moved to its own process, running almost completely outside your app. I'm excited to show you how all of this works, but first let's talk about how it works in process so we can see the differences.

With in process, your app would first request the keyboard, such as by invoking becomeFirstResponder in response to a touch, and this would kick off a series of synchronous pieces of work to initialize all the views. Once that was completed, the system would perform the animations to bring up the keyboard. Your app would then sit idle or perform any app side work until a touch event came in and a text insertion was generated.

With out of process, this now works a bit differently. When your app requests the keyboard, again, such as by invoking becomeFirstResponder, your app will perform some initial computations and then the keyboard process will asynchronously initialize its UI. While that happens, the app will sit idle or perform app side work. Once the keyboard process is ready, it will bring up the keyboard and coordinate the animations between the two processes. Now that the keyboard UI is up, it will await any touch events that occur within the bounds of the keyboard and convert them into text insertions for your app.

For the majority of apps, these changes are completely transparent and require no adoption from you. However, aspects of this new asynchronous approach now exist throughout the entire keyboard and can introduce some slight differences in timing. So if your app is especially sensitive to the timing of text entry, selection changes, or any other text related operations, you should keep this new architecture in mind.

So now that we've talked a bit about the diversity of typing on iOS, let's talk about some of the relatively new scenarios to account for when designing your app. Of course, we're all familiar with the most common use case: a full screen app with a keyboard. This is a relatively straightforward use case where your app and the keyboard are both full screen, making adjusting for the keyboard as simple as moving up your views by a value that happens to be the height of the keyboard.

However, with Stage Manager, the system is moving away from that model. With advanced multitasking, apps aren't necessarily full screen. That means when the keyboard comes up, some special care needs to be taken to adjust the app's views correctly. That's because the keyboard's scene and the app's scene no longer line up, and some extra transformations need to take place to adjust the app appropriately for its context.
For instance, in this scenario, the app's adjustment isn't actually Y, the height of the keyboard. It needs to adjust by the intersection of the keyboard and your app, shown as Y'. And now there may not even be a single adjustment to calculate, as you may have multiple scenes on screen, each with different calculations and adjustments necessary.

There are also some recently introduced scenarios with the hardware keyboard. When the hardware keyboard is attached, the system will present an assistant toolbar in the center of the screen. When this full sized toolbar is present, it acts as part of the keyboard, so your views should be adjusted out of its way. Using a flick gesture, this toolbar can also be minimized. When outside of Stage Manager, we're preserving existing behavior where this mini toolbar does not act as part of the keyboard and will overlap your views. Users can access the content underneath by dragging the toolbar to the other side of the screen. However, when in Stage Manager, the mini toolbar does act as part of the keyboard, and depending on your use case, you can choose to update scroll offsets, push up input accessory views, and make any other layout adjustments as you'd like.

Now, we know that there are a lot of scenarios and nuance to account for here, but the good news is that with the right APIs, the system does most of the work for you. So let's talk about the keyboard layout guide. You may be familiar with the keyboard layout guide, which was introduced in iOS 15. It provides an easy auto layout guide that automatically adjusts for the keyboard, and we've been adding to it over the last year. In fact, it's now used by some complex Apple apps, such as Spotlight and Messages. It's the recommended approach because adopting it can be as simple as one line to add a constraint between your views and the guide. Now let's talk about exactly what this gets you.
The existing default behavior is as follows: the keyboard layout guide follows the keyboard when it's onscreen and docked. That is to say, when the keyboard is touching the bottom of the screen. If the keyboard is not docked, such as when it's floating on iPad, the guide's height will match the view's bottom safe area. Finally, the guide will track any keyboard dismiss gestures once the touch point intersects with the guide. I'm excited to tell you that we've expanded the customization options in iOS 17 to allow you to change these to get the exact behavior that you want. There are now three properties on UIKeyboardLayoutGuide.

First, followsUndockedKeyboard. By default, the guide will treat the floating keyboard or mini toolbar the same as an offscreen keyboard. However, when set to true, the guide will continue to follow the keyboard even when it's floating, as long as it's over your app's window.

Next is usesBottomSafeArea. By default, the keyboard layout guide will track the height of the safe area when the keyboard is dismissed. But when usesBottomSafeArea is set to false, the guide will instead track the bottom of the view, which in this case is the bottom of the screen. When might this be useful? Well, this will allow you to extend your background to cover the bottom of the screen when the keyboard is dismissed and also adjust for when the keyboard is brought up, behaving similarly to an InputAccessoryView. And actually, that's a really interesting use case for this property, so let's talk about how that could work.

Here's the code to get that simple input accessory-like view with a text field that stays above the bottom safe area and a backdrop that extends all the way to the bottom of the view only when the keyboard is dismissed. Note, we'll only be dealing with the vertical constraints here, because they're the interesting ones in this case. First, let's set usesBottomSafeArea to false.
Then, let's tie the top of the text field to the top of the backdrop, using system spacing between the two, just to give it a little padding. Next, make sure the text field is always above the keyboard by constraining the top of the guide to the bottom of the text field, with at least system spacing. The greater-than-or-equal relation is important here. When the keyboard is offscreen, the text field is going to need enough flexibility to stay above the bottom safe area insets. Let's also tie the guide's top anchor to the bottom of the backdrop. This will ensure the backdrop goes all the way to the bottom when the guide is offscreen, but only because we've set usesBottomSafeArea to false. Finally, constrain the bottom safe area to the bottom of the text field, plus system spacing. And again, we're using a greater-than-or-equal constraint here to make sure it's flexible enough to follow the keyboard when it comes up. And with that, you have an adaptive view that has a backdrop that extends all the way to the bottom of the view, but adjusts to keep the text field system spacing above the keyboard and the backdrop at the top of the keyboard, just like an input accessory view might.
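
The session shows no snippet for followsUndockedKeyboard, so here is a minimal sketch of how it might be adopted. The view controller and its `toolbar` view are hypothetical names chosen for illustration; only `keyboardLayoutGuide` and `followsUndockedKeyboard` come from the talk.

```swift
import UIKit

/// Hypothetical view controller; `toolbar` is an illustrative name, not from the session.
final class FloatingKeyboardViewController: UIViewController {
    let toolbar = UIView()

    override func viewDidLoad() {
        super.viewDidLoad()
        toolbar.backgroundColor = .secondarySystemBackground
        toolbar.translatesAutoresizingMaskIntoConstraints = false
        view.addSubview(toolbar)

        // Ask the guide to keep tracking the keyboard while it floats over this window.
        view.keyboardLayoutGuide.followsUndockedKeyboard = true

        NSLayoutConstraint.activate([
            toolbar.leadingAnchor.constraint(equalTo: view.leadingAnchor),
            toolbar.trailingAnchor.constraint(equalTo: view.trailingAnchor),
            toolbar.heightAnchor.constraint(equalToConstant: 44),
            // The toolbar sits directly on top of the guide.
            toolbar.bottomAnchor.constraint(equalTo: view.keyboardLayoutGuide.topAnchor)
        ])
    }
}
```

With the property left at its default of false, the same constraints would keep the toolbar at the bottom of the view whenever the keyboard is floating. A full-width bar pinned to a floating keyboard may not be the layout you want, so treat this only as a starting point.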

Third, we have keyboardDismissPadding. This adjusts the parameters of the scroll to dismiss gesture. If you've tried to create an input accessory-like view in the past using the keyboard layout guide, you may have noticed that the keyboard dismiss gesture doesn't begin until the touch intersects the keyboard. Let's use this new property to fix that.

The keyboardDismissPadding property allows you to specify padding above the keyboard that should respond to the keyboard dismiss gesture. This is relatively straightforward. Simply grab the height of your view however you wish and set the property. Done. Now the keyboard dismiss gesture begins when the touch intersects with your view.

Of course, UIKit isn't the only framework that apps are built with. There's also SwiftUI. And fortunately, SwiftUI automatically handles the common cases for you. With SwiftUI, the keyboard is included as part of the safe area, which when the keyboard is dismissed, will track the small home affordance at the bottom of the screen. When the keyboard is brought up, the system will animate and adjust the safe area for you, resizing your views automatically, so there's not really any keyboard code to write at all. However, you may need to do some work on your layouts to make sure that your views are resizing or repositioning in the way you want. There are a lot of great resources about SwiftUI, more than I could ever list, so to learn more about this, take a look at the documentation linked below.

Now, let's talk about a more manual way of integrating the keyboard, the keyboard notifications. Before SwiftUI and keyboard layout guide, the only way to integrate the keyboard into your application was to listen for a set of keyboard notifications (willShow, didShow, willHide, didHide) and then manually adjust your layout based on the frame and animation information in the notification. These are still around, but they require more careful handling, since the system isn't doing the work for you. And with the introduction of Stage Manager, we've noticed that a commonly used pattern for that handling no longer works 100% of the time. This pattern usually focuses on receiving a keyboard notification and using the raw value from the height of the keyboard directly.

Now remember earlier when we were discussing the nuance of how the height of the keyboard and your app's position on screen interact? Let's discuss how this works with notifications. Each notification specifies the expected frame of the keyboard relative to the screen coordinates. If the screen's coordinate space and your app's coordinate space match, such as when the app is full screen, the raw height value included in the notification will coincidentally result in your views adjusting as expected. However, when the screen's coordinate space and your app's coordinate space differ, this raw height value will no longer be the correct value to adjust your views by. This can result in your views being pushed too high and appearing in the wrong place.

Fortunately, there are a few changes that can be made to your notification handling to make things work smoothly again. In iOS 16.1, the keyboard notifications began including the corresponding UIScreen as the notification's object. First, let's use it to check that the keyboard is appearing on the same screen as our app, otherwise no adjustments will be necessary. Next, let's compute a rect to represent the keyboard's placement relative to your view. We can do this by retrieving the keyboard's expected end frame, the coordinate spaces provided by the notification and your view, and then using them to convert the keyboardFrameEnd into your coordinate space. With this new rect, we can determine the necessary offset for your views by calculating the intersection of your views and the convertedKeyboardFrameEnd. If your views and the keyboard are overlapping, the necessary offset will now be the height of the intersection between your views and the keyboard. And with that, you can adjust your constraints or layouts however you like.

Now, with the new out of process architecture, there may be some small changes in behavior with notifications you should know about. So let's take a moment to talk about that.
Remember this diagram outlining the lifecycle of the keyboard process? Let's zoom in on the animation phase here called "bring up animation." In the in-process architecture, when your app requests the keyboard, the system would synchronously initialize the keyboard UI and then post the notifications and perform the animations. However, in the new out of process architecture, when your app requests the keyboard, the system will asynchronously initialize the keyboard UI and then asynchronously post the notifications and perform the animations. This introduces some slight differences in timing, so if your app is relying on the timing of the notifications as some sort of "callback" from invoking becomeFirstResponder, or perhaps performing significant work on the main thread which could cause the handling of the notifications to be delayed, you should keep this new model in mind, as it may have an effect on your app.

Now that we've reviewed all these tips and tricks to let your users type into your apps as easily as possible, we're excited to introduce a new feature and API that makes text entry even faster. And that's inline predictions.

In iOS 17, the English language keyboard will now provide predictions for your next few words right inline with the text field. These predictions are securely generated on device and only use the contextual information provided in the focused text field. Adopting these predictions is also really easy. Here we have the UITextInputTraits protocol, and as you can see, a new inlinePredictionType property has been added, and it comes with a few options. By default, inline prediction will be active in most text input fields, but will automatically be disabled in fields where predictions aren't appropriate, such as search fields or password fields. And of course, you can also customize the behavior in your app by explicitly setting the property to yes or no.

And with that, let's recap some key takeaways. Please remember to: Design your app to work seamlessly with the keyboard, no matter how it appears. Keep the new out of process keyboard model in mind when writing time-sensitive code. And improve your app's experience by adopting APIs that accelerate text entry. Thanks for keeping up with the keyboard. ♪ ♪


6:21 - Keyboard layout guide

    view.keyboardLayoutGuide.topAnchor.constraint(equalTo: textView.bottomAnchor).isActive = true
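
For context, the one-line constraint above could sit inside a view controller like the following. This is a minimal sketch; the class name `NoteViewController` and the remaining constraints are assumptions added for illustration, not part of the session.

```swift
import UIKit

/// Hypothetical container for the one-line constraint; names are illustrative.
final class NoteViewController: UIViewController {
    let textView = UITextView()

    override func viewDidLoad() {
        super.viewDidLoad()
        textView.translatesAutoresizingMaskIntoConstraints = false
        view.addSubview(textView)

        NSLayoutConstraint.activate([
            textView.topAnchor.constraint(equalTo: view.safeAreaLayoutGuide.topAnchor),
            textView.leadingAnchor.constraint(equalTo: view.leadingAnchor),
            textView.trailingAnchor.constraint(equalTo: view.trailingAnchor),
            // The only keyboard-specific line: the text view ends where the guide begins.
            view.keyboardLayoutGuide.topAnchor.constraint(equalTo: textView.bottomAnchor)
        ])
    }
}
```

Because the guide moves with the keyboard, no notification handling is needed for the text view to resize in this sketch.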

7:56 - usesBottomSafeArea

    // Example of using usesBottomSafeArea to create keyboard and text view aligned with safe area
    view.keyboardLayoutGuide.usesBottomSafeArea = false

    textField.topAnchor.constraint(equalToSystemSpacingBelow: backdrop.topAnchor, multiplier: 1.0).isActive = true
    view.keyboardLayoutGuide.topAnchor.constraint(greaterThanOrEqualToSystemSpacingBelow: textField.bottomAnchor, multiplier: 1.0).isActive = true
    view.keyboardLayoutGuide.topAnchor.constraint(equalTo: backdrop.bottomAnchor).isActive = true
    view.safeAreaLayoutGuide.bottomAnchor.constraint(greaterThanOrEqualTo: textField.bottomAnchor).isActive = true

9:40 - Keyboard dismiss padding

    // The padding is never mutated, so a constant is enough
    let dismissPadding = aboveKeyboardView.bounds.size.height
    view.keyboardLayoutGuide.keyboardDismissPadding = dismissPadding
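
One question the snippet leaves open is when to read the view's height, since bounds are only meaningful after layout. The sketch below is one possible arrangement, not the session's method: it assumes a hypothetical `ChatViewController` whose scroll view uses interactive keyboard dismissal, and it refreshes the padding in `viewDidLayoutSubviews`.

```swift
import UIKit

/// Hypothetical controller; `scrollView` and `aboveKeyboardView` are illustrative names.
final class ChatViewController: UIViewController {
    let scrollView = UIScrollView()
    let aboveKeyboardView = UIView()

    override func viewDidLoad() {
        super.viewDidLoad()
        // Assumption: the dismiss gesture comes from interactive scroll-to-dismiss.
        scrollView.keyboardDismissMode = .interactive

        for subview in [scrollView, aboveKeyboardView] {
            subview.translatesAutoresizingMaskIntoConstraints = false
            view.addSubview(subview)
        }
        NSLayoutConstraint.activate([
            scrollView.topAnchor.constraint(equalTo: view.topAnchor),
            scrollView.leadingAnchor.constraint(equalTo: view.leadingAnchor),
            scrollView.trailingAnchor.constraint(equalTo: view.trailingAnchor),
            scrollView.bottomAnchor.constraint(equalTo: aboveKeyboardView.topAnchor),
            aboveKeyboardView.leadingAnchor.constraint(equalTo: view.leadingAnchor),
            aboveKeyboardView.trailingAnchor.constraint(equalTo: view.trailingAnchor),
            aboveKeyboardView.heightAnchor.constraint(equalToConstant: 52),
            aboveKeyboardView.bottomAnchor.constraint(equalTo: view.keyboardLayoutGuide.topAnchor)
        ])
    }

    override func viewDidLayoutSubviews() {
        super.viewDidLayoutSubviews()
        guard #available(iOS 17.0, *) else { return }
        // Read the height after layout, and only write when it actually changed.
        let padding = aboveKeyboardView.bounds.height
        let guide = view.keyboardLayoutGuide
        if guide.keyboardDismissPadding != padding {
            guide.keyboardDismissPadding = padding
        }
    }
}
```

The availability check reflects the session's statement that the property is new in iOS 17; on earlier systems this sketch simply leaves the default gesture behavior in place.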

12:11 - Handle willShow or hideKeyboard notifications

    func handleWillShowOrHideKeyboardNotification(notification: NSNotification) {
        // Retrieve the UIScreen object from the notification (Added iOS 16.1)
        guard let screen = notification.object as? UIScreen else { return }

        // Determine if the notification's screen corresponds to your view's screen
        guard screen.isEqual(view.window?.screen) else { return }

        // Calculate intersection with keyboard
        let endFrameKey = UIResponder.keyboardFrameEndUserInfoKey

        // Get the ending screen position of the keyboard
        // (read from the notification's userInfo dictionary)
        guard let keyboardFrameEnd = notification.userInfo?[endFrameKey] as? CGRect else { return }

        let fromCoordinateSpace: UICoordinateSpace = screen.coordinateSpace
        let toCoordinateSpace: UICoordinateSpace = view

        // Convert from the screen coordinate space to your local coordinate space
        let convertedKeyboardFrameEnd = fromCoordinateSpace.convert(keyboardFrameEnd, to: toCoordinateSpace)

        // Calculate offset for view adjustment
        var bottomOffset = view.safeAreaInsets.bottom

        // Get the intersection between the keyboard's frame and the view's bounds
        let viewIntersection = view.bounds.intersection(convertedKeyboardFrameEnd)

        // Check whether the keyboard intersects your view before adjusting your offset.
        if !viewIntersection.isEmpty {
            // Set the offset to the height of the intersection
            bottomOffset = viewIntersection.size.height
        }

        // Use the new offset to adjust your UI
        movingBottomConstraint.constant = bottomOffset

        // Adjust view layouts and animate using information in notification ...
    }
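
To make the arithmetic behind that handler concrete, here is a small worked example using plain CGRect values. The helper name `keyboardOverlapHeight` and all of the frames are hypothetical, and the sketch assumes both rects are already expressed in the same coordinate space (the handler above does that conversion with UICoordinateSpace).

```swift
import Foundation

/// Height of the part of `viewFrame` covered by `keyboardFrame`.
/// Both rects must be in the same coordinate space. Hypothetical helper.
func keyboardOverlapHeight(viewFrame: CGRect, keyboardFrame: CGRect) -> CGFloat {
    let intersection = viewFrame.intersection(keyboardFrame)
    return intersection.isEmpty ? 0 : intersection.height
}

// Illustrative numbers: a 1024 x 768 screen with a 300 pt tall docked keyboard.
let keyboard = CGRect(x: 0, y: 468, width: 1024, height: 300)

// Full screen app: the overlap equals the raw keyboard height.
let fullScreen = CGRect(x: 0, y: 0, width: 1024, height: 768)
print(keyboardOverlapHeight(viewFrame: fullScreen, keyboardFrame: keyboard)) // 300.0

// A smaller window whose bottom edge is at y = 650: only 182 pt are covered.
let windowed = CGRect(x: 100, y: 50, width: 600, height: 600)
print(keyboardOverlapHeight(viewFrame: windowed, keyboardFrame: keyboard)) // 182.0

// A window that ends above the keyboard: nothing to adjust.
let upperWindow = CGRect(x: 100, y: 20, width: 600, height: 400)
print(keyboardOverlapHeight(viewFrame: upperWindow, keyboardFrame: keyboard)) // 0.0
```

In the second case, moving views up by the raw 300 pt would push them 118 pt too high, which is the failure mode the session describes for apps that are not full screen.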

14:38 - Inline predictions

    @MainActor public protocol UITextInputTraits : NSObjectProtocol {

        // Controls whether inline text prediction is enabled or disabled during typing
        @available(iOS, introduced: 17.0)
        optional var inlinePredictionType: UITextInlinePredictionType { get set }
    }

    public enum UITextInlinePredictionType : Int, @unchecked Sendable {
        case `default` = 0
        case no = 1
        case yes = 2
    }

    let textView = UITextView(frame: frame)
    textView.inlinePredictionType = .yes
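
The snippet above opts a text view in. Opting a field out might look like the following sketch, where the function name `makeCouponField` and the coupon-code scenario are hypothetical. It assumes the app also supports systems earlier than iOS 17, hence the availability check.

```swift
import UIKit

/// Builds a field where sentence-style predictions would not help,
/// for example a coupon code. Hypothetical helper for illustration.
func makeCouponField() -> UITextField {
    let field = UITextField()
    field.placeholder = "Coupon code"
    field.autocorrectionType = .no

    // inlinePredictionType was introduced in iOS 17, so guard older systems.
    if #available(iOS 17.0, *) {
        field.inlinePredictionType = .no
    }
    return field
}
```

Leaving the property at `.default` lets the system decide, which per the session already disables predictions in search and password fields.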
