Discover Continuity Camera for tvOS

Discover how you can bring AVFoundation, AVFAudio, and AudioToolbox to your apps on tvOS and create camera and microphone experiences for the living room. Find out how to support tvOS in your existing iOS camera experience with the Device Discovery API, build apps that use iPhone as a webcam or FaceTime source, and explore special considerations when developing for tvOS. We'll also show you how to enable audio recording for tvOS, and how to use echo cancellation to create great voice-driven experiences.

Hi there, I'm Kevin Tulod, a software engineer on the tvOS team. And I'm Somesh Ganesh, an engineer on the Core Audio team. In this session, we'll discuss bringing camera and microphone support to your tvOS app.

tvOS 17 introduces Continuity Camera and Mic to Apple TV. Now you can stream camera and mic data from your iPhone or iPad to use as inputs on tvOS. This opens up whole new genres of apps and experiences for the big screen. Apps on tvOS generally fall into two categories: content playback apps, like movie and TV show streaming, and games.

Continuity Camera enables you to build completely new apps for tvOS, like content creation apps that record video and audio, or social apps like video conferencing and live streaming.

You can even integrate the camera and mic into existing streaming apps and games to create completely new features that weren't possible in earlier versions of tvOS. This functionality enables a diverse set of apps for TV, and I'm going to show you how to bring Continuity Camera and Mic to your tvOS app.

Last year, macOS Ventura introduced Continuity Camera to use your iPhone as a webcam. You simply bring your phone close to your Mac, and the camera and mic become available as input devices. If you're not already familiar with Continuity Camera on macOS, check out the session on this topic from WWDC 2022.

In this session, I'll start with an overview of the APIs your app can now adopt on tvOS to access cameras and microphones. Next, I'll dive into how your app can adopt Continuity Camera in tvOS to use iPhone or iPad as a camera and mic.

I'll then briefly discuss how to build a great app experience on tvOS, highlighting the similarities and differences when compared to developing for other platforms. Finally, Somesh will discuss the microphone APIs you can use in your app for a wide range of complex audio needs.

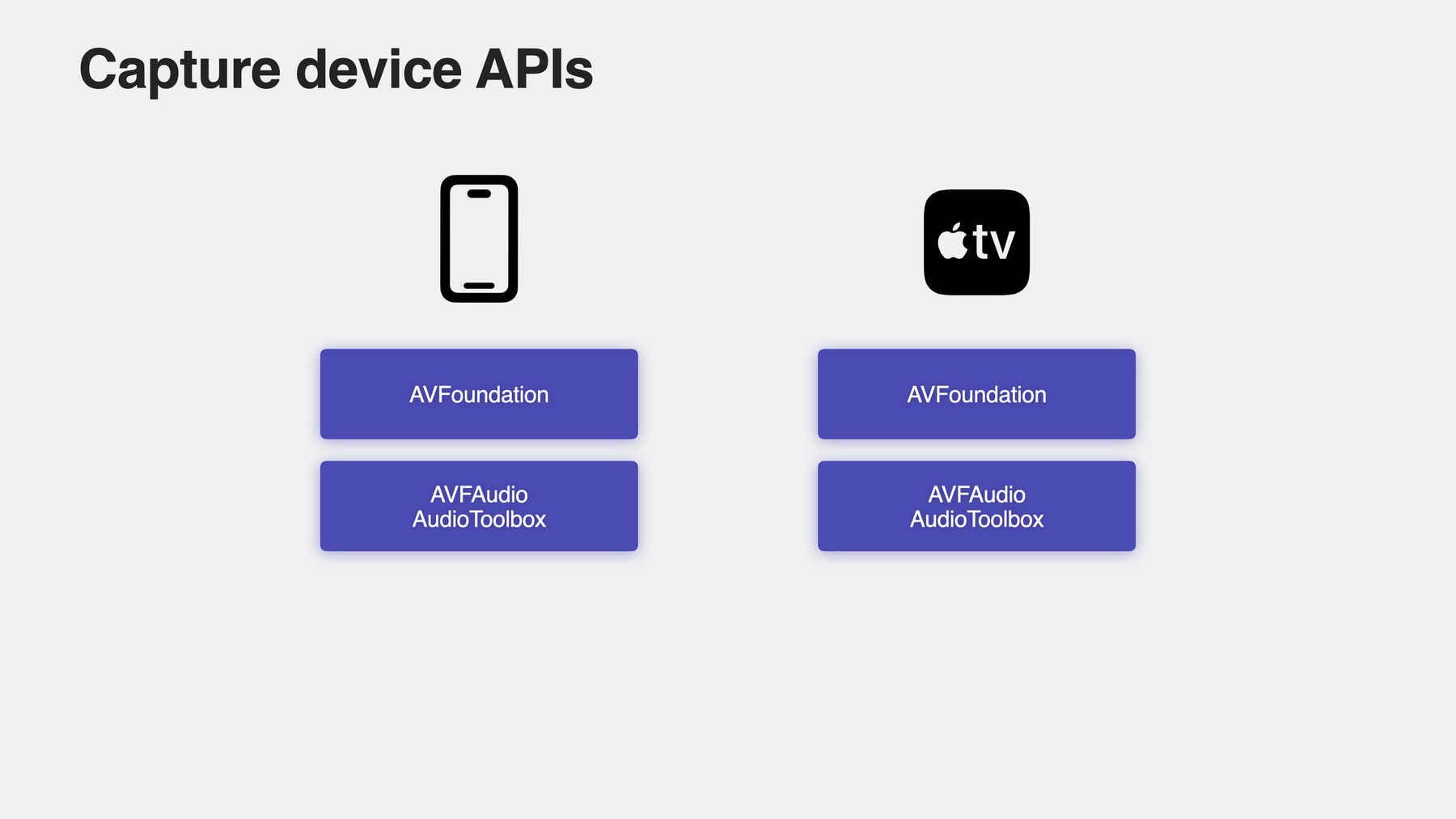

Let's start with an overview of capture device APIs.

AVFoundation, AVFAudio, and AudioToolbox give you access to the camera and mic for capturing video and audio. Let's review how an app can use capture devices, specifically with AVFoundation's AVCapture family of classes. First, apps use AVCaptureDevices and AVCaptureDeviceInputs, which represent cameras and microphones. These plug into an AVCaptureSession, which is the main object for all things related to capture. AVCaptureOutputs render data from inputs in various ways. You can use these to record movies and snap photos, access the camera and mic buffers, or get other metadata from the input devices. To display a live camera feed in UI, you can use AVCaptureVideoPreviewLayer. This is a special type of output that subclasses CALayer. And data flows from the capture inputs to compatible outputs through AVCaptureConnections. These capture APIs are available on iOS, macOS, and now tvOS, starting with tvOS 17.

If you're new to developing using AVFoundation's capture capabilities, you can learn more at the Capture Setup start page on developer.apple.com. tvOS now also supports the same camera and microphone APIs that are offered on iOS. If you already have a camera or mic app built for iOS, most of your code will just work on tvOS, but there are a few APIs and coding practices that differ.

Since Apple TVs do not have built-in AV inputs, your app will need to adopt Device Discovery before you can use the camera and mic. This is to ensure that your app can handle edge cases like when Continuity devices appear and disappear.

There are also a few nuances to app bringup on tvOS that differ from iOS, and we'll go over those considerations for the best tvOS app experience.

Since the same camera and microphone APIs from iOS are now available on tvOS, I'm going to walk you through bringing tvOS support to an existing iOS app. I have a simple camera and mic app that's already built for iOS. The app has a basic UI that accesses the camera and mic to capture photos or record video and audio. Throughout this session, I'll be bringing tvOS support to this app using the new Continuity Camera and Mic APIs. Let's take a look at how it's built.

In Xcode, I'll open up the ContentView, which is where the app's UI is defined. Here the app specifies the position of basic UI elements we see on the screen. Most important is the CameraPreview view, which connects to an AVCaptureVideoPreviewLayer to display the live camera preview behind all of the other UI elements.

Next is the CaptureSession class, which wraps several of the AVCaptureSession classes from AVFoundation. This class has a property for an AVCaptureSession, which is used to control which capture input is selected and where the data is output. In this case, the output is the AVCaptureVideoPreviewLayer that's displayed in the ContentView.

The CaptureSession class also has a function to set the active video input. In this function, the app validates the selected input, configures the AVCaptureSession, and starts the session. This begins the flow of data from the AVCaptureDevice to the preview layer that's displayed in the ContentView.
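The wrapper described above could look roughly like the following sketch. The session does not show the class's source, so everything except the `setActiveVideoInput` idea is an assumption made for illustration: the queue, the property names, and the choice to replace any existing input.

```swift
import AVFoundation

/// Hypothetical wrapper, loosely modeled on the CaptureSession class described above.
final class CaptureSession {
    let session = AVCaptureSession()
    /// Preview layer that a CameraPreview view could host.
    private(set) lazy var previewLayer = AVCaptureVideoPreviewLayer(session: session)
    /// startRunning() blocks, so session work stays off the main thread.
    private let sessionQueue = DispatchQueue(label: "example.capture-session")

    func setActiveVideoInput(_ device: AVCaptureDevice) {
        sessionQueue.async { [session] in
            // Validate the selected device by wrapping it in an input.
            guard let input = try? AVCaptureDeviceInput(device: device) else { return }

            session.beginConfiguration()
            // Assumption: this sketch replaces every existing input.
            session.inputs.forEach { session.removeInput($0) }
            let added = session.canAddInput(input)
            if added { session.addInput(input) }
            session.commitConfiguration()

            // Start the flow of data from the device to the preview layer.
            if added && !session.isRunning { session.startRunning() }
        }
    }
}
```

Keeping the configuration and `startRunning()` on a private serial queue is one common way to avoid blocking the UI while the session starts.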

All of these AVCapture APIs are now also available on tvOS, so I'll go ahead and add tvOS as a supported OS for the app.

I'm now selecting the project in the Project Navigator, highlighting the app's target, and adding Apple TV as a supported destination.

At this point, the app can build and run on tvOS. However, since there are no input devices available, the app wouldn't do very much. This brings us to Continuity Camera, starting with the process of finding a capture device and selecting it for use on tvOS.

Before doing anything related to AVCapture, your app should ensure that it has permission to use the video and audio capture devices. This gives your app the opportunity to explain why it needs access to these devices, and users can accept or deny access. Remember to set the camera and microphone usage keys in your Info.plist. These descriptions are displayed to the user when prompted for authorization.
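As a minimal sketch of the permission check described above, the helper below wraps the existing `AVCaptureDevice` authorization calls. The function name `ensureCaptureAccess` is hypothetical. The usage keys it depends on are `NSCameraUsageDescription` and `NSMicrophoneUsageDescription` in Info.plist.

```swift
import AVFoundation

/// Returns true when access to the given media type has been granted,
/// prompting the user once if the status is still undetermined.
func ensureCaptureAccess(for mediaType: AVMediaType) async -> Bool {
    switch AVCaptureDevice.authorizationStatus(for: mediaType) {
    case .authorized:
        return true
    case .notDetermined:
        // Shows the system prompt with the usage description from Info.plist.
        return await AVCaptureDevice.requestAccess(for: mediaType)
    default:
        // Denied or restricted: the app cannot prompt again.
        return false
    }
}
```

An app might call this once with `.video` and once with `.audio` before configuring any capture session.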

You may be used to building apps for a user's personal device, like an iPhone or iPad, that has built-in cameras. Apple TV, however, is a communal device shared by multiple people, each with their own iCloud account. To enable the best experience on a communal device, anybody with a compatible device, including guests, can use their device as a Continuity Camera.

This means users can use the recording features of your app at home, at a friend's house, or even a shared space like a vacation rental. It also means that cameras may appear or disappear from the system at any time, and your app should be able to handle these cases.

AVKit now provides a new view controller called AVContinuityDevicePickerViewController. You can use this controller to select an eligible continuity device to use as a camera and mic. This view controller lists all users that are signed in to the Apple TV and lets them connect their device for Continuity Camera. There is also a way for guests to pair to Apple TV to use their iOS devices for Continuity Camera.

When accessing capture devices, your app should first check if there are any available for use. If one is available, you can start using the capture devices to start a capture session and send data to any of the AVCaptureOutputs. If there are none available, use the device picker to present the relevant UI to the user. This guides them through the process of sharing their device's camera and mic.

Once a device is selected, the view controller will call a delegate callback to let you know a device has appeared. You can then validate the availability of that device and continue with starting the capture session.

Behind the scenes, tvOS and iOS work together to establish this connection. When a selection is made, tvOS will ping that user's eligible devices within close proximity to the Apple TV and prompt for a confirmation. The user can then accept the connection on any of the notified devices.

At that point, the camera and microphone become available to use in your app, and camera and mic data can start streaming.

To present the device picker in your SwiftUI app, AVKit provides the continuityDevicePicker modifier, which is new to tvOS 17, to present the picker. Its presentation state is updated by a state variable, just like other content-presenting view modifiers. When a device is selected and becomes available, the picker dismisses and calls the callback with an AVContinuityDevice. This object has references to the AVCaptureDevices that are associated with a given physical device, like an iPhone or iPad.
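The SwiftUI flow above can be sketched as follows. The view name and the `onCameraConnected` closure are hypothetical, and the sketch assumes the callback may deliver no device, so it treats the value as optional before reading `videoDevices`.

```swift
#if os(tvOS)
import AVKit
import SwiftUI

/// Hypothetical view: a button that presents the Continuity device picker.
struct ConnectCameraButton: View {
    @State private var showDevicePicker = false
    let onCameraConnected: (AVCaptureDevice) -> Void

    var body: some View {
        Button("Connect Camera") {
            showDevicePicker = true
        }
        .continuityDevicePicker(isPresented: $showDevicePicker) { device in
            // Treated as optional so the sketch handles a missing device.
            let connected: AVContinuityDevice? = device
            if let camera = connected?.videoDevices.first {
                onCameraConnected(camera)
            }
        }
    }
}
#endif
```

The `#if os(tvOS)` guard keeps the picker code out of the iOS build of a shared target.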

The device picker can also be presented in UIKit apps using AVContinuityDevicePickerViewController. This view controller takes a delegate that provides optional lifecycle events and a callback for when an AVContinuityDevice is selected and becomes available. When such a device becomes available, capture devices will also get published to other device listeners, such as AVCaptureDeviceDiscoverySession or any KVO observers on AVCaptureDevice.

Remember that on tvOS, the only capture device is a Continuity Camera. This means that your app will have to handle cases where a camera and mic transition from unavailable to available, and vice versa.

Across all platforms, AVCaptureDevice.systemPreferredCamera allows you to access the most suitable available camera. This API is now available on tvOS and functions in exactly the same way.
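For the UIKit path, a minimal sketch might present the picker and implement the connection callback as shown below. The view controller name and the `onCameraConnected` hook are hypothetical, and the optional lifecycle callbacks are omitted.

```swift
#if os(tvOS)
import AVKit
import UIKit

final class CameraViewController: UIViewController,
                                  AVContinuityDevicePickerViewControllerDelegate {
    /// Hypothetical hook for handing the camera to the rest of the app.
    var onCameraConnected: ((AVCaptureDevice) -> Void)?

    func presentContinuityDevicePicker() {
        let picker = AVContinuityDevicePickerViewController()
        picker.delegate = self
        present(picker, animated: true)
    }

    // Called once a Continuity device has been selected and is available.
    func continuityDevicePicker(_ pickerViewController: AVContinuityDevicePickerViewController,
                                didConnect device: AVContinuityDevice) {
        if let camera = device.videoDevices.first {
            onCameraConnected?(camera)
        }
    }
}
#endif
```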

This property will update based on camera availability. Since only one Continuity Camera can be connected to Apple TV at a time, a nil value means there are no cameras available, and a non-nil value means there is a Continuity Camera available. You can use key-value observing to monitor changes to the systemPreferredCamera. And on tvOS, connected capture devices will be of type continuityCamera.
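One way to observe this property is classic key-value observing on the `AVCaptureDevice` class itself, as in the sketch below. The `PreferredCameraObserver` type and its `onChange` closure are hypothetical names. The closure receives `nil` when no camera is available.

```swift
import AVFoundation

/// Hypothetical helper that reports changes to the system-preferred camera.
final class PreferredCameraObserver: NSObject {
    private let preferredCameraKeyPath = "systemPreferredCamera"
    private let onChange: (AVCaptureDevice?) -> Void

    init(onChange: @escaping (AVCaptureDevice?) -> Void) {
        self.onChange = onChange
        super.init()
        // systemPreferredCamera is a class property, so observe the class object.
        AVCaptureDevice.self.addObserver(self, forKeyPath: preferredCameraKeyPath,
                                         options: [.new], context: nil)
    }

    deinit {
        AVCaptureDevice.self.removeObserver(self, forKeyPath: preferredCameraKeyPath)
    }

    override func observeValue(forKeyPath keyPath: String?, of object: Any?,
                               change: [NSKeyValueChangeKey: Any]?,
                               context: UnsafeMutableRawPointer?) {
        guard keyPath == preferredCameraKeyPath else { return }
        // A nil result means the camera went away and the app should tear down.
        onChange(change?[.newKey] as? AVCaptureDevice)
    }
}
```

Because this sketch only reports changes, an app would also read `AVCaptureDevice.systemPreferredCamera` once at launch to pick up a camera that is already connected.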

When key-value observing the systemPreferredCamera property, your app can reevaluate the state it should be in based on camera availability. When cameras become available, you can start an AVCaptureSession to start recording video or audio. When cameras become unavailable, you should perform any necessary teardown from a previous capture session, indicate to the user that the device is no longer available, and give them the option to connect a new one.

Once a Continuity Camera is connected to Apple TV, your app can access many of the existing camera capture APIs. For example, you can use AVCaptureMetadataOutput to get per-frame video metadata, such as any detected faces or bodies. You can capture high-resolution photos using AVCapturePhotoOutput, record movies with video and audio with AVCaptureMovieFileOutput, and monitor video effects or control the camera properties like zoomFactor. There are so many more camera APIs that are now available on tvOS with Continuity Camera. You can refer to previous videos that dive deep into advanced camera capture features and techniques.

Those are the tvOS-specific APIs your app would need to adopt to discover and select Continuity Capture devices. Let's bring this functionality to the app we're working on.

Back in Xcode, in the ContentView, we know we need a state variable on tvOS to control the presentation state of the device picker. I'll go ahead and add the state variable, and make sure it's only used on tvOS using compiler guards.

At the bottom of the ContentView, I'll create a tvOS-only extension to handle Continuity Camera-specific logic.

I'll then add a computed variable to create a button that shows the device picker by toggling the state variable we added.

Next, I'll add a callback handler that will be called when a Continuity Camera is connected. Once connected, this sets the camera as the active video input, which, in turn, starts the capture session.

Finally, I'll add a method that activates a Continuity Camera in case one is already connected.

Now let's start adding to the UI.

In the view body, I'll add the device picker button into my view.

I'll then add the continuityDevicePicker view modifier and connect it to the state variable and the callback function added earlier.

Finally, I'll add a task that attempts to activate a connected Continuity device if one is already connected.

And those are all of the code changes needed to bring tvOS support to an existing iOS camera app. Now let's run it on the Apple TV to see what it looks like.

The app launches to the basic UI, but without a camera feed, since I haven't yet connected a camera. I'll go ahead and select the button I added to bring up the device picker.

This gives me options for connecting a camera. I'll go ahead and select Justin's user and follow the instructions to pair.

And Continuity Camera is connected! Here's what the tvOS version of this app looks like using the same shared code as iOS, with only a few minor changes to add device discovery on tvOS. There were no changes to the existing usage of the camera APIs. I can even go ahead and snap a photo, just like in the iOS app.

And that's Continuity Camera on tvOS.

If you're adapting an existing app to tvOS or just getting started with tvOS development, I want to quickly recap some nuances on the platform.

The most prominent difference on tvOS is user interaction. tvOS has no direct touch events. Users interact with the system using the focus engine by way of swipes, directional arrow presses, and other buttons on the remote.

As a communal device, tvOS can be used by multiple people and supports multiple users and guests. This means your app may need to handle personal information differently than other platforms. Finally, tvOS has unique file storage policies. This is especially important if you are writing a content creation app that records video or audio. Let's go into more detail.

Remember that disk space is a shared resource. The main consumers of disk space are the operating system, which cannot be removed; frameworks and app binaries, some of which can be offloaded if the setting is on; and a cache for temporary data, which takes up the majority of the space. tvOS apps are mostly built for content consumption, like streaming, which requires a very large available cache. Keeping with this disk space model helps ensure the best user experience across all tvOS apps.

On iOS, you can store data persistently using FileManager and writing to the .documentDirectory path. Use of this API is not recommended on tvOS.

The OS does not allow persistent storage of large files. While available in the headers, uses of .documentDirectory will fail with a runtime error. Instead, when building for tvOS, your app should only use the .cachesDirectory.
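A minimal sketch of writing recordings under the caches directory is shown below. The helper names are hypothetical. Because cached data can be purged between launches, the second helper is meant to be called once a file has been uploaded or is no longer needed.

```swift
import Foundation

/// Returns a file URL for a new recording inside the app's caches directory.
func makeRecordingURL(named name: String,
                      fileManager: FileManager = .default) throws -> URL {
    let caches = try fileManager.url(for: .cachesDirectory,
                                     in: .userDomainMask,
                                     appropriateFor: nil,
                                     create: true)
    return caches.appendingPathComponent(name)
}

/// Deletes a recording that is no longer needed on disk.
func removeRecording(at url: URL, fileManager: FileManager = .default) {
    try? fileManager.removeItem(at: url)
}
```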

Data in this directory will be available while your app is running. However, it is possible that this data will be purged from disk in between app launches. For that reason, it's a good idea to offload your data elsewhere as soon as possible, like uploading it to the cloud, and deleting it when it's no longer needed on disk.

There are other file storage options available on tvOS, which have some interesting multi-user use cases. For example, you can store your app data in iCloud using CloudKit, even on a per-user basis. We've covered storage options for multiple users on tvOS in the past and encourage you to refer to those videos for more information.

If you're new to tvOS development, check out the Planning your tvOS app page on developer.apple.com, which goes through the different features and considerations to keep in mind when developing for Apple TV. And that's how you can build a great tvOS app experience with the new Continuity Camera and Device Discovery APIs in tvOS 17.

Now let's talk about the various microphone capabilities you can access in tvOS 17. Apps will have the ability to use a microphone on tvOS for the first time ever! Let's dive right into what you'd need to do in your app to take advantage of this functionality. Here's an overview of the changes made this year. There are additions to Audio Session in the AVFAudio framework on tvOS.

Like on iOS, Audio Session is the system level interface where you communicate how you intend to use audio in your app. For example, you sign up for and handle notifications like interruptions or route changes and set the category and mode of your app.

The full suite of recording APIs has also been brought over from iOS to tvOS.

These include recording APIs in the AVFAudio as well as the AudioToolbox framework. Let's start with Audio Session.

With tvOS 17, apps will be able to use a few different microphone devices on the Apple TV. These can be either a Continuity Microphone, such as an iPhone or iPad, or Bluetooth devices, such as AirPods or other headsets, which can be paired directly to the tvOS device.

The way you can recognize the type of input device is via the AVAudioSessionPort type.

After going through the Device Discovery flow, you can get access to an AVContinuityDevice which has an audioSessionPorts property.

Information about the audio device, including its port type, can be queried from this property.

There's now a new port type for the Continuity Microphone, and it is recommended to use this port as an identifier if you want to do anything specific in your app based on the type of input device.

This flow, however, will only work for iPhones and iPads which fall under an AVContinuityDevice.

You can also continue using existing Audio Session API to query the available inputs on a system.
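As a sketch of the existing-API route, the helpers below list the session's available inputs and classify them by port type. The `MicrophoneKind` enum and function names are hypothetical, and treating `.bluetoothHFP` as the port a Bluetooth headset mic reports is an assumption.

```swift
import AVFAudio

/// Hypothetical classification of the input devices discussed above.
enum MicrophoneKind: Equatable {
    case continuity, bluetooth, other
}

func microphoneKind(for portType: AVAudioSession.Port) -> MicrophoneKind {
    switch portType {
    case .continuityMicrophone: return .continuity   // iPhone or iPad mic
    case .bluetoothHFP: return .bluetooth            // assumed headset mic port
    default: return .other
    }
}

/// Lists the inputs the audio session currently reports, with their kind.
func availableMicrophones(in session: AVAudioSession = .sharedInstance())
    -> [(name: String, kind: MicrophoneKind)] {
    (session.availableInputs ?? []).map {
        (name: $0.portName, kind: microphoneKind(for: $0.portType))
    }
}
```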

The existing port type for an AirPods or Bluetooth mic has been carried over from iOS.

Building on top of capture device availability, now let's talk about microphone device availability and how you need to monitor it on tvOS. The Apple TV does not have a built-in mic, and your app is never guaranteed to always have access to a mic device. To this end, the inputAvailable property in Audio Session now has key-value observing support for you to monitor when there is a mic device available to use and when there are none.

It is highly recommended to listen to this property for any dynamic changes to mic availability. This could also help determine your timing to activate your Audio Session and start I/O, as well as handling your app's state when a mic device shows up or vanishes from the system.

Next, similar to iOS, the recording permission APIs are now available on tvOS 17 for you to check whether a user has already granted your app access to the mic and, if not, request recording permission.

It is recommended that your app confirm it has permission to record, to avoid failures while starting I/O.
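Monitoring mic availability could be sketched with Swift's block-based key-value observing, as below. The `MicAvailabilityMonitor` type is hypothetical. The sketch assumes the callback may arrive on any thread, so UI work should be dispatched to the main queue by the caller.

```swift
import AVFAudio

/// Hypothetical helper that reports when a mic device appears or vanishes.
final class MicAvailabilityMonitor {
    private var observation: NSKeyValueObservation?

    init(session: AVAudioSession = .sharedInstance(),
         onChange: @escaping (Bool) -> Void) {
        // .initial delivers the current value right away, .new on later changes.
        observation = session.observe(\.isInputAvailable,
                                      options: [.initial, .new]) { session, _ in
            onChange(session.isInputAvailable)
        }
    }

    deinit {
        observation?.invalidate()
    }
}
```

An app might start its audio session and I/O when the closure reports `true`, and stop I/O and update its UI when it reports `false`.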

Last but not least in Audio Session, categories and modes that support input, like the playAndRecord category and the voice chat and video chat modes, are now available on tvOS as well. You can refer to our header, AVAudioSessionTypes, to help you determine which audio session category and mode suits your app best.

And those are all the Audio Session changes new to tvOS 17. Now, let's talk about the diverse set of recording APIs and their recommended use cases.

First up, AVAudioRecorder.

This is the simplest way to record to an audio file, and if all you need to do is record whatever comes into the microphone for a non-real-time use case, AVAudioRecorder is your go-to option. It can be configured with a variety of encoding formats, a specific file format, sample rate, et cetera.
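A minimal AVAudioRecorder sketch might look like the following. The helper names, the AAC format, and the default sample rate and channel count are illustrative assumptions, not values from the session.

```swift
import AVFoundation

enum RecordingError: Error {
    case couldNotStart
}

/// Example settings: AAC audio with a configurable sample rate and channel count.
func recorderSettings(sampleRate: Double = 44_100, channels: Int = 1) -> [String: Any] {
    [
        AVFormatIDKey: Int(kAudioFormatMPEG4AAC),
        AVSampleRateKey: sampleRate,
        AVNumberOfChannelsKey: channels,
        AVEncoderAudioQualityKey: AVAudioQuality.high.rawValue
    ]
}

/// Starts recording microphone input to the given file URL.
func startRecording(to url: URL) throws -> AVAudioRecorder {
    let recorder = try AVAudioRecorder(url: url, settings: recorderSettings())
    guard recorder.record() else { throw RecordingError.couldNotStart }
    return recorder
}
```

The caller keeps the returned recorder alive for the duration of the recording and calls `stop()` when finished.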

Next, we have AVCapture. As mentioned by Kevin, you can leverage the existing iOS AVCapture API to get access to the mic for basic recording use cases if both the camera and mic are at play.

Moving on to AVAudioEngine.

AVAudioEngine supports both recording and playback for simple as well as complex audio processing use cases. An example of this could be a karaoke app where you can analyze your user's voice input from their mic and mix this mic input with your playback track.

Now, there are some cases where an app might want to directly interact with the real-time audio I/O cycle. AVAudioEngine provides a real-time interface as well.

Apps can provide real-time-safe render callbacks via the AVAudioSinkNode and AVAudioSourceNode.

You can also get access to voice processing capabilities with AVAudioEngine.

For apps dealing with low-level interfaces, the corresponding iOS mechanisms have also been brought over to tvOS.

For non-real-time recording use cases, you can reach for AudioQueue. To interact directly with the real-time audio I/O cycle, you can use the audio units AURemoteIO and AUVoiceIO via existing AudioUnit APIs.

If you'd like more detailed information about these APIs, I'd recommend checking out the Developer website for the AVFAudio and AudioToolbox frameworks.

Now, if you require a mic stream where the playback from the Apple TV needs to be echo cancelled, in a conferencing use case, for example, it is highly recommended to opt in to our voice processing APIs. Let's talk about why.

Compared to a standard echo cancellation problem, let's say on an iPhone where the recording and playback happen on the same device, here's everything that's new in the tvOS setup. With a Continuity Microphone enabled route, the recording takes place on an iPhone or iPad. The playback, however, is streamed on a tvOS device and could be played out of any arbitrary set of TV speakers, home theater setups, sound bars, or even a pair of stereo HomePods, most of which have the ability to play back rich formats like 5.1 and 7.1 LPCM and run their own flavors of audio processing to enhance the user's experience.

Along with this, in a general Apple TV setting, a user could be several feet away from the mic device, and this mic device could be much closer to these loud playback devices. All these scenarios set up a very challenging echo control problem where we'd want to cancel out all the playback from the Apple TV while capturing audio from the local environment in high quality.

To help you overcome all these challenges, tvOS 17 now has new voice processing and echo cancellation technology in place. You can take advantage of this by simply adopting existing iOS APIs, now available on tvOS as well, in AVAudioEngine, where you'd only need to call setVoiceProcessingEnabled on the inputNode. You can also get access to this via the AUVoiceIO audio unit using the VoiceProcessingIO subtype.

For more details on our voice processing APIs and the capabilities they offer, please refer to our session "What's new in voice processing". And that's all the audio APIs that are new to tvOS 17 this year. Now, let's go back to our app and look at how to leverage some of this microphone functionality in it.

Back in Xcode, I'll open up my AudioCapturer class.

This class abstracts all the nuances of the underlying audio APIs from the rest of the app code.

It has its own instance of AVAudioEngine, which is the recording API I'm going to use in this instance.

It also holds a reference to the shared Audio Session instance.

And this is a quick insight into what this class does. At a high level, it sets up the Audio Session category and mode before activation, sets up the underlying AVAudioEngine with the correct formats, and sets voice processing on the input node, with a user-controllable toggle to bypass it.
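A class along these lines might be sketched as follows. The session does not show the real AudioCapturer source, so the method names, the `.videoChat` mode, the tap-based capture, and the buffer size are assumptions for illustration only.

```swift
import AVFoundation

/// Hypothetical stand-in for the AudioCapturer class described above.
final class AudioCapturer {
    private let engine = AVAudioEngine()
    private let session = AVAudioSession.sharedInstance()

    /// Configures the session, enables voice processing, and starts capturing.
    /// Call stop() before calling start() again, since the tap is installed here.
    func start(onBuffer: @escaping (AVAudioPCMBuffer) -> Void) throws {
        try session.setCategory(.playAndRecord, mode: .videoChat)
        try session.setActive(true)

        let input = engine.inputNode
        // Enables echo cancellation of the Apple TV's playback.
        try input.setVoiceProcessingEnabled(true)

        // Non-real-time capture: buffers are delivered through a tap.
        let format = input.outputFormat(forBus: 0)
        input.installTap(onBus: 0, bufferSize: 1024, format: format) { buffer, _ in
            onBuffer(buffer)
        }
        engine.prepare()
        try engine.start()
    }

    /// User-controllable toggle to bypass voice processing.
    var isVoiceProcessingBypassed: Bool {
        get { engine.inputNode.isVoiceProcessingBypassed }
        set { engine.inputNode.isVoiceProcessingBypassed = newValue }
    }

    func stop() {
        engine.inputNode.removeTap(onBus: 0)
        engine.stop()
        try? session.setActive(false, options: .notifyOthersOnDeactivation)
    }
}
```

On tvOS, `start` would only be called once a mic device is known to be available, since the input node has nothing to capture from otherwise.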

I'm running the engine in a non-real-time context in this example. Now, let's look at what I need to do to modify this for tvOS. This iOS app builds as is for tvOS assuming a mic device is always available. What I'd need to do is add an observer to listen to the inputAvailable KVO notification to make sure I have an input mic device available before starting my Audio Session and subsequently starting I/O. I'll go ahead and add that here.

I also need to make sure that I handle the app's state when the mic device shows up or vanishes from the system, which could be a user simply disconnecting their phone from the Apple TV while in session.

This should now work well on my Apple TV. Let's see it in action! Here, I'm in the audio mode of my app now. I can press play to play back a song and then go ahead and record myself talking.

Wow, this song is so groovy! I always feel like dancing when I listen to it.

That was fun! Now, in the voice processing mode which we've been in, this song played out of the Apple TV will get canceled, leaving us with audio only from the local environment being sent to the app.

Wow, this song is so groovy. I always feel like dancing when I listen to it.

And that's echo cancellation on tvOS.

And that's a wrap! Let's recap what we've gone through. We introduced the feature on a high level and talked about the new genre of apps it unlocks on tvOS. We then discussed the new Device Discovery API for presenting a device picker and selecting a continuity device. We reviewed the camera and microphone APIs available now to use on tvOS. And finally, we adapted an existing camera and microphone app to build for tvOS, sharing as much of the code as possible and only adding Device Discovery. We also recapped some tvOS-specific considerations.

We're so excited for you to bring camera and microphone support to your tvOS app. We can't wait to see the apps you'll develop on this platform with this functionality! Thank you.