
Support Cinematic mode videos in your app

Discover how the Cinematic Camera API helps your app work with Cinematic mode videos captured in the Camera app. We'll share the fundamentals — including Decision layers — that make up Cinematic mode video, show you how to access and update Decisions in your app, and help you save and load those changes.


♪ ♪ Rasmus: Hi, I'm Rasmus. I'm an engineer on the Camera Algorithms team working with Computational Video. I'm already excited about Cinematic mode. And now I'm even more excited about showing how you can integrate the magic of Cinematic mode in your very own app using the new Cinematic API. In this presentation, I'll walk through the necessary steps using the new API to build a sample app which does playback and edit, realizing the awesome capabilities of Cinematic mode. But before I start talking about integration, let me first show you what Cinematic mode is, how you can capture it, and what you can do with it post-capture. We introduced Cinematic mode in iPhone 13, and it really is a tiny film crew right in your pocket. It brings you a camera with beautiful shallow depth of field and natural focus falloff, a director, who directs the attention and narrative by changing the focus, and a focus puller, who anticipates keyframes ahead of time and creates smooth transitions between focus points. So how do you capture Cinematic mode? It's captured right in the Camera app on any device in the iPhone 13 & 14 lineup, and it gives you a rendering preview as you record. This makes it super accessible, whether you're an aspiring indie filmmaker or just like to add a magic touch to your camping trip videos. And while I did dream of becoming a filmmaker, I do love to capture Cinematic mode of my own family. But the magic doesn't stop here. There's more to Cinematic mode than meets the eye. It allows you to make some pretty amazing nondestructive edits post-capture. You can change the aperture, and thereby the amount of bokeh. And you can redirect the focus and narrative using alternative detections. This shows the post-capture editing in Photos, but editing can also be done in apps like iMovie, Final Cut Pro, and Motion. And now with the introduction of the Cinematic API, you can use Cinematic mode videos for playback and edit in your own amazing app. 
The Cinematic API is widely available on new macOS Sonoma, iOS 17, iPadOS 17, and tvOS 17. So this is really exciting. First I'll start with some fundamentals about the special Cinematic mode assets and dataflow. Then I will go through the specific steps to get and play a Cinematic mode asset with simple playback adjustments, like changing the aperture. And then how to do nondestructive edits driving the focus in Cinematic mode and how to save and load these edit changes. I will go through some of the new Cinematic API calls and also provide a sample code app which can be used as a detailed reference. But as promised, let me start by explaining some fundamentals about Cinematic mode. Cinematic mode actually consists of two files and a dataflow from one to the other. First there’s the rendered asset. This is a baked file with the Cinematic mode effect applied, and it can be exported, shared, and played as a regular QuickTime movie. And then there’s the special Cinematic mode asset, which has all the information needed to create the rendered asset. It allows you to do nondestructive post-capture edits, such as change the aperture and refocus to your narrative. Let’s start by taking a look at the rendered asset, and break down a shot with a little Cinematography 101.

Opening shot: we enter what’s clearly an important game of street handball, and by focusing on the main subject, we really feel the tension as he gets ready to make a big play. He checks his team player. He’s also ready. Racking focus to him emphasizes just how ready he is. We rack focus back to our main subject. This is it; you could cut through the tension with a knife.

He makes the play and totally ruins it, setting up some comic relief. Now let’s change the narrative to be about the team player by focusing on him early in the shot, and instead of going back and forth, we keep him in focus. I’ll be the voice of his thoughts. “I love you, buddy, but you always take forever.” “Yes, I’m ready, but I have low expectations.” “What a waste of time. Bravo.”

So this was shot on iPhone 13, showcasing the cinematography tools for storytelling provided by Cinematic mode. And to produce this rendered asset, there’s the special Cinematic mode asset. To support nondestructive post-capture edits, a Cinematic mode asset actually has multiple tracks. First is the video track, which is the original QuickTime movie as captured. It can be HDR/SDR, 1080p at 30 frames per second, and on iPhone 14, even 4K at 24, 25, and 30 frames per second. This track can be played as a regular video, but it lacks the aesthetics and storytelling compared to the rendered asset. There’s no suspense and no comic relief, and we're just watching some people play a game in the back alley.

The second track contains disparity, which is the pixel shift seen from two cameras looking at the same scene. Close objects are more shifted than objects further away. You can test this yourself by closing one eye and then the other, and seeing how objects shift at different distances. Disparity is used for focus and rendering the shallow depth of field. The disparity map is at a lower resolution than the video track. The track, shown as a colorization, contains relative and not absolute disparity, which means it can only be used relative to other samples in the same map, such as rendering relative to a focus disparity or transitioning between two focus disparities. For insights about relative disparity and great depth puns, I highly recommend WWDC17, "Capturing Depth in iPhone Photography."

The third track contains important metadata for rendering and editing. The track consists of two things. First, the rendering attributes, which hold focus disparity and aperture as an f-number. These drive the rendering. The focus, shown as an overlay, is decided by the Cinematic engine, while the aperture is chosen by the user. And then there’s the Cinematic script, which holds all the automatic scene detections.
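
As a rough illustration of how a focus disparity and an f-number can drive depth-of-field rendering from a relative disparity map, here is a toy model in Swift. It rests on thin-lens reasoning: blur grows with the aperture diameter, which is inversely proportional to the f-number, and with the difference in inverse distance, which is what a disparity difference measures. The `RenderingAttributes` struct, the `blurRadius` function, and the `scale` constant are hypothetical names for this sketch; they are not part of the Cinematic API and do not describe the actual renderer.

```swift
import Foundation

/// Hypothetical stand-in for the per-frame rendering attributes.
/// Not a Cinematic API type.
struct RenderingAttributes {
    var focusDisparity: Double  // relative disparity of the plane in focus
    var fNumber: Double         // aperture as an f-number, for example 2.8
}

/// Toy blur radius for one sample of the disparity map.
/// Blur is proportional to the distance from the focus disparity and
/// inversely proportional to the f-number. `scale` is an arbitrary
/// illustrative constant, not a value taken from any real renderer.
func blurRadius(pixelDisparity: Double,
                attributes: RenderingAttributes,
                scale: Double = 100.0) -> Double {
    let offsetFromFocus = abs(pixelDisparity - attributes.focusDisparity)
    return scale * offsetFromFocus / attributes.fNumber
}

let wideOpen = RenderingAttributes(focusDisparity: 0.6, fNumber: 2.0)
let stoppedDown = RenderingAttributes(focusDisparity: 0.6, fNumber: 16.0)

print(blurRadius(pixelDisparity: 0.6, attributes: wideOpen))     // subject at the focus disparity
print(blurRadius(pixelDisparity: 0.2, attributes: wideOpen))     // background, wide open
print(blurRadius(pixelDisparity: 0.2, attributes: stoppedDown))  // background, stopped down
```

Only the difference from the focus disparity enters the formula, so adding the same offset to every sample leaves the result unchanged. That is one way to see why relative disparity is sufficient for rendering.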

This scene shows face, head and torso, which are grouped by an ID linking them together over time when possible. The script also holds the focus decisions, deciding which detection to keep in focus. The focus decisions can be changed post-capture to follow other detections and thereby changing the narrative and rendering. This is the dataflow from the Cinematic mode asset to the rendered asset. As just covered, the Cinematic mode asset holds all the information needed for rendering and post-capture focus and aperture changes. Following is the optional editing, where changes are nondestructive and can always be reverted back to the original. If no editing is done, the Cinematic engine controls the focus disparity automatically, and the aperture set by the user at capture time remains unchanged. With a focus disparity and aperture, the rendering applies the shallow depth of field with accurate focus falloff using the disparity map. And finally, we get the rendered asset with the effect applied, which is a regular, shareable QuickTime movie. With a better understanding of Cinematic mode, it’s time to start building the playback app. But first we need to get hold of a Cinematic mode asset, so let’s look at the code to get it. It’s quite easy to pick a Cinematic mode asset from Photos library.

Just use a Photos picker and filter for Cinematic videos. The picker will get a local identifier for the selected file. As a non-code related side note, if you don’t already have a Cinematic mode asset, it can be AirDropped between different user devices with the All Photos Data option to include both the rendered asset and the Cinematic mode asset. Let’s get back to coding. Using the asset identifier from the pick, I can now fetch a photos asset with information about the Cinematic mode asset before I request it. I need to make sure to set request options to get the original version and allow for network access in case the asset is on iCloud, and then finally request the Cinematic mode asset as an AVAsset. And now to the exciting part. We have gotten the Cinematic mode asset. Let’s integrate playback. A rendered asset can be played back using AVPlayer and AVPlayerItem as a regular movie, and so can the movie track inside the Cinematic mode asset. But to realize the potential of Cinematic mode assets, we need to add a custom video compositor, which can handle multiple tracks, user changes, and finally, call the Cinematic renderer to compose the output. This custom compositor can also be used for thumbnails and offline export, but let’s focus on using it for playback and editing of Cinematic mode assets. I will only go into details specific to the Cinematic API, so for additional insights on custom video compositor classes and HDR, I recommend WWDC20, "Edit and Play Back HDR Video with AVFoundation." Let’s start using the Cinematic API. The Cinematic API uses the prefix CN, so you might be able to spot the three API calls needed to set up the rendering session which will render the shallow depth of field. The first call gets the rendering attributes from the Cinematic asset. The second sets up a rendering session with these attributes. The rendering session uses the GPU, so I need to provide a metal command queue. 
And the last call sets the rendering quality to export. Quality can be set to different enums like preview and export depending on your performance and quality constraints. In these and following code snippets, I have removed good practice error checks, like guard let else error, just to focus on the core code. The custom compositor needs a composition, which includes the Cinematic asset info needed to render the output. In a typical case, you would need multiple steps to add the multiple tracks, but fortunately, we provide an easy way to do this in just one step. You can add all the tracks, from the asset info to the composition, directly. The Cinematic composition info is just like the Cinematic asset info, but points to composition tracks. Then I get the Cinematic script from the Cinematic asset. The Cinematic script holds all the detections and focus decisions. And I will go into more detail about this later. For the custom compositor, I set up instruction with rendering session, composition with asset tracks, Cinematic script, and aperture as an f-number. This custom instruction describes how to compose the rendered Cinematic content.
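
Going back to the step of fetching the asset from the Photos library, the request described earlier might look roughly like the following sketch. It uses the Photos framework calls `PHAsset.fetchAssets(withLocalIdentifiers:options:)` and `PHImageManager.requestAVAsset(forVideo:options:resultHandler:)`; the function names `makeCinematicRequestOptions` and `requestCinematicAsset` are made up for this example, and the sketch assumes the app already has photo library authorization and a local identifier from the picker. It is not the sample app's code.

```swift
import AVFoundation
import Photos

/// Request options as described in the talk: the original version,
/// with network access allowed in case the asset is in iCloud.
func makeCinematicRequestOptions() -> PHVideoRequestOptions {
    let options = PHVideoRequestOptions()
    options.version = .original
    options.isNetworkAccessAllowed = true
    return options
}

/// Fetches the Photos asset for a local identifier and requests it as an AVAsset.
/// Calls `completion` with nil if the asset cannot be found or loaded.
/// (Hypothetical helper; error reporting is omitted to keep the sketch short.)
func requestCinematicAsset(localIdentifier: String,
                           completion: @escaping (AVAsset?) -> Void) {
    let result = PHAsset.fetchAssets(withLocalIdentifiers: [localIdentifier],
                                     options: nil)
    guard let photoAsset = result.firstObject else {
        completion(nil)
        return
    }
    PHImageManager.default().requestAVAsset(forVideo: photoAsset,
                                            options: makeCinematicRequestOptions()) { asset, _, _ in
        // The result handler may be called on a background queue.
        completion(asset)
    }
}
```

Requesting the original version matters here because the rendered version is the baked movie, while the original carries the extra tracks needed for editing.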

To play the video, I’ll need a video composition, to which I’ll add track IDs from the Cinematic composition, add a sample custom compositor, which is where the renderer is called to compose the effect, and add the composition instruction. Let’s take a closer look at what goes on in the custom compositor.

There are a few key things specific to the Cinematic API inside the startRequest function in the custom compositor. Using the track IDs from the Cinematic composition, I get the source buffers for the current frame from the original video track, the disparity track, the metadata track, and finally I create a buffer for the rendered output. These buffers allow us to make edits and new renders. From the metadata buffer, I can get the rendering frame attributes, which drive the rendering. The metadata is an opaque structure, so I’ll use CNRenderingSession and get the frame attributes directly. With the frame attributes, I can now make optional playback changes, in this case by changing the aperture f-number according to the instruction, which holds aperture changes from a UI element. Focus disparity can be changed in a similar fashion, but I’ll get back to making scene-driven changes using detections later.

At this point, we're really, really close to achieving playback. I just need to get a command buffer on the rendering command queue, so that the composed output can be rendered on the GPU. Then I encode the rendering using the updated frame attributes and the image and disparity buffers, and add a completion handler for the output buffer, which will be passed to the video composition. And finally I commit the command buffer. Now let’s try out playback in the sample app. I can now select a Cinematic mode asset from Photos library.

And when I play back the asset, the effect is applied in real time.

I can scrub back and forth and make changes to aperture, which changes the amount of bokeh.

Stopping down minimizes the effect.

Opening up increases it. Maybe this is too much.

I think this looks good.

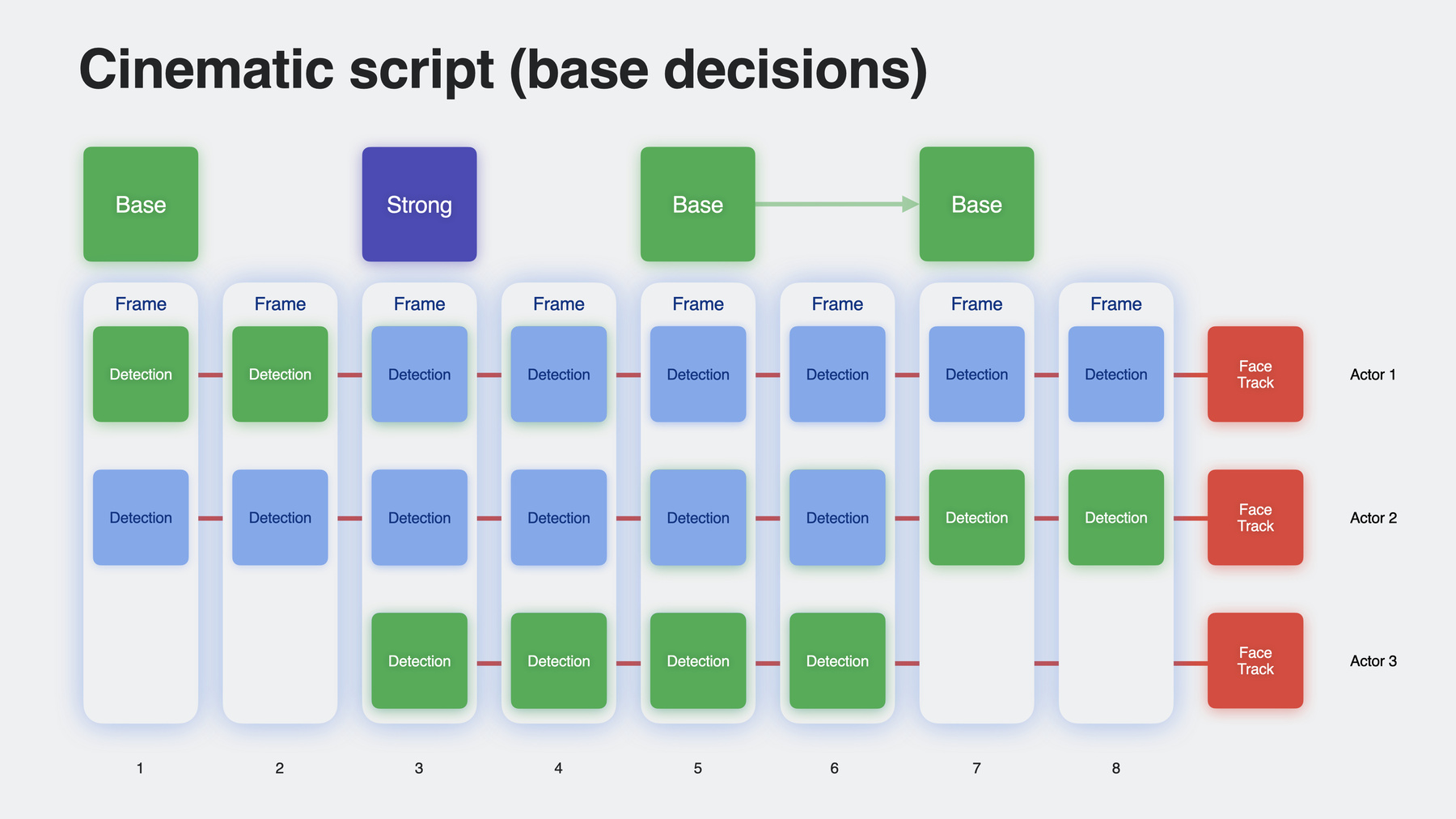

The app is already pretty cool, but it will get even cooler in the upcoming edit section. And to remind you, as a reference, code for each part is available in the sample app. I will now move on to extend the playback app and custom video compositor to do more advanced and nondestructive edits by changing the Cinematic script and thereby the focus. But let’s revisit the Photos app editing environment for Cinematic mode and look specifically at the focus, which is automatically driven during capture by detecting objects and deciding where and on what to focus. These detections and decisions are all in the Cinematic script, and the script can be changed to your narrative. The focus detection is shown as a yellow square, and below the timeline, we have keyframes and focus decision changes.

To better understand how this works, let’s break down the Cinematic script structure with a simple example. Let’s start with just two Cinematic script frames in a short sequence. Each holds all detections for a given point in time, and in this case just two detections. The detections are tracked over time with a group ID, which groups face, head, and torso together. In addition, cats, dogs, and balls are detected and tracked, but here we just have the face of Actor 1 and Actor 2. An automatic base decision puts focus on Actor 1 in the first frame, and focus will follow this track until a new decision. Even after Actor 3 enters the scene and introduces a new face and detection track, the focus remains on Actor 1. In frame 5, an automatic keyframe event changes the decision and focus to Actor 2. And after just four frames, Actor 3 does a disheartened exit without ever getting the focused attention she deserves. Focus remains on Actor 2 for the rest of the sequence. These automatic base decisions are based on a range of parameters like who faces the camera, who looks away, who’s closer, and what’s interesting.
And while we do our best to create a good narrative, you might have another one. Luckily, decisions can be changed, and actually in two ways. The first one is to add a weak user decision. This brings Actor 3 into focus as she enters the scene. However, a weak decision only follows a track until the next base or user decision, which happens in frame 5, where a base decision changes focus to Actor 2 for the remaining frames. So if we wanted focus to stay on Actor 3, we could add another weak decision in frame 5, or we could use something stronger. Yes, you might have guessed it, a strong user decision. A strong decision will keep focus on a subject until the next user decision to focus elsewhere or the end of the detection track. Adding a strong decision brings Actor 3 into focus and overrides the following base decision. After the detection track of Actor 3 ends, the focus falls back to the base decision, which is to focus on Actor 2.

The decision hierarchy works as follows: user decisions on top of base decisions, user decisions apply when possible, base decisions fill the gaps. So as done in this example, a user can change just parts of the script. And while both decisions revert to a base decision when a detection track ends, a strong decision holds its focus track as long as possible.

Before moving to change the script, let’s get a script frame and draw the detection boxes. First I need to grab the frame time from the video composition request, and then get the Cinematic script frame for that current point in time. This script frame holds all detections, including the focus detection. Now it’s easy to draw the detection boxes. By iterating over all the detections in the script frame, I can get each detection rectangle and draw it to a texture attached to a renderEncoder using a draw command. In this example, the detections are drawn in white. And to emphasize what’s currently the focusDetection, let’s draw that in another color. I get the focus detection directly from the Cinematic script frame, get its corresponding rectangle, and to make it stand out, I draw the focus rectangle in yellow. Let’s try the playback app with the added detection overlay. I can now enable the new detection overlay to draw the detections.
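
The decision hierarchy and the white and yellow overlay described above can be pictured with a small model. The sketch below is plain Swift with hypothetical types (`Strength`, `Decision`, `ScriptModel`, `OverlayColor`); it does not use the Cinematic API, and the frame numbers for Actor 3's track are assumptions chosen to match the example (she enters in frame 3 and is detected for four frames). It also simplifies by considering only the most recent user decision.

```swift
import Foundation

// Hypothetical model types for illustration; these are not Cinematic API types.
enum Strength { case base, weak, strong }
enum OverlayColor { case white, yellow }

struct Decision {
    let frame: Int
    let detectionID: Int
    let strength: Strength
}

struct ScriptModel {
    var tracks: [Int: ClosedRange<Int>]   // detectionID -> frames where it is detected
    var decisions: [Decision]

    /// Latest decision at or before `frame` that satisfies `matches`.
    private func latest(atOrBefore frame: Int,
                        where matches: (Decision) -> Bool) -> Decision? {
        decisions
            .filter { $0.frame <= frame && matches($0) }
            .max { $0.frame < $1.frame }
    }

    /// Detection in focus at `frame`: user decisions apply when possible,
    /// base decisions fill the gaps.
    func focusDetectionID(at frame: Int) -> Int? {
        let base = latest(atOrBefore: frame) { $0.strength == .base }
        if let user = latest(atOrBefore: frame, where: { $0.strength != .base }),
           let track = tracks[user.detectionID], track.contains(frame) {
            // A strong decision holds until its detection track ends.
            if user.strength == .strong { return user.detectionID }
            // A weak decision also yields to any base decision made after it.
            let supersededByBase = (base?.frame ?? Int.min) > user.frame
            if !supersededByBase { return user.detectionID }
        }
        return base?.detectionID
    }

    /// Overlay colors for the detections present at `frame`:
    /// yellow for the focus detection, white for the alternatives.
    func overlayColors(at frame: Int) -> [Int: OverlayColor] {
        let focusID = focusDetectionID(at: frame)
        var colors: [Int: OverlayColor] = [:]
        for (id, range) in tracks where range.contains(frame) {
            colors[id] = (id == focusID) ? .yellow : .white
        }
        return colors
    }
}

// Actors 1 and 2 are detected throughout; Actor 3 is detected in frames 3 to 6.
let tracks: [Int: ClosedRange<Int>] = [1: 1...10, 2: 1...10, 3: 3...6]
let baseDecisions = [Decision(frame: 1, detectionID: 1, strength: .base),
                     Decision(frame: 5, detectionID: 2, strength: .base)]

let weakEdit = ScriptModel(
    tracks: tracks,
    decisions: baseDecisions + [Decision(frame: 3, detectionID: 3, strength: .weak)])
let strongEdit = ScriptModel(
    tracks: tracks,
    decisions: baseDecisions + [Decision(frame: 3, detectionID: 3, strength: .strong)])

let weakFocus = (1...10).map { weakEdit.focusDetectionID(at: $0) ?? 0 }
let strongFocus = (1...10).map { strongEdit.focusDetectionID(at: $0) ?? 0 }
print(weakFocus)    // [1, 1, 3, 3, 2, 2, 2, 2, 2, 2]
print(strongFocus)  // [1, 1, 3, 3, 3, 3, 2, 2, 2, 2]
```

With the weak decision, focus leaves Actor 3 at the base decision in frame 5. With the strong decision, it stays on her until her track ends after frame 6 and then falls back to Actor 2.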

This scene shows face, head, and torso in white and the focus detection in yellow. You can customize the overlay to only show a specific subset of detections if that makes sense for your app. When I start playback, notice how the focus and rendering follow the yellow focus detections through the movie while alternative detections just show in white. Let me show you how to use these alternative detections to change the focus.

Once we know how to draw the detection boxes, actually changing the script with a UI tapping point is very similar. Again I’ll iterate over all detections, and if the tapping point is inside a detection, I’ll get its corresponding detectionID and create a new decision. The decision strength can be set in the UI. In the sample app, weak is set with a single tap and strong with a double tap, but the UI is really up to you.
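
The tap handling just described amounts to a hit test over the detection rectangles. Here is a minimal sketch in plain Swift; `Detection`, `UserDecision`, and `userDecision(forTapAt:tapCount:time:detections:)` are hypothetical names for this example rather than Cinematic API types, and the sketch assumes the tap point and rectangles share the same normalized coordinate space.

```swift
import Foundation

// Hypothetical model types for illustration; these are not Cinematic API types.
struct Detection {
    let detectionID: Int
    let rect: CGRect          // assumed to be in normalized image coordinates
}

struct UserDecision: Equatable {
    let time: Double          // seconds into the clip
    let detectionID: Int
    let isStrong: Bool
}

/// Returns a decision for the first detection containing the tap point,
/// or nil if the tap misses every detection.
/// A single tap gives a weak decision, a double tap a strong one,
/// mirroring the sample app's convention.
func userDecision(forTapAt point: CGPoint,
                  tapCount: Int,
                  time: Double,
                  detections: [Detection]) -> UserDecision? {
    guard let hit = detections.first(where: { $0.rect.contains(point) }) else {
        return nil
    }
    return UserDecision(time: time,
                        detectionID: hit.detectionID,
                        isStrong: tapCount >= 2)
}

let detections = [
    Detection(detectionID: 1, rect: CGRect(x: 0.1, y: 0.2, width: 0.2, height: 0.3)),
    Detection(detectionID: 2, rect: CGRect(x: 0.6, y: 0.2, width: 0.2, height: 0.3)),
]

let doubleTap = userDecision(forTapAt: CGPoint(x: 0.7, y: 0.3), tapCount: 2,
                             time: 1.5, detections: detections)
let missedTap = userDecision(forTapAt: CGPoint(x: 0.45, y: 0.9), tapCount: 1,
                             time: 1.5, detections: detections)
print(doubleTap as Any, missedTap as Any)
```

Face, head, and torso boxes for the same subject can overlap, so an app might prefer a different tie-break than first match, such as choosing the smallest containing rectangle.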

Then finally I add this new user decision to the Cinematic script, and the script has changed. So let me talk about how the updated script drives the focus. Let’s consider this a normal clip length and look at three decisions and their corresponding focus detection tracks. Let’s arrange the focus tracks according to time and distance in a 2D plot. Because the Cinematic engine knows the whole updated script, it can make smooth focus transitions ahead of time. It looks something like this, where a focus rack starts ahead of time to reach focus at the beginning of each keyframe. It’s pretty magical, and it works like a focus puller, who can direct attention and rack focus ahead of time by knowing the set markers.
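
To picture what starting a focus rack ahead of time means, the following sketch computes a focus disparity for any time from a list of keyframes, beginning each transition early so that it completes exactly at the keyframe. This is a simplified model, not the Cinematic engine's algorithm: the fixed `rackDuration`, the smoothstep easing, and the constant disparity per keyframe are all assumptions made for illustration, and `FocusKeyframe` is a hypothetical type.

```swift
import Foundation

// Hypothetical keyframe: the time a decision takes effect and the
// (relative) disparity of the subject it focuses on.
struct FocusKeyframe {
    let time: Double
    let disparity: Double
}

/// Ease-in, ease-out curve on the range 0...1 (an assumed easing choice).
func smoothstep(_ x: Double) -> Double {
    let t = min(max(x, 0), 1)
    return t * t * (3 - 2 * t)
}

/// Focus disparity at `time`. Keyframes must be sorted by time and spaced
/// further apart than `rackDuration`. Each rack starts `rackDuration`
/// before its keyframe and reaches the target at the keyframe.
func focusDisparity(at time: Double,
                    keyframes: [FocusKeyframe],
                    rackDuration: Double) -> Double? {
    guard let first = keyframes.first else { return nil }
    var current = first.disparity
    for next in keyframes.dropFirst() {
        let rackStart = next.time - rackDuration
        if time <= rackStart { break }          // rack has not started yet
        if time >= next.time {                  // rack already finished
            current = next.disparity
            continue
        }
        let progress = (time - rackStart) / rackDuration
        return current + (next.disparity - current) * smoothstep(progress)
    }
    return current
}

let keyframes = [FocusKeyframe(time: 0.0, disparity: 0.8),
                 FocusKeyframe(time: 2.0, disparity: 0.3),
                 FocusKeyframe(time: 4.0, disparity: 0.6)]

for time in [1.0, 1.75, 2.0, 3.0, 4.5] {
    let focus = focusDisparity(at: time, keyframes: keyframes, rackDuration: 0.5)
    print(time, focus ?? .nan)
}
```

A transition like this is only possible because the whole script is known in advance; a live system would have to start moving focus after the keyframe rather than before it.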

The racked focus disparity can be accessed directly from a frame in the updated Cinematic script. I have already shown how to extract a script frame, and changing the frame attributes for focus is similar to changing the aperture. Once I have a script frame from the updated script, I can update the frame attributes for focus disparity directly. This will pass focus with smooth transitions according to the updated script to the renderer. Let’s try out tapping to change the script and focus in the sample app. Going from playback mode to edit, I can now update the Cinematic script. I can change focus decisions by either single-tapping to get a weak decision, shown as a yellow dashed box, or by double-tapping to get a strong decision and a solid yellow box. You can see how the focus and rendering change according to my user input when I tap on different players. I think this is super cool. Scrub, scrub. Tap, tap, tap. Change the aperture. Scrub, tap, tap, double-tap.

And now that I have completed editing, how can I save these edit changes? The script changes can be saved in a separate data file, and saving the changes separately means the original remains unchanged. And changes can always be reverted back to the original. So I get the script changes, get the changes as a compact binary representation, and write the changes to a data file. The Cinematic API handles the data representation. And you will need to store this data in your app so it can be reloaded to your needs. Loading script changes is done in three equally easy steps. I get the binary data from the data file, unpack the script changes, and reload the changes into the Cinematic script. Changes can also be loaded simultaneously with the original script. So that’s how we can edit and render and save and load the Cinematic script changes. As mentioned earlier, the custom video compositor can be used to export rendered videos. And these can be rendered right back into Photos library. This is covered in detail in the sample code. The API also includes a tracker, CNObjectTracker, which can be used for objects without automatic detections. The sample code covers how the tracker can be engaged by tapping on an object without a detection. And the tracker will provide a detection track and can be added to the script. And now to some more advanced possibilities. You can also add custom tracking by providing your own tracker and add your own custom detection track to the script. And you can make custom changes to the rendering attributes per frame, allowing for custom transitions and aperture changes. I’m personally super excited about what you can all do with Cinematic mode and the new API. And whether it’s a simple app or a complex editor, I’m sure you can come up with ideas we haven’t even thought of. 
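
Returning to the save and load steps described above: once the Cinematic API has produced the binary representation of the script changes, storing it is an ordinary file write. The sketch below shows only that file round trip with Foundation. The function names, the file name, and the placeholder bytes are hypothetical; the real bytes would come from the Cinematic API, which is not called here.

```swift
import Foundation

/// Writes an opaque blob of script changes to a sidecar file.
/// The original asset is never touched, which keeps the edit nondestructive.
func saveScriptChanges(_ changes: Data, to url: URL) throws {
    try changes.write(to: url, options: .atomic)
}

/// Reads the blob back so it can be handed to the API for unpacking.
func loadScriptChanges(from url: URL) throws -> Data {
    try Data(contentsOf: url)
}

// Placeholder bytes standing in for the representation the Cinematic API produces.
let placeholderChanges = Data([0x43, 0x4E, 0x01, 0x02])
let sidecarURL = FileManager.default.temporaryDirectory
    .appendingPathComponent("example-script-changes.bin")

do {
    try saveScriptChanges(placeholderChanges, to: sidecarURL)
    let reloaded = try loadScriptChanges(from: sidecarURL)
    print("Round trip matches:", reloaded == placeholderChanges)
} catch {
    print("Saving or loading failed: \(error)")
}
```

An app would typically key such a file to the asset it belongs to, for example by the asset's local identifier, so the right changes can be reloaded later.
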
This was an introduction to the new Cinematic API, which allows you to integrate Cinematic mode video assets for playback with playback adjustments, change the Cinematic script and focus to make nondestructive edits and new renders, and save and load these script changes. Please check out this and more in the included sample app. Thank you for your focus, and happy coding. ♪ ♪
