
Create a more responsive camera experience

Discover how AVCapture and PhotoKit can help you create more responsive and delightful apps. Learn about the camera capture process and find out how deferred photo processing can help create the best quality photo. We'll show you how zero shutter lag uses time travel to capture the perfect action photo, dive into building a responsive capture pipeline, and share how you can adopt the Video Effects API to recognize pre-defined gestures that trigger real-time video effects.

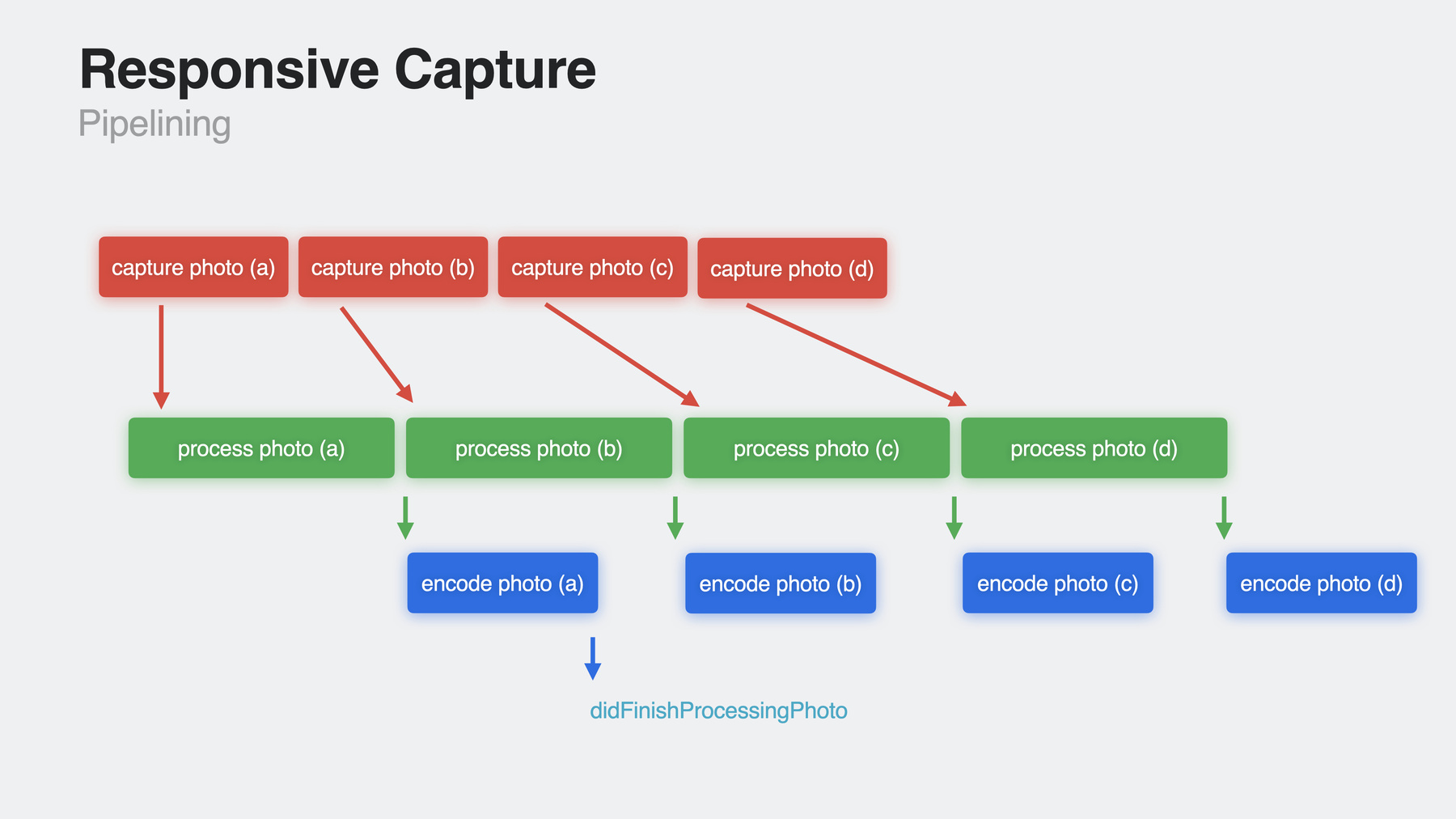

Rob: Hi. I'm Rob Simutis from the Camera software team, with Sebastian Medina from the Photos team, and welcome to our session, "Create a more responsive camera experience." We'll be presenting a slew of powerful new APIs in the AVFoundation capture classes and in the PhotoKit framework. First, we'll talk about deferred photo processing. Then, I'll show how you can really "capture the moment" with zero shutter lag. Third, I'll cover our new Responsive Capture APIs. And finally, I'll go over a different way to be responsive with updated video effects. Starting with iOS 13, you could use the AVCapturePhotoSettings photoQualityPrioritization enum value to change the responsiveness, or how quickly you could capture and get a processed photo back, and then be able to take the next photo. Your app could choose among different enum values to achieve the right responsiveness, but it impacted image quality. Or if you always wanted to use the quality value, you could impact shot-to-shot time. In iOS 17, you can still use this API, but we're bringing you new, complementary APIs so you can improve the chances of getting the shot you wanted while also getting higher-quality photos. I'm going to walk you through this app my team built for our session. By flipping each toggle switch to "on," we can enable the new features this year, and we'll build on the concepts one by one to make a more responsive photography experience. So let's get moving, starting with deferred photo processing. Today, to get the highest quality photos out of the AVCapturePhotoOutput, you use a photo quality prioritization enum value of "quality" on the settings when you capture a photo. For our "balanced" and "quality" values, this typically involves some type of multiple-frame fusion and noise reduction. On iPhone 11 Pro and newer models, one of our most advanced techniques is called "Deep Fusion." This gives amazing, crisp detail in high-resolution photos.
In this Deep Fusion shot, the parrot's feathers are super-sharp, and really stand out. But it comes at a cost. This processing must complete before the next capture request will start, and it may take some time to finish. Let's look at a real-life example. I'm putting together a presentation about how the camera software team gets to the office to get its work done. Here's my colleague Devin using the latest bike technology to get around Apple Park. As I tap, I'm waiting for the shutter button to finish spinning from one shot before I can take the next one. The end results are great Deep Fusion photos. Just check out that beard detail! But while I was tapping, the processing runs synchronously, and the shot-to-shot time feels a bit sluggish. So I might have gotten a good photo with crisp detail, but maybe not exactly the shot I was looking for. Let's check out a diagram of events. You call AVCapturePhotoOutput's capturePhoto method with your settings, and your delegate receives callbacks at various points in the process, such as willBeginCaptureFor resolvedSettings. The camera software stack grabs the frames from the sensor, and uses our processing techniques to fuse them into a Deep Fusion image. Then it sends the photo back to you via the didFinishProcessingPhoto delegate callback. This processing must complete before the next capture happens, and that might take some time. You can even call capturePhoto before the didFinishProcessingPhoto callback fires, but it won't start until the previous photo's processing is complete. With deferred photo processing, this timeline shrinks. You request a photo, and, when suitable, the camera pipeline will deliver a lightly-processed "proxy" photo via a new didFinishCapturingDeferredPhotoProxy delegate callback. You store this proxy photo into the library as a placeholder. And the next photo can be immediately captured.
The system will run the processing later to get the final photo once the camera session has been dismissed. So now if I turn on deferred photo processing in my app's settings, the capture session will reconfigure itself to deliver me proxy photos at the time of capture when appropriate. And I can get the same sharp, highly-detailed photos as before, but I can take more of them while I'm in the moment by deferring the final processing to a later time. Nice wheelie. Those bikes are solid. There we go. That's a great photo for my presentation, beard and all. So let's look at all the parts that interact to give you a deferred-processed photo. As a brief refresher course from earlier WWDC presentations, when configuring an AVCaptureSession to capture photos, you add an AVCaptureDeviceInput with an AVCaptureDevice, that is, the camera, to the session. Then, you add an AVCapturePhotoOutput to your session, and you select a particular format or session preset, typically the "Photo" session preset. When your app calls capturePhoto on the photoOutput, if it's a type of photo that is better suited to deferred photo processing, we'll call you back with didFinishCapturingDeferredPhotoProxy. From there, you send the proxy data to the photo library. So what you've got now in your library is a proxy photo, but you'll eventually want to use or share the final image. Final photo processing happens either on-demand when you request the image data back from the library, or in the background when the system determines that conditions are good to do so, such as the device being idle. And now I'll let my colleague Sebastian show you how to code this up. Over to you, Sebastian. Sebastian: Thanks, Rob. Hi, my name's Sebastian Medina and I'm an engineer on the Photos team. Today I'll go over taking a recently captured image through PhotoKit to trigger deferred processing. Then I’ll be requesting that same image to show what’s new in receiving images from a PHImageManager request.
Although, before I get into processing the asset through PhotoKit, I need to make sure the new Camera API for deferred processing is set up. This will allow my app to accept deferred photo proxy images, which we can send through PhotoKit. Now, I’ll go ahead and write the code to take advantage of this. Here, I've set up the AVCapturePhotoOutput and AVCaptureSession objects. Now, I can begin configuring our session. In this case, I want the session to have a preset of type photo so we can take advantage of deferred processing. Now I’ll grab the capture device to then set up the device input. Then, if possible, I'll add the device input. Next, I’ll want to check if photoOutput can be added. And if so, add it. Now for the new stuff. I’ll check if the new autoDeferredPhotoDeliverySupported value is true to make sure I can send captured photos through deferred processing. If this passes, then I can go ahead and opt into the new deferred photo delivery with the property autoDeferredPhotoDeliveryEnabled. This deferred photo delivery check and enablement are all you would need to add to your Camera code to enable deferred photos. Lastly, I'll commit our session configuration. So now when a call is made to the capturePhoto method, the delegate callback we receive will hold a deferred proxy object. Let's check out an example of one of these callbacks. In this photo capture callback I'm receiving the AVCapturePhotoOutput and AVCaptureDeferredPhotoProxy objects from Camera, which are related to an image I recently captured. First, it's good practice to make sure we're receiving suitable photo output values, so I'll check the value of the error parameter. Now, we'll start getting into saving our image to the photo library using PhotoKit. I'll be performing changes on the shared PHPhotoLibrary. Although, just note, it's only required to have write access to the photo library. Then I'll capture the photo data from the AVCaptureDeferredPhotoProxy object.
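
The session setup Sebastian narrates above might look roughly like the following. This is a minimal sketch, not the session's own sample code: the class name `DeferredCaptureConfigurator` and its Boolean return value are illustrative assumptions, and the `maxPhotoQualityPrioritization` line is an added assumption that is not mentioned in the narration.

```swift
import AVFoundation

// Hypothetical wrapper type; the name is an assumption made for illustration.
@available(iOS 17.0, *)
final class DeferredCaptureConfigurator {
    let session = AVCaptureSession()
    let photoOutput = AVCapturePhotoOutput()

    /// Configures the session and returns true if deferred photo delivery
    /// ended up enabled on the photo output.
    @discardableResult
    func configureSession() -> Bool {
        session.beginConfiguration()
        // Commit the configuration on every exit path.
        defer { session.commitConfiguration() }

        // The talk uses the photo preset so deferred processing can apply.
        session.sessionPreset = .photo

        // Grab the capture device, wrap it in an input, and add it if possible.
        guard let device = AVCaptureDevice.default(for: .video),
              let input = try? AVCaptureDeviceInput(device: device),
              session.canAddInput(input) else { return false }
        session.addInput(input)

        // Add the photo output if the session accepts it.
        guard session.canAddOutput(photoOutput) else { return false }
        session.addOutput(photoOutput)

        // Assumption (not in the narration): allow "quality" prioritization,
        // since that is the kind of capture deferred processing targets.
        photoOutput.maxPhotoQualityPrioritization = .quality

        // The new part: check for support, then opt in.
        if photoOutput.isAutoDeferredPhotoDeliverySupported {
            photoOutput.isAutoDeferredPhotoDeliveryEnabled = true
        }
        return photoOutput.isAutoDeferredPhotoDeliveryEnabled
    }
}
```

Wrapping the changes in `beginConfiguration()` and a deferred `commitConfiguration()` is one way to make sure the session is committed even when an early return is taken.
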

Since I'll be performing changes to the photo library, I'll need to set up the related performChanges instance method. Like with saving any asset, I'll use a PHAssetCreationRequest. Then I'll call the 'addResource' method on the request. For the parameters, I'll use the new PHAssetResourceType '.photoProxy'. This is what tells PhotoKit to trigger deferred processing on the image. Then I can add the previously captured proxy image data. And in this case I won't be using any options. Here, it's important to know that using this new resource type on image data that doesn't require deferred processing will result in an error. And speaking of errors, I'll go ahead and check for them within the completion handler. And it's as easy as that. Go ahead and handle the success and error within the completion handler as your application sees fit. Now, say I want to retrieve our asset. I can achieve that through a PHImageManager request, so I'll go through the code to do just that. For parameters I have a PHAsset object for the image I just sent through PhotoKit, the target size of the image to be returned, and the content mode. I'll grab a default PHImageManager object. Then, I can call the requestImageForAsset method using our imageManager object. For the parameters, I'll use the asset I previously fetched, the target size, content mode, and in this case, I will not be using any options. Now I can handle the callbacks through the resultHandler where the resultImage is a UIImage and info is a dictionary related to the image. Today, the first callback will hold a lower resolution image with the info dictionary key PHImageResultIsDegradedKey while the final image callback will not. So, I can make a check for those here. The addition of creating processed images through PhotoKit gives a good opportunity to bring up our new API which will allow developers to receive a secondary image from the requestImageForAsset method.
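
The save-and-request flow described above could be sketched as follows. Treat it as an illustration under assumptions rather than the session's actual code: the class name `ProxyPhotoHandler`, the `print` logging, and the helper `requestImage(for:targetSize:contentMode:)` are hypothetical names introduced here.

```swift
import AVFoundation
import Photos
import UIKit

// Hypothetical delegate type; the name is an assumption made for illustration.
@available(iOS 17.0, *)
final class ProxyPhotoHandler: NSObject, AVCapturePhotoCaptureDelegate {

    // Called when the camera pipeline delivers a proxy instead of a final photo.
    func photoOutput(_ output: AVCapturePhotoOutput,
                     didFinishCapturingDeferredPhotoProxy deferredPhotoProxy: AVCaptureDeferredPhotoProxy?,
                     error: Error?) {
        // Check the error parameter first.
        if let error {
            print("Deferred capture failed: \(error)")
            return
        }
        // Pull the proxy's file data so it can be handed to the photo library.
        guard let proxyData = deferredPhotoProxy?.fileDataRepresentation() else {
            print("No proxy data available")
            return
        }

        PHPhotoLibrary.shared().performChanges({
            // .photoProxy is what tells PhotoKit to trigger deferred processing.
            let request = PHAssetCreationRequest.forAsset()
            request.addResource(with: .photoProxy, data: proxyData, options: nil)
        }, completionHandler: { success, error in
            // Handle success and failure however the app sees fit.
            if let error {
                print("Saving proxy failed: \(error)")
            } else {
                print("Proxy saved: \(success)")
            }
        })
    }

    // Requests the image back; the handler may fire more than once.
    func requestImage(for asset: PHAsset,
                      targetSize: CGSize,
                      contentMode: PHImageContentMode) {
        PHImageManager.default().requestImage(for: asset,
                                              targetSize: targetSize,
                                              contentMode: contentMode,
                                              options: nil) { image, info in
            // The first callback carries PHImageResultIsDegradedKey = true.
            let isDegraded = (info?[PHImageResultIsDegradedKey] as? Bool) ?? false
            print(isDegraded ? "Got low-resolution placeholder" : "Got final image",
                  image?.size ?? .zero)
        }
    }
}
```
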
Since it may take longer for an image going through deferred processing to finalize, this new secondary image can be shown in the meantime. To receive this new image, you would use the new allowSecondaryDegradedImage in PHImageRequestOptions. This new image will be nestled between the current two callbacks from the requestImageForAsset method. And the information dictionary related to the image will have an entry for PHImageResultIsDegradedKey, which is used today in the first image callback. To better illustrate what’s going on, today, the requestImageForAsset method provides two images. The first is a low-quality image suitable for displaying temporarily while it prepares the final high-quality image. With this new option, between the current two, you will be provided a new, higher resolution image to display while the final is being processed. Displaying this new image will give your user a more pleasant visual experience while waiting for the final image to finish processing. Now, let's write the code to take advantage of this. Although, this time, I'll be creating a PHImageRequestOptions object. I will then set the new allowSecondaryDegradedImage option to be true. This way the request knows to send back the new secondary image callback. Here, I can go ahead and reuse the requestImageForAsset method I wrote before, although now I'll be adding the image request options object I just created. Since the new secondary image info dictionary will hold a true value for PHImageResultIsDegradedKey, just like the first callback, I'll check for that here. And that's it for receiving the new secondary image representation. Remember to handle the images within the result handler to best support your app. Now you know how to add images to your photo library with deferred processing and how to receive a secondary higher quality image from an image request to display in your app while waiting for the final image to finish processing. 
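
The secondary-image request Sebastian describes might be written along these lines. This is a hedged sketch: the function name and the `display` callback are hypothetical, introduced only to show where an app would update its UI.

```swift
import Photos
import UIKit

// Hypothetical helper; `display` is an assumed app-provided callback that
// receives each delivered image plus a flag telling whether it is degraded.
@available(iOS 17.0, *)
func requestImageWithSecondaryPreview(for asset: PHAsset,
                                      targetSize: CGSize,
                                      contentMode: PHImageContentMode,
                                      display: @escaping (UIImage, Bool) -> Void) {
    let options = PHImageRequestOptions()
    // Opt in to the extra, higher-resolution intermediate callback.
    options.allowSecondaryDegradedImage = true

    PHImageManager.default().requestImage(for: asset,
                                          targetSize: targetSize,
                                          contentMode: contentMode,
                                          options: options) { image, info in
        guard let image else { return }
        // Both the first and the secondary image report isDegraded == true;
        // only the final image reports false (or omits the key).
        let isDegraded = (info?[PHImageResultIsDegradedKey] as? Bool) ?? false
        display(image, isDegraded)
    }
}
```

Because the first and secondary callbacks both carry a true value for `PHImageResultIsDegradedKey`, this sketch simply replaces whatever is on screen each time the handler fires and treats a non-degraded result as final.
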
These changes will be available starting with iOS 17, tvOS 17, and macOS Sonoma alongside the new deferred processing PhotoKit changes. Now, I’ll hand it back to Rob for more on the new tools to create a more responsive Camera. Rob: Awesome. Thanks, Sebastian! Let's get into the fine details to ensure you have a great experience with deferred photo processing. We'll start with the photo library. To use deferred photo processing, you'll need to have write permission to the photo library to store the proxy photo, and read permission if your app needs to show the final photo or wants to modify it in any way. But remember, you should only request the smallest amount of access to the library as needed from your customers to maintain the most privacy and trust on their behalf. And, we strongly recommend that once you receive the proxy, you get its fileDataRepresentation into the library as quickly as possible. When your app is backgrounded, you have a limited amount of time to run before the system suspends it. If memory pressure becomes too great, your app may be automatically force-quit by the system during that window of backgrounding. Getting the proxy into the library as quickly as possible ensures minimal chance of data loss for your customers. Next, if you normally make changes to a photo's pixel buffer like applying a filter, or if you make changes to metadata or other properties of AVCapturePhoto using AVCapturePhotoFileDataRepresentationCustomizer, these won't take effect for the finalized photo in the library once processing is complete. You'll need to do this later as adjustments to the photo using the PhotoKit APIs. Also, your code needs to be able to handle both deferred proxies and non-deferred photos in the same session. This is because not all photos make sense to handle with the extra steps necessary.
For example, flash captures taken under the "quality" photo quality prioritization enum value aren't processed in a way that benefits from the shot-to-shot savings like a Deep Fusion photo does. You also might notice that there's no opt-in or opt-out property on the AVCapturePhotoSettings. That's because deferred photo processing is automatic. If you opt in and the camera pipeline takes a photo that requires longer processing time, it will send you a proxy back. If it's not suitable, it will send you the final photo, so there's no need to opt in or opt out on a per-shot basis. You just need to set isAutoDeferredPhotoDeliveryEnabled to true on the AVCapturePhotoOutput before you start the capture session. Finally, let's talk about user experience. Deferred photo processing provides our best image quality with rapid shot-to-shot times, but that just delays the final processing until a later point. If your app is one where the user may want the image right away for sharing or editing and they're not as interested in the highest quality photos we provide, then it may make sense to avoid using deferred photo processing. This feature is available starting with iPhone 11 Pro and 11 Pro Max and newer iPhones. And here are some great related videos on working with AVCapturePhotoOutput and handling library permissions. And now, let's turn to Zero Shutter Lag and talk about skateboarding. For my upcoming presentation about the camera software team's modes of transport, we went to a skate park to grab some footage. I'm filming my coworker with Action Mode on my iPhone 14 Pro and I also want to get some high-quality hero action shots for my slides. But, spoiler alert, I will not be skateboarding. I tap the shutter button to grab a photo of my colleague, Tomo, catching air. When I go to the camera roll to inspect the photo, this was what I got. I tapped the shutter button when he was at the height of his jump, but the photo is of his landing.
It's not exactly what I wanted. So what happened? Shutter lag. Shutter lag happened. You can think of "shutter lag" as the delay from when you request a capture to reading out one or more frames from the sensor to fuse into a photo and deliver it to you. Here, time is going from left to right, left being older frames, and the right being newer frames. Say frame five is what's in your camera viewfinder. Today, when you call capturePhoto with settings on an AVCapturePhotoOutput, the camera pipeline starts grabbing frames from the sensor and applies our processing techniques. But the bracket of frames captured starts after touch-down, after frame five. What you get is a photo based on frames six through nine, or even later. At 30 frames per second, each frame is in the viewfinder for 33 milliseconds. It doesn't sound like much, but it really doesn't take long for the action to be over with. That's long enough for Tomo to have landed, and I've missed getting that hero shot. With Zero Shutter Lag enabled, the camera pipeline keeps a rolling ring buffer of frames from the past. Now, frame five is what you see in the viewfinder, you tap to capture, and the camera pipeline does a little bit of time travel, grabs frames from the ring buffer, and fuses them together and you get the photo you wanted. So now if I use the second toggle in my app's settings pane to enable Zero Shutter Lag, as Tomo catches air, when I tap the shutter button I got one of the "hero" shots I wanted for my presentation. Let's talk about what you need to do to get Zero Shutter Lag in your app. Absolutely nothing! We've enabled Zero Shutter Lag on apps that link on or after iOS 17 for AVCaptureSessionPresets and AVCaptureDeviceFormats where isHighestPhotoQualitySupported is true. But, should you find during testing that you're not getting the results you want, you can set AVCapturePhotoOutput.isZeroShutterLagEnabled to false to opt out.
And you can verify if the photoOutput supports zero shutter lag for the configured preset or format by checking if isZeroShutterLagSupported is true once the output is connected to your session. Certain types of still image captures such as flash captures, configuring the AVCaptureDevice for a manual exposure, bracketed captures, and constituent photo delivery, which is synchronized frames from multiple cameras, don't get Zero Shutter Lag. Because the camera pipeline is traveling back in time to grab frames from the ring buffer, users could induce camera shake into the photo if there's a long delay between the gesture to start the capture and when you send photo output the photoSettings. So you'll want to minimize any work you do between the tap event and the capturePhoto API call on the photo output. Rounding out our features for making a more responsive photography experience, I'll now cover the Responsive Capture APIs. This is a group of APIs to allow your customers to take overlapping captures, prioritize shot-to-shot time by adapting photo quality, and also give great UI feedback for when they can take their next photo. First, the main API, responsive capture. Back at the skate park, with the two features enabled earlier, I can capture about two photos per second. We've slowed down the footage to help make it clear. At two frames per second, you can't see as much of the action of Tomo in the air and this was the best photo I ended up with. Pretty good, but let's see if we can do better. I'll now turn on the 3rd and 4th switches to enable Responsive Capture features. I'll go over Fast Capture Prioritization in a little bit. But first, back to the park! And let's try that again. With responsive capture, I can take more photos in the same amount of time, increasing the chance of catching just the right one. And there's the "hero" shot for the start of my presentation. The team's really gonna love it! 
You can think of a call to the AVCapturePhotoOutput.capturePhoto with settings method as going through three distinct phases: capturing frames from the sensor, processing those frames to the final uncompressed image, and then encoding the photo into a HEIC or JPEG. After the encoding is finished, the photo output will call your delegate's didFinishProcessingPhoto callback, or if you've opted in to the deferred photo processing API, perhaps didFinishCapturingDeferredPhotoProxy, if it's a suitable shot. But once the "capture" phase is done and the "processing" starts, the photo output could, in theory, start another capture. And now, that theory is reality, and is available to your app. By opting in to the main Responsive Capture API, the photo output will overlap these phases so that a new photo capture request can start while another request is in the processing phase, giving your customers faster and more consistent back-to-back shots. Note that this will increase peak memory used by the photo output, so if your app is also using a lot of memory it will put pressure on the system, in which case you may prefer or need to opt out. Back to our timeline diagram, here, you take two photos in rapid succession. Your delegate will be called back for the willBeginCaptureFor resolvedSettings, and didFinishCaptureFor resolvedSettings for photo A. But then instead of getting a didFinishProcessingPhoto callback for photo A, which is when the photo is encoded and delivered to you, you could get the first willBeginCaptureFor resolvedSettings for photo B. There are now two in-flight photo requests, so you'll have to make sure your code properly handles callbacks for interleaved photos. To get those overlapping, responsive captures, first enable Zero Shutter Lag when it is supported. It must be on in order to get responsive capture support.
Then use the AVCapturePhotoOutput isResponsiveCaptureSupported API to ensure the photo output supports it for the preset or format, and then turn it on by setting AVCapturePhotoOutput.isResponsiveCaptureEnabled to true. Earlier, we enabled "fast capture prioritization," so I'll go over that, briefly, now. When it's turned on for the photo output, it will detect when multiple captures are being taken over a short period of time, and in response, will adapt the photo quality from the highest quality setting to more of a "balanced" quality setting to maintain shot-to-shot time. But, since this can impact photo quality, it's off by default. In Camera.app's Settings pane, this is called "Prioritize Faster Shooting." We've chosen to have it on by default for Camera.app because we think that consistent shot-to-shot times are more important by default, but you might choose differently for your app and your customers. As you might expect by now, you can check the isFastCapturePrioritizationSupported property on the photo output for when it's supported, and when it is, you can set isFastCapturePrioritizationEnabled to true if you or your customers want to use the feature. Now, let's chat about managing button states and appearance. The photo output can give indicators of when it's ready to start the next capture, or when it's processing, and you can update your photo capture button appropriately. This is done via an enum of values called AVCapturePhotoOutput.CaptureReadiness. The photo output can be in a "not running" state, a "ready" state, or one of three "not ready" states: "momentarily," "waiting for capture," or "waiting for processing." The "not ready" enums indicate that if you call capturePhoto with settings, you'll incur a longer wait time between the capture and the photo delivery, increasing that shutter lag I talked about previously. Your app can listen for this state change by using a new class, AVCapturePhotoOutputReadinessCoordinator.
This makes callbacks to a delegate object you provide when the photo output's readiness changes. You can use this class even if you don't use the Responsive Capture or Fast Capture Prioritization APIs. Here's how you can convey shutter availability and modify button appearance using the Readiness Coordinator and the Readiness enum. The app for our session turns off user interaction events on the capture button when handling a "not ready" enum value to prevent additional requests from getting enqueued inadvertently with multiple taps, resulting in long shutter lag. After a tap, and a capturePhoto with settings request has been enqueued, the captureReadiness state goes between .ready and the .notReadyMomentarily enum value. Flash captures hit the .notReadyWaitingForCapture state. Until the flash fires, the photo output hasn't even gotten frames from the sensor, so the button is dimmed. Finally, if you only use zero shutter lag and none of the other features this year, you might show a spinner while the .notReadyWaitingForProcessing enum value is the current readiness, as each photo's capture and processing is completing. So here's how you make use of the readiness coordinator in code. First, create a readiness coordinator for the photo output, and set an appropriate delegate object to receive callbacks about the readiness state. Then, at the time of each capture, set up your photo settings as you normally would. Then, tell the readiness coordinator to start tracking the capture request's readiness state for those settings. And then call capturePhoto on the photo output. The readiness coordinator will then call the captureReadinessDidChange delegate callback. You update your capture button's state and appearance based on the readiness enum value received to give your customers the best feedback on when they can capture next.
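
Putting the opt-in checks and the readiness coordinator steps together, one possible arrangement is sketched below. The class name `ResponsiveCaptureController`, the `shutterButton` and `spinner` views, the `prioritizeFastCapture` flag, and the specific alpha values are all assumptions made for illustration. Nesting the fast capture prioritization check inside the responsive capture check, and hopping to the main queue before touching UIKit, are conservative choices of this sketch rather than requirements stated in the session.

```swift
import AVFoundation
import UIKit

// Hypothetical controller; names and UI elements are illustrative assumptions.
@available(iOS 17.0, *)
final class ResponsiveCaptureController: NSObject,
    AVCapturePhotoCaptureDelegate,
    AVCapturePhotoOutputReadinessCoordinatorDelegate {

    private let photoOutput: AVCapturePhotoOutput
    private let coordinator: AVCapturePhotoOutputReadinessCoordinator
    private let shutterButton: UIButton
    private let spinner: UIActivityIndicatorView

    init(photoOutput: AVCapturePhotoOutput,
         shutterButton: UIButton,
         spinner: UIActivityIndicatorView) {
        self.photoOutput = photoOutput
        self.shutterButton = shutterButton
        self.spinner = spinner
        // Step 1: create the coordinator for the photo output.
        self.coordinator = AVCapturePhotoOutputReadinessCoordinator(photoOutput: photoOutput)
        super.init()
        coordinator.delegate = self
    }

    /// Call once the output is connected to a configured session.
    func enableResponsiveFeatures(prioritizeFastCapture: Bool) {
        // Zero Shutter Lag must be on before responsive capture is available.
        guard photoOutput.isZeroShutterLagSupported else { return }
        photoOutput.isZeroShutterLagEnabled = true

        guard photoOutput.isResponsiveCaptureSupported else { return }
        photoOutput.isResponsiveCaptureEnabled = true

        // Off by default because it can trade quality for shot-to-shot time.
        if prioritizeFastCapture, photoOutput.isFastCapturePrioritizationSupported {
            photoOutput.isFastCapturePrioritizationEnabled = true
        }
    }

    /// Keep this path short: extra work between the tap and capturePhoto
    /// can introduce camera shake with Zero Shutter Lag.
    func capture() {
        let settings = AVCapturePhotoSettings()
        // Steps 2 and 3: track readiness for these settings, then capture.
        coordinator.startTrackingCaptureRequest(using: settings)
        photoOutput.capturePhoto(with: settings, delegate: self)
    }

    // Step 4: react to readiness changes by updating the shutter button.
    func readinessCoordinator(_ coordinator: AVCapturePhotoOutputReadinessCoordinator,
                              captureReadinessDidChange captureReadiness: AVCapturePhotoOutput.CaptureReadiness) {
        DispatchQueue.main.async { [shutterButton, spinner] in
            spinner.stopAnimating()
            switch captureReadiness {
            case .ready:
                shutterButton.isUserInteractionEnabled = true
                shutterButton.alpha = 1.0
            case .notReadyMomentarily:
                // Block extra taps so requests are not enqueued by accident.
                shutterButton.isUserInteractionEnabled = false
            case .notReadyWaitingForCapture:
                // For example a flash capture: dim until frames arrive.
                shutterButton.isUserInteractionEnabled = false
                shutterButton.alpha = 0.5
            case .notReadyWaitingForProcessing:
                shutterButton.isUserInteractionEnabled = false
                spinner.startAnimating()
            case .sessionNotRunning:
                shutterButton.isUserInteractionEnabled = false
            @unknown default:
                shutterButton.isUserInteractionEnabled = false
            }
        }
    }
}
```

With responsive capture on, delegate callbacks for two in-flight photos can interleave, so a fuller version would probably key any per-capture state on the resolved settings' `uniqueID`.
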
Responsive Capture and Fast Capture Prioritization APIs are available on iPhones with A12 Bionic chip and newer, and the Readiness Coordinator is available wherever AVCapturePhotoOutput is supported. And now I've got all of the new features enabled in our app, with the most responsive camera experience possible that also gives super-sharp, high-quality photos. But, you don't have to use them all to get an improved experience. You can use just the ones that are appropriate for your app. We'll finish up our session today with updated video effects. Previously, Control Center on macOS provided options for camera streaming features such as Center Stage, Portrait, and Studio Light. With macOS Sonoma, we've moved video effects out of Control Center and into its own menu. You'll see a preview of your camera or screen share, and can enable Video Effects like Portrait mode and Studio Light. The Portrait and Studio Light effects are now adjustable in their intensity, and Studio Light is available on more devices. And we have a new effect type called "Reactions." When you're on a video call, you might want to express that you love an idea, or give a thumbs up about good news, all while letting the speaker continue without interruption. Reactions seamlessly blends your video with balloons, confetti, and more. Reactions follow the template of portrait and studio light effects, where they're a system-level camera feature, available out of the box, without any code changes needed in your app. For more detail on Portrait and Studio Light effects, check out the 2021 session, "What's new in camera capture." We have three ways to show reactions in the video stream. First, you can click on a reaction effect in the bottom pane in the new Video Effects menu on macOS. Second, your app can call AVCaptureDevice.performEffect for a reaction type. For example, maybe you have a set of reaction buttons in one of your app's views that participants can click on to perform a reaction.
And third, when reactions are enabled, they can be sent by making a gesture. Let's check this out. You can do thumbs up, thumbs down, fireworks with two thumbs up, heart, balloons with one victory sign, rain with two thumbs down, confetti with two victory signs, and my personal favorite, lasers, using two signs of the horns. Hey, that's a nice bunch of effects. You can check for reaction effects support by looking at the reactionEffectsSupported property on the AVCaptureDeviceFormat you want to use in your capture session. There are properties on AVCaptureDevice that you can read or key-value observe for knowing when gesture recognition is on and when reaction effects have been enabled. Remember, because these are under the control of the user, your app can't turn them on or off. On iOS, it's the same idea. The participant goes to Control Center to turn gesture recognition on or off, and you can key-value observe when this happens. However, to trigger effects in your app on iOS, you’ll need to do it programmatically. So let's go through how you can do that, now. When the "canPerformReactionEffects" property is true, calling the performEffect for reactionType method will render the reactions into the video feed. Your app should provide buttons to trigger the effects. Reactions coming in via gestures may be rendered in a different location in the video than they would be when you call performEffect, depending on what cues are used for the detection. We have a new enum called AVCaptureReactionType for all of the different reaction effects, such as thumbs-up or balloons that AVCaptureDevice will recognize in a capture session and can render into the video content. And the "AVCaptureDevice.availableReactionTypes" property returns a set of AVCaptureReactionTypes based on the configured format or session preset. These effects also have built-in system UIImages available that you can place in your own views.
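
Triggering a reaction programmatically, as described above, might look like this. It is a small sketch under assumptions: the function name `triggerReaction(_:on:)` and its Boolean result are hypothetical conveniences, not part of AVFoundation.

```swift
import AVFoundation

/// Hypothetical helper: performs `reaction` on `device` when possible and
/// reports whether the effect was actually requested.
@available(iOS 17.0, *)
@discardableResult
func triggerReaction(_ reaction: AVCaptureReactionType,
                     on device: AVCaptureDevice) -> Bool {
    // Reactions are under the user's control; the app can only check.
    guard device.canPerformReactionEffects,
          device.availableReactionTypes.contains(reaction) else {
        return false
    }
    // Renders the reaction into the video feed.
    device.performEffect(for: reaction)
    return true
}
```

A button handler could then call something like `triggerReaction(.thumbsUp, on: device)` and disable the button when the call returns false.
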
You can get the systemName for a reaction from a new function AVCaptureReactionType.systemImageName that takes in an AVCaptureReactionType and returns the appropriate string to use with the UIImage systemName constructor. And we have API to tell you when reaction effects are in progress, the aptly-named AVCaptureDevice.reactionEffectsInProgress. When the user performs multiple reaction effects in sequence, they may overlap briefly, so this returns an array of status objects. You can use key-value observing to know when these begin and end. If you're a voice-over-IP conferencing app, you can also use this information to send the metadata about the effects to remote views, particularly when those callers have video turned off for bandwidth reasons. For example, you might want to show an effect icon in their UI on behalf of another caller. Rendering effect animations to the video stream may be challenging to a video encoder. They increase the complexity of the content, and it may require a larger bitrate budget to encode it. By key-value observing reactionEffectsInProgress, you can make encoder adjustments while the rendering is happening. If it's feasible for your app, you can increase the bitrate of the encoder while effects are rendering. Or if you're using the low-latency video encoder through VideoToolbox and setting the MaxAllowedFrameQP VTCompressionPropertyKey, then we encourage you to run tests in your app using the various video configurations including resolutions, framerates, and bitrate tiers supported and adjust the MaxAllowedFrameQP accordingly while the effects are in progress. Note that with a low MaxAllowedFrameQP value, the framerate of effects can be compromised and you'll end up with a low video framerate. The 2021 session "Explore low-latency video encoding with VideoToolbox" has more great information on working with this feature. You should also know that the video frame rate may change when effects are in progress.
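
Observing in-progress reactions with key-value observing could be sketched as follows. The wrapper class `ReactionEffectObserver` and its `onChange` callback are hypothetical names for illustration; what an app does inside the callback (raising encoder bitrate, sending metadata to remote callers) is left open.

```swift
import AVFoundation

// Hypothetical observer wrapper; the name and callback shape are assumptions.
@available(iOS 17.0, *)
final class ReactionEffectObserver {
    private var observation: NSKeyValueObservation?

    /// `onChange` receives the SF Symbol names of the reactions currently
    /// being rendered; an empty array means no effect is in progress.
    init(device: AVCaptureDevice, onChange: @escaping ([String]) -> Void) {
        observation = device.observe(\.reactionEffectsInProgress,
                                     options: [.initial, .new]) { device, _ in
            // Several effects may overlap briefly, hence an array of states.
            let symbolNames = device.reactionEffectsInProgress.map {
                $0.reactionType.systemImageName
            }
            onChange(symbolNames)
        }
    }

    deinit {
        observation?.invalidate()
    }
}
```

The symbol names can be passed to `UIImage(systemName:)` to show an icon on behalf of a caller, and a non-empty array is a reasonable cue to apply any temporary encoder adjustments.
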
If you've configured your AVCaptureSession to run at 60 frames per second, for example, you'll get 60 frames per second while effects aren't running. But while effects are in progress, you may get a different frame rate, such as 30 frames per second. This follows the model of Portrait and Studio Light effects where the end frame rate may be lower than you specified. To see what that frame rate will be, check out AVCaptureDeviceFormat.videoFrameRateRangeForReactionEffectsInProgress for the format you're configuring on the device. As with other AVCaptureDeviceFormat properties, this is informational to your app, rather than something you can control. On macOS and with tvOS apps using Continuity Camera, reaction effects are always enabled. On iOS and iPadOS, applications can opt in via changes to their Info.plist. You opt in either by advertising that you're a VoIP application category in your UIBackgroundModes array, or by adding NSCameraReactionEffectsEnabled with a value of YES. Reaction effects and gesture recognition are available on iPhones and iPads with the A14 chip or newer, such as iPhone 12, Apple Silicon Macs and Intel Macs and Apple TVs using Continuity Camera devices, Apple Studio Display attached to a USB-C iPad or Apple Silicon Mac, and third-party cameras attached to a USB-C iPad or Apple Silicon Mac. And that wraps up our session about responsive camera experiences with new APIs this year. We talked about deferred photo processing, zero shutter lag, and responsive capture APIs to give you new possibilities for making the most responsive photography app with improved image quality, and we also covered how your users can really express themselves with updated video effects, including new "reactions." I can't wait to see how you respond to all the great new features. Thanks for watching.
