
Link fast: Improve build and launch times

Discover how to improve your app's build and runtime linking performance. We'll take you behind the scenes to learn more about linking, your options, and the latest updates that improve the link performance of your app.

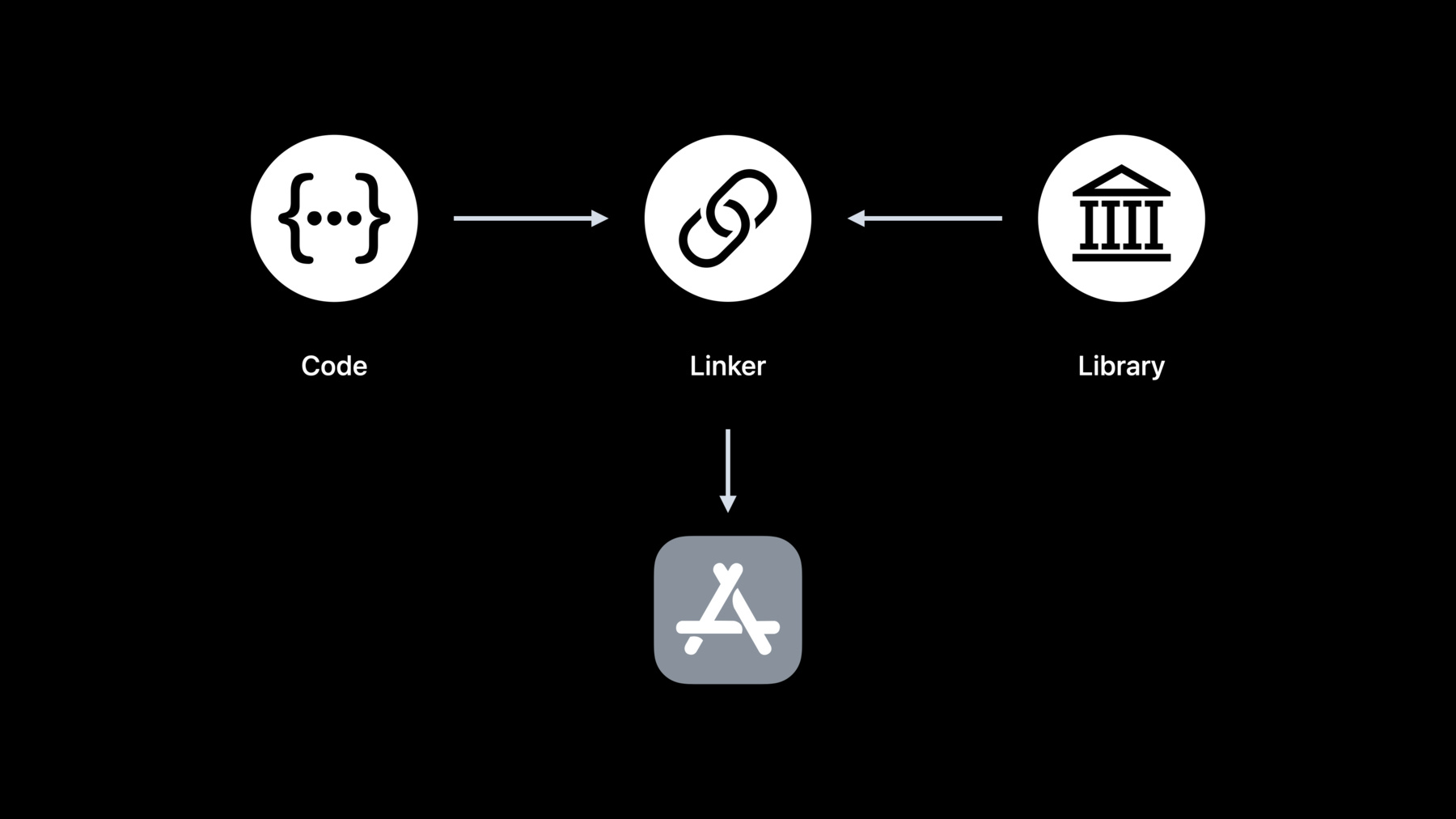

♪ ♪ Nick Kledzik: Hi, I'm Nick Kledzik, lead Engineer on Apple's Linker team. Today, I'd like to share with you how to link fast. I'll tell you what Apple has done to improve linking, as well as help you understand what actually happens during linking so that you can improve your app's link performance. So what is linking? You've written code, but you also use code that someone else wrote in the form of a library or a framework. In order for your code to use those libraries, a linker is needed. Now, there are actually two types of linking. There is "static linking", which happens when you build your app. This can impact how long it takes for your app to build and how big your app ends up being. And there is "dynamic linking". This happens when your app is launched. This can impact how long your customers have to wait for your app to launch. In this session I'll be talking about both static and dynamic linking. First, I'll define what static linking is and where it came from, with some examples. Next, I'll unveil what is new in ld64, Apple's static linker. Then, with this background on static linking, I'll detail best practices for static linking. The second half of this talk will cover dynamic linking. I'll show what dynamic linking is and where it came from, and what happens during dynamic linking. Next, I'll reveal what is new in dyld this year. Then, I'll talk about what you can do to improve your app's dynamic link time performance. And lastly, we'll wrap up with two new tools that will help you peek behind the curtains. You'll be able to see what is in your binaries, and what is happening during dynamic linking. To understand static linking, let's go way back to when it all started. In the beginning, programs were simple and there was just one source file. Building was easy. You just ran the compiler on your one source file and it produced the executable program. But having all your source code in one file did not scale. 
How do you build with multiple source files? And this is not just because you don't want to edit a large text file. The real savings is not re-compiling every function, every time you build. What they did was to split the compiler into two parts. The first part compiles source code to a new intermediate "relocatable object" file. The second part reads the relocatable .o file and produces an executable program. We now call the second part 'ld', the static linker. So now you know where static linking came from. As software evolved, soon people were passing around .o files. But that got cumbersome. Someone thought, "Wouldn't it be great if we could package up a set of .o files into a 'library'?" At the time the standard way to bundle files together was with the archiving tool 'ar'. It was used for backups and distributions. So the workflow became this. You could 'ar' up multiple .o files into an archive, and the linker was enhanced to know how to read .o files directly out of an archive file. This was a great improvement for sharing common code. At the time it was just called a library or an archive. Today, we call it a static library. But now the final program was getting big because thousands of functions from these libraries were copied into it, even if only a few of those functions were used. So a clever optimization was added. Instead of having the linker use all the .o files from the static library, the linker would only pull .o files from a static library if doing so would resolve some undefined symbol. That meant someone could build a big libc.a static library, which contained all the C standard library functions. Every program could link with the one libc.a, but each program only got the parts of libc that the program actually needed. And we still have that model today. But the selective loading from static libraries is not obvious and trips up many programmers. To make the selective loading of static libraries a little clearer, I have a simple scenario. 
In main.c, there's a function called "main" that calls a function "foo". In foo.c, there is foo which calls bar. In bar.c, there is the implementation of bar but also an implementation of another function which happens to be unused. Lastly, in baz.c, there is a function baz which calls a function named undef. Now we compile each to its own .o file. You'll see foo, bar, and undef don't have gray boxes because they are undefined. That is, a use of a symbol and not a definition. Now, let's say you decide to combine bar.o and baz.o into a static library. Next, you link the two .o files and the static library. Let's step through what actually happens.
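As a rough illustration of this scenario, here is a minimal Python sketch that models each object file only by the symbols it defines and uses. It is a toy model under stated assumptions, not how ld64 is implemented: the `ObjectFile` class, the `link` function, and the symbol name `unused` (standing in for the unnamed unused function in bar.c) are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ObjectFile:
    name: str
    defines: set = field(default_factory=set)
    uses: set = field(default_factory=set)


def link(objects, archive):
    """Return (loaded file names, symbols still undefined)."""
    loaded = []
    defined = set()
    undefined = set()

    def load(obj):
        loaded.append(obj.name)
        defined.update(obj.defines)
        undefined.update(obj.uses)
        undefined.difference_update(defined)

    # Object files named on the command line are always loaded, in order.
    for obj in objects:
        load(obj)

    # Archive members are loaded only if they define a symbol that is
    # still undefined. Repeat until a full pass loads nothing new.
    progress = True
    while undefined and progress:
        progress = False
        for member in archive:
            if member.name not in loaded and member.defines & undefined:
                load(member)
                progress = True

    return loaded, undefined


main_o = ObjectFile("main.o", defines={"main"}, uses={"foo"})
foo_o = ObjectFile("foo.o", defines={"foo"}, uses={"bar"})
bar_o = ObjectFile("bar.o", defines={"bar", "unused"})  # "unused" is a made-up name
baz_o = ObjectFile("baz.o", defines={"baz"}, uses={"undef"})

loaded, undefined = link([main_o, foo_o], archive=[bar_o, baz_o])
print(loaded)     # ['main.o', 'foo.o', 'bar.o']
print(undefined)  # set()
```

In this model baz.o is never loaded, so its reference to `undef` never reaches the symbol table and the link succeeds.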

First, the linker works through the files in command line order. The first it finds is main.o. It loads main.o and finds a definition for "main", shown here in the symbol table. But it also finds that main has an undefined "foo". The linker then parses the next file on the command line which is foo.o. This file adds a definition of "foo". That means foo is no longer undefined. But loading foo.o also adds a new undefined symbol for "bar". Now that all the .o files on the command line have been loaded, the linker checks if there are any remaining undefined symbols. In this case "bar" remains undefined, so the linker starts looking at libraries on the command line to see if a library will satisfy that missing undefined symbol "bar". The linker finds that bar.o in the static library defines the symbol "bar". So the linker loads bar.o out of the archive. At that point there are no longer any undefined symbols, so the linker stops processing libraries. The linker moves on to its next phase, and assigns addresses to all the functions and data that will be in the program. Then it copies all the functions and data to the output file. Et voila! You have your output program. Notice that baz.o was in the static library but not loaded into the program. It was not loaded because of the way the linker selectively loads from static libraries. This is non-obvious, but it is the key aspect of static libraries. Now you understand the basics of static linking and static libraries. Let's move on to recent improvements to Apple's static linker, known as ld64. By popular demand, we spent some time this year optimizing ld64. And this year's linker is... twice as fast for many projects. How did we do this? We now make better use of the cores on your development machine. We found a number of areas where we could use multiple cores to do linker work in parallel. 
That includes copying content from the input to the output file, building the different parts of LINKEDIT in parallel, and changing the UUID computation and codesigning hashes to be done in parallel. Next, we improved a number of algorithms. Turns out the exports-trie builder works really well if you switch to use C++ string_view objects to represent the string slices of each symbol. We also used the latest crypto libraries which take advantage of hardware acceleration when computing the UUID of a binary, and we improved other algorithms too.

While working on improving linker performance, we noticed configuration issues in some apps that impacted link time. Next, I'll talk about what you can do in your project to improve link time. I'll cover five topics. First, whether you should use static libraries. And then three little known options that have a big effect on your link time. Finally, I'll discuss some static linking behavior that might surprise you. The first topic is if you are actively working on a source file that builds into a static library, you've introduced a slowdown to your build time. Because after the file is compiled, the entire static library has to be rebuilt, including its table of contents. This is just a lot of extra I/O. Static libraries make the most sense for stable code. That is, code not being actively changed. You should consider moving code under active development out of a static library to reduce build time. Earlier we showed the selective loading from archives. But a downside of that is that it slows down the linker. That is because to make builds reproducible and follow traditional static library semantics, the linker has to process static libraries in a fixed, serial order. That means some of the parallelization wins of ld64 cannot be used with static libraries. But if you don't really need this historical behavior, you can use a linker option to speed up your build. That linker option is called "all load". It tells the linker to blindly load all .o files from all static libraries. This is helpful if your app is going to wind up selectively loading most of the content from all the static libraries anyways. Using -all_load will allow the linker to parse all the static libraries and their content in parallel. But if your app does clever tricks where it has multiple static libraries implementing the same symbols, and depends on the command line order of the static libraries to drive which implementation is used, then this option is not for you. 
Because the linker will load all the implementations and not necessarily get the symbol semantics that were found in regular static linking mode. The other downside of -all_load is that it may make your program bigger because "unused" code is now being added in. To compensate for that, you can use the linker option -dead_strip. That option will cause the linker to remove unreachable code and data. Now, the dead stripping algorithm is fast and usually pays for itself by reducing the size of the output file. But if you are interested in using -all_load and -dead_strip, you should time the linker with and without those options to see if it is a win for your particular case. The next linker option is -no_exported_symbols. A little background here. One part of the LINKEDIT segment that the linker generates is an exports trie, which is a prefix tree that encodes all the exported symbol names, addresses, and flags. Whereas all dylibs need to have exported symbols, a main app binary usually does not need any exported symbols. That is, usually nothing ever looks up symbols in the main executable. If that is the case, you can use -no_exported_symbols for the app target to skip the creation of the trie data structure in LINKEDIT, which will improve link time. But if your app loads plugins which link back to the main executable, or you use xctest with your app as the host environment to run xctest bundles, your app must have all its exports, which means you cannot use -no_exported_symbols for that configuration. Now, it only makes sense to try to suppress the exports trie if it is large. You can run the dyld_info command shown here to count the number of exported symbols. One large app we saw had about one million exported symbols. And the linker took two to three seconds to build the exports trie for that many symbols. So adding -no_exported_symbols shaved two to three seconds off the link time of that app. I'll tell you more about the dyld_info tool later in this talk. 
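To make the idea of an exports trie more concrete, here is a minimal Python sketch of a prefix tree that maps symbol names to addresses. It is a simplification under stated assumptions: it uses one node per character and a plain dictionary per node, whereas the real LINKEDIT encoding is a compact binary format. The function names, symbol names, and addresses are made up for illustration.

```python
def build_trie(exports):
    """Build a character-level prefix tree from {symbol_name: address}."""
    root = {"children": {}, "address": None}
    for name, address in exports.items():
        node = root
        for ch in name:
            node = node["children"].setdefault(
                ch, {"children": {}, "address": None}
            )
        node["address"] = address
    return root


def lookup(trie, name):
    """Walk the trie one character at a time; return the address or None."""
    node = trie
    for ch in name:
        node = node["children"].get(ch)
        if node is None:
            return None
    return node["address"]


def count_nodes(node):
    """Count every node, including the root."""
    return 1 + sum(count_nodes(child) for child in node["children"].values())


# Hypothetical exported symbols and addresses.
exports = {"_foo": 0x1000, "_foobar": 0x1040, "_bar": 0x2000}
trie = build_trie(exports)

print(hex(lookup(trie, "_foobar")))  # 0x1040
print(lookup(trie, "_baz"))          # None
print(count_nodes(trie))             # 11
```

Shared prefixes are stored once (`_foo` and `_foobar` share four nodes), which is why a trie suits large sets of similar names, and also why building one for a million symbols is real work for the linker.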
The next option is: -no_deduplicate. A few years back we added a new pass to the linker to merge functions that have the same instructions but different names. It turns out, with C++ template expansions, you can get a lot of those. But this is an expensive algorithm. The linker has to recursively hash the instructions of every function, to help look for duplicates. Because of the expense, we limited the algorithm so the linker only looks at weak-def symbols. Those are the ones the C++ compiler emits for template expansions that were not inlined. Now, de-dup is a size optimization, and Debug builds are about fast builds, and not about size. So by default, Xcode disables the de-dup optimization by passing -no_deduplicate to the linker for Debug configurations. And clang will also pass the no-dedup option to the linker if you run clang link line with -O0. In summary, if you use C++ and have a custom build, that is, either you use a non-standard configuration in Xcode, or you use some other build system, you should ensure your debug builds add -no_deduplicate to improve link time. The options I just talked about are the actual command line arguments to ld. When using Xcode, you need to change your product build settings. Inside build settings, look for "Other Linker Flags".

Here's what you would set for -all_load. And notice the "Dead Code Stripping" option is here as well. And there's -no_exported_symbols. And here's -no_deduplicate.
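For intuition about what the de-dup pass costs, here is a minimal Python sketch that merges functions with identical bodies by hashing them. It is a simplified model, not ld64's algorithm: function bodies are tuples of instruction strings, the hash covers only the function's own body rather than recursing into what it references, and all function names are hypothetical.

```python
import hashlib


def deduplicate(functions, weak_defs):
    """Map each function name to the name whose body it will share.

    functions: {name: tuple of instruction strings}
    weak_defs: names eligible for merging (all others are left alone)
    """
    canonical_by_hash = {}
    alias = {}
    for name in sorted(functions):
        if name not in weak_defs:
            alias[name] = name
            continue
        body = "\n".join(functions[name]).encode()
        digest = hashlib.sha256(body).hexdigest()
        # The first function seen with this body becomes the canonical copy.
        alias[name] = canonical_by_hash.setdefault(digest, name)
    return alias


functions = {
    "vector_int_size": ("ldr x0, [x0, #8]", "ret"),
    "vector_long_size": ("ldr x0, [x0, #8]", "ret"),
    "strong_copy": ("ldr x0, [x0, #8]", "ret"),
    "other": ("mov x0, #0", "ret"),
}
weak = {"vector_int_size", "vector_long_size", "other"}

alias = deduplicate(functions, weak)
print(alias["vector_long_size"])  # vector_int_size
print(alias["strong_copy"])       # strong_copy
```

Every eligible function body has to be hashed before any duplicate can be found, which is the expense that -no_deduplicate avoids in Debug builds.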

Now let's talk about some surprises you may experience when using static libraries. The first surprise is when you have source code that builds into a static library which your app links with, and that code does not end up in the final app. For instance, you added "attribute used" to some function, or you have an Objective-C category. Because of the selective loading the linker does, if those object files in the static library don't also define some symbol that is needed during the link, those object files won't get loaded by the linker. Another interesting interaction is static libraries and dead stripping. It turns out dead stripping can hide many static library issues. Normally, missing symbols or duplicate symbols will cause the linker to error out. But dead stripping causes the linker to run a reachability pass across all the code and data, starting from main, and if it turns out the missing symbol is referenced only from unreachable code, the linker will suppress the missing symbol error. Similarly, if there are duplicate symbols from static libraries, the linker will pick the first and not error. The last big surprise with using static libraries is when a static library is incorporated into multiple frameworks. Each of those frameworks runs fine in isolation, but then at some point, some app uses both frameworks, and boom, you get weird runtime issues because of the multiple definitions. The most common case you will see is the Objective-C runtime warning about multiple instances of the same class name. Overall, static libraries are powerful, but you need to understand them to avoid the pitfalls. That wraps up static linking. Now, let's move on to dynamic linking. First, let's look at the original diagram for static linking with a static library. Now think about how this will scale over time, as there is more and more source code. It should be clear that as more and more libraries are made available, the end program may grow in size. 
That means the static link time to build that program will also increase over time.

Now let's look at how these libraries are made. What if we did this switch? We change 'ar' to 'ld' and the output library is now an executable binary. This was the start of dynamic libraries in the '90s. As a shorthand, we call dynamic libraries "dylibs". On other platforms they are known as DSOs or DLLs. So what exactly is going on here? And how does that help the scalability? The key is that the static linker treats linking with a dynamic library differently. Instead of copying code out of the library into the final program, the linker just records a kind of promise. That is, it records the symbol name used from the dynamic library and what the library's path will be at runtime. How is this an advantage? It means your program file size is under your control. It just contains your code, and a list of dynamic libraries it needs at runtime. You no longer get copies of library code in your program. Your program's static link time is now proportional to the size of your code, and independent of the number of dylibs you link with. Also, the Virtual Memory system can now shine. When it sees the same dynamic library used in multiple processes, the Virtual Memory system will re-use the same physical pages of RAM for that dylib in all processes that use that dylib. I've shown you how dynamic libraries started and what problem they solve. But what are the "costs" for those "benefits"? First, a benefit of using dynamic libraries is that we have sped up build time. But the cost is that launching your app is now slower. This is because launching is no longer just loading one program file. Now all the dylibs also need to be loaded and connected together. In other words, you just deferred some of the linking costs from build time to launch time. Second, a dynamic library based program will have more dirty pages. In the static library case, the linker would co-locate all globals from all static libraries into the same DATA pages in the main executable. 
But with dylibs, each library has its own DATA page. Lastly, another cost of dynamic linking is that it introduces the need for something new: a dynamic linker! Remember that promise that was recorded in the executable at build time? Now we need something at runtime that will fulfill that promise to load our library. That's what dyld, the dynamic linker, is for. Let's dive into how dynamic linking works at runtime. An executable binary is divided up into segments, usually at least TEXT, DATA, and LINKEDIT. Segments are always a multiple of the page size for the OS. Each segment has a different permission. For example, the TEXT segment has "execute" permissions. That means the CPU may treat the bytes on the page as machine code instructions. At runtime, dyld has to mmap() the executables into memory with each segment's permissions, as shown here. Because the segments are page sized and page aligned, that makes it straightforward for the virtual memory system to just set up the program or dylib file as backing store for a VM range. That means nothing is loaded into RAM until there is some memory access on those pages, which triggers a page fault, which causes the VM system to read the proper subrange of the file and fill in the needed RAM page with its content. But just mapping is not enough. Somehow the program needs to be "wired up" or bound to the dylib. For that we have a concept called "fix ups".

In the diagram, we see the program got pointers set up that point to the parts of the dylib it uses. Let's dive into what fix-ups are. Here is our friend, the mach-o file. Now, TEXT is immutable. And in fact, it has to be in a system based on code signing. So what if there is a function that calls malloc()? How can that work? The relative address of _malloc can't be known when the program was built. Well, what happens is, the static linker saw that malloc was in a dylib and transformed the call site. The call site becomes a call to a stub synthesized by the linker in the same TEXT segment, so the relative address is known at build time, which means the BL instruction can be correctly formed. How that helps is that the stub loads a pointer from DATA and jumps to that location. Now, no changes to TEXT are needed at runtime– just DATA is changed by dyld. In fact, the secret to understanding dyld is that all fixups done by dyld are just dyld setting a pointer in DATA.

So let's dig more into the fixups that dyld does. Somewhere in LINKEDIT is the information dyld needs to drive what fixups are done. There are two kinds of fixups. The first are called rebases, and they are when a dylib or app has a pointer that points within itself. Now there is a security feature called ASLR, which causes dyld to load dylibs at random addresses. And that means those interior pointers cannot just be set at build time. Instead, dyld needs to adjust or "rebase" those pointers at launch. On disk, those pointers contain their target address, if the dylib were to be loaded at address zero. That way, all the LINKEDIT needs to record is the location of each rebase location. Dyld can then just add the actual load address of the dylib to each of the rebase locations to correctly fix them up.
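The rebase step amounts to a single addition per pointer. Here is a minimal Python sketch of it, assuming DATA is modeled as a dictionary from offset to stored value; the function name, offsets, and load address are hypothetical.

```python
def apply_rebases(data, rebase_locations, load_address):
    """Add the image's actual load address to every recorded pointer slot."""
    for offset in rebase_locations:
        data[offset] += load_address
    return data


# On disk, interior pointers hold their target as if the image loaded at 0.
on_disk = {
    0x00: 0x4000,  # pointer to something inside the image
    0x08: 0x4010,  # another interior pointer
    0x10: 1234,    # plain integer, not a pointer, so not in the rebase list
}

# The load address stands in for whatever random address ASLR chose.
rebased = apply_rebases(dict(on_disk), rebase_locations=[0x00, 0x08],
                        load_address=0x1_0000_0000)

print(hex(rebased[0x00]))  # 0x100004000
print(rebased[0x10])       # 1234
```

Only the locations listed in the rebase information are touched, which is why LINKEDIT needs to record nothing more than where they are.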

The second kind of fixups are binds. Binds are symbolic references. That is, their target is a symbol name and not a number. For instance, a pointer to the function "malloc". The string "_malloc" is actually stored in LINKEDIT, and dyld uses that string to look up the actual address of malloc in the exports trie of libSystem.dylib. Then, dyld stores that value in the location specified by the bind. This year we are announcing a new way to encode fixups, that we call "chained fixups".

The first advantage is that it makes LINKEDIT smaller. The LINKEDIT is smaller because instead of storing all the fixup locations, the new format just stores where the first fixup location is in each DATA page, as well as a list of the imported symbols. Then the rest of the information is encoded in the DATA segment itself, in the place where the fixups will ultimately be set. This new format gets its name, chained fixups, from the fact that the fixup locations are "chained" together. The LINKEDIT just says where the first fixup was, then in the 64-bit pointer location in DATA, some of the bits contain the offset to the next fixup location. Also packed in there is a bit that says if the fixup is a bind or a rebase. If it is a bind, the rest of the bits are the index of the symbol. If it's a rebase, the rest of the bits are the offset of the target within the image. Lastly, runtime support for chained fixups already exists in iOS 13.4 and later. Which means you can start using this new format today, as long as your deployment target is iOS 13.4 or later. And the chained fixup format enables a new OS feature we are announcing this year. But to understand that, I need to talk about how dyld works.
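The chaining idea can be sketched in a few lines of Python. The bit layout below is a deliberately simplified, hypothetical one (1 bind bit, 12 bits of "next" distance counted in 8-byte slots, the remaining bits as payload) and should not be read as the actual Mach-O encoding. The symbol addresses are also made up.

```python
BIND_BIT = 1 << 63
NEXT_SHIFT = 51
NEXT_MASK = 0xFFF                     # 12 bits: distance to next fixup, in slots
PAYLOAD_MASK = (1 << NEXT_SHIFT) - 1  # low 51 bits: symbol index or target offset


def encode(is_bind, next_slots, payload):
    """Pack one on-disk fixup slot using the hypothetical layout above."""
    return (BIND_BIT if is_bind else 0) | (next_slots << NEXT_SHIFT) | payload


def apply_chain(page, first_slot, imports, symbol_addresses, load_address):
    """Walk one page's chain, overwriting each slot with its final pointer."""
    index = first_slot
    while True:
        raw = page[index]
        next_slots = (raw >> NEXT_SHIFT) & NEXT_MASK
        payload = raw & PAYLOAD_MASK
        if raw & BIND_BIT:
            # Bind: payload is an index into the imported-symbols list.
            page[index] = symbol_addresses[imports[payload]]
        else:
            # Rebase: payload is the target's offset within the image.
            page[index] = load_address + payload
        if next_slots == 0:
            break  # a zero distance marks the end of the chain
        index += next_slots
    return page


imports = ["_malloc", "_free"]
symbol_addresses = {"_malloc": 0x7FF800001000, "_free": 0x7FF800002000}

page = [0] * 8                       # one tiny DATA "page" of 8 pointer slots
page[1] = encode(False, 2, 0x4000)   # rebase; next fixup is 2 slots further on
page[3] = encode(True, 4, 0)         # bind to imports[0]
page[7] = encode(True, 0, 1)         # bind to imports[1]; end of chain

apply_chain(page, first_slot=1, imports=imports,
            symbol_addresses=symbol_addresses, load_address=0x1_0000_0000)
print([hex(v) for v in page])
```

The only information kept outside the page is `first_slot` and the `imports` list, which mirrors why the format shrinks LINKEDIT.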

Dyld starts with the main executable– say your app. Parses that mach-o to find the dependent dylibs, that is, what promised dynamic libraries it needs. It finds those dylibs and mmap()s them. Then for each of those, it recurses and parses their mach-o structures, loading any additional dylibs as needed. Once everything is loaded, dyld looks up all the bind symbols needed and uses those addresses when doing fixups. Lastly, once all the fixups are done, dyld runs initializers, bottom up. Five years ago we announced a new dyld technology. We realized the steps in green above were the same every time your app was launched. So as long as the program and dylibs did not change, all the steps in green could be cached on first launch and re-used on subsequent launches. This year we are announcing additional dyld performance improvements. We are announcing a new dyld feature called "page-in linking". Instead of dyld applying all the fixups to all dylibs at launch, the kernel can now apply fixups to your DATA pages lazily, on page-in. It has always been the case that the first use of some address in some page of an mmap()ed region triggered the kernel to read in that page. But now, if it is a DATA page, the kernel will also apply the fixup that page needs. We have had a special case of page-in linking for over a decade for OS dylibs in the dyld shared cache. This year we generalized it and made it available to everyone. This mechanism reduces dirty memory and launch time. It also means DATA_CONST pages are clean, which means they can be evicted and recreated just like TEXT pages, which reduces memory pressure. This page-in linking feature will be in the upcoming release of iOS, macOS, and watchOS. But page-in linking only works for binaries built with chained fixups. That is because with chained fixups, most of the fixup information will be encoded in the DATA segment on disk, which means it is available to the kernel during page-in. 
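The effect of applying fixups lazily can be shown with a toy Python model. This is only a conceptual sketch: the `LazyImage` class is hypothetical, a "page" is a short list of image offsets, and the only fixup modeled is a rebase. The real work happens in the kernel's page-in path.

```python
class LazyImage:
    """DATA pages whose fixups are applied only when a page is first touched."""

    def __init__(self, on_disk_pages, load_address):
        self.on_disk_pages = on_disk_pages  # each page: list of image offsets
        self.load_address = load_address
        self.resident = {}                  # page index -> fixed-up page

    def read(self, page_index, slot):
        if page_index not in self.resident:
            # Simulated page fault: read the page and apply its fixups now.
            raw = self.on_disk_pages[page_index]
            self.resident[page_index] = [self.load_address + off for off in raw]
        return self.resident[page_index][slot]


image = LazyImage(
    on_disk_pages=[[0x10, 0x20, 0x30, 0x40], [0x50, 0x60, 0x70, 0x80]],
    load_address=0x1_0000_0000,
)

value = image.read(1, 2)
print(hex(value))              # 0x100000070
print(sorted(image.resident))  # [1]
```

Page 0 is never touched, so it is never read or fixed up, which is the source of the launch-time and dirty-memory savings.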
One caveat is that dyld only uses this mechanism during launch. Any dylibs dlopen()ed later do not get page-in linking. In that case, dyld takes the traditional path and applies the fixups during the dlopen call. With that in mind, let's go back to the dyld workflow diagram. For five years now, dyld has been optimizing the steps above in green by caching that work on first launch and reusing it on later launches. Now, dyld can optimize the "apply fixup" step by not actually doing the fixups, and letting the kernel do them lazily on page-in. Now that you have seen what's new in dyld, let's talk about best practices for dynamic linking. What can you do to help improve dynamic link performance? As I just showed, dyld has already accelerated most of the steps in dynamic linking. One thing you can control is how many dylibs you have. The more dylibs there are, the more work dyld has to do to load them. Conversely, the fewer dylibs, the less work dyld has to perform. The next thing you can look at are static initializers, which is code that always runs, pre-main. For instance, don't do I/O or networking in a static initializer. Anything that can take more than a few milliseconds should never be done in an initializer. As we know, the world is getting more complicated, and your users want more functionality. So it makes sense to use libraries to manage all that functionality. Your goal is to find your sweet spot between dynamic and static libraries. Too many static libraries and your iterative build/debug cycle is slowed down. On the other hand, too many dynamic libraries and your launch time is slow and your customers notice. But we sped up ld64 this year, so your sweet spot may have changed, as you can now use more static libraries, or more source files directly in your app, and still build in the same amount of time. 
Lastly, if it works for your installed base, updating to a newer deployment target can enable the tools to generate chained fixups, making your binaries smaller, and improving launch time. The last thing I'd like you all to be aware of is two new tools that will help you peek inside the linking process. The first tool is dyld_usage. You can use it to get a trace of what dyld is doing. The tool is only on macOS, but you can use it to trace your app launching in the simulator, or if your app is built for Mac Catalyst. Here is an example run against TextEdit on macOS.

As you can tell by the top few lines, the launch took 15ms overall, but only 1ms for fixups, thanks to page-in linking. The vast majority of time is now spent in static initializers.
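Since initializers dominate what remains of launch time, it helps to recall the order described earlier: dyld loads dependent dylibs recursively and then runs initializers bottom up. Here is a minimal Python sketch of that ordering. The `launch` function and the image names are hypothetical, and the sketch computes both orders in a single traversal, whereas dyld finishes loading and fixups before running any initializer.

```python
def launch(main, dependencies):
    """Return (load_order, init_order) for a dependency graph.

    dependencies: {image_name: [names of dylibs it links against]}
    """
    load_order = []
    init_order = []
    loaded = set()

    def load(image):
        if image in loaded:
            return  # a dylib shared by several images is loaded only once
        loaded.add(image)
        load_order.append(image)
        for dep in dependencies.get(image, []):
            load(dep)
        # Post-order: an image's initializers run after its dependencies'.
        init_order.append(image)

    load(main)
    return load_order, init_order


deps = {
    "MyApp": ["libUI.dylib", "libNet.dylib"],
    "libUI.dylib": ["libCore.dylib"],
    "libNet.dylib": ["libCore.dylib"],
}

load_order, init_order = launch("MyApp", deps)
print(load_order)  # ['MyApp', 'libUI.dylib', 'libCore.dylib', 'libNet.dylib']
print(init_order)  # ['libCore.dylib', 'libUI.dylib', 'libNet.dylib', 'MyApp']
```

Every dylib in the graph contributes its initializers before the app's own code starts, so both the number of dylibs and the work done in each initializer show up in launch time.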

The next tool is dyld_info. You can use it to inspect binaries, both on disk and in the current dyld cache. The tool has many options, but I'll show you how to view exports and fixups. Here the -fixups option shows all the fixup locations dyld will process and their targets. The output is the same regardless of whether the file uses old-style fixups or new chained fixups. Here the -exports option will show all the exported symbols in the dylib, and the offset of each symbol from the start of the dylib. In this case, it is showing information about Foundation.framework, which is a dylib in the dyld cache. There is no file on disk, but the dyld_info tool uses the same code as dyld and can thus find it.

Now that you understand the history and tradeoffs of static versus dynamic libraries, you should review what your app does and determine if you have found your sweet spot. Next, if you have a large app and have noticed the build takes a while to link, try out Xcode 14 which has the new faster linker. If you still want to speed up your static link more, look into the three linker options I detailed and see if they make sense in your build, and improve your link time. Lastly, you can also try building your app, and any embedded frameworks, for iOS 13.4 or later to enable chained fixups. Then see if your app is smaller and launches faster on iOS 16. Thanks for watching, and have a great WWDC.
