
Explore EDR on iOS

EDR is Apple's High Dynamic Range representation and rendering pipeline. Explore how you can render HDR content using EDR in your app and unleash the dynamic range capabilities of HDR displays on iPhone and iPad. We'll show how you can take advantage of the native EDR APIs on iOS, provide best practices to help you decide when HDR is appropriate, and share tips for tone-mapping and HDR content rendering. We'll also introduce you to Reference Mode and highlight how it provides a reference response to enable color-critical workflows such as color grading, editing, and content review.


♪ Mellow instrumental hip-hop music ♪ ♪ Hi, my name is Denis, and I'm part of the Display and Color Technologies team here at Apple.

Today I'll be exploring some exciting updates with EDR and their implications for iOS developers.

You might already be familiar with EDR if you've seen last year's presentation, but as a short recap, EDR refers to Extended Dynamic Range, and is Apple's HDR technology.

EDR refers to both a rendering technology and a pixel representation. EDR's pixel representation is particularly important because it consistently represents both standard and high dynamic range content.

In well-exposed content, the subject -- in this example, the campers -- should fall within the standard dynamic range of the image, whereas specular and emissive highlights, such as the campfire, will fall into the higher range.

In a standard range representation, these elements would end up getting clipped, but with EDR they remain representable.

While other pixel representations are designed to represent a fixed range of brightness values, EDR's representation is truly dynamic, capable of describing any arbitrary value.

Additionally, by taking advantage of any unused backlight, EDR allows any display to render high dynamic range content regardless of the display's peak brightness.

And as HDR content has become far more prevalent and accessible, the list of applications adopting EDR on macOS has grown as well.

"Baldur's Gate 3," "Divinity: Original Sin 2," and "Shadow of the Tomb Raider" are already shipping with EDR on macOS.

By adopting EDR, games are capable of rendering brighter and more saturated colors, as well as generating more realistic lighting, reflections, and more colorful content.

Where bright elements would otherwise be limited to SDR peak white, with EDR they regain their vibrance and depth as the authors intended.

EDR is integrated across the Apple ecosystem, including Safari and QuickTime Player.

As a result, video-on-demand apps and services such as Apple TV and Netflix gain the ability to deliver the ever-expanding catalogue of HDR10, Dolby Vision, and ProRes content to their consumers.

Pro apps that adopt EDR enable their users to create stunning HDR content by providing a variety of professional workflows to accurately edit, grade, master, and review HDR stills and videos.

With all this momentum behind EDR adoption on macOS, we're excited to bring several new updates to EDR.

First and foremost, we are happy to announce that our EDR APIs are now available on iOS and iPadOS.

Additionally, as part of Apple's commitment to supporting our pro users, this year we are introducing two new pro color features on the 12.9-inch iPad Pro with Liquid Retina XDR display: Reference Mode and EDR rendering over Sidecar.

Reference Mode is a new display mode that is designed to enable color-critical workflows such as color grading, editing, and content review by providing a reference response for a variety of common video formats, similar to the reference presets on macOS.

To do this, Reference Mode fixes the SDR peak brightness at 100 nits, and the HDR peak brightness at 1000 nits, thus giving 10 times EDR headroom.

Reference Mode also provides a one-to-one media-to-display mapping and disables all dynamic display adjustments for ambient surround, such as True Tone, Auto-Brightness, and Night Shift, instead allowing users to calibrate the white point manually.

This way the display will produce colors exactly as they are described by their respective specifications.

This chart provides a list of formats that Reference Mode supports.

Note that unlike the reference presets on macOS, Reference Mode is a single toggle that supports the five most common HDR and SDR video formats, providing a consistent reference response across media types.

And don't worry if you have content in a format that is not listed on this table.

Any formats that are not supported will be color managed as they would in the default Display Mode.

As an example, let's take a look at LumaFusion in Reference Mode.

By enabling Reference Mode on iOS, LumaFusion becomes a more powerful tool for video post-production.

When displaying HDR video, colors within the P3 color gamut up to a 1000 nit peak are rendered accurately, so users can be confident their videos are always being displayed correctly and consistently.

With the combination of Reference Mode and LumaFusion's new Video Scopes feature, color-critical workflows are now possible on iPad Pro.

If we disable Reference Mode, EDR headroom can change dynamically, which we'll see here as iOS modulates the brightness of our video.

Users can export their LumaFusion projects as XML, which can then be imported by other popular Mac post-production apps, allowing a team of content creators to easily collaborate on both platforms.

It's very exciting to see LumaFusion adopting Reference Mode, along with the value and flexibility it brings to pro content creators.

But Reference Mode isn't the only new feature.

Back in 2019, we introduced Sidecar, a technology that allows Mac users to use their iPad as a secondary display.

And now, with the introduction of Reference Mode, we are adding support for EDR rendering over Sidecar. When Reference Mode is enabled, Sidecar can display reference-grade SDR and HDR content, allowing pro content creators to use their iPad Pro as a secondary reference display for Apple silicon Macs.

It goes without saying that content rendered over Sidecar will provide a reference response for all the same video formats as native iOS in Reference Mode.

For example, let's take a look at this HDR10 test pattern as rendered on a Mac in the HDR video preset and compare it to the rendition on the iPad Pro using Sidecar.

In this configuration, both devices act as a reference display with P3 color and a D65 white point, since that happens to be the spec for both displays.

As you can see from the P3 primary and secondary color bars, both the Mac and iPad produce a similar response as we would expect.

Additionally, both configurations support a peak luminance of 1000 nits, and as seen in the gradient, those values are rendered faithfully while values beyond 1000 nits are clipped.

We are excited by the prospects and new opportunities that EDR rendering over Sidecar brings to our pro users.

We also look forward to seeing more developers taking advantage of these features by adopting EDR in their own apps.

On that note, let's explore how you can integrate EDR rendering into your own iOS and iPadOS apps.

We'll start by taking a look at the implication of EDR's pixel representation and render pipeline.

Traditionally, SDR has been represented in floating point as values in the 0 to 1 range, with 0 being black and 1 being SDR white.

With EDR, SDR content is still represented in the 0 to 1 range while values above 1 represent content brighter than SDR.

Note that EDR is represented in linear space, which means that 2.0 EDR is not perceptibly two times brighter than 1.0.

Unlike other HDR formats, EDR does not tone map values to the 0 to 1 range, and this has some implications for rendering.

In that regard, EDR ensures that SDR content, or values from 0 to 1, are always rendered.

And any values above 1 will be properly rendered without tone mapping up to the current EDR headroom.

However, any values that are brighter will be clipped.

Initially, this behavior may seem less than ideal, but it means that any content authored in HDR will be rendered as close to the intention as possible, with any highlights that are too bright clipped as they typically would be in a conventional representation.

Clearly then, the higher your headroom, the brighter and more dynamic your content can be.

But how much headroom do we have? Well, it should be noted that the instantaneous EDR headroom is a dynamic value and is based on many factors, including but not limited to the specific display technology of a device, as well as the current display brightness.

But as an oversimplification, your current EDR headroom is roughly equal to the maximum brightness of the display divided by the current SDR brightness.

So earlier, when I mentioned that Reference Mode provides 10 times EDR headroom, that's because we fix EDR 1.0 -- or the SDR brightness -- to 100 nits, while we fix the HDR peak brightness to 1000 nits.

Thus 1000 nits divided by 100 nits gives you a constant headroom of 10 times EDR.
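As a rough sketch of the rule of thumb above (headroom is approximately the display's peak brightness divided by the current SDR brightness), the helper below computes an approximate headroom from two luminance values. The function name `approximateEDRHeadroom` is hypothetical and not a system API; on a real device you would query the headroom from the system, because the true value depends on other factors as well.

```swift
import Foundation

/// Hypothetical helper: approximates EDR headroom as peak / SDR white brightness.
/// This mirrors the oversimplified rule of thumb only; it is not a system API.
func approximateEDRHeadroom(peakNits: Double, sdrWhiteNits: Double) -> Double {
    // Guard against a non-positive SDR brightness.
    guard sdrWhiteNits > 0 else { return 1.0 }
    // Headroom never drops below 1.0, because SDR content (0...1) is always rendered.
    return max(1.0, peakNits / sdrWhiteNits)
}

// Reference Mode: SDR white fixed at 100 nits, HDR peak fixed at 1000 nits.
let referenceModeHeadroom = approximateEDRHeadroom(peakNits: 1000, sdrWhiteNits: 100)
print(referenceModeHeadroom) // 10.0
```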

This table gives a few more examples of different devices and their maximum potential headroom.

Note that this is the potential headroom and that the true headroom will be dependent on various other factors, including the current display brightness.

Later in this talk, we'll go into further detail about headroom, and show you an example of how to query and use the headroom to make informed decisions about rendering.

But for now, you should have a good understanding of EDR and when you would want it.

Now, let's shift gears to actually rendering some EDR content.

In this section, we'll go over how to read your HDR content into a renderable format.

The particular example we'll be covering is an Image I/O workflow, but if you're looking for a different framework, take a look at one of our other EDR talks this year.

In the case of still media, we start off with an image file that we want to load in.

This image will typically be encoded in a biplanar YUV space.

When we first load it, the image and its buffer will be in their original format.

Unfortunately, while in that format it can be difficult to interpret and work with the image in any meaningful way.

So with the help of CGBitmapContext we will decode and convert the image to a more usable format.

At which point, we can create a MTLTexture from the backing pixel data of the context and then render it with our Metal engine.

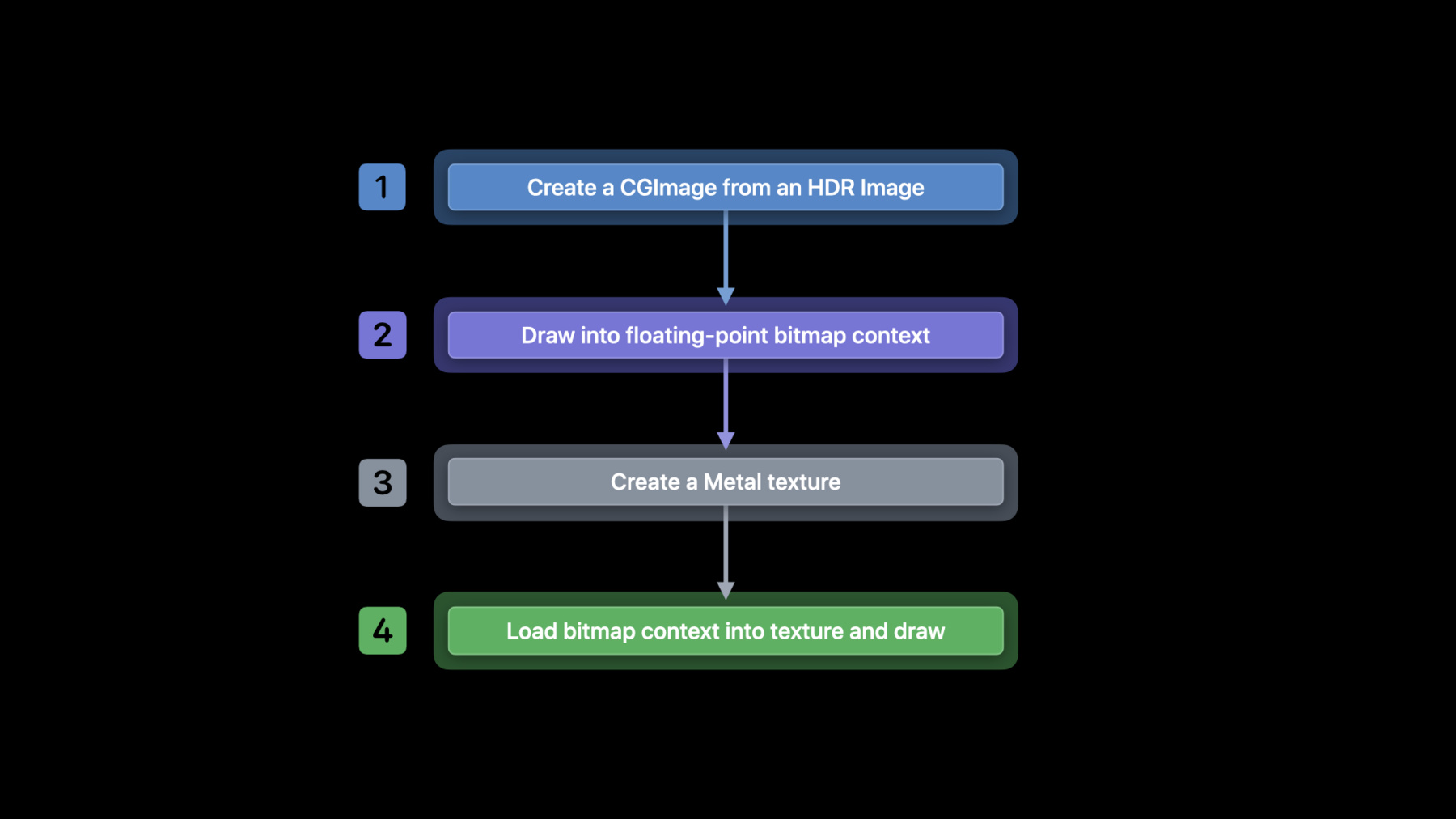

More concretely, in order to achieve this, there are four steps we need to cover.

We'll start by creating a CGImage for our HDR still, drawing that CGImage into a bitmap context, creating a Metal texture, and, finally, loading the bitmap's data into the newly created texture.

In the first step, we'll read our image and do a little bit of setup.

First, we read our image from a URL into a CGImageSource, then create a CGImage from that source.

In this case, we created the image by passing a nil options dictionary.

However, if you'd like floating point buffers with certain HDR formats, there is a new kCGImageSourceShouldAllowFloat option that you may wish to set.
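A minimal sketch of passing that option, assuming you want it applied when the image is created rather than when the source is opened. The function name `loadHDRImage(at:)` is hypothetical; `kCGImageSourceShouldAllowFloat` is the Image I/O key mentioned above, and whether you actually get a floating point buffer still depends on the file's format.

```swift
import Foundation
import ImageIO
import CoreGraphics

/// Hypothetical helper: loads the first image in a file, asking Image I/O
/// for floating point buffers where the format supports it.
func loadHDRImage(at url: URL) -> CGImage? {
    // Source-level options are left nil, as in the talk's workflow.
    guard let source = CGImageSourceCreateWithURL(url as CFURL, nil) else {
        return nil
    }
    // Request floating point decoding for formats that support it.
    let options = [kCGImageSourceShouldAllowFloat: true] as CFDictionary
    return CGImageSourceCreateImageAtIndex(source, 0, options)
}
```

If the file is missing or unreadable, the helper simply returns nil, so callers can fall back to an SDR path.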

Now, we'll instantiate a CGBitmapInfo.

In this case, we are creating a 16-bit floating point context with premultiplied alpha.

Remember this because we'll want our Metal texture to use the same format.

Next, we'll construct a CGBitmapContext using the bitmap info that we just created, along with the width and height of the CGImage.

Note that you'll want the color space of the context to match the color space of the CAMetalLayer to which you'll be rendering.

Otherwise, you will need to perform the appropriate color management yourself.

Lastly, we'll draw our CGImage into the bitmap context.

At this point, we can move on to creating a Metal texture from our context.

To create our Metal texture, we'll first instantiate a MTLTextureDescriptor.

Recall from earlier that we opted to use half float for our bitmap context, but when rendering EDR, your 2D texture can also use a 32-bit packed pixel format with 10-bit blue, green, and red components and a 2-bit alpha component.

We'll go into more detail in the following sections, but for now, it's enough to know that the pixel format of the texture should match the pixel format of your content; in our case, half float.
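One way to keep the texture's pixel format in sync with the content is to build the descriptor from the image size and a chosen format. This is a sketch; the helper name `makeEDRTextureDescriptor` is hypothetical, and `.bgr10a2Unorm` is the Metal format for the 10-bit packed layout described above.

```swift
import Metal

/// Hypothetical helper: builds a 2D texture descriptor whose pixel format
/// matches the content (half float by default) and whose size matches the image.
func makeEDRTextureDescriptor(width: Int, height: Int,
                              pixelFormat: MTLPixelFormat = .rgba16Float) -> MTLTextureDescriptor {
    let desc = MTLTextureDescriptor.texture2DDescriptor(pixelFormat: pixelFormat,
                                                        width: width,
                                                        height: height,
                                                        mipmapped: false)
    // In this sketch the texture is only sampled by the renderer.
    desc.usage = .shaderRead
    return desc
}

// Half float content, matching the 16-bit float bitmap context.
let halfFloatDesc = makeEDRTextureDescriptor(width: 4032, height: 3024)
// 10-bit packed content: 10-bit blue, green, red and 2-bit alpha.
let packedDesc = makeEDRTextureDescriptor(width: 4032, height: 3024, pixelFormat: .bgr10a2Unorm)
```

Using `texture2DDescriptor` also sets the texture type and dimensions in one call, which avoids creating a descriptor with a zero-sized extent.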

At this point, we'll instantiate our texture with our Metal layer's device and the newly created texture descriptor.

Finally, we'll grab the data from our bitmap context and copy it into our texture.

With that, we have a Metal texture containing EDR values that we can send to our Metal pipeline for rendering.

This section covered a sample workflow for sourcing an HDR still image starting from a URL and working our way down to a Metal texture.

Next, we'll go over the minimum code changes required to render such a texture on iOS and iPadOS.

The process for opting into EDR with the new iOS and iPadOS APIs is identical to that on macOS.

So, if you already have EDR support for a macOS build of your app, you won't need to make any changes.

In order to opt into EDR, you need to ensure you're using a CAMetalLayer, that you set the appropriate flags and tags on that layer, and that you have bright content in a supported EDR format.

First, take the CAMetalLayer to which you'll be rendering your content.

On that layer, you'll want to enable the wantsExtendedDynamicRangeContent flag.

Then, on that same layer, you'll need to set a supported combination of pixel format and CGColorSpace.

Depending on what sort of content you have and how you source it, the specific pixel format and color space will be different.

In our case, we loaded our image into a 16-bit floating point buffer, and here we've opted to match it with an extended linear Display P3 color space.

iOS supports rendering EDR in 16-bit floating point pixel buffers combined with linear color spaces.

But if you choose to use one of these combinations, be sure to use the extended variant of the color space.

Otherwise, your content will be clipped to SDR.

iOS also supports the 10-bit packed BGRA pixel buffers we briefly mentioned earlier.

Such buffers are supported for rendering with either PQ or HLG color spaces, as outlined in this chart.
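A minimal sketch of the packed-buffer combinations, assuming your content is already encoded with a PQ or HLG transfer function. The enum `PackedHDRTransfer` and the function name are hypothetical; the layer properties and the ITU-R BT.2100 color space names are the system ones.

```swift
import QuartzCore
import Metal
import CoreGraphics

/// Hypothetical enum naming the two transfer functions that pair with
/// 10-bit packed BGRA buffers for EDR rendering.
enum PackedHDRTransfer {
    case pq
    case hlg
}

@available(iOS 16.0, macOS 11.0, *)
func configurePackedEDRLayer(_ layer: CAMetalLayer, transfer: PackedHDRTransfer) {
    // Opt the layer into EDR.
    layer.wantsExtendedDynamicRangeContent = true
    // 32-bit packed: 10-bit blue, green, red and 2-bit alpha.
    layer.pixelFormat = .bgr10a2Unorm
    // Pair the packed format with a PQ or HLG color space.
    switch transfer {
    case .pq:
        layer.colorspace = CGColorSpace(name: CGColorSpace.itur_2100_PQ)
    case .hlg:
        layer.colorspace = CGColorSpace(name: CGColorSpace.itur_2100_HLG)
    }
}
```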

In this section, we covered the minimum code changes required on your rendering layer in order to support EDR rendering, including the wantsExtendedDynamicRangeContent flag as well as the various pixel formats and color spaces that support EDR.

At this point, if you were to render the Metal texture we sourced in the previous section to the CAMetalLayer from this section, we would be rendering EDR.

But there are a couple more tricks we can do.

As mentioned in the overview, the default behavior of EDR is to clip to the current EDR headroom.

There are cases where you may decide that the headroom is not high enough to justify rendering your EDR content and instead go down an SDR path.

Or times when you'd like to use the current headroom to tone map your content before displaying.

In either case, iOS now has new APIs that support querying the headroom, and in this section, we'll go over the calls as well as how they differ from macOS so that you can better make such decisions.

On macOS, headroom queries are found on NSScreen.

On NSScreen, we have queries for: the maximum EDR headroom the display can support, the maximum EDR headroom of the current reference preset, and the current EDR headroom.

Additionally, macOS provides a notification whenever the EDR headroom changes.

On iOS however, headroom queries are found on UIScreen, and unlike NSScreen, here we instead have queries for the maximum EDR headroom the display supports and the current EDR headroom.

Additionally, UIScreen provides the Reference Display Mode status, which is used to indicate whether Reference Mode is supported and engaged.

Note that UIScreen does not provide notifications for changes in EDR headroom but will send notifications whenever the Reference Mode status changes.

You may also notice that the maximum reference headroom query is missing from the list.

Instead of using a dedicated query, you can determine its value by querying the potential maximum headroom when the Reference Mode status indicates that Reference Mode is enabled.

Let's take a look at some sample code to get a better feel for how to query headroom.

On UIScreen, you can query the potentialEDRHeadroom in order to see the maximum possible headroom of the display.

If you decide this value is too low, you may choose to take an SDR path instead, saving some processing power.

Then, if you've decided on the EDR path, you may have a render delegate or an otherwise regularly scheduled draw call.

In this call, we can query the currentEDRHeadroom and use it to tone map our content such that none of it exceeds the headroom, and thus won't clip.
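The talk leaves the choice of tone-mapping operator to you. As one hypothetical option (not Apple's algorithm), the curve below leaves SDR values untouched and smoothly compresses everything above 1.0 so that it approaches, but never exceeds, the current headroom. In practice this would typically run per pixel in a shader; it is written in Swift here for readability, and `toneMapToHeadroom` is an illustrative name.

```swift
import Foundation

/// Hypothetical tone curve: identity at or below EDR 1.0, with values above 1.0
/// compressed asymptotically toward `headroom`. The curve has slope 1 at EDR 1.0,
/// so there is no visible kink where SDR ends.
func toneMapToHeadroom(_ value: Double, headroom: Double) -> Double {
    // SDR values (and any negative extended-range components) pass through unchanged.
    guard value > 1.0 else { return value }
    // No headroom available: clip to SDR white.
    guard headroom > 1.0 else { return 1.0 }
    let excess = value - 1.0        // how far above SDR white
    let available = headroom - 1.0  // space left above SDR white
    // Rational compression: f(0) = 0, f'(0) = 1, f(x) approaches `available` as x grows.
    return 1.0 + available * excess / (excess + available)
}
```

Inside a draw call, `headroom` would be the value just read from `currentEDRHeadroom`. Note the trade-off: this curve also slightly compresses highlights that would have fit, in exchange for never clipping.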

If you'd like to be aware of the Reference Mode status, you can register to receive a notification whenever the status changes using UIScreen.referenceDisplayModeStatusDidChangeNotification.

Then, whenever the status does change, you can get the new status and the new potential EDR headroom and use them to make further decisions about rendering.

Regarding the Reference Mode status, there are four unique states you should be aware of.

StatusEnabled indicates that Reference Mode is enabled and that it is rendering as expected.

StatusLimited indicates that Reference Mode is enabled but that for some reason we are temporarily unable to achieve a reference response.

Note that if this status does occur, it will be accompanied by an out-of-reference UI notification to inform users that the reference response is compromised.

StatusNotEnabled indicates that Reference Mode is supported on this device, but that it hasn't been enabled.

And finally, StatusNotSupported indicates that Reference Mode is not supported on this device.
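Since there is no dedicated reference-headroom query on iOS, one way to derive it is to read the potential headroom only when the status is enabled. This is a sketch under that assumption; both helper names are hypothetical, and treating the limited state as "no reference headroom" is a design choice, not a documented rule.

```swift
import UIKit

/// Hypothetical helper: returns the reference headroom when Reference Mode
/// is enabled and delivering a reference response, otherwise nil.
@available(iOS 16.0, *)
func referenceHeadroom(status: UIScreen.ReferenceDisplayModeStatus,
                       potentialHeadroom: CGFloat) -> CGFloat? {
    switch status {
    case .enabled:
        // In Reference Mode, the potential headroom is the reference headroom.
        return potentialHeadroom
    case .limited:
        // Enabled, but a reference response is temporarily unavailable.
        return nil
    case .notEnabled, .notSupported:
        return nil
    @unknown default:
        return nil
    }
}

/// Convenience overload reading the live values from a screen.
@available(iOS 16.0, *)
func referenceHeadroom(for screen: UIScreen) -> CGFloat? {
    referenceHeadroom(status: screen.referenceDisplayModeStatus,
                      potentialHeadroom: screen.potentialEDRHeadroom)
}
```

Calling the second overload from the status-change notification handler keeps the derived value current.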

These new APIs should give developers deeper insight into the current state of the display and provide them with the tools they need to make informed decisions on how to render their EDR content.

In the previous section, we covered how to query various headroom parameters, including the current EDR headroom, which you could use to tone map your content and avoid clipping.

But what if you don't want to dig into or implement your own tone mapping algorithms? Well, in the case of video content, you may then want to take advantage of Apple's built-in tone mapping.

If you would like to enable Apple's tone mapping on your content, you can do so using the CAEDRMetadata interface, which includes metadata constructors for both HDR10 and HLG.

Be aware that tone mapping is not supported on all platforms but that there is a query with which you can check if your platform has this support.

To check if your platform supports tone mapping, query CAEDRMetadata.isAvailable.

If it is available, you then need to instantiate CAEDRMetadata.

We'll go over the specific constructors in just a moment, but let's skip this step for now.

Once you have your EDR metadata, apply it to the layer on which you are rendering.

This will opt your layer into being processed by the system tone mapper based on the metadata provided.

As mentioned, there are a number of EDR metadata constructors available, which are specific to their HDR video format.

Here we have the constructor for HLG metadata which takes no parameters.

Next is one of the two available HDR10 constructors.

This one takes three parameters: the minimum luminance of the mastering display in nits, the maximum luminance of the mastering display in nits, and the optical output scale, which indicates the content's mapping of EDR 1.0 to brightness in nits.

Typically, we set this to 100.

Last, we have the second of our HDR10 constructors which takes a MasterDisplayColourVolume SEI message, a ContentLightLevelInformation SEI message, and an opticalOutputScale, which as mentioned earlier, is typically set to 100 nits.

Once you've used one of these constructors to create your CAEDRMetadata object, set it on your application's CAMetalLayer.

This will cause all content rendered on this layer to be processed by the system tone mapper, thus avoiding any clipping without having to perform the mapping yourself.

Which constructor to use depends entirely on how your content was sourced or authored, but typically, if your content is in an HLG color space, you'll want to use the HLG constructor.

And if it is in a PQ color space, then you'll want to use an HDR10 constructor.

If your content comes with the SEI messages already attached, then we'd recommend using the second HDR10 constructor to best adhere to the author's intent.

Otherwise, you will need to use the first constructor.

If you are using a linear color space, then which constructor you use is entirely dependent on how the content was authored.

So if you intend to use linear content with Apple's tone mapping, I highly recommend reading our developer documentation regarding HDR10 and HLG metadata.
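The selection logic above can be sketched as a small helper that checks availability, picks the HLG or the luminance-based HDR10 constructor, and applies the result to the layer. The enum `HDRVideoEncoding` and the function name are hypothetical, and the SEI-based HDR10 constructor is left out because it needs the raw SEI payloads from your content.

```swift
import QuartzCore
import Metal

/// Hypothetical description of how a video's HDR content was authored.
enum HDRVideoEncoding {
    case hlg
    /// PQ content described by its mastering display luminance range, in nits.
    case pq(minMasteringNits: Float, maxMasteringNits: Float)
}

/// Picks an EDR metadata constructor and applies it to the layer.
/// Returns false when system tone mapping is unavailable on this platform.
@available(iOS 16.0, macOS 10.15, *)
@discardableResult
func applySystemToneMapping(to layer: CAMetalLayer,
                            encoding: HDRVideoEncoding,
                            opticalOutputScale: Float = 100) -> Bool {
    guard CAEDRMetadata.isAvailable else { return false }
    switch encoding {
    case .hlg:
        layer.edrMetadata = CAEDRMetadata.hlg
    case let .pq(minNits, maxNits):
        // EDR 1.0 maps to `opticalOutputScale` nits (typically 100).
        layer.edrMetadata = CAEDRMetadata.hdr10(minLuminance: minNits,
                                                maxLuminance: maxNits,
                                                opticalOutputScale: opticalOutputScale)
    }
    return true
}
```

The mastering values you pass should come from your content; any numbers used when trying this out are placeholders.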

Now let's take a look at Pixelmator and their adoption of EDR.

Thanks to EDR, we can render images in a more vivid and true-to-life way.

For example, if we open a RAW photo and increase the exposure and highlights, the details in the brightest areas can't be rendered using the standard dynamic range of a display, also known as SDR.

Now, if we turn on EDR, all the details that are beyond SDR white become visible.

Note how much more vibrant the EDR content appears relative to the SDR colors of the canvas.

Turning off EDR will make the visual difference between SDR and EDR all the more obvious.

And with that, the session has come to an end.

During this talk, we've covered a couple of new and exciting features coming to iOS and iPadOS.

We've provided a quick refresher on EDR, gone over a sample workflow for reading an HDR image with Image I/O and converting it to a Metal texture, and looked at how to opt into EDR so that we can render that texture without clipping to SDR.

We also briefly touched on the headroom query APIs and their utility in making informed decisions about EDR rendering, as well as how to opt into Apple's system provided tone mapping for popular HDR formats.

I hope that you've come away from this talk with a better understanding of EDR and how to adopt it in your iOS and iPadOS apps.

If you'd like to learn more about working with HDR or EDR content in general, there are a couple of sessions from previous years, as well as some upcoming sessions, that I would recommend.

With that said, thank you for attending our session "Exploring EDR on iOS." And have a wonderful WWDC! ♪


9:23 - Create CGImage and Draw

    // Create CGImage from HDR image
    guard let isr = CGImageSourceCreateWithURL(HDRImageURL as CFURL, nil),
          let img = CGImageSourceCreateImageAtIndex(isr, 0, nil) else { return }

    // Draw into floating point bitmap context
    let width = img.width
    let height = img.height
    let info = CGBitmapInfo(rawValue: CGBitmapInfo.byteOrder16Host.rawValue |
                            CGImageAlphaInfo.premultipliedLast.rawValue |
                            CGBitmapInfo.floatComponents.rawValue)
    // Match the context's color space to the CAMetalLayer's color space
    guard let colorSpace = layer.colorspace,
          let ctx = CGContext(data: nil, width: width, height: height,
                              bitsPerComponent: 16, bytesPerRow: 0,
                              space: colorSpace, bitmapInfo: info.rawValue) else { return }
    ctx.draw(img, in: CGRect(x: 0, y: 0, width: CGFloat(width), height: CGFloat(height)))

10:30 - Create floating point texture and Load EDR bitmap

    // Create floating point texture sized to the image
    let desc = MTLTextureDescriptor()
    desc.pixelFormat = .rgba16Float
    desc.textureType = .type2D
    desc.width = width
    desc.height = height
    guard let texture = layer.device?.makeTexture(descriptor: desc),
          let data = ctx.data else { return }

    // Load EDR bitmap into texture
    texture.replace(region: MTLRegionMake2D(0, 0, width, height), mipmapLevel: 0,
                    withBytes: data, bytesPerRow: ctx.bytesPerRow)

11:55 - CAMetalLayer properties

    // Opt into using EDR
    let layer = CAMetalLayer()
    layer.wantsExtendedDynamicRangeContent = true

    // Use supported pixel format and color spaces
    layer.pixelFormat = .rgba16Float
    layer.colorspace = CGColorSpace(name: CGColorSpace.extendedLinearDisplayP3)

14:42 - UIScreen headroom

    // Query potential headroom
    let screen = windowScene.screen
    let maxPotentialEDR = screen.potentialEDRHeadroom
    if maxPotentialEDR < 1.5 {
        // SDR path
    }

    // Query current headroom
    func draw(_ rect: CGRect) {
        let maxEDR = screen.currentEDRHeadroom
        // Tone-map to maxEDR
    }

    // Register for Reference Mode notifications
    NotificationCenter.default.addObserver(self,
        selector: #selector(screenChangedEvent(_:)),
        name: UIScreen.referenceDisplayModeStatusDidChangeNotification,
        object: nil)

    // Query for latest status and headroom (selector targets must be @objc)
    @objc func screenChangedEvent(_ notification: Notification) {
        let status = screen.referenceDisplayModeStatus
        let maxPotentialEDR = screen.potentialEDRHeadroom
    }

16:54 - CAEDRMetadata and CAMetalLayer

    // Check if EDR metadata is available
    let isAvailable = CAEDRMetadata.isAvailable

    // Instantiate EDR metadata
    // ...

    // Apply EDR metadata to the CAMetalLayer you render into
    layer.edrMetadata = metadata

17:22 - Instantiating CAEDRMetadata

    // Use one of the following constructors

    // HLG
    let edrMetadata = CAEDRMetadata.hlg

    // HDR10 (Mastering Display luminance)
    let edrMetadata = CAEDRMetadata.hdr10(minLuminance: minLuminance,
                                          maxLuminance: maxContentMasteringDisplayBrightness,
                                          opticalOutputScale: outputScale)

    // HDR10 (Supplemental Enhancement Information)
    let edrMetadata = CAEDRMetadata.hdr10(displayInfo: displayData,
                                          contentInfo: contentInfo,
                                          opticalOutputScale: outputScale)
