
Go bindless with Metal 3

Learn how you can unleash powerful rendering techniques like ray tracing when you go bindless with Metal 3. We'll show you how to make your app's bindless journey a joy by simplifying argument buffers, allocating acceleration structures from heaps, and benefiting from the improvements to Metal's validation layer and debugger tools. We'll also explore how you can command more CPU and GPU performance with long-term resource structures.


Related Videos


WWDC22

- Discover Metal 3

- Maximize your Metal ray tracing performance

- Profile and optimize your game's memory


♪ instrumental hip hop music ♪

Hello and welcome. My name is Alè Segovia Azapian from the GPU Software team here at Apple. And I’m Mayur, also from the GPU Software team.

Alè: In this session we are going to talk about bindless rendering. The bindless binding model is a modern way to provide resources to your shaders, unlocking advanced rendering techniques, such as ray tracing. For today, I'll start with a brief recap of how the bindless binding model works, and how you can easily adopt bindless in your games and apps with Metal 3.

Bindless rendering aggregates data, which opens up new opportunities to improve performance on the CPU and GPU. I'll give you two specific tips today to improve your CPU and GPU times.

Then I'll hand it over to Mayur, and he’ll show you how the tools can help you adopt a bindless model.

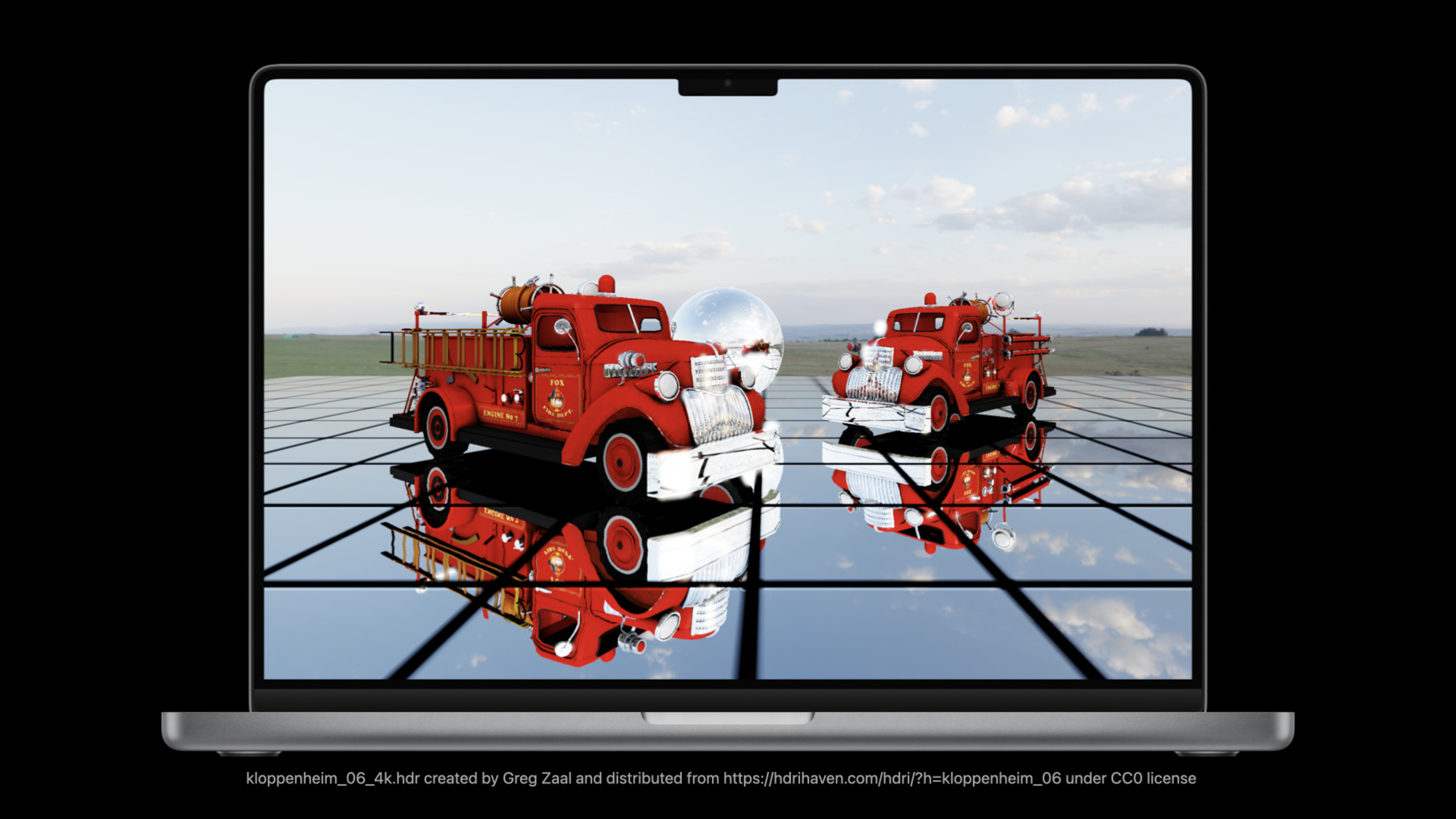

In the bindless model, resources are aggregated and linked together with argument buffers. Conceptually, this is what it looks like. In this example, an array aggregates all the meshes in a scene. Unlike the traditional binding model, where you bind each resource independently to a specific slot in a pipeline, in the bindless model resources are first linked together in memory. This lets you bind a single buffer that your shaders can freely navigate to access the resources they need to calculate elaborate surfaces and lighting.

After the app goes bindless, the ray tracing shaders can access all the data they need to beautifully shade the reflections. The app makes the 3D models and textures, including the floor, the trucks, their materials, and even the sky, available to the ray tracing shaders by placing all its data into argument buffers. Even better, when bindless rendering is paired with other Metal features like heaps, apps and games enjoy better performance thanks to less pressure on the CPU. I'll talk about four specific enhancements in Metal 3 that you may find useful for bindless rendering.

Argument buffers are the fundamental Metal construct that allows you to link your resources together. They reference resources such as textures and other buffers. Metal 3 makes writing argument buffers easier than ever, because you no longer need an argument encoder object, and the same is true for unbounded arrays. You can now allocate acceleration structures from a Metal heap, and the shader validation layer alerts you when resources aren’t resident in GPU memory. Together, these four features make it easier than ever to go bindless.

In particular, writing argument buffers in Metal 3 is a joy. To encode a scene into argument buffers, you write the scene data, such as instances, meshes, materials, and textures, into these buffers. In Metal 2, this is accomplished with an argument encoder. So first, I will recap how these objects work, and then I will show you how Metal 3 helps simplify your code.

With argument encoders, the first step is to create the encoder instance. You do this via shader function reflection or by describing the struct members to Metal. With the encoder instance, set its recording destination and offset to the target argument buffer, and use its methods to write data into the buffer. Please check out last year's bindless session for a detailed refresher on argument buffers and argument encoders.

This mechanism is great, but the encoder objects can sometimes be challenging to manage. Metal provides two mechanisms for creating argument encoders, and it might not be clear which one is appropriate for your app. Additionally, using an argument encoder from multiple threads requires care.

Developers intuitively understand how to write a C struct, and with Metal 3, you can now do just that for your argument buffers. Metal 3 simplifies writing argument buffers by allowing you to write into them directly, like any other CPU-side structure. You now have access to the GPU virtual addresses and resource IDs of your resources. When you write these directly into your argument buffer, Metal understands which resources you are referencing. It is functionally the same as encoding the reference with an argument encoder, except that no encoder is needed anymore. This capability is supported on all devices with argument buffers tier 2 support: that is, any Mac from 2016 or newer, and any iOS device with the A13 Bionic chip or newer.

If you are unsure whether a device supports argument buffers tier 2, there is a convenient feature query in the MTLDevice object that you can use: its argumentBuffersSupport property.

This is what the process now looks like in Metal 3. First, define your CPU-visible structs, using a 64-bit type for buffer addresses and MTLResourceID for textures. Then, allocate the argument buffer, either directly from the MTLDevice or from an MTLHeap. Get the buffer's contents and cast them to the argument buffer struct type. Finally, write the addresses and resource IDs to the struct members.

Take a look at how this is done in the hybrid rendering demo. Here is the code. Notice how simple it is. The host-side struct directly stores the GPU address of the normals buffer. This is a 64-bit unsigned integer, so I used uint64_t. Because there is no encoder object, you simply use the size of the struct for your argument buffer. Metal guarantees that the size and alignment of the GPU and CPU structs match across Clang and the Metal shader compiler.

Next, allocate the buffer as usual. If the buffer’s storage mode is Managed or Shared, get a direct pointer to its contents and cast it to the struct type. Finally, set the normals member to the normals buffer's gpuAddress plus an optional offset, which you must align to the GPU’s memory requirements.
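To make these steps concrete without a Metal device, here is a minimal C sketch that simulates them: `contents` stands in for the buffer's `contents` pointer, and the addresses are made-up integers. The helper names `write_mesh_argument` and `align_up` are hypothetical, and the alignment is passed in as a parameter because the real requirement depends on the device.

```c
#include <assert.h>
#include <stdint.h>

// Host-side mirror of the shader struct: struct Mesh { constant packed_float3* normals; };
struct Mesh {
    uint64_t normals; // GPU virtual address of the normals data
};

// Rounds value up to the next multiple of alignment (assumed to be a power of two).
// Handy when choosing offsets for several sub-allocations in one buffer.
static uint64_t align_up(uint64_t value, uint64_t alignment) {
    assert(alignment != 0 && (alignment & (alignment - 1)) == 0);
    return (value + alignment - 1) & ~(alignment - 1);
}

// Writes one Mesh entry. `contents` stands in for meshArgumentBuffer.contents and
// `normals_gpu_address` for normalBuffer.gpuAddress. The offset must already be
// aligned to the (hypothetical, device-specific) `alignment`.
static struct Mesh *write_mesh_argument(void *contents, uint64_t normals_gpu_address,
                                        uint64_t normals_offset, uint64_t alignment) {
    assert(alignment != 0 && normals_offset % alignment == 0);
    struct Mesh *mesh = (struct Mesh *)contents; // cast contents to the struct type
    mesh->normals = normals_gpu_address + normals_offset;
    return mesh;
}
```

In the app itself, the pointer would come from a Shared or Managed `MTLBuffer` and the base address from `gpuAddress`; the arithmetic is the same.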

One thing I want to highlight is how the structure declaration changes between the Metal Shading Language and the C declaration. In this example, these are kept separate, but if you prefer, you can have a single struct declaration in a shared header and use conditional compilation to distinguish between the shader compiler types and the C types.

Here’s a unified declaration in C. The __METAL_VERSION__ macro is only defined when compiling shader code. Use it to separate GPU and CPU code in header declarations. If your app targets C++, you can take this further and use templates to make the declarations even more uniform.
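Here is a host-side sketch of such a shared header. The second member, `indices`, is hypothetical and only shows that the pattern scales to more fields. When a C compiler builds it, `__METAL_VERSION__` is undefined, so every pointer member becomes a `uint64_t`; the `static_assert` layout checks are an optional safety net, not something Metal requires.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

// __METAL_VERSION__ is defined only when the Metal shader compiler builds this
// header, so the host sees plain 64-bit addresses in place of pointers.
#if __METAL_VERSION__
#define CONSTANT_PTR(x) constant x*
#else
#define CONSTANT_PTR(x) uint64_t
#endif

struct Mesh {
    CONSTANT_PTR(packed_float3) normals;
    CONSTANT_PTR(uint) indices; // hypothetical second member
};

#if !__METAL_VERSION__
// Optional host-only checks: each member is one 8-byte GPU address.
static_assert(sizeof(struct Mesh) == 2 * sizeof(uint64_t), "Mesh should hold two 64-bit addresses");
static_assert(offsetof(struct Mesh, indices) == sizeof(uint64_t), "indices should follow normals");
#endif
```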

Check out the argument buffer sample code for the best practices. Now that's how you write one struct, but you can also write many structs using unbounded arrays. You could already implement unbounded arrays in Metal using argument encoders, but Metal 3 simplifies the process even further by bringing it closer to just filling out an array of structs.

Here's what's different compared to just writing one struct. You now need to allocate enough storage for all structs you want to store. And then, iterate over the array, writing the data for each struct.

Back to the code sample: first, expand the size of the buffer to store as many structs as there are meshes in the scene. Notice how this is exactly what you would do for a CPU buffer: multiply the size of the struct by the mesh count. I want to take a moment to note how powerful this is. This single variable completely controls the size of the array. The shader never needs to declare this size to the Metal shader compiler, and it is free to index into any position within the array. This is part of the reason the bindless model is so flexible in Metal: you write shaders that access arrays of any size with no constraints. It just works! Next, allocate a buffer of this size and cast the pointer to its contents to the mesh struct type.

Now that the buffer is large enough, walk it with a simple for loop, striding by the size of the mesh struct. Finally, directly set each struct's normals member to the corresponding buffer's gpuAddress, plus an optional aligned offset.
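As a CPU-only sketch of the same walk, the C code below uses `malloc` in place of `newBufferWithLength:options:` and plain integers in place of GPU addresses; `build_mesh_array` and its parameters are hypothetical names. It shows that `sizeof(struct Mesh) * mesh_count` is the only place the array length appears, and that `meshes[i]` advances by `sizeof(struct Mesh)` bytes per entry.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

struct Mesh {
    uint64_t normals; // GPU address of this mesh's normals
};

// Allocates storage for mesh_count entries and fills each one from parallel
// arrays of base addresses and (already aligned) offsets. Returns NULL on failure.
static struct Mesh *build_mesh_array(const uint64_t *normal_buffer_addresses,
                                     const uint64_t *normal_buffer_offsets,
                                     size_t mesh_count) {
    assert(normal_buffer_addresses != NULL && normal_buffer_offsets != NULL);
    size_t length = sizeof(struct Mesh) * mesh_count; // the only size the array ever needs
    struct Mesh *meshes = malloc(length);
    if (meshes == NULL)
        return NULL;
    for (size_t i = 0; i < mesh_count; ++i) {
        // Each iteration strides sizeof(struct Mesh) bytes further into the buffer.
        meshes[i].normals = normal_buffer_addresses[i] + normal_buffer_offsets[i];
    }
    return meshes;
}
```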

From the GPU side in the shader, this is one way to represent the unbounded array. Here, I declare it as a mesh pointer parameter that I pass to the shader.

This makes it possible to freely access the contents directly, just as you would for any C array.

Another option is to pull all the unbounded arrays into a struct. This helps keep shaders neat by aggregating data in a single place. In this example, all the meshes and materials are brought together in a scene struct.

Using the scene struct, the scene is passed directly to the shader by binding a single buffer, instead of passing every unbounded array separately.

And access is just like before, but now, the mesh array is reached through the scene struct.
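On the host side, the scene struct only needs one 64-bit address per unbounded array. The C sketch below is a hypothetical mirror of the shader's `Scene` struct; the material array address and the helper `mesh_entry_address` are assumptions for illustration. The helper computes the address the GPU would read for `scene.meshes[index]`.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

// Host-side mirror of:
// struct Scene { constant Mesh* meshes; constant Material* materials; };
struct Scene {
    uint64_t meshes;    // GPU address of the first entry in the mesh array
    uint64_t materials; // GPU address of the first entry in the material array
};

// Fills the single Scene argument bound at buffer(0). The arguments stand in for
// the gpuAddress of the mesh and (hypothetical) material argument buffers.
static void write_scene(struct Scene *scene, uint64_t mesh_array_address,
                        uint64_t material_array_address) {
    assert(scene != NULL);
    scene->meshes = mesh_array_address;
    scene->materials = material_array_address;
}

// Address the GPU dereferences for scene.meshes[index], given one entry's size.
static uint64_t mesh_entry_address(const struct Scene *scene, uint64_t index,
                                   uint64_t mesh_entry_size) {
    return scene->meshes + index * mesh_entry_size;
}
```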

And that’s how to write argument buffers and unbounded arrays in Metal 3. The completely revamped API now makes it more intuitive and matches what you do for CPU structs, or arrays of structs.

With this year's ray tracing update, ray tracing acceleration structures can be allocated from Metal Heaps, along with your buffers and textures.

This means they can be aggregated together amongst themselves and with other resource types. This is great, because when you aggregate all acceleration structures into heaps, you can flag them all resident in a single call to useHeap. This is a huge opportunity for significant CPU savings in your application's render thread.

Here are some tips for working with acceleration structures in heaps. First, when allocated from heaps, acceleration structures have alignment and size requirements that vary per device. There is a new query to check the size and alignment of an acceleration structure for heap allocation: use the heapAccelerationStructureSizeAndAlignWithDescriptor: method of MTLDevice to determine the MTLSizeAndAlign for a structure descriptor. Keep in mind that this is different from the accelerationStructureSizesWithDescriptor: method of MTLDevice.
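The query returns a size and an alignment per descriptor, and placing several structures in one heap means rounding each start offset up to that alignment. The C sketch below uses a hypothetical `SizeAndAlign` stand-in for `MTLSizeAndAlign`, with made-up values, and computes the heap size needed for structures placed back to back. It illustrates the arithmetic only; a real app obtains the values from the device.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

// Hypothetical stand-in for MTLSizeAndAlign (values come from the device in practice).
typedef struct {
    uint64_t size;
    uint64_t align; // assumed to be a power of two
} SizeAndAlign;

static uint64_t align_up(uint64_t value, uint64_t alignment) {
    assert(alignment != 0 && (alignment & (alignment - 1)) == 0);
    return (value + alignment - 1) & ~(alignment - 1);
}

// Places `count` structures back to back, honoring each one's alignment.
// Writes each start offset into `offsets` and returns the total bytes required.
static uint64_t heap_size_for(const SizeAndAlign *items, size_t count, uint64_t *offsets) {
    uint64_t cursor = 0;
    for (size_t i = 0; i < count; ++i) {
        cursor = align_up(cursor, items[i].align); // respect this structure's alignment
        offsets[i] = cursor;
        cursor += items[i].size;
    }
    return cursor;
}
```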

Now that the acceleration structures are in an MTLHeap object, call useHeap: to make them all resident in a single call. This is faster than calling useResource: on each individual resource. Keep in mind that unless you opt your heap into hazard tracking, Metal does not prevent race conditions for resources allocated from it, so you will need to synchronize acceleration structure builds with one another and with ray tracing work. Don't worry, though; I will talk more about this in a moment.

For more details on this and other ray tracing performance advances in Metal 3, make sure to check out the "Maximize your Metal ray tracing performance" talk this year. Using heap-allocated acceleration structures provides an opportunity to reduce your app's CPU usage when it matters the most. Last but not least, here's one of my favorite features this year: Shader validation enhancements.

On the topic of useResource and useHeap, it is very important that apps flag residency to Metal for all indirectly accessed resources. Forgetting to do it means that the memory pages backing those resources may not be present at rendering time. This can cause command buffer failures, GPU restarts, or even image corruption. Unfortunately, it is very common to run into these problems when starting the bindless journey, because in bindless, the majority of scene resources are accessed indirectly, and shaders make pointer navigation decisions at runtime. This year, Metal 3 introduces new functionality in the shader validation layer that will help you track down missing residency of resources during command buffer execution. I'll show you a concrete example. During the update process of the Hybrid Rendering app, we encountered a real problem where reflections sometimes looked incorrect. I'll show you how the validation layer helped diagnose and fix this problem.
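Conceptually, the residency check described above compares every resource a shader reaches indirectly against the set of resources flagged resident. The toy C model below is neither Metal's API nor how shader validation is implemented: it stores integer resource IDs in a small fixed-capacity set and counts accessed resources that were never flagged. The IDs, the capacity, and `count_missing_residency` are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define MAX_RESOURCES 64

// Toy stand-in for a set of resources to flag resident (resource IDs only).
typedef struct {
    int ids[MAX_RESOURCES];
    size_t count;
} ResourceSet;

static bool set_contains(const ResourceSet *set, int id) {
    for (size_t i = 0; i < set->count; ++i)
        if (set->ids[i] == id)
            return true;
    return false;
}

// Adds an ID once; duplicates are ignored, like adding to a set.
static void set_add(ResourceSet *set, int id) {
    if (set_contains(set, id))
        return;
    assert(set->count < MAX_RESOURCES);
    set->ids[set->count++] = id;
}

// Counts resources a shader accessed indirectly that were never flagged resident.
static size_t count_missing_residency(const ResourceSet *resident,
                                      const int *accessed, size_t accessed_count) {
    size_t missing = 0;
    for (size_t i = 0; i < accessed_count; ++i)
        if (!set_contains(resident, accessed[i]))
            ++missing;
    return missing;
}
```

If only the textures are added, the index buffer is reported missing; adding it brings the count to zero.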

To flag residency to Metal, the app stores all individual resources not backed by heaps into a mutable set at load time. The app adds buffers and it adds textures. At rendering time, before the app dispatches the ray tracing kernel, it indicates to Metal that it uses all resources in the set. This is a simple process where the app iterates over the set and calls useResource on each element. Metal then makes all these resources resident before starting the ray tracing work. Here's part of the code where the app collects the resources into this set. The app does this as part of its argument buffer writing process. The app's loading function iterates over each submesh. It nabs the data it needs to write to the argument buffer-- that is, index data and texture data for materials-- then it stores the index buffer's address in the argument buffer. For materials, it then loops over the texture array, writing the texture GPU resource IDs into the argument buffer. And at the end, it adds all individual textures from the submesh materials to the sceneResources set, so it can flag them resident at dispatch time.

Unfortunately, there is a subtle bug here. The app would run the command buffer, and in some cases, reflections would be missing. Previously, this was hard to track down. Now in Metal 3, the shader validation layer comes to the rescue: these kinds of problems produce an error during command buffer execution that indicates what the problem is. The error message includes the name of the shader function that triggered the problem, the name of the pass, the Metal file and line of code where it detected the access, and even the label of the buffer, its size, and the fact that it was not resident. As a pro tip, this is why it's always good practice to label Metal objects. The tools use the labels, which helps you identify which object is which while debugging your app.

With all this detailed information in hand, it's now easy to find the missing resource in the shader code. Even better, when the debug breakpoint is enabled, Xcode conveniently shows the exact line in the shader code where shader validation detected the problem. In the case of the demo app, it is the indices buffer that is not resident. The fix is now straightforward. Going back to the code, the app now stores the missing index buffer in the resident resource set. With this change, at ray tracing time Metal knows to make the index buffers available to the GPU, solving the issue. This is an essential tool, and a complete game changer, that can save you hours of debugging time in your bindless journey.

So those are the enhancements Metal 3 brings to help you organize and refer to bindless resources. Now I'm going to switch gears and talk about how to maximize your game's performance when going bindless. In this section, I will cover two topics: unretained resources and untracked resources. These tips will help you get more performance out of both your CPU and your GPU when you have long-lived, aggregated resources.
Now, to talk about how to improve CPU performance with long-lived resources, I will first recap the Metal resource lifecycle. Objective-C and Swift handle object lifecycles via reference counting. Metal resources follow this model. Resources start with a retainCount of 1 and the runtime deallocates them when all strong references disappear. Because the CPU and GPU operate in parallel, it would be a problem if the CPU deallocated a resource by allowing its retainCount to reach 0 while the GPU is still using it.

To prevent this, Metal command buffers create strong references to all resources they use, ensuring their retainCount is always at least 1. Metal creates strong references for resources you directly bind to a pipeline with functions such as setVertexBuffer or setFragmentTexture (this also includes render attachments), for Metal heap objects you flag resident via the useHeap API, and for indirect resources that you make resident via the useResource API, even if they are part of a heap. For more details on Metal object lifecycles, please check out the "Program Metal in C++ with metal-cpp" talk this year.

It's very useful that Metal creates these references, because as a programmer, you never have to worry that you might deallocate an object while the GPU is still using it. This safety guarantee is very fast to execute, but it does come with a small CPU cost. In the bindless model, apps aggregate resources into heaps, and these tend to be long-lived, matching the application's domain. For example, in a game, resources are alive for the duration of a whole level. When this is the case, the additional lifecycle guarantees Metal provides become unnecessary.

What you can do then is recoup this CPU cost by asking Metal command buffers not to retain resources they reference.

To turn off Metal's automatic resource retaining, simply create a command buffer with unretained references. You do this directly from the MTLCommandQueue, just like you create any regular command buffer. You don't need to make any other changes to your app, as long as you are already guaranteeing your resource lifecycles.

Keep in mind that the granularity level for this setting is the entire command buffer. It will either retain all referenced resources or none of them.
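To illustrate the all-or-nothing granularity, here is a toy C model of a command buffer that either retains every resource it uses or none of them. It is not how Metal is implemented; `Resource`, `CommandBuffer`, `cb_use`, and `cb_complete` are hypothetical. In a real app, the unretained variant comes from calling `commandBufferWithUnretainedReferences` on the `MTLCommandQueue`.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define MAX_USED 16

// Toy reference-counted resource.
typedef struct {
    int retain_count;
} Resource;

typedef struct {
    bool retains_references; // decided once, for the whole command buffer
    Resource *used[MAX_USED];
    size_t used_count;
} CommandBuffer;

// Records a resource use; a retaining command buffer takes a strong reference.
static void cb_use(CommandBuffer *cb, Resource *resource) {
    assert(cb->used_count < MAX_USED);
    cb->used[cb->used_count++] = resource;
    if (cb->retains_references)
        resource->retain_count++;
}

// Called when the GPU finishes: drop any strong references taken in cb_use.
static void cb_complete(CommandBuffer *cb) {
    if (cb->retains_references)
        for (size_t i = 0; i < cb->used_count; ++i)
            cb->used[i]->retain_count--;
    cb->used_count = 0;
}
```

With unretained references, keeping `retain_count` above zero until the GPU is done becomes the app's job, which is exactly the guarantee long-lived heaps already provide.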

In a small microbenchmark, we measured a 2% reduction in CPU usage over the command buffer's lifecycle just by switching to command buffers with unretained references; that time had been spent entirely on creating and destroying unnecessary strong references.

In summary, unretained resources provide an opportunity for some extra CPU savings when you are already guaranteeing resource lifecycles.

Similar to unretained resources, untracked resources provide an opportunity to disable a safety feature to obtain more performance. Many visual techniques consist of rendering to intermediate textures and writing into buffers, then consuming them in later passes. Shadow mapping, skinning, and post-processing are good examples of this. Producing and immediately consuming resources introduces read-after-write hazards. Additionally, when several passes write to the same resource, such as two render passes drawing into the same attachment one after the other, or two blit encoders writing into the same resource, this produces write-after-write hazards because of the way Metal schedules work on the GPU. When you use tracked resources, Metal automatically uses synchronization primitives to avoid hazards on the GPU timeline. For example, Metal makes the GPU wait for a compute skinning pass to finish writing into a buffer before starting a scene rendering pass that reads from the same buffer.

This is great, and it's a big part of why Metal is such an approachable graphics API, but there are some performance considerations for apps that aggregate resources into heaps.

Consider this example. Here, the GPU is busy, drawing two frames that do vertex skinning, render the scene, and apply tone mapping, one after the other. As the app keeps the GPU busy, Metal identifies opportunities where render and compute work can overlap, based on resource dependencies. When there are no dependencies, and the conditions are right, Metal schedules work to overlap and run in parallel. This saturates the GPU and allows it to get more work done in the same amount of wall-clock time.

Now, when the app aggregates resources together in a heap, all of its subresources appear as a single one to Metal. This is what makes heaps so efficient to work with. But this means that Metal sees read and write work on the same resource and must conservatively schedule work to avoid any race conditions, even when no actual hazard exists.

This situation is called "false sharing" and, as you might expect, it increases the execution wall-clock time of the GPU work. So here's the performance tip. If you know there are no dependencies between the resources in the heap, then you can avoid this behavior.
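Here is a toy C model of the difference, under the simplifying assumption that each pass touches a single subresource. With a tracked heap, any write by either pass to the same heap forces ordering; with an untracked heap, only a genuine hazard on the same subresource needs an explicit Fence or Event. The `Access` struct and both functions are hypothetical illustrations, not Metal API.

```c
#include <assert.h>
#include <stdbool.h>

// One pass's access to a subresource inside a heap.
typedef struct {
    int heap;        // which heap the subresource was allocated from
    int subresource; // which buffer or texture inside that heap
    bool writes;     // true if the pass writes it
} Access;

// Tracked heap: Metal sees the whole heap as one resource, so a write by either
// pass anywhere in the same heap forces the passes to run in order.
static bool must_serialize_tracked(Access a, Access b) {
    return a.heap == b.heap && (a.writes || b.writes);
}

// Untracked heap: the app signals only real hazards, that is, the same
// subresource with at least one writer.
static bool must_serialize_untracked(Access a, Access b) {
    return a.heap == b.heap && a.subresource == b.subresource && (a.writes || b.writes);
}
```

In the first test case, a render target and an unrelated compute buffer share a heap: tracking serializes them (false sharing), while the untracked model lets them overlap.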

To avoid false sharing, you can opt resources out of hazard tracking and signal fine-grained dependencies to Metal directly. You opt out of resource tracking by setting a resource descriptor's hazardTracking property to Untracked. Because this is so important, it is the default behavior for heaps, as it allows you to unlock more opportunities for the GPU to run your work in parallel right out of the gate. Once you start using untracked resources, you express dependencies using the following primitives. Depending on the situation, use Fences, Events, Shared Events, or Memory Barriers. Metal Fences synchronize access to one or more resources across different render and compute passes, within the context of a single command queue. A Fence is a split-barrier primitive: the consumer pass waits until the producer pass signals the Fence.

The only requirement to keep in mind when using Fences is to commit or enqueue your producer command buffers before your consumer command buffers. When you can't guarantee this order, or need to synchronize across multiple queues on the same device, use MTLEvents. With Events, the consumer command buffer waits for the producer command buffer to signal the Event with a given value. Once that value is signaled, it is safe to read the resource. Use Events to tell a GPU to pause work until a command signals an Event.

MTLSharedEvents behave very similarly to regular Events, but work at a larger scope that goes beyond a single GPU. Use them to synchronize access to resources across different Metal devices, and even with the CPU. For example, use Shared Events to process the results of a GPU calculation on the CPU. Here is an example. The GPU skins a mesh in a compute pass, and the CPU stores the pose to disk. Because these are two independent devices, use a Shared Event to have the CPU wait until the GPU produces the resource. In the beginning, the CPU unconditionally starts waiting for the GPU to signal the Shared Event. When the GPU produces the resource and places it into unified memory, it signals the Shared Event. At this point, the waiting thread on the CPU wakes up and safely consumes the resource.
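The Shared Event pattern above, a monotonically increasing value that a producer signals and a consumer waits on, can be modeled on the CPU alone. The C sketch below uses POSIX threads as stand-ins for the GPU (producer) and the CPU (consumer). `SharedEventModel`, `event_signal`, `event_wait`, and the value `42` standing in for the skinned pose are all hypothetical, and this is not how `MTLSharedEvent` is implemented.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>
#include <stdint.h>

// CPU-only model of a shared event: a value that only ever increases.
typedef struct {
    pthread_mutex_t lock;
    pthread_cond_t changed;
    uint64_t signaled_value;
} SharedEventModel;

static void event_signal(SharedEventModel *event, uint64_t value) {
    pthread_mutex_lock(&event->lock);
    if (value > event->signaled_value)
        event->signaled_value = value;
    pthread_cond_broadcast(&event->changed);
    pthread_mutex_unlock(&event->lock);
}

// Blocks until the signaled value reaches `value`.
static void event_wait(SharedEventModel *event, uint64_t value) {
    pthread_mutex_lock(&event->lock);
    while (event->signaled_value < value) // loop guards against spurious wakeups
        pthread_cond_wait(&event->changed, &event->lock);
    pthread_mutex_unlock(&event->lock);
}

typedef struct {
    SharedEventModel *event;
    int *pose; // stands in for the skinned pose written by the GPU
} ProducerArgs;

// Plays the GPU: produce the resource, then signal value 1.
static void *produce_pose(void *arg) {
    ProducerArgs *args = arg;
    *args->pose = 42;
    event_signal(args->event, 1);
    return NULL;
}

// Plays the CPU: wait unconditionally for value 1, then consume the resource.
static int consume_pose(void) {
    SharedEventModel event;
    event.signaled_value = 0;
    pthread_mutex_init(&event.lock, NULL);
    pthread_cond_init(&event.changed, NULL);

    int pose = 0;
    ProducerArgs args = { &event, &pose };
    pthread_t producer;
    int result = -1;
    if (pthread_create(&producer, NULL, produce_pose, &args) == 0) {
        event_wait(&event, 1);
        result = pose; // safe: the write happened before the signal
        pthread_join(producer, NULL);
    }

    pthread_cond_destroy(&event.changed);
    pthread_mutex_destroy(&event.lock);
    return result;
}
```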

The last primitive type is Memory Barriers. A Memory Barrier forces all subsequent commands within a single render or compute pass to wait until all the previous commands finish. The cost of a barrier is similar to the cost of a Fence in almost all cases. There is, however, one exception.

That exception is barriers after the fragment stage in a render pass. These barriers have a very high cost that’s similar to splitting the render pass. Metal disables barriers after the fragment stage on Apple GPUs to help your apps stay on the fastest driver path. The Metal debug layer even generates a validation error if you add an after-fragment barrier on Apple GPUs. It is recommended to use a Fence to synchronize resources after the fragment stage.

Here's a short summary of the synchronization primitives and when to use them. Prefer Fences for the lowest overhead when committing or enqueueing work to a single command queue in producer-then-consumer order. Fences are great for the majority of common cases. When the submission order can't be guaranteed, or there are multiple command queues, use Metal Events. Shared Events allow synchronization of multiple GPUs with one another and with the CPU; use them only in these specific multi-device cases. Use Memory Barriers when you need to synchronize within a pass. Barriers are a fast primitive in most cases, such as concurrent compute passes and vertex stages between draw calls. But as a friendly reminder, use a Fence between passes instead of a barrier to synchronize after the fragment stage, because those barriers are very expensive and Apple GPUs don't allow them.
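The summary above can be restated as a small decision function. This C sketch only encodes the guidance in code; the `Dependency` flags and `choose_sync` are hypothetical names, not Metal API.

```c
#include <assert.h>
#include <stdbool.h>

typedef enum { SYNC_FENCE, SYNC_EVENT, SYNC_SHARED_EVENT, SYNC_MEMORY_BARRIER } SyncPrimitive;

typedef struct {
    bool crosses_devices_or_cpu; // multiple GPUs, or GPU and CPU
    bool within_single_pass;     // producer and consumer in the same pass
    bool after_fragment_stage;   // consumer depends on fragment-stage output
    bool single_queue_ordered;   // one queue, producer committed before consumer
} Dependency;

static SyncPrimitive choose_sync(Dependency d) {
    if (d.crosses_devices_or_cpu)
        return SYNC_SHARED_EVENT;
    if (d.within_single_pass) {
        // After-fragment barriers are not allowed on Apple GPUs:
        // split the pass and use a Fence instead.
        return d.after_fragment_stage ? SYNC_FENCE : SYNC_MEMORY_BARRIER;
    }
    return d.single_queue_ordered ? SYNC_FENCE : SYNC_EVENT;
}
```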

Using untracked resources and manual fine-grained tracking, you can now have all the advantages of data aggregation while maximizing GPU parallelism. And those are the performance tips to get the most out of the CPU and GPU when going bindless. I've talked a lot about how Metal 3 unlocks simplified and efficient bindless workflows. But writing code is only half the equation. The other half is how the available tools can help you verify how the GPU sees and executes work. I am now going to hand it over to Mayur to talk about what's new with Metal 3 tooling for bindless.

Mayur: Thank you, Alè. Today, I'm excited to show you some of the great new features in the Metal Debugger that will help you debug and optimize your bindless apps. I just took a frame capture of the HybridRendering app that Alè just showed you. When you capture a frame in the Metal Debugger, you'll arrive at the Summary page, which provides an overview of your frame alongside helpful insights on how to improve your app's performance. But today, I’m excited to show you the new dependency viewer. To open it, just click Dependencies here on the left. Here is the new dependency viewer; it features a brand new design that’s packed with powerful new features. The dependency viewer shows you a graph-based representation of your workload. Each node in the graph represents a pass, which is encoded by a command encoder, and its output resources. The edges represent resource dependencies between passes. New this year, you can analyze your workload by focusing on two types of dependencies: data flow and synchronization. The solid lines represent data flow and show you how data flows in your app. The dotted lines represent synchronization and show you dependencies that introduce GPU synchronization between passes. To learn more, you can click on any encoder, resource, or edge, and the debugger will show you detailed information in the new sidebar.
For example, this edge adds synchronization and also has data flow between these passes. By default, the dependency viewer shows both data flow and synchronization dependencies, but you can use this menu down here at the bottom to focus on just one of the dependency types. Here, I will focus on just synchronization. As Alè said earlier, false sharing is a common problem when reading and writing different resources from a tracked heap, and the dependency viewer makes it easy to catch these issues. The demo I captured is from an early development version that has this issue. If I click on this heap, the dependency viewer shows me that this heap is tracked and therefore adds synchronization between these two passes. The dependency viewer also highlights the resources allocated inside the heap, such as this render target texture that the render encoder stores, and a buffer that the compute encoder reads and writes. The problem is that this synchronization between the two passes is unnecessary, because the compute encoder does not use any resources from previous encoders. To remove this dependency, I can modify the app to use an untracked heap and insert Fences where synchronization is needed. With that change, these two passes can now run in parallel.

Another great improvement in Xcode 14 to help debug your bindless apps is the new resource list. I can navigate to a draw call that I want to debug and open it. When using bindless, hundreds or even thousands of resources are available to the GPU at any time. This year, the Metal debugger gives you the ability to check which of these resources a draw call accessed, just by clicking the "Accessed" mode at the top. Now the debugger shows me only the handful of resources that this draw call accesses, and the type of each access. This is really useful for understanding which resources your shader accessed from argument buffers.
Knowing which resources your draw call uses is great, but if it shows resources that you weren’t expecting, you can use the shader debugger to figure out what’s going on. To start the shader debugger, just click the debug button here in the bottom bar, select the pixel that you want to debug, and hit the Debug button. And now you are in the shader debugger. The shader debugger shows how your code was executed line by line, including which resources were accessed. On these lines, the shader reads textures from an argument buffer. I can expand the detailed views in the right sidebar to check which resources were read. This can help identify issues where your shader accesses the wrong argument buffer element. In this demo, I’ve shown you how to use the new dependency viewer to analyze and validate resource dependencies, how to use the new resource list to understand which resources a draw call accessed, and how to use the shader debugger to analyze, line by line, how a shader was executed. I can’t wait to see how you use these new features to create great Metal bindless apps. Back to you, Alè.

Alè: Thank you, Mayur. That was an awesome demo. To wrap up, Metal 3 brings a lot to the table for going bindless. With simplified argument buffer encoding, acceleration structures from heaps, and improvements to the validation layer and tools, Metal 3 is an excellent API for bringing effective and performant bindless rendering to your games and apps. With this year's enhancements, the hybrid rendering app is looking better than ever. We are releasing this updated version of the app with full source code in the Metal sample code gallery. You can download, study, and modify it, and as an exercise, I challenge you to take it even further and add recursive reflections to the mirror surfaces. I can't wait to see what you do with it. There has never been a better time to go bindless with Metal 3. Thank you for watching.

♪ instrumental hip hop music ♪


5:38 - Write argument buffers in Metal 3

```objc
// Write argument buffers in Metal 3
struct Mesh {
    uint64_t normals; // 64-bit uint for constant packed_float3*
};

NSUInteger meshArgumentSize = sizeof(struct Mesh);

// storageMode: MTLResourceOptions for a CPU-visible (Shared or Managed) buffer
id<MTLBuffer> meshArgumentBuffer = [device newBufferWithLength:meshArgumentSize
                                                       options:storageMode];

// Cast the buffer contents to the host-side struct and write the GPU address
struct Mesh* meshes = (struct Mesh *)(meshArgumentBuffer.contents);
meshes->normals = normalBuffer.gpuAddress + normalBufferOffset;
```

6:31 - Shader struct and host-side struct

```
// Shader struct:
struct Mesh {
    constant packed_float3* normals;
};

// Host-side struct:
struct Mesh {
    uint64_t normals;
};
```

6:53 - Shared struct:

```c
// Shared struct:
#if __METAL_VERSION__
#define CONSTANT_PTR(x) constant x*
#else
#define CONSTANT_PTR(x) uint64_t
#endif

struct Mesh {
    CONSTANT_PTR(packed_float3) normals;
};
```

7:53 - Write unbounded arrays of resources in Metal 3

```objc
// Write unbounded arrays of resources in Metal 3
struct Mesh {
    uint64_t normals; // 64-bit uint for constant packed_float3*
};

// `meshes` is the app's collection of scene meshes: one struct per mesh
NSUInteger meshArgumentSize = sizeof(struct Mesh) * meshes.count;

id<MTLBuffer> meshArgumentBuffer = [device newBufferWithLength:meshArgumentSize
                                                       options:storageMode];

// Use a distinct name so the pointer does not clash with the `meshes` collection
struct Mesh* meshArguments = (struct Mesh *)(meshArgumentBuffer.contents);

for ( NSUInteger i = 0; i < meshes.count; ++i )
{
    meshArguments[i].normals = normalBuffers[i].gpuAddress + normalBufferOffsets[i];
}
```

9:03 - Metal shading language: unbounded arrays option 1

```metal
// Metal shading language:
struct Mesh {
    constant packed_float3* normals;
};

fragment half4 fragmentShader(ColorInOut v [[stage_in]],
                              constant Mesh* meshes [[buffer(0)]])
{
    /* determine mesh to read, e.g. geometry_id */

    packed_float3 n0 = meshes[ geometry_id ].normals[0];
    packed_float3 n1 = meshes[ geometry_id ].normals[1];
    packed_float3 n2 = meshes[ geometry_id ].normals[2];

    /* interpolate normals and calculate shading */
}
```

9:25 - Metal shading language: unbounded arrays option 2

```metal
// Metal shading language:
struct Mesh {
    constant packed_float3* normals;
};

struct Scene {
    constant Mesh*     meshes;    // mesh array
    constant Material* materials; // material array
};

fragment half4 fragmentShader(ColorInOut v [[stage_in]],
                              constant Scene& scene [[buffer(0)]])
{
    /* determine mesh to read, e.g. geometry_id */

    packed_float3 n0 = scene.meshes[ geometry_id ].normals[0];
    packed_float3 n1 = scene.meshes[ geometry_id ].normals[1];
    packed_float3 n2 = scene.meshes[ geometry_id ].normals[2];

    /* interpolate normals and calculate shading */
}
```

11:00 - Size and alignment for MTLAccelerationStructure in a MTLHeap

```objc
heapAccelerationStructureSizeAndAlignWithDescriptor:
```

13:49 - Store individual indirect resources in NSMutableSet

```objc
// Argument buffer loading
for (NSUInteger i = 0; i < mesh.submeshes.count; ++i)
{
    Submesh* submesh = mesh.submeshes[i];
    id<MTLBuffer> indexBuffer = submesh.indexBuffer;
    // Typed array so that textures[m].gpuResourceID compiles
    NSArray<id<MTLTexture>>* textures = submesh.textures;

    // Copy index buffer into argument buffer
    submeshAB[i].indices = indexBuffer.gpuAddress;

    // Copy material textures into argument buffer
    for (NSUInteger m = 0; m < textures.count; ++m)
    {
        submeshAB[i].textures[m] = textures[m].gpuResourceID;
    }

    // Remember indirect resources so they can be flagged resident
    [sceneResources addObject:indexBuffer];
    [sceneResources addObjectsFromArray:textures];
}
```
