
Reduce network delays for your app

CPU performance and network throughput rates keep improving, but the speed of light is one limit that isn't going any higher. Learn the APIs and best practices to maximize your app's responsiveness and efficiency by keeping network round-trip times low and minimizing the number of round trips when performing network operations.


Stuart Cheshire: Welcome to "Reduce network delays for your app".

My name is Stuart Cheshire.

I'm going to talk about factors that contribute to making network apps feel slow today and then I'll hand over to my colleague Vidhi Goel to tell you about the techniques and APIs you can use to make your network app more responsive.

Let's start by talking about something you may have already seen in the WWDC beta of iOS.

If you look in Developer settings, you will see a new item in the Networking section called Responsiveness.

While upload throughput, download throughput, and idle ping time are all interesting, the main factor that affects the responsiveness of your network app is the network's responsiveness under working conditions, not idle conditions.

The typical internet speed test idle ping time measurement tells you how well your internet connection works when you're not using it.

What matters is how well your internet connection works when you are using it.

Tap on Test.

It will warn you that it will generate network traffic, it will measure your network for a few seconds, and then it will tell you how well your network is performing under working conditions.

The tool reports network responsiveness in round trips per minute, or RPM, instead of milliseconds.

We created this new RPM metric because milliseconds are a fairly abstract concept to a lot of people.

People are also more familiar with metrics where higher is better.

The RPM metric produces numbers in the range from a few hundred RPM to a few thousand RPM, just like the RPM of your car engine.

There's also a command-line version of this test tool on macOS called networkQuality.

You might think that your home or work network has a great ping time, but that's when it's idle.

Run this network quality test for yourself -- on your iPhone or Mac -- and you might find that when your network is being used, its responsiveness becomes a lot worse.

And I do mean a lot worse.

Your network might have an idle round-trip time of 20 milliseconds, which sounds pretty good, but you might find that under working conditions the round-trip time goes up to 600 milliseconds or more.

That's 30 times worse.
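To make the relationship between milliseconds and RPM concrete, here is a minimal sketch. It assumes RPM is simply the number of round trips that fit into one minute (60,000 ms divided by the round-trip time). The function name is hypothetical, and the Responsiveness tool may compute its figure in a more elaborate way.

```swift
/// Converts a round-trip time in milliseconds into round trips per minute (RPM).
/// Assumption: RPM = 60,000 ms / round-trip time in ms.
func roundTripsPerMinute(roundTripMilliseconds: Double) -> Double {
    precondition(roundTripMilliseconds > 0, "Round-trip time must be positive")
    return 60_000 / roundTripMilliseconds
}

// The idle and working-condition figures quoted in the talk.
let idleRPM = roundTripsPerMinute(roundTripMilliseconds: 20)
let loadedRPM = roundTripsPerMinute(roundTripMilliseconds: 600)
print("Idle: \(idleRPM) RPM, under load: \(loadedRPM) RPM")
```

Under that assumption, a 20 ms round trip corresponds to 3,000 RPM and a 600 ms round trip to only 100 RPM.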

Network responsiveness under working conditions is what matters for your app's user experience.

We all see this problem all the time, but especially with audio and video freezes, and dropouts during video conferencing.

High network delays hurt all apps, but we've got used to that, so we notice it more when it affects video conferencing.

When we have problems with our video conferences, we think we'll fix it by upgrading our internet connection.

People have gone from a few megabits per second to gigabit and above, yet the problems still happen.

A few megabits per second should be plenty for video conferencing, so why do we still have these problems?

When buffers in the network are excessively large and fill up, they don't improve throughput, but they do add delay.

We normally think of the internet operating like this, with packets flowing speedily through the network.

But if we look inside the cloud, we'll see that it really works more like this.

The packet you see coming out of the network is not the one that you saw go in.

Packets spend a lot of time sitting in excessively large buffers in the network.

This phenomenon of excessively large buffers is called bufferbloat, and until now it hasn't been widely measured, despite the effect it has on everyday network use.

The good news is that there are modern queue management algorithms, like CoDel -- the Controlled Delay queueing algorithm -- that eliminate bufferbloat.

When the network keeps queues short, the time a packet spends waiting in the network is dramatically reduced.

It's possible to get high throughput and low delay at the same time.

It's not an either/or choice. It's not a zero-sum game.

We're working with the industry to deploy smarter queue management algorithms and improve network responsiveness under working conditions.

But for now, if you want to give a great user experience, you will want to make your apps cope with the internet as it is today.

Bufferbloat is a large component of the network delays your application will experience, but it's not the only source of delay.

There's software and hardware processing time.

As CPUs get ever faster, this processing time continues to shrink.

There's the actual data transmission time.

As data rates increase from kilobits to megabits to gigabits per second, transmission time continues to shrink.

Then there's the time delay due to buffering in the network.

As I said, we're working with the industry on reducing these delays.

But there's always going to be the speed-of-light signal propagation delay.

Back in the 1990s, the Stanford-to-MIT ping time, round-trip, coast-to-coast, across the United States, was already under 100 milliseconds.

That's pretty close to the speed-of-light limit already, so it's not going to get much better.

We're working on reducing the other three delays, but the speed-of-light delay will never go away, so this is why it’s important to design your apps taking network round-trip times into account.

When we're talking about taking network round-trip times into account, what kind of apps are we talking about? Everyone knows that video conferencing is severely affected by high network delays.

Everyone knows that online games are severely affected by high network delays.

But this affects all apps that use the network.

I'm talking about getting weather forecasts, stock quotes, driving directions.

This affects web browsing.

It affects skipping ahead while watching a streaming video.

Think about how many apps include some animated spinning delay indicator while the app is waiting for the network.

Maybe your app.

We show that "please wait" indicator so that the user doesn't think the app has hung.

It's great that we put so much effort into giving the user something to look at while they wait for the slow network, but we should also put equal effort into reducing the time they spend waiting.

If you have an app where you ever show a delay indicator while waiting for data from the network, there are techniques you can use to reduce those wait times.

The time an app waits for network data is a function of how long it takes for one network round trip, and how many network round trips your app requires.

As an app developer, there's not a lot you can do to improve the underlying network round-trip time, but you can control how many round trips your app requires.

Let me introduce my colleague, Vidhi Goel, to tell you how you can do that.

Vidhi Goel: Thanks, Stuart.

Hi, I am Vidhi, and today I'd like to talk to you about what you can do as a developer to reduce network delays in your app.

App responsiveness is inversely proportional to the number of network round trips.

Let me show you how you can reduce these network round trips by adopting the modern networking protocols and make your apps super snappy.

To speed up your apps, adopt the modern networking protocols such as HTTP/3 & QUIC, TCP Fast Open, TLS 1.3, and Multipath TCP.

With these techniques, your app can potentially achieve multiple round-trip reductions in delivering data to your user.

Server side support is required for all of these modern protocols, so check with your provider about their readiness.

We are happy to tell you that all of these technologies are available on iOS and macOS.

Let's look into each of these technologies.

First up, we have HTTP/3 and QUIC which are enabled by default in iOS 15 and macOS Monterey.

QUIC is a transport protocol which can set up a connection much faster than TCP and TLS.

By reducing the head-of-line blocking, QUIC can significantly reduce delays in delivering data to your user.

And here is the best part: if you are already using URLSession, you’re all set.

If you provide your own application layer using the Network framework APIs and want to take advantage of QUIC, simply create an NWConnection with QUIC parameters and set a TLS Application-Layer Protocol Negotiation (ALPN) value.
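As a rough illustration of that step, the sketch below builds a QUIC connection with the Network framework. The helper name `makeQUICConnection`, the host, the port, and the ALPN token are placeholders invented for this example, not values from the session. Your server defines the real ALPN string.

```swift
import Network

/// Builds (but does not start) a QUIC connection that advertises one ALPN token.
/// The helper name and its parameters are hypothetical.
@available(iOS 15.0, macOS 12.0, tvOS 15.0, watchOS 8.0, *)
func makeQUICConnection(host: NWEndpoint.Host,
                        port: NWEndpoint.Port,
                        alpn: String) -> NWConnection {
    // QUIC options carry the ALPN value that is negotiated during the handshake.
    let quicOptions = NWProtocolQUIC.Options(alpn: [alpn])
    let parameters = NWParameters(quic: quicOptions)
    return NWConnection(host: host, port: port, using: parameters)
}
```

A caller would then do something like `makeQUICConnection(host: "example.com", port: 443, alpn: "example-proto")` and call `start(queue:)` on the result once a state handler is installed.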

Please check out the "Accelerate networking with HTTP/3 and QUIC" session to learn more about how to use these technologies in your app.

QUIC is useful in many scenarios.

However, TCP may still be the right choice for some applications.

When using TCP, you can eliminate an entire round trip by sending the app data along with the TCP handshake.

TCP Fast Open is supported in Network framework and Sockets.

To use it with NWConnections, there are two options: the first option is to allow Fast Open on your connection and in this case, the app will provide the initial data to be sent out with the handshake.

To enable this, set the allowFastOpen parameter to true and create your connection.

And then, before calling start, you would call send with your initial data.

When using TCP Fast Open, you have to be careful that you only send idempotent requests with the handshake.

Idempotent basically means that the data is safe to be replayed over the network.

There is another way to use TCP Fast Open that does not require your app to send its own initial data.

If your app is using TLS over TCP, you can choose to send the TLS handshake message as the initial data.

To enable this, go to your TCP-specific options and set enableFastOpen to true.
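A minimal sketch of this second option, assuming a TLS-over-TCP connection to a placeholder host. The helper name `makeFastOpenTLSParameters` and the endpoint are illustrative only.

```swift
import Network

/// Returns TLS-over-TCP parameters with TCP Fast Open enabled, so the TLS
/// handshake message can travel with the TCP handshake.
/// The helper name is hypothetical.
func makeFastOpenTLSParameters() -> NWParameters {
    let tcpOptions = NWProtocolTCP.Options()
    tcpOptions.enableFastOpen = true
    return NWParameters(tls: NWProtocolTLS.Options(), tcp: tcpOptions)
}

// Placeholder endpoint; the connection is created here but not started.
let fastOpenConnection = NWConnection(host: "example.com",
                                      port: 443,
                                      using: makeFastOpenTLSParameters())
```

Unlike the first option, the app does not need to supply its own initial data here, because the TLS handshake message serves as the initial data.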

The recommended way to use TCP Fast Open is via the Network framework API, but if your app is built over Sockets, then you would call the connectx API with the respective flags to specify that you want to send idempotent data with the handshake.

I mentioned idempotent a couple of times.

Let me explain what it means and why it is important to send only idempotent requests with the handshake.

An idempotent and replay-safe operation is one that has no additional effect if it is performed more than once.

For example, when the user goes to the developer.apple.com web page, the HTTP GET request for this web page is sent out with the TCP handshake.

If the acknowledgment for this request was either delayed or dropped in the network, the device will resend the HTTP GET request which may get routed to a different server.

And this time the acknowledgement arrives along with the HTTP response.

As the HTTP GET request does not have any additional effect when it is resent over the network, it is considered an idempotent request.

Now, let's say the user is trying to buy a new iPhone 12.

The HTTP request sent for this operation is not an idempotent request.

It could result in multiple transactions if the request goes to a different server each time when the data is replayed over the network.
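One way an app might guard against sending the wrong kind of request with the handshake is a simple allow-list. This sketch is deliberately conservative and is an assumption of this example, not an Apple API: it treats only the read-only HTTP methods GET, HEAD, and OPTIONS as replay-safe. It still relies on the server handling those methods without side effects.

```swift
/// Returns true only for HTTP methods that are read-only by definition.
/// Hypothetical helper: a conservative allow-list for data sent with a handshake.
func isSafeToSendWithHandshake(httpMethod: String) -> Bool {
    let replaySafeMethods: Set<String> = ["GET", "HEAD", "OPTIONS"]
    return replaySafeMethods.contains(httpMethod.uppercased())
}

// Fetching a web page is replay-safe; submitting a purchase is not.
print(isSafeToSendWithHandshake(httpMethod: "GET"))
print(isSafeToSendWithHandshake(httpMethod: "POST"))
```

A purchase request would fail this check and should be sent only after the handshake completes.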

Keeping that in mind, let's talk about TLS 1.3.

TLS 1.3 removes an entire round trip from the handshake as compared to TLS 1.2.

It also provides stronger security.

It has been enabled by default since iOS 13.4 for URLSession and NWConnection.

The TLS 1.3 protocol defines early data support, which can save yet another round trip by sending idempotent requests along with the TLS handshake message.

Let's switch gears and look at Multipath TCP which works a bit differently in reducing network delays.

Multipath TCP allows a single TCP connection to continue as the device switches from one network to another.

To get the low latency feature of Multipath TCP, use the interactive mode API.

It will save all the round trips needed to establish a new connection, and the system will automatically choose the faster network path for your data packets.

To opt in from the client, set the multipathServiceType property to interactive on your URLSession configuration or on your NWParameters.

To give you an idea of how many round trips you can save with these modern technologies, let's start with a reference point.

Let's say your app is currently running TLS 1.2 over TCP.

In this case it will take four round trips to get the first byte to your user.

If your server switches from TLS 1.2 to TLS 1.3, your connections will eliminate an entire round trip.

If you enable TCP Fast Open on your connection, you will save yet another round trip.

In iOS 15, HTTP/3 over QUIC provides a reduction to two round trips.

The QUIC protocol also defines early data support, which could enable a further reduction to one round trip.

Based on our measurements at Apple, it is common for users to see round-trip times that sometimes spike up as high as 600 milliseconds.

Let's see what that means for your app.

Four round trips at 600 milliseconds means your user is waiting almost two and a half seconds for the data to arrive.

That's a huge amount of time to wait and stare at the network spinner.

By adopting the modern networking protocols, you can reduce that time to first byte from 2.4 seconds to about half a second.

The user will definitely notice the difference when the data arrives more than a second and a half earlier.
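The arithmetic behind these figures can be sketched as follows. The round-trip counts are the ones quoted in the talk. The 600 ms round-trip time is the spike value mentioned above, and the function and variable names are made up for this example.

```swift
/// Time to first byte, modeled as round trips multiplied by the round-trip time.
func timeToFirstByte(roundTrips: Int, roundTripSeconds: Double) -> Double {
    return Double(roundTrips) * roundTripSeconds
}

let roundTripSeconds = 0.6 // 600 ms, the spike value quoted in the talk

// Round trips needed before the first byte reaches the user, per the talk.
let protocolStacks: [(name: String, roundTrips: Int)] = [
    ("TLS 1.2 over TCP", 4),
    ("TLS 1.3 over TCP", 3),
    ("TLS 1.3 over TCP with Fast Open", 2),
    ("HTTP/3 over QUIC", 2),
    ("HTTP/3 over QUIC with early data", 1),
]

for stack in protocolStacks {
    let seconds = timeToFirstByte(roundTrips: stack.roundTrips,
                                  roundTripSeconds: roundTripSeconds)
    print("\(stack.name): \(stack.roundTrips) round trips, about \(seconds) s")
}
```

Going from four round trips to one takes the wait from roughly 2.4 seconds down to roughly 0.6 seconds at this round-trip time.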

Every developer who wants great network performance should pay attention to the number of round trips.

This is where the big wins are.

All the technologies that I've talked about help to reduce network delays in your app in real-world network conditions.

If you test your app on 5G, LTE, or fast Wi-Fi networks, your app responsiveness might seem fine to you.

But your users aren't always using your app in the best network conditions.

To simulate realistic networks, the Network Link Conditioner tool is available for iOS from the Developer settings menu.

For macOS, you can download it from the Apple Developer website.

This tool is a reliable and repeatable way to test your app in different network conditions that your users may experience in their day-to-day life.

If you recall, Stuart mentioned earlier that you can't do much about reducing your network round-trip time.

Well, that's not entirely true.

Let me explain how you can reduce the network round-trip time when you correctly inform the system about your app traffic.

Most apps have a mix of traffic that they send or receive.

There is a lot of data that is exchanged from the user's device when running a bunch of apps.

In real-world networks, like a home or office Wi-Fi, a number of devices share the same network.

These devices simultaneously send and receive a significant amount of data while using a set of apps.

To avoid building up long queues in this shared network, it is crucial that you classify your app data appropriately so that the system can manage your traffic efficiently in order to maintain low network delays.

And when you allow the system to maintain low network delays, it makes your app's foreground traffic faster and hence the data that matters most to the users is delivered quickly.

Let me illustrate this with an example.

Many apps prefetch content like graphics, audio files, et cetera, to make it available for later use.

When the app is prefetching a substantial amount of data, this is what the network might look like.

The bottleneck queue can become full.

If at this point, the user initiates a network activity -- like viewing their profile page -- the response for this request will be queued at the end of the network queue, and it may take seconds before the profile is shown.

This would not be a good user experience.

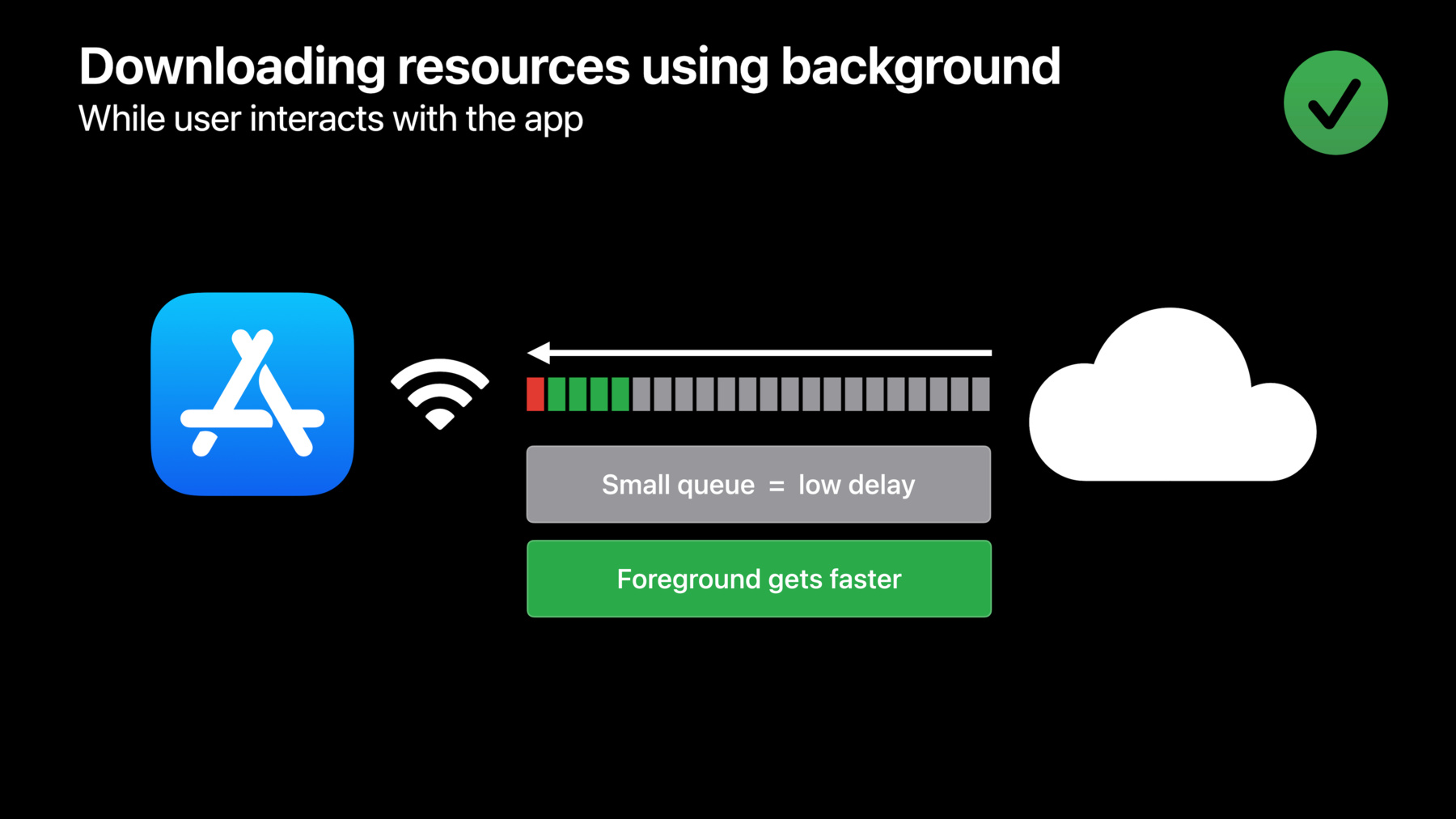

Now, let's look at what happens to the network when we mark these non-user-initiated prefetching tasks as background.

Marking these non-user-initiated transfers as background will dramatically reduce the size of the network queue, which will then become available for other foreground data.

Thus, any foreground data -- that is, the green packets -- will be delivered instantly for a quick, delightful experience.

In iOS 15 and macOS Monterey, the background service type has improved dramatically.

We have added new congestion control algorithms for background uploads and downloads.

These new algorithms not only reduce network delays significantly, for better user experience, but also ensure that the background transfers finish in nearly the same time as other traffic.

Let's look at the networking APIs that you can adopt to take advantage of the background service type.

When your app is in the foreground and performing non-user-initiated transfers, you would use the default URLSession and set the network service type to background on your URL requests.

Again, this allows the system to maintain low network delays.

And for NWConnection, you would set the service class to background on your NWParameters.

If your app starts a long-running transfer, whether it is user initiated or not, you would create a background URLSession to continue running even when your app is suspended.

For time-insensitive tasks, you can set the isDiscretionary property to true.

This will allow the system to wait for optimal conditions to perform the transfer.

We've talked about how your app can help in keeping the network queues short.

Another source of delay can be on the sending device itself.

Historically, networking stacks have used very large send buffers.

This adds a lot of unnecessary delay, sometimes on the order of seconds, before the packet even enters the network.

We fixed this for URLSession and NWConnection way back in 2015.

But most servers on the internet run on Linux and use BSD Sockets.

Contact your server operators to ensure that they are using the TCP not-sent low-water mark socket option (TCP_NOTSENT_LOWAT) to reduce the delays at the source.
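For readers who want to see what that socket option looks like in code, here is a hedged sketch using the BSD Sockets call `setsockopt` with the `TCP_NOTSENT_LOWAT` option. The helper name and the 16 KB threshold used in the usage note are assumptions of this example. A real server would set this in its own language and tune the value for its workload.

```swift
#if canImport(Darwin)
import Darwin
#else
import Glibc
#endif

/// Asks the kernel to report the socket as writable only when fewer than `bytes`
/// of unsent data remain queued, which keeps the send buffer short.
/// Returns true if the option was applied. The helper name is hypothetical.
func setNotSentLowWatermark(on socketDescriptor: Int32, bytes: Int32) -> Bool {
    var threshold = bytes
    let result = setsockopt(socketDescriptor,
                            Int32(IPPROTO_TCP),
                            TCP_NOTSENT_LOWAT,
                            &threshold,
                            socklen_t(MemoryLayout<Int32>.size))
    return result == 0
}
```

A server would call this on each connected TCP socket, for example `setNotSentLowWatermark(on: clientSocket, bytes: 16_384)`, where `clientSocket` is whatever descriptor `accept` returned.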

For your next steps, adopt the modern networking protocols to eliminate multiple round trips.

Use the background mode for prefetching assets, bulk transfers, and non-urgent tasks.

Test your app's performance in different network conditions.

Network Link Conditioner is an excellent tool for doing that.

Keeping network delays low improves the responsiveness of your app and enhances the overall user experience.

Thanks for watching and have a great WWDC!

9:28 - Fast open with TCP handshake

    /* Allow fast open on the connection parameters */
    parameters.allowFastOpen = true
    let connection = NWConnection(to: endpoint, using: parameters)

    /* Call send with idempotent initial data before starting the connection */
    connection.send(content: initialData, completion: .idempotent)
    connection.start(queue: myQueue)

11:01 - Sockets with fast open

    connectx(fd, ..., CONNECT_DATA_IDEMPOTENT | CONNECT_RESUME_ON_READ_WRITE, ...); // delay SYN
    write(fd, ...); // SYN goes out with first data segment

13:35 - Save round-trips when switching networks with Multipath TCP

    // Multipath TCP
    // Save multiple round-trips when switching networks

    // On URLSessionConfiguration
    let configuration = URLSessionConfiguration.default
    configuration.multipathServiceType = .interactive

    // On NWParameters
    let parameters = NWParameters.tcp
    parameters.multipathServiceType = .interactive

20:09 - Background service type, App in foreground

    // Use default URLSession, set background on URLRequest
    var request = URLRequest(url: myurl)
    request.networkServiceType = .background

    // Set service class on parameters to apply to the NWConnection
    let parameters = NWParameters.tls
    parameters.serviceClass = .background

20:10 - Time insensitive tasks running in background

    // Configure background URL Session
    lazy var urlSession: URLSession = {
        let configuration = URLSessionConfiguration.background(withIdentifier: "MySession")
        configuration.isDiscretionary = true
        return URLSession(configuration: configuration, delegate: self, delegateQueue: nil)
    }()