
Evaluate videos with the Advanced Video Quality Tool

Learn how the Advanced Video Quality Tool (AVQT) can help you accurately assess the perceptual quality of your compressed video files. Built on the AVFoundation framework, AVQT supports a wide range of video formats, codecs, resolutions, and frame rates in both the SDR and HDR domains. This makes for easy and efficient workflows: for example, you don't need to decode to a raw pixel format.

AVQT uses Metal to achieve high processing speeds by offloading heavy pixel-level computation to the GPU, typically analyzing videos faster than real-time video frame rates. With its ease of use and computational efficiency, AVQT can help you eliminate low-quality videos from your video catalog before they might otherwise reach people in your app.

♪ Bass music playing ♪

Pranav Sodhani: Hello and welcome to WWDC! My name is Pranav, and I am part of the Display and Color Technologies team at Apple.

In this talk, we will introduce a video quality tool and show how you can use it to evaluate the perceptual quality of compressed videos in your apps or content creation workflows.

All right. Let's start by looking at a typical video delivery workflow.

In such a workflow, the high-quality source video undergoes video compression and, optionally, video downscaling to generate videos with lower bit rates.

And these low-bit rate videos can then be easily delivered over bandwidth-constrained networks.

A few possible ways to build such a workflow include AVFoundation APIs such as AVAssetWriter, apps such as Compressor, or one of your own video compression workflows.

Now, downscaling and compression can add video coding and scaling artifacts.

And this will impair the source video and create visible artifacts.

One example of such an artifact is blockiness in the compressed video, which is shown in the frame on the right side.

Another example is when the video appears blurry and video details start disappearing.

Such artifacts can adversely affect a consumer's video quality experience.

We know that consumers are expecting a high-quality video experience.

So it's important to deliver on this expectation.

Now, the first step to do this is to evaluate the quality of your delivered content.

And the most accurate way of doing this is to have real people watch the videos and rate them on a video quality scale.

But this is very time consuming and not scalable if you want to evaluate a high volume of videos.

Fortunately, there's another way.

What we need here is an objective way to characterize video quality, so that we can automate the process for speed and scalability.

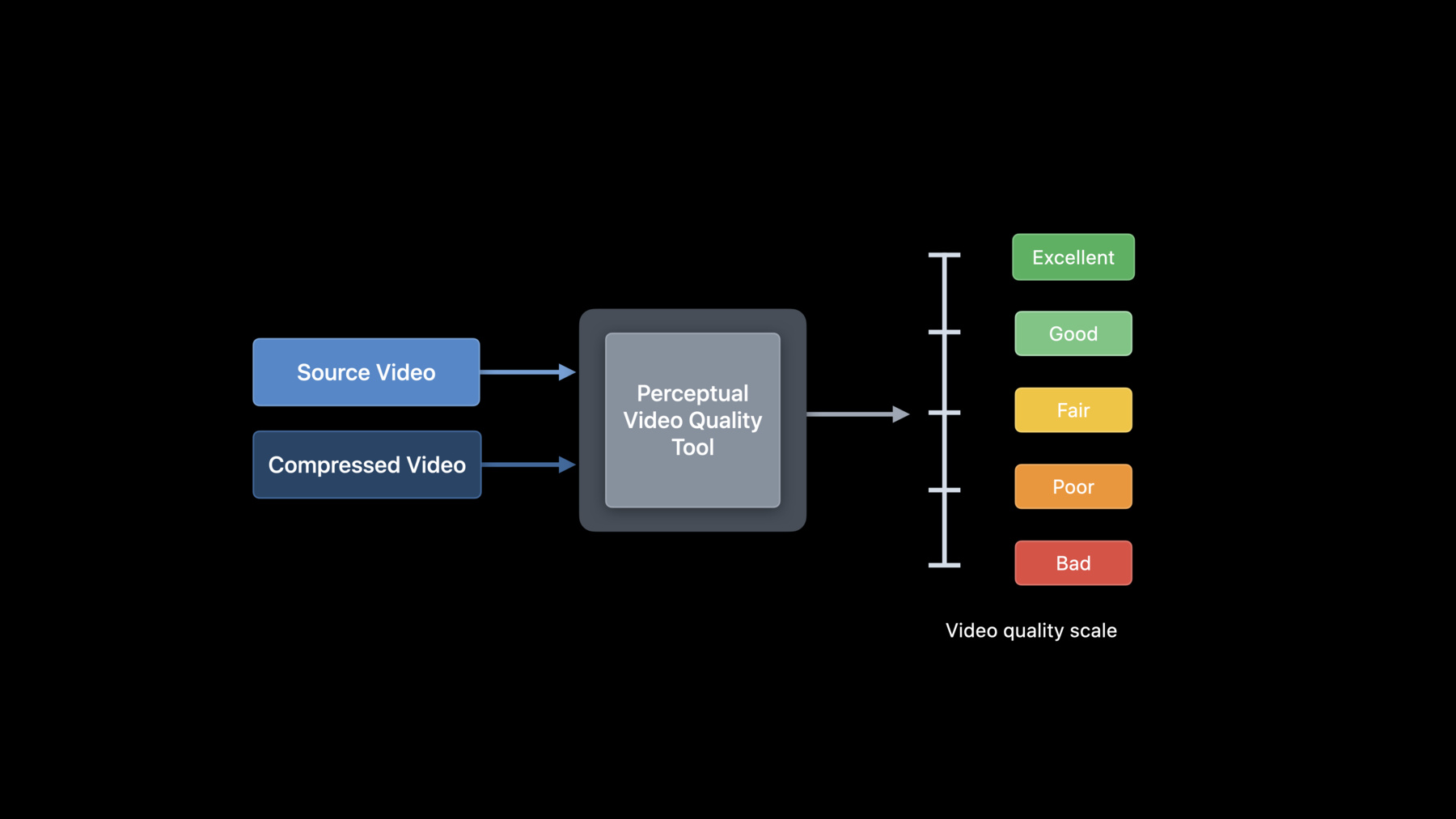

In such a setup, the perceptual video quality tool will take the compressed video and the source video as input and output a video quality score.

This score can be a floating point number in the range of one to five, and it mimics how real people would have rated the compressed video.

Today, we are very excited to enable our developers with such a perceptual video quality tool.

We are calling it the Advanced Video Quality Tool or AVQT in short.

Let's learn more about AVQT.

So what exactly is AVQT? Well, AVQT comes as a macOS command line executable.

And it attempts to mimic how real people rate quality of compressed videos.

You can use AVQT to compute both frame level and segment level scores, where a segment is typically a few seconds long.

And, of course, we have added support for all AVFoundation-based video formats in AVQT.

This includes SDR, as well as HDR video formats such as HDR10, HLG, and Dolby Vision.

Next, we want to discuss three key attributes of AVQT that make it very useful across applications.

First, we will see how well AVQT aligns with human perception.

Then we will talk about AVQT's computational speed.

And finally, we will show why viewing setup awareness is important when predicting video quality.

Let's get into details for each of these.

AVQT correlates well with human opinions on video quality.

And it works well across content types, such as animation, natural scenes, or sports.

We have found that traditional video quality metrics such as PSNR and structural similarity -- or SSIM in short -- typically do not work very well across content types.

Let's look at one example.

Now, this is a frame from a high-quality sports clip, which is our first source video.

Let's look at the same frame in the compressed video.

You can see that the frame does appear to be of sufficiently high perceptual quality.

Rightly so, it gets a PSNR score of around 35 and an AVQT score of 4.4.

Next, we go with the same exercise for our second source video.

The compressed video in this case seems to have visible artifacts.

In particular, you can see some artifacts on the face of the person.

Interestingly, it gets the same PSNR score of around 35, but this time AVQT rates it around 2.5, implying poor quality.

We think the AVQT score is the correct prediction here.

Note that this is just one example we have picked to illustrate what can go wrong in cross-content evaluations.
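To see why a pixel-error metric can give the same number to clips that look very different, here is a minimal sketch of the standard PSNR formula. The pixel values are made up for illustration, and the flat lists stand in for real frames; nothing here comes from AVQT itself.

```python
import math

def psnr(reference, test, max_value=255):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    return 10 * math.log10(max_value ** 2 / mse)

# Made-up 16-pixel "frames" (illustrative only).
reference = [100] * 16
spread_error = [102] * 16                 # every pixel off by 2
concentrated_error = [108] + [100] * 15   # a single pixel off by 8

# Both distortions have the same mean squared error, so PSNR cannot tell them apart.
print(f"spread:       {psnr(reference, spread_error):.2f} dB")
print(f"concentrated: {psnr(reference, concentrated_error):.2f} dB")
```

Because PSNR only looks at average pixel error, where the error lands in the picture does not change the score, even though a viewer may notice one kind of distortion far more than the other.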

We wanted to test AVQT's perceptual accuracy on a diverse set of videos.

So we evaluated it on publicly available video quality datasets.

These datasets include source videos, compressed videos, and video quality scores provided by human subjects.

Here, we will show you the results on two datasets: Waterloo IVC 4K and VQEG HD3.

The Waterloo IVC dataset includes 20 source videos and 480 compressed videos, spanning both coding and scaling artifacts.

It covers four different video resolutions and two different video standards.

The VQEG HD3 dataset is relatively smaller.

It has nine source videos and 72 compressed videos.

And these were generated using video coding at 1080p video resolution.

And to measure performance of a video quality metric objectively, we make use of correlation and distance measures.

Pearson Correlation Coefficient, or PCC in short, measures how well the predicted scores correlate with subjective scores.

A higher PCC value implies better correlation.

And RMSE measures how far off the predictions are from subjective scores.

A lower RMSE value would imply higher prediction accuracy.
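As a rough sketch of these two measures, the snippet below computes PCC and RMSE in plain Python. The score lists are invented for illustration and do not come from either dataset.

```python
import math

def pearson_correlation(predicted, subjective):
    """Pearson Correlation Coefficient (PCC) between two equal-length score lists."""
    n = len(predicted)
    mean_p = sum(predicted) / n
    mean_s = sum(subjective) / n
    cov = sum((p - mean_p) * (s - mean_s) for p, s in zip(predicted, subjective))
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    var_s = sum((s - mean_s) ** 2 for s in subjective)
    return cov / math.sqrt(var_p * var_s)

def rmse(predicted, subjective):
    """Root mean squared error between predicted and subjective scores."""
    n = len(predicted)
    return math.sqrt(sum((p - s) ** 2 for p, s in zip(predicted, subjective)) / n)

# Invented scores on a one-to-five scale (illustrative only).
subjective_scores = [1.8, 2.5, 3.1, 4.0, 4.6]
predicted_scores = [2.0, 2.4, 3.3, 3.9, 4.5]

pcc = pearson_correlation(predicted_scores, subjective_scores)
error = rmse(predicted_scores, subjective_scores)
print(f"PCC = {pcc:.3f}, RMSE = {error:.3f}")
```

A PCC close to 1 together with a small RMSE means the predictions both follow the trend of the human ratings and land near their actual values.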

Now, we want to evaluate how well AVQT can predict the scores given by human subjects.

On the x-axis here, we have the ground truth subjective video quality scores.

And on the y-axis, we have scores predicted by AVQT.

Every point here represents a compressed video.

As you can see from the scatter plot, except for a few outliers, AVQT does a great job in predicting the subjective scores for this dataset.

This is also reflected in the high PCC and low RMSE scores.

And we see a high performance on the VQEG HD3 dataset as well.

Let's move on and talk about AVQT's computational speed.

Note that high computational speed is quite important to ensure scalability.

AVQT's algorithm is designed and optimized to run fast on Metal.

This implies that you can run through large video files very quickly.

It also takes care of all preprocessing natively.

For you, this means you don't have to decode your videos and scale them offline.

AVQT does that for you.

You should note that AVQT runs through a 1080p video at 175 frames per second.

So if you have a 10-minute long 1080p video at 24 Hz, AVQT can compute its quality in under 1.5 minutes.
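The arithmetic behind that estimate can be checked with a short sketch. The function name is hypothetical, and the 175 frames per second figure is simply the number quoted above; actual throughput will depend on your hardware and content.

```python
def analysis_time_seconds(duration_s, video_fps, processing_fps):
    """Estimate processing time from the frame count and the processing rate."""
    total_frames = duration_s * video_fps
    return total_frames / processing_fps

# A 10-minute clip at 24 frames per second, processed at 175 frames per second.
seconds = analysis_time_seconds(duration_s=10 * 60, video_fps=24, processing_fps=175)
print(f"{seconds:.1f} s (about {seconds / 60:.2f} min)")
```

The clip has 14,400 frames, so at the quoted rate the analysis finishes in a little over 82 seconds, which is under the 1.5-minute mark.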

The final attribute we want to talk about is viewing setup awareness.

Your viewing setup can affect the video quality you perceive when watching a video.

In particular, factors such as display size, display resolution, and viewing distance can mask or exaggerate artifacts in the video.

To address this, AVQT takes these parameters as input to the tool and then attempts to predict the right trend as these factors change.

Let's take a look at one such case.

Consider two scenarios.

In scenario A, you're watching a 4K video on a 4K display at a viewing distance of 1.5 times the height of the screen.

In scenario B, you are watching the same video on the same display but now at a viewing distance which is three times the height of the screen.

Clearly, in scenario B you will miss out on some of the artifacts which were visible when you were watching it closely.

This implies that your perceived video quality in scenario B will be higher than in scenario A.

And AVQT can capture such trends at different quality levels.

The chart here shows that as viewing distance increases from 1.5H to 3H, AVQT scores increase as well.

We recommend you go over the README document available with the tool for more information on this.
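One way to build intuition for the two scenarios is to compute how many pixels fit into one degree of visual angle at each viewing distance. This is a general geometric sketch, not a description of how AVQT models the viewing setup; the function name is hypothetical, and a 4K display is assumed to have 2160 rows of pixels.

```python
import math

def pixels_per_degree(vertical_pixels, distance_in_heights):
    """Pixels per degree of visual angle for a viewer at `distance_in_heights`
    times the screen height, looking at the center of the display."""
    # One pixel has height H / vertical_pixels; the viewer sits at distance_in_heights * H.
    # H cancels out, so only the ratio matters.
    pixel_angle_rad = 2 * math.atan(1 / (2 * vertical_pixels * distance_in_heights))
    return 1 / math.degrees(pixel_angle_rad)

ppd_a = pixels_per_degree(vertical_pixels=2160, distance_in_heights=1.5)  # scenario A
ppd_b = pixels_per_degree(vertical_pixels=2160, distance_in_heights=3.0)  # scenario B
print(f"Scenario A: {ppd_a:.1f} pixels per degree")
print(f"Scenario B: {ppd_b:.1f} pixels per degree")
```

Doubling the viewing distance roughly doubles the number of pixels packed into each degree of vision, which is consistent with fine artifacts becoming harder to see in scenario B.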

Well, now that we have all of you excited about AVQT, let me show how you can use the tool the right way.

We will be making AVQT available to all of you soon via the Developer downloads portal.

Let me walk you through a demo.

So I have already downloaded the tool and installed AVQT on this system.

If you look at the output of "which AVQT", you can see AVQT is placed in the /usr/local/bin directory.

Now, you can invoke the AVQT help command to read more about the usage of the different flags supported by AVQT.

Let's see what I have in my current directory.

I have a sample reference and a sample compressed video that I will use for the demo.

So let me run AVQT through them.

We will provide the reference and test files as input and specify an output file.

Let's name the output file sample_output.csv.
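As a sketch of what such an invocation might look like, the snippet below assembles the command line without running it. The flag spellings (`--reference`, `--test`, `--output`) and the two input file names are assumptions, since the talk does not spell them out; check the tool's help output and README for the exact usage.

```python
import shlex

# Assumed file names for the demo inputs (hypothetical); the output name is from the talk.
reference_video = "sample_reference.mov"
test_video = "sample_compressed.mov"
output_csv = "sample_output.csv"

# Assumed flag spellings; verify against the AVQT help output before use.
command = [
    "AVQT",
    "--reference", reference_video,
    "--test", test_video,
    "--output", output_csv,
]

# Print the command as it would be typed in a shell.
print(shlex.join(command))
```

Building the command as a list keeps file names with spaces safe if you later hand it to `subprocess.run` in a batch script.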

The tool prints the progress and reports the segment scores on the screen.

The default segment duration is six seconds and since this clip is five seconds long, we have only one segment.

Next, let's look at the output file.

You can see the frame level scores here.

And, finally, we have the segment level scores towards the bottom.

Now, in the demo, we showed a few options, but the tool has several other features built into it as well.

For example, you can use the segment-duration and the temporal-pooling flags to change the way frame level scores are aggregated.

Similarly, you can specify the viewing setup using the viewing-distance and display-resolution flags.

Please refer to the README for more details on this.
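To illustrate how segment duration and pooling shape the segment level scores, here is a minimal sketch that groups frame scores into fixed-length segments and pools each one. The mean is used as the default pooling method purely as an assumption, and the function name, frame rate, and scores are all hypothetical; the README describes what the tool actually does.

```python
def pool_segments(frame_scores, fps, segment_duration_s=6.0, pool=None):
    """Split frame scores into segments and reduce each segment to one score."""
    if pool is None:
        pool = lambda scores: sum(scores) / len(scores)  # assumed default: mean pooling
    frames_per_segment = max(1, round(fps * segment_duration_s))
    return [
        pool(frame_scores[start:start + frames_per_segment])
        for start in range(0, len(frame_scores), frames_per_segment)
    ]

# A made-up five-second clip at 24 frames per second: 120 frame scores.
frame_scores = [4.0] * 60 + [3.0] * 60

one_segment = pool_segments(frame_scores, fps=24)  # six-second segments
short_segments = pool_segments(frame_scores, fps=24, segment_duration_s=2.0)
worst_case = pool_segments(frame_scores, fps=24, pool=min)  # pessimistic pooling

print(one_segment, short_segments, worst_case)
```

With six-second segments the five-second clip yields a single score, as in the demo, while shorter segments or a different pooling function expose how quality varies over time.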

All right.

So far we have learned about some of the key attributes of AVQT.

We have also seen how to use the command line tool on a pair of videos to generate video quality scores.

Now let's take a look at a specific case where you can use AVQT to optimize bit rates for HLS.

HLS tiers are encoded at different bit rates.

And we know that choosing these bit rates is not always an easy process.

To help with this, we have published some bit rate guidelines in the HLS Authoring Specification document.

Please note that these bit rates are only initial encoding targets for typical content delivered via HLS.

We also know that different content has different encoding complexity, which implies that the optimal bit rate varies across different content.

So the bit rate that works well for one type of content -- for example, an animation movie -- might not work well for a sporting event.

Here's how you can use AVQT as feedback to help you determine the optimal bit rate for your content.

First, we start with our initial target bit rates.

We use this bit rate to encode our source video and create the HLS tier.

We then use the source video and the encoded HLS tier to compute video quality scores using AVQT.

Finally, we can analyze AVQT scores to decide if we want to increase or decrease the target bit rate for the HLS tier.
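The feedback loop described here can be sketched as a small function. Everything in it is illustrative: `score_at` is a hypothetical stand-in for "encode the tier at this bit rate and score it with AVQT", the toy scoring models are invented, and this version only ever raises the bit rate, whereas in practice you might also lower it.

```python
def tune_bitrate(initial_mbps, score_at, threshold=4.5, step=0.10, max_rounds=10):
    """Raise the target bit rate by `step` per round until the score meets `threshold`.

    `score_at` is a hypothetical callable that returns a quality score
    for a tier encoded at the given bit rate (in megabits per second).
    """
    bitrate = initial_mbps
    for _ in range(max_rounds):
        score = score_at(bitrate)
        if score >= threshold:
            return bitrate, score
        bitrate *= 1 + step  # not good enough yet: raise the target and retry
    return bitrate, score_at(bitrate)

# Invented scoring models standing in for real encode-and-score runs.
def toy_animation_score(mbps):
    return min(5.0, 3.6 + 0.1 * mbps)

def toy_sports_score(mbps):
    return min(5.0, 3.3 + 0.1 * mbps)

print(tune_bitrate(11.6, toy_animation_score))
print(tune_bitrate(11.6, toy_sports_score))
```

In this toy setup the animation clip passes at the initial target, while the sports clip needs one increase before it clears the threshold.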

To demonstrate this with an example, let's pick a specific HLS tier.

Here we are choosing the 2160p tier at 11.6 megabits per second.

We will then encode our previous two sequences -- animation and sports -- with this recommended bit rate.

Once we have the encoded tiers ready, we will use AVQT to compute their video quality scores.

The chart here shows AVQT scores for the two video sequences.

For this particular tier, we can expect a high video quality, so we are setting the threshold to be 4.5, indicating close-to-excellent quality.

You can see that while this bit rate is good enough for this animation clip, it is not sufficient for the sports clip.

So we go back and use this feedback to adapt our bit rate target.

In particular, we need to increase our bit rate target for the sports clip and recompute its AVQT score.

Let's aim for a 10-percent increase in bit rate.

Here we have plotted our new AVQT scores for the sports clip.

The updated scores now lie above our expected threshold of four and a half.

And it also resembles video quality of the animation content much more closely.

To wrap up, here are a few important things that we want you to take away from this talk.

Video compression can lead to visible artifacts, which affect consumers' video quality experience.

You can evaluate the quality of your compressed videos using AVQT.

AVQT comes as a macOS command line tool, is quick to compute, and is viewing setup aware.

It also has support for all AVFoundation-based video formats.

Finally, you can use AVQT to optimize the video quality of your HLS tiers.

Thank you very much! ♪
