What's new in USD

Discover proposed schema and structure updates to the Universal Scene Description (USD) standard. Learn how you can use Reality Composer to build AR content with interactive properties like anchoring, physics, behaviors, 3D text, and spatial audio that exports to USDZ. And, discover streamlined workflows that help you bring newly-created objects into your app.

If you're interested in learning more about USDZ as a distribution format, check out "Working with USD." And for more on creating AR content with Reality Composer, watch "The Artist's AR Toolkit."

We'd love to hear feedback about the preliminary schemas. After you watch this session, come join us on the Developer Forums and share your thoughts.

Hello and welcome to WWDC.

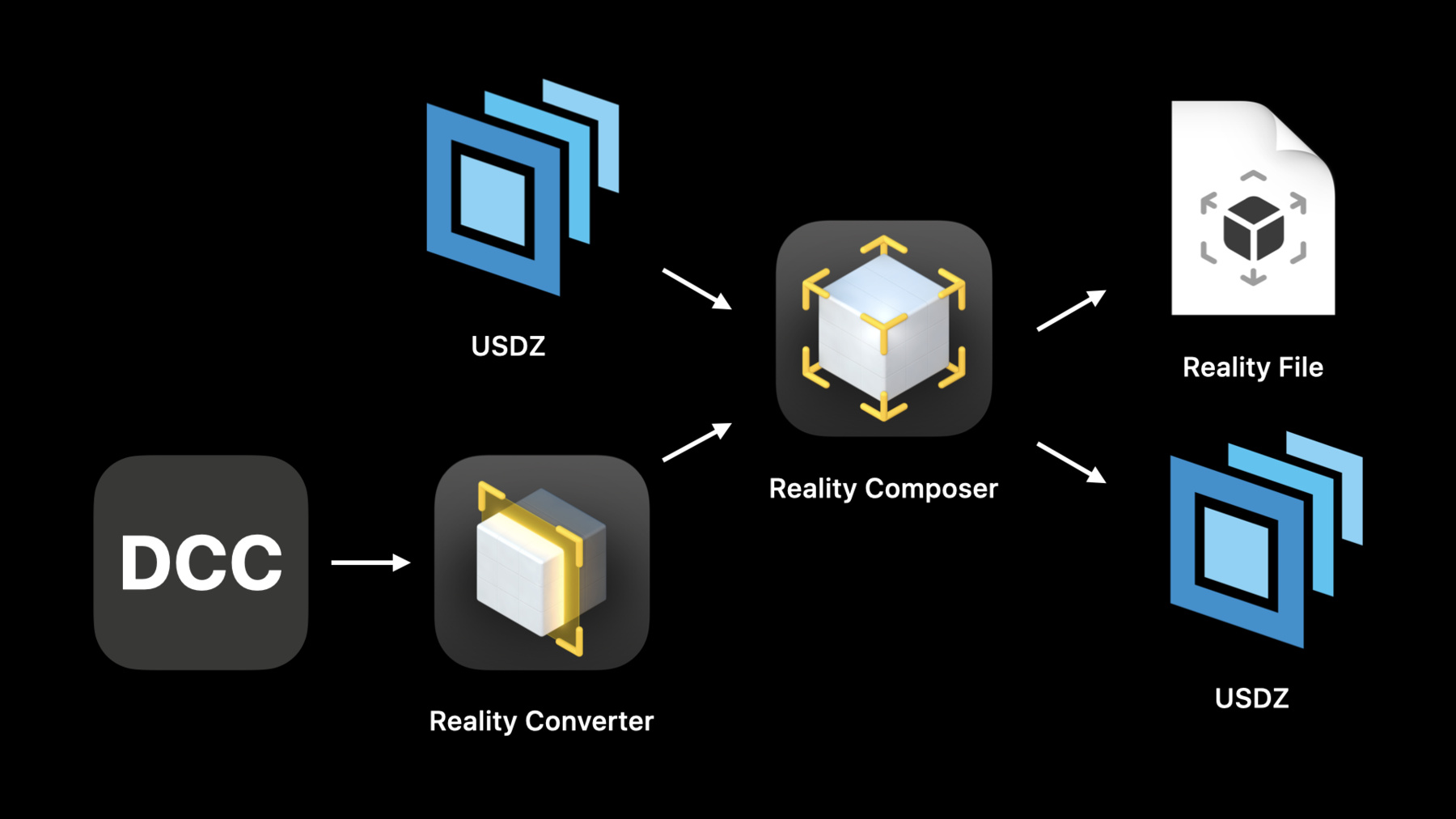

Hello everyone. My name is Abhi and I'm a software engineer here at Apple. Thanks for joining our session today. We'll take a look at USD as it's used around the world, new workflows enabled by Reality Composer's new USD export functionality, and then take a peek behind the curtains at the new AR USD schemas helping make these workflows possible.

Last year we introduced Reality Composer for macOS and iOS with the goal of making interactive AR content creation intuitive and easy for developers. Reality Composer allows you to import your own usdz content or get started with a great built-in library of static and procedural assets, and bring them to life with interactions, physics, anchoring and more. This year we've made it even easier to bring content from a digital content creation tool, or DCC, into Reality Composer with the introduction of Reality Converter. We've also added support to export content from Reality Composer as a usdz, which enables new workflows between Reality Composer and a variety of DCCs. To make this possible, we've worked in collaboration with Pixar to develop new preliminary AR USD schemas, which we'll take a look at in depth later in the session.

For those unfamiliar with usdz, it is the compact, single-file distribution format for USD. It is optimized for sharing and is deeply integrated into iOS, macOS and tvOS, and applications like Messages, Files, Safari and more.

If you're interested in learning more about usdz as a distribution format, including more about its underlying technology USD and relevant concepts such as schemas, composition, the stage, prims and properties, we encourage you to check out last year's session, Working with USD, in the related talks section.

Over even just the last year we've seen exciting growth in the adoption of USD and the usdz file format. USD is being used everywhere from films to gaming to AR to DCCs. A few examples include Pixar, which uses USD for its films, and Maya, Houdini, Unity, Unreal Engine and Adobe Aero, which support USD interchange as export, import, or both.

So why USD? Let's take a high-level look at other formats out there today. The most basic format is .obj, which essentially contains a single 3D model. It has limited material support and no support for animations. Then there's a large group of more modern file formats. They usually support multiple models that can be laid out in a scene graph, and usually support material definitions and animations. USD supports all of this and is additionally designed to be scalable. Pixar developed USD for use in its films. USD also allows for collaboration, letting many artists work on a single scene without getting in each other's way. usdz is the archive package; it inherits most of these features and is optimized for sharing.

Next we'll take a look at new workflows enabled by Reality Composer's new usdz export functionality and then take a peek behind the curtains at the new AR USD schemas that make this possible. When Reality Composer launched last year, it supported the import of usdz content and the export to Reality File. This year we've expanded the artist workflow with the introduction of Reality Converter, which makes it easy to convert content from DCCs to usdz for import and use in Reality Composer. New this year as well is the ability to export content created in Reality Composer to usdz. This not only enables new artist workflows between Reality Composer and DCCs, but also creates an ecosystem of content creation tools all speaking the same language: USD.

So for example, we can start by creating custom content in a DCC, use Reality Converter to convert it to a usdz, and import it into Reality Composer. Or we could import a usdz we found online or exported from another application directly into Reality Composer. And the third option is to start with any of the great built-in content inside the asset library. Next, we can add functionality specific to Reality Composer, such as interactions or physics to bring our content to life, and anchoring to help place it in the world. We can then export our creation as a Reality File or as a usdz.

Last year, with the export of only Reality Files, we would have been able to share our content online with family and friends, and view it in AR Quick Look or in an application. However, this is where our content creation story would have ended. This year the journey continues. We can take our usdz and continue making edits in any DCC that supports the usdz file format. For example, we could scatter our content in Houdini, export it, pick it up in Maya, make a few more edits, export it again and bring it back into Reality Composer to make a few final additions. We've designed the new AR USD schemas, which we'll take a closer look at later in the session, so that they are compatible with DCCs and viewers that haven't yet adopted them, allowing you to make edits without losing information and view content as accurately as possible. So let's take a look at an artist workflow.

So here I'm in Reality Composer and I've brought in some usdz assets from a variety of different sources. For example, here we have a plane and a toy car from the AR Quick Look gallery available online. I've also worked with artists to create some really nice assets, including the sun asset and this cloud asset. And finally I've also worked with our artists to create a nice wooden flag asset that fits well with the rest of our content. We've already brought it through Reality Composer and added a few behaviors. So when I tap on this flag, we'll see that it performs a behavior and then displays some additional content. So let's go ahead and preview that. Again, this is a single USD, so we'll see our content move and additional content show up. So that looks pretty awesome.

What I want to do in this demo now is take all of my usdz assets, export them, bring them into Houdini, and make them race against each other to the beat of some music that I made in GarageBand. So the first thing we'll want to do is export this content to usdz, and we can do that by first going into our Preferences.

And selecting Enable usdz export. Now when we go to export our content we'll see two different options: the usdz option and our existing Reality File export option. Let's go ahead and export to usdz. I've already done this and brought it into Houdini, so let's jump over to our pre-baked scene.

So here we have our usdz assets in Houdini. Now we can see our toy car and our toy plane. I've worked with my artists to position them to create this racing scene, so we can see we've inserted some of our road assets and placed them along the track. And we've also animated all of our content to some additional music that I made in GarageBand before. We can see the full setup of our scene right here in the Houdini editor, and we can also get a preview of our content right here. We'll see that in our scene I've also placed our flag asset. Houdini doesn't yet understand the behavior schema, so we won't actually see our flag animate and additional content show up. However, it's still an asset, so we can still place it. When I go to export my content, these behaviors are still inside of the usdz, so if we bring it back into Reality Composer or another RealityKit-based application, we should see our behaviors show up again.

So this is looking pretty good. What I want to do next is export my content so I can bring it back into Reality Composer and add a few final behaviors, like play animation and some audio. So let's go ahead and export content from Houdini. I've already done that and brought it into Reality Composer, so let's jump over to our final scene.

So here in Reality Composer we have our baked asset from Houdini. We can see it comes in as one big asset together. So we have our instanced road, our toy car and our toy plane, and some instanced clouds as well. You'll notice that it has a behavior. I've already gone ahead and set up a couple of behaviors here, specifically a tap trigger, a usdz animation action and a play music action. The usdz animation targets our baked scene, and will play the animation that we've built in Houdini, which moves the plane and the car to the beat of some music. So let's go ahead and preview our scene. We can see that when I tap on the flag, our original behavior comes through. It's gone all the way through Houdini and now comes back in Reality Composer.

And now we'll see our content animate and hear some audio.

That looks pretty sweet. I think I'm ready to export this now as usdz and bring it right into my RealityKit application and publish it to the App Store. So we've seen how we can start with some content in Reality Composer that we've brought in from various sources, including the AR Quick Look gallery and other DCCs. And we saw how, for example, the flag asset kept the behaviors that we gave it in Reality Composer through a full import, export and import flow between Reality Composer, Houdini and again Reality Composer.

So that's a look at the new workflows and usdz-based content creation ecosystem enabled by Reality Composer's new usdz export functionality. Next let's take a closer look behind the scenes at the new AR USD schemas making the export of Reality Composer content to USD possible. Reality Composer enables you to create many different kinds of experiences with features like scenes, AR anchoring, interactions, physics, 3D text, spatial audio and more. We've worked in collaboration with Pixar to create new preliminary schemas and structures for all of this, to enable the export of Reality Composer content to usdz. As a reminder, schemas are USD's extension mechanism, allowing you to specify new types in the library. In this section we want to give you an overview of these new schemas so you can gain an intuition for their design and adopt them in your own content or editor applications. We encourage you to also check out our in-depth documentation available on the Developer site for more information and examples.

So let's start with scenes. Scenes are a fundamental part of Reality Composer. A single scene can contain multiple models and specify scene-wide properties such as gravity, ground plane materials, and where in the world your content should be anchored. You can create multiple scenes in a single Reality Composer project and load each individually in an application, or stitch them together with the change scene action to create a larger overall experience.

The scene structure in USD defines multiple scenes in a single USD file, with scenes being targetable by behaviors. You can also load a scene by name in a RealityKit-based application, just like you can for a Reality File.

Let's take a look at the scene structure in a USD. Here we're taking a look at the plain-text version of USD, known as USDA, for readability. Scenes are structured under a scene library, which is specified using a new type of kind metadata called sceneLibrary on the root prim. Each Xformable prim under this root prim is considered a scene, and it can contain its own tree of prims defining meshes, materials, behaviors, anchoring and more, just like in a single-scene USD. Scenes can be marked active by using the def specifier and inactive by using the over specifier. This also allows DCCs and viewers that haven't yet adopted the scene structure to still be able to view all active scenes. So if we swap the def and over for our scenes, we'll now see the sphere instead of the cube. In addition, each scene can be given a readable name. This name can be used to load a particular scene from the usdz into a RealityKit-based application, just like you would for a Reality File. Note that RealityKit, AR Quick Look and Reality Composer only support a single active scene in a scene library and don't yet support nested scenes.
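As a sketch of the swap described above, the variant below marks the sphere scene active and the cube scene inactive. The prim names and `sceneName` values are taken from the session's scene structure listing; the empty scene bodies are placeholders, and the comments are editorial rather than part of the session's material.

```usda
#usda 1.0

def Xform "Root" (
    kind = "sceneLibrary"
)
{
    # Inactive scene: "over" only carries opinions and does not define the prim.
    over Cube "MyCubeScene" (
        sceneName = "My Cube Scene"
    )
    {
    }

    # Active scene: "def" defines the prim, so viewers show the sphere.
    def Sphere "MySphereScene" (
        sceneName = "My Sphere Scene"
    )
    {
    }
}
```

Because only one scene is defined with `def`, a viewer that knows nothing about scene libraries should still display just the sphere.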

Next let's take a look at anchoring. Anchoring helps specify where content should anchor in the real world. For example, one scene can anchor to a horizontal plane like a table or a floor and another scene can anchor to and follow an image or a face. The AR anchoring schema is an applied schema that supports specifying the horizontal plane, vertical plane, image and face anchoring types. Note that AR object and geo location anchors aren't yet supported in USD.
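For the horizontal plane case mentioned above, a minimal sketch might look like the following. The `Preliminary_AnchoringAPI` schema name and the `preliminary:anchoring:type` property appear in the session's image anchoring listing; the `"plane"` token, the `preliminary:planeAnchoring:alignment` property and its `"horizontal"` value are assumptions here and should be checked against the preliminary schema documentation. The prim name is hypothetical.

```usda
#usda 1.0

def Cube "PlaneAnchoredCube" (
    prepend apiSchemas = ["Preliminary_AnchoringAPI"]
)
{
    # Assumed token: anchor to a detected plane rather than an image or face.
    uniform token preliminary:anchoring:type = "plane"
    # Assumed property and value: restrict anchoring to horizontal surfaces
    # such as a table or a floor.
    uniform token preliminary:planeAnchoring:alignment = "horizontal"
}
```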

Let's take a look at how to add anchoring information to a USD. Here we have a basic cube in our USD. We want to anchor this cube to an image in the real world. We can do this by first applying the anchoring schema to the prim, and then specifying the anchoring type. In this case, because we're aligning our content to an image, we use the image type and then specify a related image reference prim. The image reference prim contains a reference to the image, which can be a JPEG or a PNG as outlined in the usdz specification, and then defines its physical width. This property is defined in centimeters to avoid unit changes due to composition or edits made by a DCC that hasn't yet adopted the schema.

Next let's take a look at behaviors. Behaviors in Reality Composer allow you to easily bring your 3D content to life.

A behavior in Reality Composer contains a single trigger, such as a tap, collision or proximity event, that can target multiple objects, and can contain one or more actions targeting multiple objects, such as emphasize, play audio and add force. Here we have an example of a tap trigger with a bounce action.

The behavior schema follows the same structure but also pulls back the curtain and allows for more complex nesting and composition of triggers and actions. The schema defines only three new prim types: a behavior, a trigger, and an action. Specific triggers and actions are defined with data schemas, similar to UsdPreviewSurface, which allows the behavior schema to be much more flexible beyond the initial triggers and actions we've added support for this year.

Let's add a behavior to our scene. Here, we've defined a single behavior defining a tap trigger and a bounce action, as seen in the video in the previous slide. The behavior contains an array of trigger relationships and another array of action relationships. Nested in the behavior we've defined a trigger, which starts the behavior. The trigger defines itself as a tap trigger using the info:id property. The objects that are observed for tap events, which invoke this trigger, are defined with the affectedObjects property; in this case, our tap trigger targets our cube prim from the previous examples. We also have a nested action, and have related our behavior's triggers and actions properties to their object paths. The action defines itself as a bounce action using the info:id and motionType properties. The motionType property is defined for the emphasize action in the behavior data schema, and the bounce action's target is defined with the affectedObjects property, which again is our cube. Together with the trigger, we've defined a behavior that bounces the cube when it is tapped.

In the previous example we only had one trigger and one action for this behavior, but multiple triggers and multiple actions can be related to these properties. When multiple triggers are defined, the satisfaction of any of them will invoke the related actions. When multiple actions are defined, they each run serially, one after the other. We have also encoded the concept of group actions in our action data schema, which allows for serial or parallel execution of related actions. Also, behaviors are automatically loaded as part of the scene. If behaviors are defined in a multi-scene USD, they'll be scoped to the scene in which they're defined. In this example we have three behaviors which are all loaded as part of the My Cube Scene.
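To illustrate the scoping rule, here is a minimal sketch that nests a single tap-and-bounce behavior inside one scene of a scene library. The schema names, `info:id` and `motionType` tokens, and relationship names are copied from the session's listings; wrapping the cube in an `Xform` scene and the resulting prim paths are assumptions made for this example.

```usda
#usda 1.0

def Xform "Root" (
    kind = "sceneLibrary"
)
{
    def Xform "MyCubeScene" (
        sceneName = "My Cube Scene"
    )
    {
        def Cube "Cube"
        {
        }

        # Defined under the scene prim, so it is scoped to and loaded with
        # this scene only.
        def Preliminary_Behavior "TapAndBounce"
        {
            rel triggers = [ <Tap> ]
            rel actions = [ <Bounce> ]

            def Preliminary_Trigger "Tap"
            {
                uniform token info:id = "tap"
                rel affectedObjects = [ </Root/MyCubeScene/Cube> ]
            }

            def Preliminary_Action "Bounce"
            {
                uniform token info:id = "emphasize"
                uniform token motionType = "bounce"
                rel affectedObjects = [ </Root/MyCubeScene/Cube> ]
            }
        }
    }
}
```

Adding more entries to `triggers` would make any one of them start the behavior, while extra entries in `actions` would run one after the other, as described above.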

Next let's take a look at physics. Physics in Reality Composer helps make your AR content feel at home in the real world. In Reality Composer you can define the physical properties of an object, such as its physics material (like rubber, plastic or wood), its collision shape (such as a box or a sphere) and its motion type, as well as scene-level physical properties like the ground plane material and gravity. The physics schema allows you to set up a physics rigid body simulation. It does this with schemas for the physics material, colliders, rigid bodies and forces, specifically gravity.

Here we have a wooden ball that we want to make participate in the physics simulation as a dynamic object. To achieve that we're applying the Collider API and the Rigid Body API to our prim. We're using the prim's own geometry in this case to define its convex collider shape. This property comes from the Collider API. We're then giving our wooden ball a mass of 10 kg. This property uses kilograms to avoid unintended scaling due to composition, similar to the Anchoring API.

Next we can apply a wood physics material to our wooden ball. Here we're defining a wooden material. The physics material schema is an applied schema, so we first apply it to our wood material prim. We've opted for an applied schema so that these properties can be applied to an existing material in the scene without having to create a brand new prim. We then define various properties of the material, such as its restitution and its friction. We can then apply the material to our wooden sphere.

Next let's make sure our content doesn't fall into infinity by adding a ground plane collider to our scene. We can do this by specifying an infinite collider plane and then marking it as the scene ground plane.

For maximum compatibility we're putting this into the custom data dictionary for the prim so that older versions of USD that do not have this registered can still open the file.

We can then specify the plane's position and normal. And we can also apply a material to our plane. In this case let's reuse our wood material from earlier. Let's take a look at the scene we've just created. Finally let's define the gravity in our scene. For fun, let's put our wooden ball on the moon. We can do this simply by creating a prim in our scene with the gravitational force type. We recommend that there only be one gravitational force per scene. We can define the gravitational force in stage units per second using a vector. Together we have a scene that looks like this.

Next let's take a look at audio. Audio in Reality Composer is driven by behaviors, specifically the play audio action. This allows audio to be played at the start of a scene or after a supported event such as the tap of an object. The audio schema, which is distinct from the behavior schema, allows for the embedding of audio content in a USD. Audio specified in this way will be played back alongside the stage's animation track and can be configured with various playback options such as aural mode, playback offset and volume. When a USD containing the audio schema is brought into Reality Composer, its audio will play alongside the USD animation. This can be invoked with the USD animation action, which now supports audio controls as well. If you're working with an editor that doesn't yet support the spatial audio schema, you can use the usdz Python tool available on the Developer website to add it to your USD.

Let's take a look at the audio schema in a USD. Here we have a model that specifies audio to be played back alongside the animation in the USD. First we're defining a brand new prim type named SpatialAudio. We specify the audio file itself using the filePath property. We can also specify the aural mode, which is how the audio will be played back. Spatial audio will emit audio from a specific transform, and non-spatial audio will play the audio without taking the transform into account. In addition, you can set the start time and media offset of your content, which will begin your audio clip at a specific point and after a specific time, respectively. Since the spatial audio prim inherits from the Xform prim schema, it can be placed in space. By default it will inherit the transform of its parent. However, we can set our own local transform to offset our audio and play it from a specific location. In this case we want to play our horse neigh from the horse's mouth. Next let's take a look at 3D text.
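Before moving on, here is a minimal sketch of the non-spatial case described in the audio discussion. The `SpatialAudio` prim type and the `filePath` and `auralMode` properties come from the session's spatial audio listing; the `"nonSpatial"` token is the value used by USD's own `SpatialAudio` schema and is assumed to apply here as well. The prim name and asset file name are hypothetical.

```usda
#usda 1.0

def SpatialAudio "BackgroundMusic"
{
    # Hypothetical asset name, for illustration only.
    uniform asset filePath = @Music.m4a@
    # Non-spatial playback does not take the prim's transform into account,
    # so no xformOp is authored here.
    uniform token auralMode = "nonSpatial"
}
```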
3D text in Reality Composer allows for the addition of readable content in the scene. Text can be configured with a variety of system fonts and weights, and additional options such as alignment, depth, bounding volume, wrap mode and more. The 3D text schema defines all of these properties and is a typed schema. Let's take a look at the text schema in USD. Here we are defining text with the content #WWDC20, with the font Helvetica and a fallback font of Arial. If we choose a different main font, our system will use that if it has it. We can also define additional properties such as the wrap mode and alignments.

Finally let's take a look at the new metadata options. These options allow for the specification of a playback mode, whether the animation and audio automatically play or are paused upon load, and scene understanding metadata that specifies whether a scene's content interacts with the real world as generated by the new scene understanding feature in RealityKit and ARKit on the new iPad Pro with LiDAR Scanner. This allows objects to not only fall on content in your scene but also interact with real-world objects in your environment.

Let's take a look at the new playback metadata in a USD. Here we have a USD with an animation and are specifying that it should loop and not start automatically. This is a hint to a viewer that it should display a play button so the user can initiate the animation. Autoplay is also disabled for all content coming out of Reality Composer so that it can be driven explicitly by behaviors.

Next let's take a look at the new scene understanding metadata in a USD. Here we have a USD with some objects that have physics properties applied to them in our scene. We're specifying that all content in the scene will interact with the environment as generated by the scene understanding capability in RealityKit.

As next steps, we encourage you to check out the schemas in depth through the new documentation available on the Developer website. Send us feedback through Feedback Assistant or the Developer Forums. Check out Reality Converter in the related talks. And finally, begin adopting the new AR USD schemas in your content and editor applications.

So that's an overview of the new workflows in USD-based DCC ecosystems enabled by USD export in Reality Composer and the new AR USD schemas. We've seen how we can start with content in Reality Composer, bring it to life with interactions, physics and anchoring, export it, modify it in a DCC and re-import it into Reality Composer or a DCC, continuing the content creation story and creating an ecosystem of content creation tools that all speak the common language of USD.

We've also taken a closer look at the new AR USD schemas making usdz export in Reality Composer possible. With this new functionality and these schemas, we're excited to see what amazing things you will create. Thank you and enjoy the rest of your WWDC.

11:44 - USD scene structure

    def Xform "Root" (
        kind = "sceneLibrary"
    )
    {
        def Cube "MyCubeScene" (
            sceneName = "My Cube Scene"
        )
        {
            ...
        }

        over Sphere "MySphereScene" (
            sceneName = "My Sphere Scene"
        )
        {
            ...
        }
    }

13:30 - Adding anchoring to USD

    def Cube "ImageAnchoredCube" (
        prepend apiSchemas = [ "Preliminary_AnchoringAPI" ]
    )
    {
        uniform token preliminary:anchoring:type = "image"
        rel preliminary:imageAnchoring:referenceImage = <ImageReference>

        def Preliminary_ReferenceImage "ImageReference"
        {
            uniform asset image = @image.png@
            uniform double physicalWidth = 12
        }
        ...
    }

15:11 - Defining a behavior

    def Preliminary_Behavior "TapAndBounce"
    {
        rel triggers = [ <Tap> ]
        rel actions = [ <Bounce> ]

        def Preliminary_Trigger "Tap"
        {
            uniform token info:id = "tap"
            rel affectedObjects = [ </Cube> ]
        }

        def Preliminary_Action "Bounce"
        {
            uniform token info:id = "emphasize"
            uniform token motionType = "bounce"
            rel affectedObjects = [ </Cube> ]
        }
        ...
    }

16:22 - Compound behavior

    def Preliminary_Behavior "TapOrGetCloseAndBounceJiggleAndFlip"
    {
        rel triggers = [ <Tap>, <Proximity> ]
        rel actions = [ <Bounce>, <Jiggle>, <Flip> ]
        ...
    }

17:19 - Defining physics

    def Sphere "WoodenBall" (
        prepend apiSchemas = [ "Preliminary_PhysicsColliderAPI", "Preliminary_PhysicsRigidBodyAPI" ]
    )
    {
        rel preliminary:physics:collider:convexShape = </WoodenBall>
        double preliminary:physics:rigidBody:mass = 10.0
    }

18:15 - Applying a wood material for physics

    def Material "Wood" (
        prepend apiSchemas = ["Preliminary_PhysicsMaterialAPI"]
    )
    {
        double preliminary:physics:material:restitution = 0.603
        double preliminary:physics:material:friction:static = 0.375
        double preliminary:physics:material:friction:dynamic = 0.375
    }

    def Sphere "WoodenBall" (
        prepend apiSchemas = [ "Preliminary_PhysicsColliderAPI", "Preliminary_PhysicsRigidBodyAPI" ]
    )
    {
        rel preliminary:physics:collider:convexShape = </WoodenBall>
        double preliminary:physics:rigidBody:mass = 10.0
        rel material:binding = </Wood>
    }

18:40 - Defining a ground plane

    def Xform "MyScene" (
        prepend apiSchemas = ["Preliminary_PhysicsColliderAPI"]
    )
    {
        def Preliminary_InfiniteColliderPlane "groundPlane" (
            customData = {
                bool preliminary_isSceneGroundPlane = 1
            }
        )
        {
            point3d position = (0, 0, -2)
            vector3d normal = (0, 1, 0)
            rel preliminary:physics:collider:convexShape = </MyScene/groundPlane>
        }

        rel material:binding = </Wood>
    }

19:08 - Adding gravity

    def Preliminary_PhysicsGravitationalForce "MoonsGravity"
    {
        vector3d physics:gravitationalForce:acceleration = (0, -1.625, 0)
    }

20:28 - Defining spatial audio

    def SpatialAudio "HorseNeigh"
    {
        uniform asset filePath = @Horse.m4a@
        uniform token auralMode = "spatial"
        uniform timeCode startTime = 65.0
        uniform double mediaOffset = 0.33333333333
        double3 xformOp:translate = (0, 0.5, 0.1)
        uniform token[] xformOpOrder = ["xformOp:translate"]
    }

21:45 - 3D text

    def Preliminary_Text "heading"
    {
        string content = "#WWDC20"
        string[] font = [ "Helvetica", "Arial" ]
        token wrapMode = "singleLine"
        token horizontalAlignment = "center"
        token verticalAlignment = "baseline"
    }

22:46 - Playback metadata

    #usda 1.0
    (
        endTimeCode = 300
        startTimeCode = 1
        timeCodesPerSecond = 30
        playbackMode = "loop"
        autoPlay = false
    )

    def Xform "AnimatedCube"
    {
        ...
    }

23:08 - Scene understanding metadata

    def Xform "Root" (
        kind = "sceneLibrary"
    )
    {
        def Xform "MyScene" (
            sceneName = "My Scene"
            preliminary_collidesWithEnvironment = true
        )
        {
            def Xform "DigitalBug"
            {
                ...
            }
        }
    }
