Advances in AR Quick Look

AR Quick Look is a built-in viewer for experiencing high-quality content in 3D and AR. See how integration with Reality Composer enables rich, interactive experiences to be displayed and shared more easily than ever before. Explore rendering improvements and multiple object viewing, then dive into the practical application of AR Quick Look in retail, education, and more.


Good morning everyone. And thank you for joining our session. My name is David Lui, and I'm thrilled to be back here at WWDC to share with you all the new and exciting features in AR Quick Look.

Last year, with iOS 12, we introduced AR Quick Look, which set the foundation and provided a great single-model AR previewing experience. We made it really easy to experience 3D content in your world in an immersive and realistic way using physically based rendering. AR Quick Look is built in as part of iOS and is available on the web and in many applications like Messages and Apple News.

We gave a session last year where you could learn more about how to integrate AR Quick Look into your application and website, along with how to create great-looking content for it. So, in this session, we'll go over our integration with Reality Composer and how AR Quick Look views the produced content; improvements to the visual rendering and experience within AR Quick Look for greater realism and content immersion; how to support all these new features on the web, including customizing the preview experience for your applications; and lastly, our enhanced integration with retail, with support for call to action and Apple Pay.

So, earlier in the week, we talked about Reality Kit and Reality Composer, our new macOS and iOS tool for simple AR-based content creation, where we can quickly create really amazing, rich content with interactivity for applications. You can take all that same rich content and share it through various applications, all of which can be viewed in AR Quick Look. It's a really great way to easily realize these new interactive experiences and view them in a feature-rich environment, all by simply sharing the 3D model file.

And we really mean feature rich. In support of Reality Kit and Reality Composer, we added new rendering effects to AR Quick Look, like dynamic ray-traced shadows and people occlusion, to help improve the realism of 3D content placed in your world.

We've also added a lot more capabilities, like multimodel support and a built-in animation scrubber that not only enhances the AR experience but gives you more control over the content.

Adopting AR Quick Look is the easiest way to take advantage of all these new features, to bring an amazing AR experience to any application or website and see content as if it was actually there with you.

So, let's get started. AR Quick Look is built around USDZ.

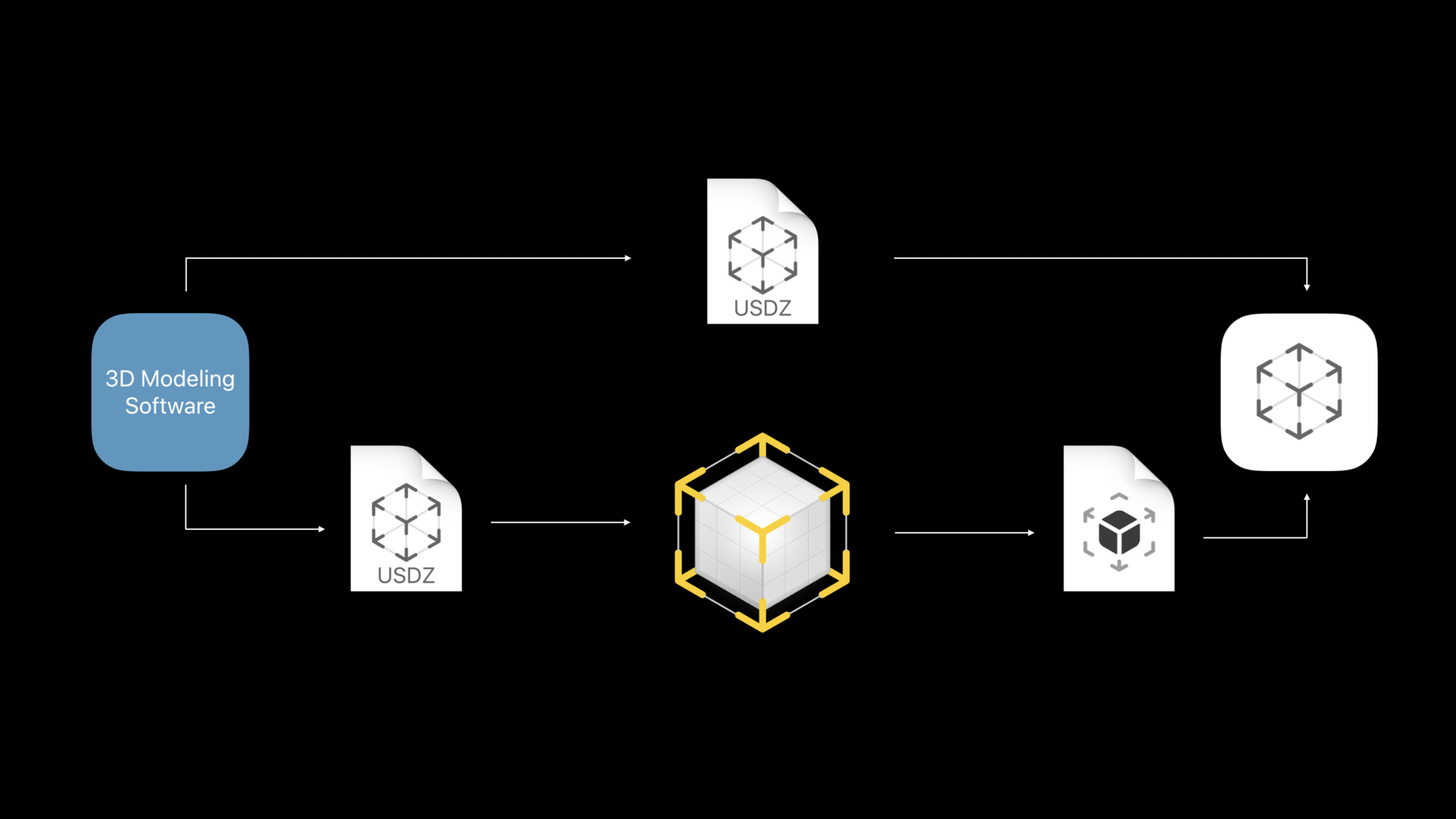

Today, people create content for AR Quick Look using their favorite 3D modelling software and exporting that scene as a USDZ file.

You can then view that content in AR Quick Look where you can push the object into your world to view it. You can take that same USDZ file and import it into Reality Composer where you can add new behaviors and interactivity that all gets packaged up in our new reality file format. AR Quick Look supports viewing these new reality files and is a great place to play with these experiences. For many users, AR Quick Look is the destination to explore and interact with 3D content as people share these models from one device to another across many applications.

Scenes are the fundamental building blocks of reality files. A Reality Composer scene contains a specified anchor type, such as a horizontal or face placement, and one or more objects placed in the scene. These objects can contain any combination of interactive behaviors, captivating audio, and realistic physics. Together, this produces a really compelling stand-alone AR experience represented as a Reality file. So, let's take a closer look at some of these properties.

Every scene has an anchor type, which describes how the model should be placed in the world. Last year, AR Quick Look supported horizontal anchors like tables and floors. And this year, we've added support for vertical surfaces like walls as well as faces and known 2D images in your environment.

So, let's describe each of these in detail.

Horizontal and vertical anchors are found in everyday settings, such as tables, floors, and walls. Since they are so common, objects are placed on the first detected plane, and we make it really easy to move between different surfaces, just by dragging the model from one surface to another.

Rotation gestures differ depending on whether the object is on a horizontal surface or a vertical surface.

And this behavior applies for both reality files and USDZ files.

So, let me show you how to place this picture frame on the wall starting from a horizontal surface. I'll push the frame onto this table, and you can then tap and drag the model over to the couch and position it onto the wall. You can also start by looking directly at the wall and pushing the content from your device. It's a really seamless way to go between planes in order to anchor your content in the world as you would like.

You can also anchor virtual content to real-world objects that can move, like putting eyeglasses on your face. For these types of experiences, AR Quick Look automatically turns on the front camera and anchors the content based on how it's authored relative to the center of your face.

Face occlusion geometry from ARKit is used in AR Quick Look to realistically obscure the object positioned on your face so that they won't intersect and break the illusion. Once anchored, scale and rotate gestures are disabled so that objects remain true to their authored size and are correctly positioned on your face. You can also take selfies with your friends when trying on the same pair of glasses in AR Quick Look, and with ARKit 3 this year, there's support for up to three faces. This experience is only available on devices with a front-facing TrueDepth camera. On other devices, the anchor is treated as if it was a horizontal anchor.

So here are some examples of my friends trying on different accessories, such as a masquerade mask, a propeller beanie, and this great-looking mustache in AR Quick Look. This makes the content more animated and really feel like it's part of you, since it's interacting with your face. It's a really great way to express yourself and play with these models in a brand-new way.

AR Quick Look uses the front camera if there is a single face anchor scene. If there are any other scenes, then AR Quick Look will fall back to treating this as a horizontal anchor experience. So, for example, say you have two face anchor scenes authored in Reality Composer like I have on the left-hand side, with two different styles of glasses. When previewed in AR Quick Look on the right-hand side, these glasses will be placed into the world as a horizontal experience so you can compare them side by side.

Another type of dynamic anchor is an image anchor, where virtual content can track and follow a real-world image. In AR Quick Look, we want users to be able to preview the scene in the world without having access to the physical image. So, if no anchor is detected, AR Quick Look will directly place the scene on the first plane that it finds. And later, when it detects the specified anchor, it will magically move over and attach to the real-world image.

And finally, if the anchor disappears, then the scene will reattach to the last plane that it found. One thing to note is that gestures like scale and rotate are disabled.

And like face anchors, the content remains true to its authored size. So, let's take a look at this toy rocket model I've authored in Reality Composer as an image anchor experience. You can see how the content is anchored to the real-world image and follows each and every movement, whether translation or rotation. We really encourage people to engage with the virtual content and really move around, since this is an AR experience. This means directly moving the anchor closer to you to illustrate a change in scale, or rotating the image anchor to view the scene from different perspectives.
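The anchoring fallbacks described above for face and image scenes can be summarized in a small sketch. The type and function names here are hypothetical and exist only for illustration; they are not ARKit, Reality Kit, or AR Quick Look APIs, and the real viewer may take more inputs into account.

```typescript
// Hypothetical names for illustration only; none of these are Apple APIs.
type FacePlacement = "face" | "horizontal";

// A face experience is used only when the file holds a single scene and the
// device has a front-facing TrueDepth camera; otherwise the content is
// treated as a horizontal experience.
function facePlacement(sceneCount: number, hasTrueDepthCamera: boolean): FacePlacement {
  return sceneCount === 1 && hasTrueDepthCamera ? "face" : "horizontal";
}

type ImageSceneAttachment = "waitingForPlane" | "plane" | "image";

// An image-anchored scene follows the real-world image while it is visible.
// Without the image, it sits on a detected plane (the first one found before
// detection, or the last one found after the image disappears).
function imageSceneAttachment(imageVisible: boolean, planeFound: boolean): ImageSceneAttachment {
  if (imageVisible) return "image";
  if (planeFound) return "plane";
  return "waitingForPlane";
}
```

For example, `facePlacement(2, true)` yields `"horizontal"`, matching the two-glasses comparison described earlier.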

Now that you've anchored content into your world, you can add behaviors to bring interactivity to your scene.

Behaviors are comprised of a trigger and one or more actions, and these are all defined in the bottom behaviors panel in Reality Composer, where we can attach any combination of triggers and action sequences.

This is a really great way to provide more interactivity between the user and the virtual content and create a more immersive and engaging user experience.

So there's a variety of triggers and actions defined in Reality Kit that's exposed in Reality Composer, and these are all natively supported in AR Quick Look.

You can take a Tap trigger and attach it to your bike object so that, on tap, the bike will disassemble, revealing all the individual parts and components, and this is all done by the Move action. You can also attach a Proximity trigger to a boom box so that, when you get close to it, music starts playing that I can jam to. One thing to note is that AR Quick Look does not support custom app actions. There are so many things you can make with this, and to learn more about behaviors and how to create interactive AR experiences, check out the Building AR Experiences with Reality Composer session from this year.

So, all of these new features I described are driven by this new Reality File. Reality File is a self-contained format that contains multiple scenes with any number of objects, along with anchoring type information, like whether the content should be placed on a vertical wall or on a person's face, and interactivity data with behaviors like tap triggers and audio actions for a realistic and interactive AR experience.

Reality files work hand in hand with USDZ, and USDZ is the perfect format for sharing and publishing individual 3D models on the web or in your app for use in AR.

And if you want to use these USDZ models you've created over the years, to make interactive AR experiences, you can combine them in Reality Composer and export out a reality file.

Either format can be viewed in AR Quick Look on iOS 13. Now, I'd like to invite my colleague, Jerry, to give you a demo with these new experiences. Jerry.

Thanks, David.

Good morning everyone. Today I am very excited to show you previewing Reality Files inside AR Quick Look. Now, yesterday at the Building AR Experiences with Reality Composer session, my friend Powell created a quick lesson illustrating different seasons on Earth, and he sent me a copy. So let's go ahead and view that in AR Quick Look.

Now, as you may recall, this entire experience is driven through scene start triggers. For example, one triggers the moon to rotate around the Earth and the Earth to rotate around the sun. As the Earth circles the sun, the appropriate season appears. And this is created using a wait action followed by a show action.

And after a slight delay, the sign disappears using a hide action.

Now, you may have noticed that no matter where I look, the sign is always oriented towards me, and this is achieved by using a look at action.

So, that's a quick overview of how easy it is to preview Reality Files, such as this short lesson inside AR Quick Look.

And now, I have another demo, and for this, we're going to take a road trip around the solar system.

So, in the same session, my friend Avi created a project showing three celestial bodies, the moon, the Earth, and the sun. I've expanded upon that to add some more features to show you different behaviors in action. So let's take a look.

So the most obvious starting point of our journey is of course the Earth. But unfortunately we cannot get to space inside a car. We need something with a more powerful engine. I think I have an idea. Notice that I can see what triggers I can interact with by looking at the above text. For example, here I can see move closer to activate. So there must be a proximity trigger. Let's go ahead and take a closer look.

Oh, hey, it's a rocket. Perfect for going to space. Notice that there's a tap trigger here, so I'm going to go ahead and launch the rocket. And we have liftoff. So now let's set a course for the moon.

And let's try to stick that landing.

Nailed it. Oh, look, there's a sign here. Well, good thing we took the rocket, not the car.

So the thing about space is that the sizes of celestial bodies are hard to grasp. However, we can easily illustrate this with the help of AR. So, I'm going to tap here and fly up and out of here.

And this time, let's go up higher so that we can take an overview look of the moon, the Earth, and the sun. And I'll tap on the sun to get a better sense. Whoa. And as you can see, the sun is absolutely enormous compared to the size of the Earth. Too bad the distances are not to scale, otherwise it might actually be quite warm in this room.

So that's a quick look at how easy it is to view rich content authored using Reality Composer inside AR Quick Look. And we absolutely cannot wait to see what kinds of content you can create.

So, now, let's talk about the visual improvements of AR Quick Look.

One of the main goals of AR Quick Look is to create a believable AR experience, and to achieve that, it is important to understand and take into account the surrounding environment of the user.

This year, we added a variety of new rendering effects to make the content look as if it is truly part of the world.

Let's go into some more details, starting off with real-time shadows. Last year, AR Quick Look rendered shadows to make virtual content look as if it was part of the world.

This year, we made shadows dynamic and rendered in real time. So now shadows can follow your content as if it was part of the world, and this helps bring your animated content to life.

By default, projective shadows are used, as they provide a good approximation of how shadows look in the real world. And on higher-end devices, like iPhone XS, AR Quick Look will use a ray-traced technique, which produces physically correct shadows and looks even better.

One key difference between ray-traced shadows and projective is that ray-traced technique produces softer shadows and is especially great for objects with fine contact points. Now, we've made great strides in terms of optimizations to enable AR Quick Look to perform real-time ray-tracing of dynamic scenes, all on a mobile device.

If you're interested in learning more about ray-traced shadows, I recommend checking out the Ray-tracing with Metal session later today at 11. And since dynamic shadows are rendered in real time on all ARKit supported devices, you do not need to bake in shadows as part of your model.

Another new visual effect is camera noise. In low light situations, you might notice noise in your video because there just isn't enough light captured by the camera sensor. Since virtual objects are rendered on top of the camera feed, they will remain free of artifacts, making your content look out of place. Well, now, Reality Kit supports camera grain, so noise will be added to the virtual content based on the amount of noise in the camera feed to create the effect that the robot is actually part of the scene.

This is done by taking the noise texture provided by ARKit and applying that to the virtual content.

Camera grain is automatically enabled on all ARKit supported devices.

Depending on your model and the environment, some colors may look too bright or blown out, such as the shiny marble. AR Quick Look uses HDR with tone mapping to provide a greater range of brightness and contrast. As you can see, without it, the marble looks too bright and out of place. But with HDR, it blends in with the environment. Under the hood, Reality Kit allocates 16 bits per color channel, and this allows for a greater range of color values to be represented and passed through the shaders. This then gets mapped using a custom tone mapping curve and rendered to the final texture. HDR with tone mapping is available on all A10X devices and later.
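To make the tone mapping step a little more concrete, here is a minimal sketch of compressing an HDR intensity into a displayable range. The transcript does not say which curve Reality Kit uses, so this swaps in the well-known Reinhard operator purely as a stand-in; the function names and the 8-bit output are assumptions for illustration.

```typescript
// Reinhard operator x / (1 + x): a common tone mapping curve, used here only
// as a stand-in. The custom curve mentioned in the session is not specified.
function reinhard(hdrValue: number): number {
  const x = Math.max(0, hdrValue); // negative light is not meaningful
  return x / (1 + x);
}

// Map an unbounded HDR intensity to an 8-bit display value (0 to 255).
function toneMapTo8Bit(hdrValue: number): number {
  return Math.round(reinhard(hdrValue) * 255);
}
```

Very bright values, such as a highlight on the shiny marble, are compressed toward the top of the range instead of clipping, while darker values keep most of their contrast.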

Now, if you're like me and have tried to grab a virtual object or had people walk in front of one, then you've probably noticed that virtual objects are always rendered in front of people when they should be behind, breaking the illusion. Now, with people occlusion, you can create the illusion that someone is actually walking through a collection of virtual tin toys as if they were right there in your world.

This uses the person segmentation data provided by ARKit to know what part of the camera feed should remain drawn on top of the virtual content.

People occlusion takes advantage of the Apple Neural Engine and will be available on all A12 devices and later. Now, this is a really hard problem to solve, but if you're curious to learn more, I recommend checking out the Introducing ARKit 3 session.

Digital cameras can only focus on a certain distance at any given time. Objects closer or further from this distance will look out of focus. Without this effect all virtual objects will look in focus, which stands out from the camera feed.

Reality Kit takes the object's distance into account to render it as if it was captured by your camera. And this allows the virtual object to blend in with the rest of the camera feed to make it seem as if it was really there. This is available on all A12X devices.

Motion blur is the phenomenon caused by real-world objects moving faster than what the camera can capture, creating a streak-like effect. As you can see in the freeze frame here, the robot on the left does not fit with the scene when motion blur is turned off. Reality Kit applies motion blur to the virtual content by interpolating between textures of two consecutive frames, which depends on the device motion and the length of exposure to better match the camera feed. You'll be able to experience this effect in AR Quick Look on A12X devices.

We strive to bring more realism to the virtual content with these new rendering effects, but we also have to keep in mind that these are expensive operations that must be done in real time. We optimize and fine-tune the rendering effects to create the best experience that is both realistic and performant on all iOS 13 devices. That's why AR Quick Look will automatically apply the best rendering effects for your device so that you can focus on creating rich content and experiences.

Now, in addition to making content feel like it is part of the world, we've also made enhancements to the entire viewing experience. First off, AR Quick Look now launches straight into AR so that you can immediately see and interact with virtual content in your world. For example, you can quickly put virtual objects on detected planes or try on sunglasses and take selfies. We've also made improvements to scene understanding for better and faster plane detection to push the object directly into the world as quickly as possible. We used machine learning to better detect walls as well as to extend the breadth of already detected planes. And just like last year, AR Quick Look securely runs in a sandboxed process, separate from the host. By launching straight into AR, websites and apps do not have access to the camera feed or any user activity.

And here's a quick video showing how fast this can be. I'm going to tap on the thumbnail and place a Newton's cradle directly on the table.

We've also added support for viewing multiple models through a nested USDZ file. This is a special USDZ which contains metadata and a library of USDZs. This can be useful to create collections of related objects, such as a furniture set, a shopping cart, or in this case, sports equipment. In AR Quick Look, all contained USDZ files are loaded and lined up in ascending height order, which offers a clear method of displaying a variety of different models.

And these can then be manipulated independently once placed into the world and arranged to your liking.
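As a rough illustration of the line-up described above, the sketch below sorts a collection by height and spaces the models along one axis. Only the ascending height order comes from the session; the `ModelInfo` shape, the spacing rule, and the function name are assumptions made up for this example.

```typescript
// Hypothetical description of one model inside a nested USDZ collection.
interface ModelInfo {
  name: string;
  heightMeters: number;
  widthMeters: number;
}

// Sort models from shortest to tallest, then place them side by side along
// the x axis with a fixed gap. The spacing rule is an assumption.
function lineUpByHeight(models: ModelInfo[], gapMeters: number): { name: string; x: number }[] {
  const sorted = models.slice().sort((a, b) => a.heightMeters - b.heightMeters);
  let cursor = 0; // left edge of the next model
  return sorted.map((m) => {
    const x = cursor + m.widthMeters / 2; // center of this model
    cursor += m.widthMeters + gapMeters;
    return { name: m.name, x: x };
  });
}
```

The input array is copied before sorting, so the caller's original ordering is left untouched.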

There's a saying that a picture is worth a thousand words, and a video is perhaps millions. But what about animated AR content? Animations bring a whole new level to the experience, and now users can control the playback of animations such as play/pause, rewind, skip, and fine scrubbing. And the great thing about viewing AR animated content is that users can rewatch it from different angles, allowing themselves to be the director.

For USDZ models, the animation scrubber will be shown if the animation duration is at least 10 seconds.

And for content less than that, the animation scrubber will be hidden, and the entire animation sequence will continue to loop.

For reality files, the animation scrubber will be shown if there's a single scene start trigger with at least one animation action. And optionally, you can attach a sound action so that it plays along with your animated content. And here's how that would look in Reality Composer.

Tapping on the behaviors panel shows this. So, here I have a single scene start trigger followed by one USDZ animation as well as a sound to go along with it.

Now, we have a really cool gesture. It's called levitation.

Simply swipe up with two fingers to levitate objects up. For example, you can levitate these balloons to decorate your party.

AR Quick Look provides a portal for users to enjoy immersive experiences, but wouldn't it be cool to share what you see on the screen with other people? Now, you can record exactly what you see, such as organizing that birthday party, simply by tapping and holding on the shutter button and releasing when you're done. Videos are automatically saved to the photos library.

We made improvements to the macOS Quick Look viewer as well. In addition to being able to preview USDZ files, it also supports reality files, and thumbnails will be generated right away so that you can find the files faster. It is built on top of Reality Kit, which uses the same physically based rendering as iOS, and this provides a consistent previewing experience on all your devices.

We think this will make it easier to preview authored content and take advantage of all the power and tools available on a Mac. That's a quick run-through of all the visual improvements and the previewing experience in AR Quick Look. And now I'll hand it back to David, who will talk more about the integrations on the web. Thanks.

Thanks, Jerry. So we all just saw the new features and improvements added to AR Quick Look this year. So, now, let's talk about how to view those experiences on the web. With iOS 12, you can view USDZ files on the web through AR Quick Look. For your website to support this, simply add HTML markup and specify the MIME type. This automatically adds the AR badge in the top right-hand corner of your thumbnail image to let users know that they can view this content in AR. Tapping on the thumbnail will launch AR Quick Look.

This easily transforms your website into a seamless AR viewing experience, as there's no need to download any application. Adding support consists of two things: the HTML markup and the MIME type. The first step is to add the HTML markup, which involves adding the rel="ar" attribute to your <a> link element, allowing Safari to recognize and launch AR Quick Look from the same webpage.

The link must contain a single child that is either an image or a picture element, and the image that you provide inside the anchor will be used as a thumbnail image for your virtual content.
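The markup rule above (an anchor with rel="ar" wrapping exactly one image) can be sketched as a small helper that produces the HTML string. The function names and the example paths are hypothetical; only the rel="ar" attribute and the single image child come from the session.

```typescript
// Escape characters that would break out of a double-quoted HTML attribute.
function escapeAttr(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Build an AR Quick Look link: an <a rel="ar"> element whose only child is
// the thumbnail <img>. The helper name is made up for this example.
function arQuickLookLink(modelUrl: string, thumbnailUrl: string, altText: string): string {
  return (
    '<a rel="ar" href="' + escapeAttr(modelUrl) + '">' +
    '<img src="' + escapeAttr(thumbnailUrl) + '" alt="' + escapeAttr(altText) + '">' +
    "</a>"
  );
}
```

Calling `arQuickLookLink("/models/chair.usdz", "/images/chair.jpg", "Chair")` returns a single anchor element that can be dropped into a product page.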

The second step that's needed in order for Safari to recognize AR content is to add the appropriate MIME type when serving that content. To add support for previewing USDZ files on the web, simply add this MIME type for USDZ files. This provides the metadata for Safari to open both regular and nested USDZs directly in AR Quick Look. And likewise, you would need to configure your web server to serve the appropriate headers for reality files. And adding this MIME type will open reality files within AR Quick Look from the web.
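The transcript does not spell out the MIME types shown on the slide. To my understanding of Apple's documentation they are `model/vnd.usdz+zip` for USDZ files and `model/vnd.reality` for reality files; treat both values as an assumption to verify against the current documentation. A minimal lookup that a server could use when setting the Content-Type header might look like this (the function name is hypothetical):

```typescript
// Assumed MIME types, taken from Apple's documentation as best I recall;
// verify before relying on them.
const AR_MIME_TYPES: { [extension: string]: string } = {
  ".usdz": "model/vnd.usdz+zip",
  ".reality": "model/vnd.reality",
};

// Return the Content-Type to serve for a given file path, or undefined when
// the file is not an AR Quick Look asset.
function arContentType(path: string): string | undefined {
  const dot = path.lastIndexOf(".");
  if (dot < 0) return undefined;
  return AR_MIME_TYPES[path.slice(dot).toLowerCase()];
}
```

How the header is actually set depends on your web server, so the sketch stops at the lookup.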

In addition to downloading content from a server, you can also open up AR Quick Look by passing in local data. One supported format is the data URI, which allows developers to load content from a Base64-encoded data string. So, for example, here's how to load a USDZ file through a data URL. Notice that you specify the same MIME type at the beginning of the data string, and to load a reality file, simply switch the MIME type.
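A data URL of the kind described above has the form `data:<MIME type>;base64,<encoded bytes>`. The sketch below builds one from raw bytes with a small hand-written Base64 encoder so that it does not depend on any browser or Node API; the function names are hypothetical, and the MIME type passed in is whatever your server would otherwise send for the file.

```typescript
const BASE64_ALPHABET =
  "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

// Encode bytes as standard Base64 with "=" padding.
function base64Encode(bytes: Uint8Array): string {
  let out = "";
  for (let i = 0; i < bytes.length; i += 3) {
    const has1 = i + 1 < bytes.length;
    const has2 = i + 2 < bytes.length;
    const b0 = bytes[i];
    const b1 = has1 ? bytes[i + 1] : 0;
    const b2 = has2 ? bytes[i + 2] : 0;
    out += BASE64_ALPHABET.charAt(b0 >> 2);
    out += BASE64_ALPHABET.charAt(((b0 & 3) << 4) | (b1 >> 4));
    out += has1 ? BASE64_ALPHABET.charAt(((b1 & 15) << 2) | (b2 >> 6)) : "=";
    out += has2 ? BASE64_ALPHABET.charAt(b2 & 63) : "=";
  }
  return out;
}

// Build a data URL that carries the model bytes inline.
function arDataUrl(bytes: Uint8Array, mimeType: string): string {
  return "data:" + mimeType + ";base64," + base64Encode(bytes);
}
```

Switching between a USDZ file and a reality file only changes the `mimeType` argument, which mirrors the point made in the session.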

Not only that, you can also load data through blob URLs, which are more efficient compared to data URIs since they do not need to be Base64 encoded. A blob URL is never manually created and is always generated through an API like drag and drop, file upload, or URL download.

We've heard from developers the desire to customize the viewing experience within AR Quick Look. So, starting with iOS 13, we're introducing two customization options that you can modify to tailor the viewer to best fit your content and your application. Developers can choose to disable scaling of virtual content for each model that's being previewed and also provide a canonical webpage, instead of the actual 3D model, that'll be shared through the iOS share sheet. So, let's take a look at the disable content scaling option.

Typically, allowing scaling for virtual content is a great way to preview objects at different sizes and picking the right dimensions to best see your content in the environment that you're in. However, that's not always the case, especially when previewing objects with known fixed sizes like furniture.

For these types of products, AR Quick Look makes it really easy for web and app developers to disable content scaling so that users will always view the object at the correct and intended size.

So, let's see what this behavior looks like with this nice dining room set that I'm selling to customers. The set comes in a specific dimension that cannot be configured. You can see, as I try to scale the dining table set larger or smaller with our two-finger pinch gesture, that you're met with resistance and that the object scales back to its true real-world size. This immediate feedback is super important to let our customers know that the object cannot be scaled and that it's intended to be viewed at this specific size.

For the web, the API is exposed directly through the URL via the fragment identifier. The fragment identifier syntax starts with a hash mark followed by the parameter option to customize the AR Quick Look experience, and in this case, that is the allowsContentScaling option. The values of these parameters are Boolean values, where 0 represents false and 1 represents true. When launched, AR Quick Look processes the fragment identifier data and ignores any invalid data along with parameter names that are not defined by the syntax. If the original URL does not contain a fragment string, AR Quick Look will launch and load the 3D content for previewing using the default behavior, which is to allow content scaling.
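The fragment rules above can be sketched in two small functions: one that appends the option to a model URL, and one that models how such a fragment would be interpreted (default to scaling allowed, ignore unknown names and invalid values). The parameter name `allowsContentScaling` is assumed from Apple's documentation, the function names are hypothetical, and the parser is only an illustration of the described behavior, not AR Quick Look's implementation.

```typescript
// Append the content scaling option to a model URL, replacing any fragment.
function withContentScaling(modelUrl: string, allowScaling: boolean): string {
  const base = modelUrl.split("#")[0];
  return base + "#allowsContentScaling=" + (allowScaling ? "1" : "0");
}

// Model of the described interpretation: scaling is allowed unless the
// fragment explicitly sets allowsContentScaling=0.
function parseAllowsContentScaling(url: string): boolean {
  const hash = url.indexOf("#");
  if (hash < 0) return true; // no fragment: default behavior
  const pairs = url.slice(hash + 1).split("&");
  let allow = true;
  for (let i = 0; i < pairs.length; i++) {
    const eq = pairs[i].indexOf("=");
    if (eq < 0) continue; // not a name=value pair: ignored
    const name = pairs[i].slice(0, eq);
    const value = pairs[i].slice(eq + 1);
    if (name !== "allowsContentScaling") continue; // unknown name: ignored
    if (value === "0") allow = false;
    else if (value === "1") allow = true;
    // any other value is invalid and ignored
  }
  return allow;
}
```

For the dining set example, `withContentScaling("https://example.com/table.usdz", false)` produces a link that opens the model at its fixed real-world size.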

Going through the URL fragment identifier allows for future customization options and enhancements to the 3D content previewing experience, as more features get added to AR Quick Look.

Another customization option that we added is the ability to enable sharing the canonical webpage URL. One challenge in sharing just a 3D model file is the lack of context for where that model came from. I know my colleague Jerry is in the market for a new dining table, so if I send him an iMessage with the previous 3D model, he has no idea where to buy it, let alone how much the set even costs. For retail products, it's better to share the link to the product description page. And by sharing a product link instead of the 3D model file itself, people can go to the source and get not only more information but the most up-to-date information about the product.
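On the web, this second option also travels in the URL fragment. The sketch below assumes the parameter is named `canonicalWebPageURL`, matching Apple's documentation as I understand it, and that the page URL is passed through unencoded; both points are assumptions to check, and the function name and example URLs are hypothetical.

```typescript
// Append a canonical web page to a model URL. If the URL already carries a
// fragment (for example a scaling option), the new option is joined with "&".
function withCanonicalWebPage(modelUrl: string, pageUrl: string): string {
  const separator = modelUrl.indexOf("#") >= 0 ? "&" : "#";
  // Assumption: the page URL is written as-is, without percent-encoding.
  return modelUrl + separator + "canonicalWebPageURL=" + pageUrl;
}
```

With this in place, sharing from AR Quick Look would send the product page for the dining set instead of the model file itself.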

And on iOS 13, AR Quick Look will automatically share the URL of the originating website for the 3D content, and applications can choose to continue sharing that 3D model or to provide a link instead. This is great to get more information and learn more about the content. Now let's take a look at this behavior in Safari. Here, I'm viewing the guitar from the AR Quick Look gallery. As I tap on the share button, you'll see that we're now sharing a reference back to the gallery website. And in Apple News, we're automatically sharing the source news article as well through the iOS share sheet. This is great for shareability as we pass 3D models around and for viewing in AR with even more contextual information.

You can also integrate AR Quick Look into your iOS applications. It's really easy to integrate and get started with viewing 3D content and placing it into the world.

Since AR Quick Look is built into the OS, adopting it gives users a familiar and consistent previewing experience. You have full control over the view controller frame and presentation of AR Quick Look, so you can put it inline with the rest of your app content or present it full screen for the best experience. AR Quick Look is built on top of the Quick Look framework to preview and present AR content. So the same customization options that are on the web are also available for your apps through our new API. To do so, use the new ARQuickLookPreviewItem class defined in the ARKit framework, which conforms to the QLPreviewItem protocol. Simply conform to the QLPreviewControllerDataSource protocol and return an instance of ARQuickLookPreviewItem. To create one, pass in the file URL of the 3D content that you wish to view.

And now, you can optionally customize and provide the canonical website URL used for sharing within AR Quick Look and whether to allow content scaling of the virtual content, all similar to the web fragment identifier method. This provides a consistent developer and API story for both web and iOS applications.

We have new documentation and resources where you can learn more about the APIs that we've covered, along with details on how to enable AR Quick Look in your application and website. So, check out this link, which can be found on the developer portal.

Augmented reality is a really powerful way to visualize furniture and see how they fit within your space. So, I'll be showing you how to decorate a kid's bedroom with these six items.

So, as you can see here, there are two picture frames, a basket, a nightstand, a bed, and a bookshelf. And all of these objects are packaged together in a single nested USDZ file. So let's get started by moving this bed. You can see how I'm able to freely move the bed that's contained in this multiple-model file independently to my desired location in the room.

Let's go ahead and grab the picture frames, which are on the left, and move them closer to us. With two fingers, you can see that we support multimodel translation, leveraging the power of multitouch. This is great for quickly moving objects around in the world. I will now touch and drag my favorite picture from the floor and anchor it so that it sits right above the bed. This is all possible now because, by default, AR Quick Look detects and supports placing objects on both horizontal and vertical surfaces. Now let's figure out the best place to put my bookshelf. I'll grab the bookshelf and first place it onto the wall.

Now, I really need to compare where the best spot for it is, so let's place it back onto the floor.

So now I'm thinking, I want as much floor space as possible for the kid as they grow up and as they collect more items. So let's keep it on the wall, since it really matches the setting of the picture frame and the rest of my layout. I'm able to quickly make these decisions and see what objects look like in my room with the new support in AR Quick Look for easily moving between horizontal and vertical surfaces. So, let's tidy up the rest of this scene by moving the remaining objects, starting with the basket, over to the right near the bookshelf.

I'll also do the same thing with the nightstand and a picture frame, but move them to the left side of the bed.

And as Jerry mentioned, there's a new levitation gesture, and this is extremely powerful for not only seeing the underside of the objects but also lifting the object up. So I'll go ahead and lift the picture frame to be slightly above the nightstand and move it so that it's positioned just on top of the stand. Lastly, I'll rotate the frame so it's angled towards the bed for a nice finishing touch.

And now, let's take a look at the final shot of the kid's bedroom scene that we've just made.

This looks absolutely incredible as a prime example of a retail experience that can be enabled in AR Quick Look. But you might be thinking, I really wish there was an easy way for me to buy this after seeing this in my own space. And you weren't alone in thinking that. We've learned over the last year from retailers that when customers see their products in their own space, they have significantly more confidence in their purchase, which is great for customers and great for businesses. So, this year, we are adding support for a customizable call to action in AR Quick Look, including direct support for Apple Pay.

With Apple Pay support directly in AR Quick Look, users can purchase the actual objects that they just previewed in the world with just a single tap. So, what does this actually look like? There's a brand-new view located at the bottom of AR Quick Look with two fields, a title and a subtitle that you can customize separately to match your workflow. The title can describe what it is that the user is looking at to buy and you can use a subtitle field to include the price and product description.

Underneath the text fields is a reference to the canonical web domain name to show you where the product can be found. And on the right, you can provide your own call to action, such as add to cart or checkout, or use an Apple Pay button in a variety of styles to best fit and match your shopping workflow.
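As a rough illustration of the banner just described, here is a minimal sketch of how a website might build a link that carries the title, subtitle, price, and button choice as a URL fragment on the USDZ file. The fragment parameter names (checkoutTitle, checkoutSubtitle, price, callToAction, applePayButtonType) are not given in this session; they are assumed here from Apple's later AR Quick Look web documentation and should be checked against it. The helper name ar_quick_look_url, the example domain, and the product details are hypothetical.

```python
from urllib.parse import quote


def ar_quick_look_url(model_url, title, subtitle, price=None,
                      call_to_action=None, apple_pay_button_type=None):
    """Append banner settings to a USDZ link as a URL fragment.

    Parameter names in the fragment are assumptions taken from Apple's
    AR Quick Look web documentation, not from this session.
    """
    # This sketch expects either a custom call to action or an Apple Pay
    # button style, not both and not neither.
    if (call_to_action is None) == (apple_pay_button_type is None):
        raise ValueError(
            "pass exactly one of call_to_action or apple_pay_button_type")

    params = [("checkoutTitle", title), ("checkoutSubtitle", subtitle)]
    if price is not None:
        params.append(("price", price))
    if apple_pay_button_type is not None:
        params.append(("applePayButtonType", apple_pay_button_type))
    else:
        params.append(("callToAction", call_to_action))

    # Percent-encode every value so spaces and symbols survive in the URL.
    fragment = "&".join(
        f"{key}={quote(value, safe='')}" for key, value in params)
    return f"{model_url}#{fragment}"


# Hypothetical product link with a custom "Add to cart" button.
print(ar_quick_look_url(
    "https://example.com/bed.usdz",
    title="Kids Bed",
    subtitle="Solid pine frame",
    price="$199",
    call_to_action="Add to cart",
))
```

The resulting URL would be used as the link target for the model on the product page. Keeping the banner settings in the fragment means the USDZ file itself stays unchanged, so the same model can be reused with different titles or prices.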

So this great feature will be available later this fall, and we are excited to share that Warby Parker will be offering virtual try-on on their website once Apple Pay support in AR Quick Look is available later this year. Warby Parker was the first to offer virtual try-on in their app, and we're excited that, with the new updates to AR Quick Look in iOS 13, they will be extending their virtual try-on to their website. We can't wait to see how other merchants integrate this into their retail workflow.

Now, a majority of these models that we've seen from the keynote and this session can be found on our updated AR Quick Look gallery page. Here, you'll find a range of static and animated models that you can play with, and we'll be adding reality files soon. So, if you have iOS 13 on the device, visit this gallery page, tap on a 3D model, and give them a try. And also, if you didn't get the opportunity to check out the Mac Pro or the Pro Display XDR in person from earlier in the conference, don't fret. You can view these products in AR Quick Look and see what they look like in your workspace. The models can be found on their respective product pages.

A Python script to create these nested USDZ files will be available soon on the AR Quick Look gallery page under the USDZ tools section.

So, in summary, we've added a ton of capabilities to AR Quick Look this year to provide an even more compelling and believable experience for viewing AR content in the real world. This started with supporting reality files, authored in Reality Composer, where we're able to reflect the richness that Reality Kit provides, like more anchor types, such as face and real-world images, and interactive content behaviors through the use of triggers and actions. We've made the content belong in the world with new rendering effects like depth of field, motion blur, and people occlusion. We also enhanced the viewing experience with new levitation gestures and a built-in animation scrubber to allow you to have better control over the content and its animation sequence.

We've introduced a new API on both the web and iOS to allow developers to better customize the previewing experience for their workflows. And lastly, there's better retail integration with support for Apple Pay and calls to action that are coming real soon. To learn more and get more information about the topics covered in this session, check out the session's website. We also have a lab later today at 11 AM. So please stop by and bring any questions you have about AR Quick Look, and we'd be happy to help answer them. Thank you, and enjoy the rest of your conference. [ Applause ]

-