
Introducing Core Haptics

Core Haptics lets you design fully customized haptic patterns with synchronized audio. See examples of how haptics and audio enable you to create a greater sense of immersion in your app or game. Learn how to create, play back, and share content, and where Core Haptics fits in with other audio and vibration APIs.

Resources

- Updating Continuous and Transient Haptic Parameters in Real Time

- Playing a Custom Haptic Pattern from a File

- Playing Collision-Based Haptic Patterns

- Core Haptics

- Human Interface Guidelines: Playing haptics

- Presentation Slides (PDF)


I am Michael Diu from the Interactive Haptics Team. And I am looking forward to sharing the many advances in haptics in iOS 13 with you. Let's take a look at our agenda.

First, we'll find out where we can use Core Haptics and how it fits in with other audio and haptic APIs.

And we'll talk about the two groups of classes in the API. And the basic dimensions and descriptors that we will use to describe our haptics and audio content.

We're going to walk through the basic recipe to start playing out that content.

And then we're going to move on to introducing dynamic parameters. Dynamic parameters are a way that you can customize your haptic patterns at playback time in response to your user's or your app's behavior.

And we're going to explore a new way to express, store, and share your audio haptics content. A new file format we're calling the Apple Haptic Audio Pattern, or AHAP.

So let's get to it.

First, what is Core Haptics? We can think of it as an event-based audio and haptic rendering API, or synthesizer, for iPhone.

We can continue to use our other audio, haptics, and feedback APIs, like AVAudioPlayer and UIKit's UIFeedbackGenerator, in parallel with Core Haptics.

You might be wondering, "Which iPhones can I use this on?" With one, just one API and one file format, we will be able to access hundreds of millions of haptic engine-equipped iPhones starting from iPhone 8 onward. And we've taken care that your haptic patterns will have the same feel across all of these products. So much so that you're going to be able to prototype and release just using one product.

And these iPhones aren't equipped with just any old commodity actuator. They all have the Apple-designed Taptic Engine, which offers you that unique combination of power, a wide, expressive range, and unmatched precision, control, and subtlety.

Next, I'd like to talk about those of you who have already started adopting haptics on iPhone with UIKit's feedback generator APIs. Now, Core Haptics is not a replacement for this API.

In most cases, you want to keep on using UIFeedbackGenerator, especially for adding haptics to UIKit controls. With that API, you indicate the design intent for your event, whether that's a selection, an impact, or a notification. And you let someone else, Apple, worry about developing a vocabulary to express that and mixing the right modalities, like audio, haptics, and animation, to communicate that message.

Now, this API is also being improved in iOS 13. So please check out its documentation for more details.

In contrast, Core Haptics is good when you want to be your own sound and haptic designer. With it, you develop your own patterns. And you have a lot more control over exactly what time they get played, so you can synchronize with other APIs, like an animation from Core Animation or a sound event from AVAudioEngine. You have a much richer set of playback and modulation controls. Now, UIKit is built on top of Core Haptics, so both APIs share the same low-latency performance characteristics. Now, designing your own haptic patterns is going to take more time. But when it allows you to do something that you couldn't otherwise do, and when it allows you to differentiate your app, then it's worth thinking about. Now next, I'd like to talk a bit more about those audio capabilities.

So Core Haptics is also an audio API. And that allows you to play short, synthesized or custom waveform audio in tight synchronization with your haptics.

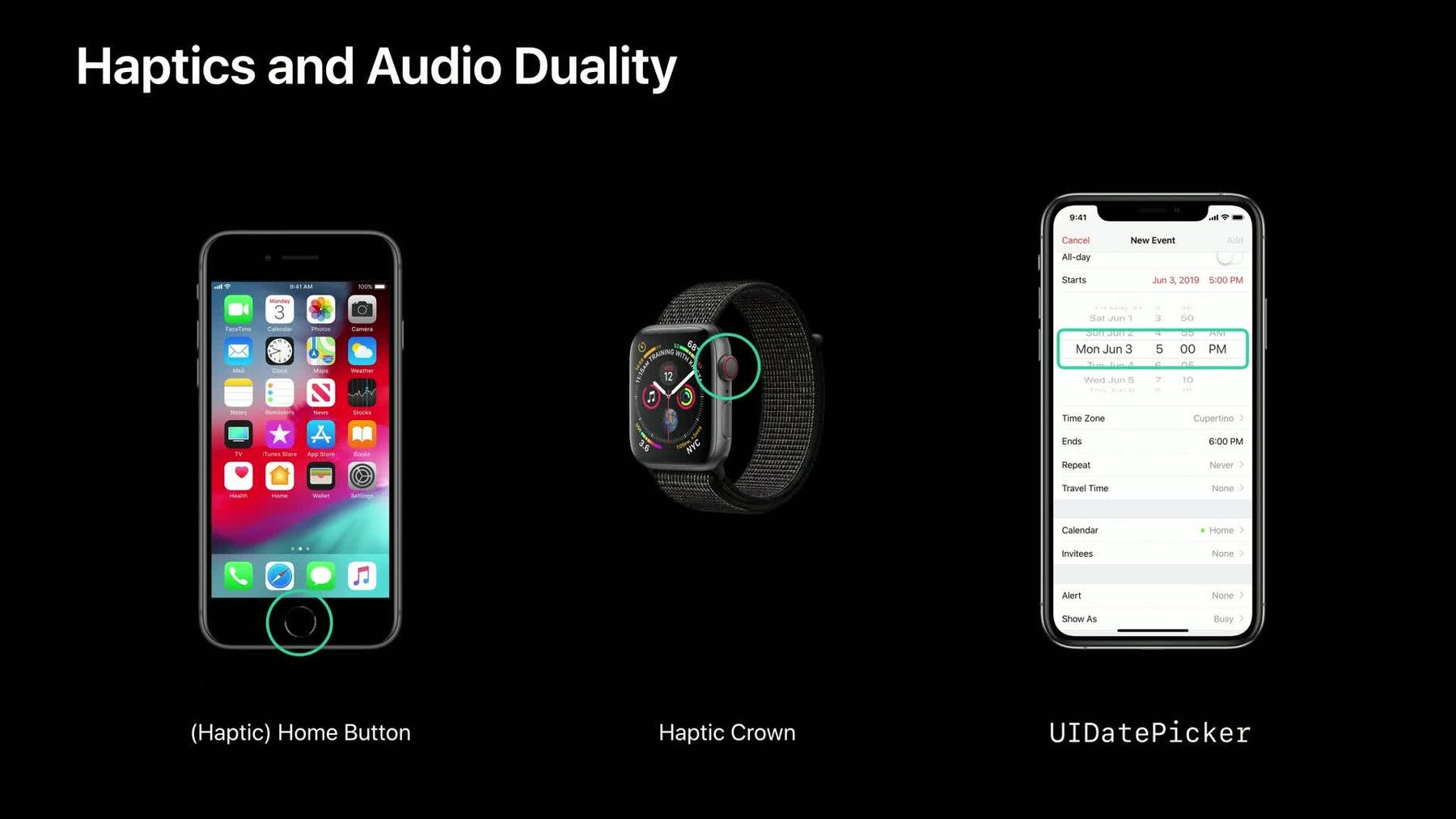

This type of audio haptic duality has been crucial to many of Apple's own haptics experiences. Like the haptic home button in iPhone 7, the haptic crown in Apple Watch Series 4, and the UIDatePicker, those scrolling wheels that you use to select dates and times and alarms and calendars. And you may not have realized that. You may not even have noticed that there was audio in these experiences. But if you were to cover up that audio, once you take it away, you'll realize that it's an inseparable and integral part of that experience. So now, you can do the same with Core Haptics in your own apps. And I want to talk about some categories of apps, one huge category in particular, where you might want to think about Core Haptics, and that's games.

So imagine we are at the race track. We want to go into turbo mode. Let's imagine. When you've got that brute force message to deliver, think about using synchronized haptics and audio in your app to generate those visceral explosions and rumbles.

Now, another very nice application is to simulate physical contact to make your applications feel more realistic. Think about a tennis game. You could have audio and haptic components where the pitch of your audio and the intensity of your haptics are proportional to how fast your swing is or how close to the middle of the racket the ball lands. And you can even control how long the strings of your racket will resonate after the impact.

So another great area to think about using Core Haptics is in augmented reality apps.

And there, if you're working in this space, you're already familiar with the benefits of having high visual fidelity paired with 3D audio working in concert. Now we can reach for that next level of immersion by considering how custom haptic feedback can ground our user gestures, or respond to app, device, and AR object events. For example, moving your device around, or your users moving themselves around.

As an inspiration this year, we've enhanced the SwiftShot sample code by using haptics that are modulated based on how fast you pull back the sling, that is, how fast you pull back your phone. You're going to feel the tension building up as you stretch it back. And then a very satisfying thunk as you release.

I'd like to show you a video of this and I'm going to use audio to represent just the haptics that you're going to feel. They're going to sound like this. Now, we're going to see the whole thing together. Visuals and haptics, no regular audio. So that was an example of how we can use haptics, sound, and visuals all synchronized together to enhance our AR experience. Now these are just a few categories of apps, games, and AR that are ripe for creative explorations, with haptics and the corresponding sounds. I'm sure you're going to think of many, many more.

So now let's get into how we can start expressing our content with Core Haptics.

There are just two groups of classes in Core Haptics. There are those that represent your content, and those that play back that content. Let's take a closer look at the content side first. The basic indivisible content element in Core Haptics is called a CHHapticEvent. Now, each event has a type and a time. And optionally, parameters that will customize its feel.

These events can overlap each other and when they do, they mix.

And all events are grouped into a pattern.

Next, I'd like to talk about those types of events that we can have.

Our first type is called the HapticTransient. The HapticTransient, I think of it as a gavel. It's a striking motion. It's momentary and instantaneous. And then we have two continuous types.

We have HapticContinuous and AudioContinuous. And there I think of, for example, bowing a stringed instrument. It is longer than a transient. It can be, for example, used as a background texture. And you have a much richer set of knobs that you can use, for example, to modulate its resonance. Lastly, we have the AudioCustom type. And AudioCustom, as I mentioned earlier, is where you can provide your own audio to be played back in sync with the haptics.

Next, let's talk about some of those optional parameters.

Our first event parameter is called HapticIntensity. And it has an audio analog, AudioVolume, which you're probably already familiar with.

Now, with this parameter, as you turn the knob from zero all the way up to one, you go from no output to the maximum output of the system.

Our next parameter is called HapticSharpness. Now HapticSharpness is a new concept. There's no physical analog to this and there's also no audio analog.

In this world, I want you to instead think of moving along in a perceptual space from a very round and organic feel at zero, all the way to a more crisp and precise feel at one. And to help ground that a bit further, I'm going to use some examples from iOS 12. The flashlight button on your lock screen is an example of a very high sharpness haptic. And the App Switcher, that swipe up, that's an example of a more round, lower sharpness haptic. As for the why, why were those two types of experiences, you know, sharp and not sharp? I'm going to refer you to our talk on Audio Haptic Design. Now, we have several more types of event parameters. For example, some apply to audio, like pitch and pan. And for haptics, we have parameters that let you change the resonance and so forth. But these two, intensity and sharpness, will be enough to get us going.

Now, to develop a feel for that dynamic range and precision of intensity and sharpness, we've got sample code, the Palette, which allows you to try out these experiences for yourself. As you tap or drag your finger around, you'll be accessing the sharpness axis, as well as the intensity axis. And it's going to play out the corresponding continuous or transient haptic as you do that. This will help you get that intuition.
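As a rough illustration of how a palette like that could map a touch to the two parameters, here is a minimal sketch. The helper names, the choice of x for sharpness and y for intensity, and the clamping are assumptions made for this example and are not taken from the sample code itself.

```swift
import CoreGraphics
import CoreHaptics

/// Hypothetical helper: maps a touch location inside a palette view to the two
/// event parameters. Assumes x is sharpness and y (measured from the bottom)
/// is intensity, both clamped to the 0...1 range.
func hapticParameters(for point: CGPoint, in size: CGSize) -> [CHHapticEventParameter] {
    let sharpness = Float(min(max(point.x / size.width, 0), 1))
    let intensity = Float(min(max(1 - point.y / size.height, 0), 1))
    return [
        CHHapticEventParameter(parameterID: .hapticSharpness, value: sharpness),
        CHHapticEventParameter(parameterID: .hapticIntensity, value: intensity),
    ]
}

/// A tap could then become a single transient event carrying those parameters.
func transientEvent(for point: CGPoint, in size: CGSize) -> CHHapticEvent {
    CHHapticEvent(eventType: .hapticTransient,
                  parameters: hapticParameters(for: point, in: size),
                  relativeTime: 0)
}
```

A drag could use the same mapping with a `.hapticContinuous` event instead of a transient.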

So that was an introduction about where we can use Core Haptics and also how to specify our content. Now, let's invite Doug Scott, our Core Haptics architect, to get us started with playing back Core Haptics, playing back those patterns, and integrating Core Haptics into our apps. Please welcome Doug.

Thank you, Michael. Good evening, everyone. I am thrilled to be here to talk to you about integrating the Core Haptics API into your applications.

Before I show a demo and dive into the code, let's walk through the basic steps that your application will follow when you want to play a haptic pattern.

Creating your content is a good first step because this can be done at any point prior to the point where you need to use it. In this example, we load an NSDictionary into a haptic pattern. The dictionary might have been something that we stored in our application as part of our resources. As we will see later, patterns can also be created right before they are to be played if they need to vary interactively in response to changes in your application.

The next step is to create an instance of the haptic engine. This should be done as soon as your application knows that it will be making use of haptics.

Next, you create a haptic player for your haptic pattern. Each player is associated with a single pattern and a particular haptic engine.

Starting the haptic engine tells the system to initialize the audio and haptic hardware in preparation for a request to play the pattern.

At the moment that your application wants the pattern to play, you start the player. This can be done in two modes. The first, which we call immediate mode, tells the system that you wish this pattern to play at the soonest possible moment with minimal latency. In the second, scheduled mode, you hand it an absolute timestamp, which tells the system that you want to synchronize this event with some other system, such as another audio player, a game event, or a graphics event.

If you want to know when your pattern is finished playing, you can have the haptic engine notify you via callback when your player or players are done.

Here, the engine calls back to the application. And the application can now choose to stop the haptic engine or continue on with the next haptic pattern.
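The steps just described can be sketched roughly as follows. This is a minimal outline under stated assumptions, not the code from the session: the function name `playTap` and the one-event dictionary are invented for illustration, and error handling is left to the caller.

```swift
import CoreHaptics

/// Hypothetical helper that walks through the steps: content, engine, player,
/// start engine, start player, finish callback. Returns the engine so the
/// caller can keep it alive, or nil when the hardware has no haptics.
func playTap() throws -> CHHapticEngine? {
    guard CHHapticEngine.capabilitiesForHardware().supportsHaptics else { return nil }

    // 1. Create the content from a dictionary (a single transient event).
    let event: [CHHapticPattern.Key: Any] = [
        .eventType: CHHapticEvent.EventType.hapticTransient,
        .time: 0.0,
    ]
    let dictionary: [CHHapticPattern.Key: Any] = [
        .pattern: [[CHHapticPattern.Key.event: event]]
    ]
    let pattern = try CHHapticPattern(dictionary: dictionary)

    // 2. Create the engine, and 3. a player tied to this pattern and engine.
    let engine = try CHHapticEngine()
    let player = try engine.makePlayer(with: pattern)

    // 4. Start the engine so the audio and haptic hardware is ready.
    try engine.start()

    // 6. Ask for a callback once all players are done; here we stop the engine.
    engine.notifyWhenPlayersFinished { _ in
        return .stopEngine
    }

    // 5. Start the player in immediate mode.
    try player.start(atTime: CHHapticTimeImmediate)
    // Scheduled mode would pass an absolute time on the engine's clock instead,
    // for example: try player.start(atTime: engine.currentTime + 0.5)
    return engine
}
```

Returning `.leaveEngineRunning` from the finish handler instead would keep the engine ready for the next pattern.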

Those are the basic steps. Now let's see an example of an application which uses this system.

But before we do, we need to let you in on a secret. Demonstrating the use of an API which generates tactile feedback presents a unique problem: you in the audience can't feel it. The way we handled this was by adding an audio equivalent for each haptic event into the output, which will let you hear the effect of the haptics.

This application uses a simple physics engine to move the ball around the screen in response to the accelerometer. It generates haptic and audio feedback when the ball impacts the edges of the screen. The user has the sense they are feeling the impacts through the edges of the game wall as well as hearing them. The harder the ball hits the edge, the more intense the haptic and the louder the audio.

Okay, let's look at the code for this example to see how to integrate the Core Haptics API into your application. We'll see how event parameters are used to produce changes in the haptics and audio. The example here, all this code, is taken from the sample code on the website. But it's been edited down to show the important points.

First, we import the Core Haptics module. Along with the other modules we need for the application.

The CHHapticEngine is declared as a member variable of our ViewController because we want to be able to control its lifetime and have it exist for the lifetime of the application.

As discussed in our flow chart earlier, we set up the haptic engine in advance of when we want to use it. Here, we call a helper method at the point that the view has loaded.

In the helper method, we begin by creating the instance of the haptic engine and checking for possible errors. The engine is assigned to our member variable so we can keep it around.

It is optional, but extremely useful to assign a closure to the engine's stoppedHandler property. This will be called if the engine is stopped by some action other than the application itself asking it to. Some possible reasons that this might happen are an audio session interruption or the application being suspended.

We finish this method by starting the haptic engine and checking for possible errors. The engine will continue to run until the application, or a possible outside action stops it.

Note that the application tracks whether or not the engine needs to be restarted. Typically, you might leave the engine running for the entire time that you have any view visible on the screen which has haptic interaction.
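A minimal sketch of that setup might look like the following. The session keeps the engine in a view controller; a plain class is used here so the example stays self-contained, and the class name, method names, and the `engineNeedsStart` flag are assumptions for illustration.

```swift
import CoreHaptics

/// Hypothetical owner of the haptic engine. In an app this role would
/// typically be played by a view controller.
final class HapticsController {
    private var engine: CHHapticEngine?
    private(set) var engineNeedsStart = true

    func createAndStartEngine() {
        do {
            engine = try CHHapticEngine()
        } catch {
            print("Engine creation failed: \(error)")
            return
        }

        // Called when something other than the app stops the engine, such as
        // an audio session interruption or the app being suspended.
        engine?.stoppedHandler = { [weak self] reason in
            print("Engine stopped, reason code: \(reason.rawValue)")
            self?.engineNeedsStart = true
        }

        startEngineIfNeeded()
    }

    /// Starts the engine only when it is not already running.
    func startEngineIfNeeded() {
        guard engineNeedsStart, let engine = engine else { return }
        do {
            try engine.start()
            engineNeedsStart = false
        } catch {
            print("Engine start failed: \(error)")
        }
    }
}
```

Calling `startEngineIfNeeded()` again before playing a pattern is one way to recover after the stopped handler has fired.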

Here's the location in the application where the simple physics engine lets us know that the ball has collided with the wall. In this example, we want to generate our haptic and audio pattern to interactively track the velocity of the ball. So the pattern player and its pattern are created at the moment they are needed.

This method is responsible for creating the pattern to be played in response to the ball collision. In here, we will create a pattern with two events. One haptic and one audio.

We create a haptic event of type hapticTransient to produce that impactful feel.

And we give it two event parameters which configure the event's sharpness and intensity, which you've heard about already, based upon the velocity of the ball.

Then, we create the audio event with type audioContinuous, with a set of event parameters for volume and envelope decay, also calculated from the ball's velocity. The sustained parameter here ensures that the intensity of this event will die off to zero instead of continuing on for the length of the event.

We create a pattern containing these two events synchronized in time.

Finally, we create the pattern player from this pattern and return it to the caller. Back in the method that responds to the collision, the final step is to start the pattern player at time CHHapticTimeImmediate, which indicates to play it back as soon as possible with minimal latency.

Notice that the app does not hold on to the instance of this player. Its pattern is guaranteed to continue playing until it is finished. So the application can simply fire and forget it.
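The collision handling described above could be sketched like this. The function names, the normalized speed input, and the specific scaling constants are assumptions for illustration; the sample code on the website uses its own mapping from velocity to parameter values.

```swift
import CoreHaptics

/// Hypothetical pattern builder: one haptic transient and one audio event,
/// both starting at time zero, scaled by a collision speed in 0...1.
func collisionPattern(normalizedSpeed: Float) throws -> CHHapticPattern {
    let speed = min(max(normalizedSpeed, 0), 1)

    // Harder hits feel stronger and crisper (illustrative scaling).
    let hapticEvent = CHHapticEvent(
        eventType: .hapticTransient,
        parameters: [
            CHHapticEventParameter(parameterID: .hapticIntensity, value: speed),
            CHHapticEventParameter(parameterID: .hapticSharpness, value: 0.3 + 0.5 * speed),
        ],
        relativeTime: 0)

    // Harder hits are louder. Setting sustained to 0 lets the envelope decay
    // toward silence instead of holding for the whole event.
    let audioEvent = CHHapticEvent(
        eventType: .audioContinuous,
        parameters: [
            CHHapticEventParameter(parameterID: .audioVolume, value: speed),
            CHHapticEventParameter(parameterID: .decayTime, value: 0.1 * speed),
            CHHapticEventParameter(parameterID: .sustained, value: 0),
        ],
        relativeTime: 0,
        duration: 0.5)

    return try CHHapticPattern(events: [hapticEvent, audioEvent], parameters: [])
}

/// Fire and forget: the player is not stored after it has been started.
func ballDidCollide(engine: CHHapticEngine, normalizedSpeed: Float) {
    do {
        let player = try engine.makePlayer(with: collisionPattern(normalizedSpeed: normalizedSpeed))
        try player.start(atTime: CHHapticTimeImmediate)
    } catch {
        print("Failed to play collision pattern: \(error)")
    }
}
```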

And that's the basic recipe for playing your content using a pattern that is created programmatically within your app's code. Again, because this app is continuously interactive we don't stop the haptic engine until the game screen is no longer visible.

Now let's take a moment to talk about one of the most powerful capabilities of Core Haptics, dynamic parameters.

Dynamic parameters let you increase and decrease the value of the existing event parameters for all active and upcoming events in a pattern as it plays.

Dynamic parameters take effect at the timestamp you provide. You can adjust multiple different parameters at the same time or with any arbitrary time relationship.

You can include dynamic parameters when you create your pattern, or send them to your player in real time during playback.

This allows you to use a single pattern to generate an infinite number of haptic and audio variations by adjusting the pattern dynamically.

Let's take a look at an example. In this diagram, on the bottom, we have a haptic pattern that was designed with all haptic event intensities set to their maximum value. The first half are HapticTransients. The second half is the HapticContinuous.

We'd like to reduce the intensity of all the game's haptics temporarily. For example, if a character was speaking in the game.

I send a dynamic parameter for intensity with a value of 0.3 that takes effect at time 0.5 seconds. You can see that it reduced the intensity of the event at that time significantly, to about a third of what it was unmodified.
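In code, that example might be sketched as follows. The helper names are hypothetical, and the small arithmetic function only illustrates the assumption that the intensity control acts as a multiplier on each event's own intensity, which matches the "about a third" result described here.

```swift
import CoreHaptics

/// Illustration of the arithmetic only, assuming the control scales the
/// intensity each event was designed with.
func effectiveIntensity(eventIntensity: Float, control: Float) -> Float {
    eventIntensity * control
}

/// The dynamic parameter from the example: intensity control 0.3 at 0.5 seconds.
func duckingParameter() -> CHHapticDynamicParameter {
    CHHapticDynamicParameter(parameterID: .hapticIntensityControl,
                             value: 0.3,
                             relativeTime: 0.5)
}

/// Option 1: bake the dynamic parameter into the pattern when it is created.
func makeDuckedPattern(events: [CHHapticEvent]) throws -> CHHapticPattern {
    try CHHapticPattern(events: events, parameters: [duckingParameter()])
}

/// Option 2: send a parameter to a player that is already playing. Here the
/// relative time is zero, so it applies as soon as the player receives it.
func duckNow(_ player: CHHapticPatternPlayer) throws {
    let duck = CHHapticDynamicParameter(parameterID: .hapticIntensityControl,
                                        value: 0.3,
                                        relativeTime: 0)
    try player.sendParameters([duck], atTime: CHHapticTimeImmediate)
}
```

Sending a later parameter with a value of 1.0 would restore the designed intensities once the character has finished speaking.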

Finally, let's look at another way to create patterns.

So what exactly is AHAP? The Apple Haptic Audio Pattern is a specification for describing a Core Haptics pattern in a text-based format. It is built from nested key value pairs, which will become quite familiar to you once you start working with the classes that make up the Core Haptics API.

It is a schema for the widely established JSON file format, which means you already have quite a number of different frameworks that can read, write, and edit these files, including such things as Swift's Codable support.

AHAP makes it easy to share and edit haptic patterns because it is a format that all developers can agree on.

Loading your haptic patterns from external AHAP files allows you to separate your content from your application code.

Using the magic of a slide deck, we're going to create a simple AHAP file here.

We start with a version string. Which indicates which version of the system this pattern was designed for.

Next, we add the key for our pattern. Which will be an array of dictionaries.

We add our first event dictionary to our pattern array. This event has two required key value pairs. A time in seconds at which the event should happen relative to the start of the pattern and the type of event. This is a haptic transient event starting as soon as the pattern starts.

To this event, we add event parameters which will affect only this event. These are stored in their own array of dictionaries.

We add an event parameter to control the intensity of the event and another to control its sharpness.

We can add a second event in the same fashion. This one starts at an offset of 0.5 seconds from the first and is of type HapticContinuous. For the event parameters, we use the same as we had for the first event.

Events of type HapticContinuous and AudioContinuous require an event duration in addition to the time and event type. This duration value is always specified in seconds.

Here is a visual representation of the pattern we just created. You can see the two types of events: the HapticTransient at the very beginning and the HapticContinuous later, with their relative timing and duration and their intensity and sharpness parameter values.
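Written out as text, the file built up over the last few slides would look something like the AHAP embedded in the sketch below. The parameter values and the 0.25 second duration are assumptions chosen for illustration, and the version is written as the number 1.0, which is how it appears in AHAP files as far as I am aware. Because AHAP is plain JSON, the sketch checks the text with Foundation's `JSONSerialization` rather than anything specific to Core Haptics.

```swift
import Foundation

// The two-event pattern as AHAP text. Values are illustrative assumptions.
let ahapText = """
{
  "Version": 1.0,
  "Pattern": [
    {
      "Event": {
        "Time": 0.0,
        "EventType": "HapticTransient",
        "EventParameters": [
          { "ParameterID": "HapticIntensity", "ParameterValue": 0.8 },
          { "ParameterID": "HapticSharpness", "ParameterValue": 0.4 }
        ]
      }
    },
    {
      "Event": {
        "Time": 0.5,
        "EventType": "HapticContinuous",
        "EventDuration": 0.25,
        "EventParameters": [
          { "ParameterID": "HapticIntensity", "ParameterValue": 0.8 },
          { "ParameterID": "HapticSharpness", "ParameterValue": 0.4 }
        ]
      }
    }
  ]
}
"""

// Parse the text as ordinary JSON and list the event types it contains.
let root = try! JSONSerialization.jsonObject(with: Data(ahapText.utf8)) as! [String: Any]
let events = root["Pattern"] as! [[String: Any]]
let eventTypes = events.compactMap { entry in
    (entry["Event"] as? [String: Any])?["EventType"] as? String
}
print(eventTypes)
```

The same text saved with an `.ahap` extension is what an app would ship as a resource.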

That was a quick tour of AHAP. This diagram shows a summary of the AHAP file structure: a single pattern consisting of an array of event dictionaries, optional dynamic parameters, and optional use of parameter curves, which are an extension of dynamic parameters. You can read more about those in the information that we have on the website.

You can find a link to the full AHAP specification on our sessions page.

Also on our sessions page, you'll find a code example that shows how to create, load, and play the patterns described by AHAP files. This haptic sampler app includes a range of patterns that highlight the subtlety, dynamic range, and audio haptic sync that's possible with the Core Haptics API.
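For a sense of what loading and playing an AHAP file can look like, here is a minimal sketch. The function name and the idea of passing a resource name are assumptions for this example; the sampler app has its own structure.

```swift
import CoreHaptics
import Foundation

/// Hypothetical helper: plays an AHAP file that ships in the app bundle.
func playAHAP(named name: String, on engine: CHHapticEngine) {
    guard let url = Bundle.main.url(forResource: name, withExtension: "ahap") else {
        print("No AHAP file named \(name) in the bundle")
        return
    }
    do {
        // Make sure the engine is running, then let it load and play the file.
        try engine.start()
        try engine.playPattern(from: url)
    } catch {
        print("Failed to play \(name): \(error)")
    }
}
```

Keeping patterns in files like this means a designer can revise the feel without touching the application code.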

Thank you very much. And now, I'd like to return the stage to my colleague, Michael.

Thanks, Doug. So although we covered a lot of ground today there is still much more to discover with Core Haptics.

Check out the online documentation reference for details. Once you're up and running with the basics of specifying content and playing that content, you'll probably be wondering about the design principles for these joint haptic audio patterns.

You'll be wondering, do the rules and guidelines for sound design carry over to haptic design? What are some common pitfalls I should be aware of? The good news is that our Audio and Haptic Design teams have been doing this for years, and they've helped put together some advice and guidance in the updated Human Interface Guidelines, or HIG, for haptics, as well as in the accompanying talk at WWDC this year. Check it out.

So let's recap. Today, we talked about where haptics can help you reach for that next level of immersion and make your app interactions more effortless.

Having synchronized and complementary audio and haptics together is a particularly effective combination. But there haven't been APIs that allowed you to actually do this. With iOS 13, we now have the necessary ingredients to create these rich multimodal experiences. We have the vocabulary to describe haptic and audio events and a file format, AHAP. We've got a new performant API, Core Haptics, which is designed for low latency and real-time modulation. We've put together sample code, sample patterns, design guidelines, and support from Apple. And lastly, you've got an incredible audience and incredible hardware where you can feel your haptics as you intended: a huge installed base of Taptic Engines that give you the most powerful, expressive, and precise haptics hardware available. So please come on down to the labs on Thursday and Friday, where you can check out some of these haptics samples that we showed today and discuss your own ideas for your apps. You'll also find all of these guidelines and references online at our sessions page.

I know you're going to have a lot of fun creating and using these haptic patterns in your apps. We can't wait to hear and feel what you come up with. Thank you, and good night. [ Applause ]
