-

Advances in Camera Capture & Photo Segmentation

Powerful new features in the AVCapture API let you capture photos and video from multiple cameras simultaneously. Photos now benefit from semantic segmentation that allows you to isolate hair, skin, and teeth in a photo. Learn how these advances enable you to create great camera apps and easily achieve stunning photo effects.

Resources

- AVMultiCamPiP: Capturing from Multiple Cameras

- AVCam: Building a camera app

- AVFoundation

- Capture setup

- Presentation Slides (PDF)

Related Videos

WWDC19

WWDC17

-

Search this video…

Good afternoon. Welcome to session 225.

My name is Brad Ford. I work on the Camera Software Team.

Thank you for hanging in there till the bitter end. I know it's been a long day. We appreciate you staying with us for The Late, Late Show. And as 5:00 o'clock sessions go, this is a pretty good one.

We'll be introducing two exciting additions to the iOS Camera Stack today. I'll spend the first 40 minutes or so talking about Multi-Camera capture. And then I'll invite Jacob and David up to talk about Semantic Segmentation.

So first up, Multi-Camera Capture, or as we like to call it internally.

MultiCam.

MultiCam is our single-most requested third-party feature. We hear it year after year in the labs. So what we're talking about here is the ability to simultaneously capture video, audio, Metadata, depth, and photos from multiple cameras and microphones simultaneously.

Third parties aren't the only ones who benefit from this, though.

We've had many and repeated requests from first-party clients as well for MultiCam Capture. Chief among them is ARKit. And if you heard the keynote, you heard about the introduction of ARKit 3.

These APIs use front camera for face and pose tracking, while also using the back camera for World tracking, which helps them know where to place virtual characters in the scene by knowing what you're gazing at. So we've supported MultiCam on the Mac since the very first appearance of AVFoundation, way the heck back in Lion.

But on iOS, AVFoundation still limits clients to one active camera at a time.

And it's not because we're mean. There were good reasons for it. The first reason is hardware limitations.

I'm talking about cameras sharing power rails. And not physically being able to provide enough power to power two cameras simultaneously full bore. And the second reason was our desire to ship a responsible API.

One that would help you not burn the phone down when doing all of this processing power with multiple cameras simultaneously. So we wanted to make sure that we delivered something to you that would help you deal with the hardware, thermal, and bandwidth constraints that are reality in our world.

All right. So great news in iOS 13. We do finally support MultiCam Capture. And we do it on all recent hardware, iPhone XS, XS Max, XR, and the new iPad Pro. On all of these platforms, the aforementioned hardware limitations have been solved, thankfully.

So let's dive right into the fun stuff. We've got a new set of APIs for building MultiCamSessions.

Now, if you've used AVFoundation before for Camera Capture, you know that we have four main groups of classes: Inputs, outputs, the session, and connections.

The AVCaptureSession is the center of our world. It's the thing that marshals data.

It's the thing that you tell to start or stop running.

You add to it one or more inputs, AVCaptureInputs. One such is the AVCaptureDeviceInput, which is a wrapper for either a camera or a microphone.

You also need to add one or more AVCaptureOutputs to receive the data. Otherwise, those producers have nowhere to put it. And then the session automatically creates connections on your behalf between inputs and outputs that have compatible media types.

So note what I'm showing you here is the traditional AVCaptureSession, which on iOS only allows one camera input per session.

New in iOS 13: We're introducing a subclass of AVCaptureSession called AVCaptureMultiCamSession. So this lets you do multiple ins and outs.

AVCaptureSession is not deprecated. It's not going away. In fact, the existing AVCaptureSession is still the preferred class when you're doing single cam capture. The reason for that is that MultiCamSession, while being a power tool, has some limitations and I'll address those later.

All right. So let me give you an example of a bread and butter use case for our new AVCaptureMultiCamSession.

Let's say you want to add two devices-- one for the front, and one for the back camera to a MultiCamSession; and do two to VideoDataOutputs simultaneously, one receiving frames from the back camera, one from the front.

And then let's say, if you want to do a real time preview, you can add separate VideoPreviewLayers; one for the front, one for the back.

You needn't stop there, though.

You can do simultaneous MetadataOutputs, if you want to do simultaneous barcode scanning or face detection.

You could do multiple MovieFileOutputs, if you want to record one for the front and one for the back.

You could add multiple PhotoOutputs if you want to do real-time capture of photos from different cameras. So as you can see, these graphs are starting to look pretty complicated with a lot of arrows going from a lot of inputs to a lot of outputs.

Those little arrows are called AVCaptureConnections, and they define the flow of data from an input to an output.

Let me zoom in for a moment on the device input to illustrate the anatomy of connection.

Capture inputs have AVCaptureInput ports which I like to think of as little electrical outlets.

You have one outlet per media type that the input can produce.

If nothing is plugged into the port, no data flows from that port, just like an electrical outlet. You have to plug something in to get the electricity.

Now, to find out what ports are available for a particular input, you can query that input's ports property and it will tell you, "I have this array of AVCaptureInput ports. So for the Dual Camera, these are the ports that you would find. One for video, one for depth, one for Metadata objects such as barcode scanning and faces, and one for Metadata items, which can be hooked up to a MovieFileOutput.

Now whenever you use AVCaptureSessions addInput method to add an input to the session or addOutput to add an output to the session, the session will look for compatible media types and implicitly form connections if it can.

So here, we had a VideoDataOutput. VideoDataOutputs receive video, accept video. And we had an electrical plug that can produce video. And so the connection was made automatically.

That is how most of you are accustomed to working with AVCaptureSession, if you've worked with our classes before.

MultiCamSession is a different beast.

That is because inputs and outputs-- You have multiple inputs now with multiple outputs. You probably want to make sure that the connections are happening from A to A and B to B. And not crossing where you didn't intend them to. So when building a MultiCamSession, we urge you not to use implicit connection forming. But instead use these special purpose adders, addInputWithNoConnections, or addOutputWithNoConnections.

And there are likewise ones that you can use for VideoPreviewLayer, which are setSessionWithNoConnections. When you use these, it basically just tells the session: Here are these inputs, here these outputs. You now know about them but keep your hands off them. I'm going to add connections as I want to later on manually. And the way you do that is you create the AVCaptureConnection yourself by telling it, "I want you to connect this port or ports to this output." And then you tell the session, "Please add this connection." And now you're ready to go. That was very wordy. It's better shown than talked about. So I'd like to bring up Nik Gallo.

Also from the Camera Software group, to demonstrate AVMultiCamPiP. Nik? Thanks, Brad.

AVMultiCampPiP is an app that demonstrates streaming from the front and back cameras simultaneously. Here, we have two video previews; one displaying the front camera and one displaying the back camera. And when I double tap the screen, I can swap which camera appears full screen and which camera appears PiP.

There we go. Now, we can see here that Brad is live at Apple Park. And before I ask him a few questions, I will press the record button here at the bottom to watch this conversation later.

Hey, Brad.

So tell me how's it going over at Apple Park? Nik, it's pandemonium here at Apple Park. As you can see in front of the reflecting pool, there's all kinds of activity happening. I hear a rushing of water. It sounds like I'm about to be drenched at any moment.

I hear wild animals behind me, like ducks or something. I honestly fear for my life here. Well, Brad, that seems absolutely terrifying. Hope you stay safe out there. Okay, thanks. Got it.

So now that we finished recording the movie. Let's go take a look at what we just recorded.

Here we have the movie.

As you can see, when I swap between the two cameras, it swaps just like we did when using the app. And that's AVMultiCamPiP. Back to Brad.

Thanks, Nik. Awesome demo.

All right, so let's look at what's happening under the hood in AVMultiCamPiP.

So we have two device inputs: One for the front camera, one for the back camera, added, with no connections, as I mentioned before. We also have two VideoDataOutputs, one for each. And two VideoPreviewLayers. Now to place them on screen, it's just a matter of taking those VideoPreviewLayers and ordering them so that one is on top of the other and one is sized smaller. And when Nik double tapped on them, we simply repositioned them and reversed the Z ordering. Now there is some magic happening in the Metal Shader compositor code. There it's taking those two VideoDataOutputs and compositing them so that the smaller PiP is arranged within one frame. So it's compositing them to a single video buffer and then sending them to an AVAssetWriter where they are recorded to one video track in a movie.

This sample code is available right now. It's associated with this session. You can take a look and start doing your own MultiCam Captures. All right.

Time to talk about limitations. While AVMultiCamSession is a power tool, it doesn't do everything. And let me tell you what it does not do.

First up, you cannot pretend that one camera is two cameras.

AVCaptureDeviceInput API will let you create multiple instances for say the back camera. You could make ten of them if you want. But if you try to add all those instances to one MultiCamSession, it will say, uh-uh.

And it will throw an exception.

Please, only one input per camera in a session.

Also, you're not allowed to clone a camera to two outputs of the same type.

Such as taking one camera and splitting its signal to two VideoDataOutputs.

You can, of course, add multiple cameras and connect them to a VideoDataOutput each, but you cannot fan out from one to many.

You're also not allowed-- The opposite holds true as well. AVCaptureOutputs on iOS do not support media mixing. So all the data outputs only can take a single input.

You can't, for instance, try to jam two cameras sources into a single data output. It wouldn't know what to do with the second video since it doesn't know how to mix them.

You can, of course, use separate VideoDataOutputs and then composite those buffers in your own code, such as the Metal Shader Composite that we used in MultiCamPiP.

You can do that however you like. But as far as session building is concerned, do not try to jam multiple cameras into a single output.

All right. A word about presets.

The traditional AVCaptureSession has this concept of a session preset, which dictates a common quality of service for the whole session. And it applies to all inputs and outputs within that session. For instance, when you set the session preset to high, the session configures the device's resolution and frame rate, and all of the outputs so that they are delivering a high-quality video experience such as 1080p30.

Presets are a problem for MultiCamSession.

Think again about something that looks like this.

MultiCamSession configurations are hybrid. They're heterogeneous. What does it mean to have high quality for the whole thing? You might want to do different qualities of service on different branches of the graph. For instance, on the front camera, you might want to just do a low res preview, such as 640x480, while also simultaneously doing something really high quality, 1080p60, for instance, on the back. Well, obviously, we don't have presets for all of these hybrid situations.

We've decided to keep things simple in MultiCamSession. It does not support presets. It supports one, and one preset only, which is input priority. So that means it leaves the inputs and outputs alone. When you add them, you must configure the active format yourself.

All right, onto the Cost Functions.

I mentioned at the beginning that we took our time with this MultiCam support because we wanted to deliver a very responsible API, one that could help you account for the various costs that you incur when running multiple cameras and lighting up virtually every block on the phone.

So this is trite but true.

There is no such thing as a free lunch. And so this is the part of the session where I become your father and I'm going to give you the Dad Talk.

In the Dad Talk, I will explain how credit cards work, and how you need to be responsible with your money and live within your means, and, like, such things.

So it's a fact of life that we have limited hardware bandwidth on iOS. And though we have multiple cameras-- so we have multiple sensors-- we only have one ISP, or image signal processor.

So all of those sensors, all the pixels being -- going through those sensors need to be processed by a single ISP. And it is limited by how many pixels it can run per clock at a given frequency.

So there are limiters to the number of pixels that you can run at a time.

The contributors to the HardwareCosts are, as you would expect, video resolution.

Higher resolution means more pixels to cram through there.

The max frame rate. If you're delivering those pixels faster, it's got to do more pixels per clock as well. And then a third one, which you may or may not have heard of, is called Sensor Binning.

Sensor Binning refers to a way to combine information in adjacent pixels to reduce bandwidth.

So for instance, if we have an image here, and we do a 2x2 binning, it's going to take 4 pixels in squares and sum them into one so that we get a reduction in size by 4X.

It gives you a reduction in noise.

It gives you a reduction in bandwidth. It gives you 4X intensity per pixel. So there are a lot of great things about Sensor Binning. The downside is that you get a little reduction in image quality. So diagonal lines might look a little stair stepped.

But their most redeeming quality is that binned formats are super low power. In fact, whenever you use ARKit with the camera, you are using a binned format because ARKit uses binned formats exclusively to save on that power for all the interesting AR things that you'd like to do.

All right. How do we account for cost? Or how do we report those costs? MultiCamSession tallies up your HardwareCost as you configure your session.

So each time you change something, it keeps track of it. Just like filling up a shopping cart, or going to an online store and putting things into the cart before you pay for them, you know when you're getting close to your limit on your budget. And you can kind of try things out, and then put new things in or move old things out. You see the costs before you have to pay.

It's the same with MultiCamSession.

We have a new property called HardwareCost. And this HardwareCost starts at zero when you make a brand new session.

And it increments as you add more features, more inputs, more outputs. And you're fine as long as you stay under 1.0.

Anything under 1.0 is runnable.

The minute you hit 1.0 or greater, you're in trouble. And that's because the ISP bandwidth limit is hard. It's not like you can, you know, deliver every other frame.

No. This is an all-or-nothing proposal. You have to either make it, or you don't. So if you're over 1.0, and you try to run the AVCaptureMultiCamSession, it will say, "Uh-uh." It will give you a notification of a Runtime error, indicating that the reason it had to stop is because of a HardwareCost overage.

Now, you're probably wondering, "How do I reduce that cost?" The most obvious way you can do it is to pick a lower resolution.

Another way you can do it is if you want to keep the same resolution, if there was a binned format at the same resolution, pick that one instead. It's a little bit lower quality but way lower in power. Next, you would think that lowering the frame rate would help, but it doesn't.

The reason is that AVCaptureDevice allows you and has allowed you since, I think, iOS 4, to change the frame rate on the fly.

So if you have a 120 fps format, and you say, "Set the active format to 60," you still have to pay the cost for 120, not 60. Because at any point while you're running, you could increase the frame rate up to 120. We must assume the worst case.

But good news. We're now offering an override property on the AVCaptureDeviceInput.

By setting it, you can turn a high frame rate format into a lower frame rate format by promising that you will go no higher than a particular frame rate.

Now, this is a point of confusion in our APIs. We don't talk about frame rates as rates. We talked about them as durations. So to set a frame rate, you set one over the duration. That's the same as the frame rate. So if you want to take a 60 fps format and make it into a 30 fps format.

You do that by making a CMTime with 1 over 30, which is the duration. And then set that deviceInputs videoMinFrameDuration override to 30 fps. Congratulations. You've just turned a 60 fps format into a 30 fps format. And you only pay the hardware cost for 30. I should also mention that there is a great function in the AVMultiCamPiP app that shows how to iteratively reduce your cost. It's a recursive function that kind of picks things that are most important to it and it throttles down things that are less important until it gets under the HardwareCost.

Now next up is System Pressure Costs. This is the second-big contributor that we report. As you're well aware, phones are extremely powerful computers in little, bitty, thermally challenged packages.

And in iOS 11, we introduced camera system pressure states.

These help you monitor the camera's current situation.

Camera system pressure consists of system temperature. That is, overall OS thermals.

Peak power demands. And that has to do with the battery. How much charge does it currently have? Is it able to ramp up its voltage fast enough to meet the demands of running whatever you want to do right now? And the infrared projector temperature.

On devices that support TrueDepth Camera, we have an infrared camera as well as an RGB camera. Well, that generates its own heat. And so that's part of the contribution to system pressure states.

We have five of them. Nominal all the way up to Shutdown.

When the system pressure state is nominal, you're in great shape. You can do whatever you want. When it's Fair, you can still almost do whatever you want. But at Serious, you start getting into a situation where the system is going to throttle back. Meaning you have fewer cycles for the GPU. Your quality might be compromised. And, at Critical, you are getting a whole lot of throttling. At Shutdown, we cannot run the camera any longer for fear of hurting the hardware. So at Shutdown, we automatically interrupt your session. Stop it. Tell you that you're interrupted because of a system pressure state. And then we wait for the device to go all the way back to Nominal before we'll let you run the camera again.

That was all iOS 11. Now, in iOS 13, we're offering you a way to account for the system pressure cost up front, okay? Instead of just telling you what's happening right now, which may be influenced by the fact that you played Clash of Clans before you started the camera, we now have a way to tell you what the camera cost as far as system pressure is, independent of all other factors.

So the contributors to this cost are the same as the ones for hardware, along with a lot of other ones. Such as video image stabilization, or optical image stabilization. All of those cost power. We have a Smart HDR feature, etc. All of those things listed here are contributors to overall system pressure cost.

MultiCamSession can tally that score up front, just like it does for hardware, and it will only account for the factors that it knows about. So if you're going to be doing some wild GPU processing at the same time, the score won't include that. It will just include what you're doing with the camera. Here's how you use it.

By querying the systemPressureCost, you can find out how long you would be runnable in an otherwise quiescent system. So if it's less than 1.0, you can run indefinitely. You're a cool customer.

If it's between 1.0 and 2.0, you should be runnable for up to 15 minutes. 2.0 to 3.0, up to 10 minutes.

And higher than 3.0, you may be able to run for a short little bit. And, in fact, we will let you run the camera, even if you're over 3. But you have to understand that it's not going to stay cool very long. And once it gets up to a Critical or Shutdown level, your session will become interrupted. So we'll save the hardware, even if you don't want to. But hey, it's great. If you can get what you need to get done in 30 seconds of running at a very, very high system pressure cost, by all means, do that.

Now how do you reduce your system pressure while running? I'm not talking about while you're configuring your session. I'm talking about once you're already running and you notice that you're starting to elevate in system pressure. The quickest and easiest way to do it is to lower the frame rate. Immediately. That will relieve system pressure. Also, if you're doing things that we don't know about, such as heavy GPU or CPU work, you can throttle that back.

As a last resort, you might try disabling one or more of the cameras that you're using. AVMultiCamSession has a neat little feature that, while running, you can disable one of the cameras without affecting preview on the other. We don't shut everything down. So if for instance, you're running with the front and the back. You notice that you're way over budget, and you're soon going to go critical, you could choose to shut down the front camera. The back camera will keep previewing. It won't lose its focus, exposure, or white balance.

And when you shut down the last active input port on the camera that you want to disable by setting its input ports enabled property to false, we will stop that camera streaming and save a ton of power and give that system a chance to cool off.

All right. So I just talked about two very important costs, hardware and system pressure.

There are other costs that we are not reporting. I didn't want to trick you into believing that there aren't other things at work here. There are, of course, other costs, such as memory. But in iOS 13, we are artificially limiting the device combinations that we will allow you to run, the ones that we are confident will run, and that will not get you into trouble.

So we have a limited number of supported device combinations. Here, I'm listing the ones that are supported on iPhone XS. This is kind of an eye chart. I don't expect you to remember this. You can pause the video later. But there are six supported configs. And the simple rule to remember is that you're allowed to run two physical cameras at a time.

You might be questioning like, "Brad, what about config number one there? There's only one checkbox." That's because it's the Dual Camera. And the Dual Camera is a software camera that's actually comprised of the wide and the telephoto. So it is two physical cameras.

How do you find out if MultiCam is supported? Like I said, it's only supported on newer hardware. So you need to check if MultiCamSession will let you run multiple cameras or not on the device that you have.

There's a class method called isMultiCamSupported, which you can right away decide yes or no. And then further, when you want to decide, "Am I allowed to run this combination of devices together?" You can create an AVCaptureDeviceDiscoverySession with the devices that you're interested in. And then ask it for its new property, supportedMultiCamDeviceSets. And this will produce an array of unordered sets that tell you which ones you're allowed to use together.

Next up is a way that we are artificially limiting the formats that you're allowed to run.

The supported formats, last I checked on an iPhone XS, there were more than 40 formats on the back camera. So there are tons to choose from. But we are limiting the actual video formats allowed to run with MultiCamSession because these are the ones that we can comfortably run simultaneously on end devices. So, again, this is a bit of an eye chart, but I'm going to draw your attention to groups.

First group is the binned formats. Remember low power? Yay. These are our friends. At the sensor, you're getting that 2x2 binning. So you're getting a very low power.

All of these are available up to 60 fps. You've got choices from 640x480 all the way up to 1920x1440. Next group is the 1920x1080 at 30. This is an unbinned format. And this is the same as the one you would get if you chose the high preset on a regular traditional session.

This one is available for MultiCam use. The final one is 1920x1440 unbinned at 30 fps. This is kind of a good stand-in for the photo format.

We do not support 12 megapixel on end cameras. That would certainly do bad things to the phone. But we do allow you to do 1920x1440 at 30 fps. And notice it still allows you to do 12 megapixel high res stills. So this is a very good proxy for when you want to do photography with multiple cameras simultaneously.

Now, how do you find out if a format supports MultiCam? You just ask it. So while iterating through the formats. You can say isMultiCamSupported? And if it is, you're allowed to use it.

In this code here, I'm iterating through the formats on a device and picking the next lowest one in resolution that supports MultiCam, and then setting it as my active format. Last way that we're artificially limiting is because we need to report costs, and those costs are reported by the MultiCamSession, we're specifically not supporting on iOS multiple sessions with multiple cameras in an app. And we're also not supporting multiple cameras in multiple apps simultaneously. Just be aware that the support on iOS is still limited to one session at a time. But of course, you can do -- run multiple cameras at a time. Thus concludes the Dad Talk.

Okay? Write good code.

Be home by 11:00. If your plans change, call me. All right. All right, now back to the fun stuff.

Synchronized Streaming.

I talked a little bit about software cameras.

Dual Camera, for one, was introduced on iPhone 7 Plus. And it's now present on the iPhone XS and XS Max as well. And the TrueDepth camera is also another kind of software camera because it's comprised of an infrared camera and an RGB camera that is able to do depth by taking the disparity between those two.

Now, we've never given these special types of cameras a name.

But we're doing that now. In iOS 13, we're calling them virtual cameras.

DualCam is one of them. It presents one video stream at a time and it switches between them based on your field -- your zoom factor. So as you get closer to a 2X, it switches over to the telephoto camera instead of the wide camera. It also can do neat tricks with depth because it has two images that it can use to generate disparity between them.

But still, from your perspective, you've only been able to get one stream at a time. Because we have a name now, they are also a property in the API, which you can query. So as you're looking at your camera devices, you can find out programmatically is this one a virtual device? And if it is, you can ask it, "Well, what are your physical devices?" And in the API, we call this its constituentDevices.

Synchronized streaming is all about taking those constituent devices of a virtual device and running them synchronized. In other words, for the first time, we're allowing you to stream synchronized video from the wide and the tele at the same time. You continue to set the properties on the virtual device, not on the constituent devices.

And there are some rules in place.

When you run the virtual device, the constituent devices aren't allowed to run willy nilly.

They have the same active resolution. They have the same frame rate.

And at a hardware level, they are synchronized. That means that they are reading out. The sensor is reading out those frames in a synchronized fashion. So that the middle of -- middle line of the readout is exactly at the same clock time.

So, that means that they match at the frame centers. It also means that the exposure, white balance, and focus happen in tandem, which is really nice. It makes it look like virtually it is the same camera. It just happens to be at two different fields of view.

This is best shown rather than talked about. So, let's do a demo. This one's called AVDualCam.

There we are.

Okay, AVDualCam lets you see what a virtual camera sees by showing you a display of the two cameras running synchronized. And it does this by showing you several different views of those cameras.

Okay, here I've got the wide and the tele constituent streams of the Dual Camera running synchronized. On the left is the wide. And on the right is the tele.

Don't believe me? Here.

I'm going to put my finger over one side. Ooh. I'm going to put my finger over the other side. See? They're different cameras.

All I've done with the wide is zoom it so it's at the same field of view as the tele.

But you can notice that they're running perfectly synchronized. There's no tearing. There's no weirdness in the vertical blanking.

Their exposures and focuses change at the same time.

Now we can have a little bit more fun if we change from the side-by-side view to the split view.

Now, this is a little bit hard to see. But I'm showing the tele-- the wide on the left and the tele on the right. So I'm only showing you half of each frame.

Now if I triple tap, I bring up Distance-o-meter, which lets me change the plane of depth convergence for the two images.

This app knows how to register the two images relative to one another. So, it lets me play with the plane at which the depth converges. Kind of like with your eyes.

When you focus on something up close or far away, you're kind of changing that depth plane of convergence. So for instance, up close with my hand, I can find the place where the depth converges nicely. There we go. Now I've got one hand.

But that's not right for the car behind me. So I can keep going further -- be further away.

There we go. And that's not right for the car behind it.

So now I can pull that guy back too. And that's Dual Camera streaming-- Synchronized from the Dual Cameras.

Here's a diagram showing AVDualCam's graph.

Instead of using separate device inputs, it just has one.

So it's using a single device input for the Dual Camera. But it's sourcing wide and tele frames in a synchronized fashion to two VideoDataOutputs.

You'll notice that there is a little object, a little pill at the bottom called the AVCaptureDataOutputSynchronizer. I don't want to confuse you. That thing is not doing the hardware synchronization that I talked about. It's just an object that sits at the bottom of a session if you desire, which lets you get multiple callbacks for the same time in a single callback. So, instead of getting a separate VideoDataOutput callback for the wide and the tele you can slap a DataOutputSynchronizer at the bottom and get both frames for the same time through a single callback. So it's very handy that way.

Now below it, there's a Metal Shader Filter / Compositor that's doing some magic.

Like I said, it's knowing how to blend those frames together. And it decides where to render those frames to the correct places in the preview. And it also can send them off to an AVAssetWriter to record into a video track.

Now recall my earlier diagram.

I showed you a close-up view of the AVCaptureDeviceInput, specifically the Dual Camera one.

The ports property of the Dual Camera input exposes which ports you see there.

Anybody see two video ports there? I don't see two video ports. So how do we get both wide and tele out of those input ports that we see here? Is that one video port somehow giving us two? No. It's not giving us wide or tele. It's giving us whatever the Dual Camera decides is right for the given zoom factor. That's not going to help us get both constituent streams at the same time. So how do we do that? Well, I'll tell you.

But it's a secret. So you have to promise not to tell anybody.

Okay? Virtual devices have secret ports. Okay? These secret ports, previously unbeknownst to you, are now available. But you don't get them out of the ports array. You get them by knowing what to ask for.

So, instead of just getting an array of every conceivable type of port including ports that are not allowed to be used with Single cam session, you can ask for them by name. So here we have the dualCameraInput. And I'm asking for its ports with source device type wide-angle camera and source device type telephoto camera.

It goes, aha. Those are the secret ports that I know about. I'll give them to you now. Once you've got those input ports. You can hook them up to a connection the same way that you would when doing your own manual connection creation.

Then you're streaming from either the wide or the tele or both.

Now in the AVDualCam demo, I was able to change the depth convergence plane of the wide and tele cameras with the correct perspective. And you saw that it wasn't kind of moving and shaking all over. It was just moving along the plane that I wanted it to. It was just along the plane of the baseline. And I was able to do that because AVFoundation offers us some homography aids. Homography is, if you're unfamiliar with the term, it just relates two images on the same plane.

They are the basis for computer vision. They are common for such tasks as image rectification, image registration.

Now camera intrinsics are not new to iOS. We introduced those in iOS 11.

They're presented as a 3x3 matrix that describes the geometric properties of a camera, namely its focal length and its optical center seen here using the pinhole camera where you can see where it enters through the pinhole and hits the sensor, and that being the optical sensor, and the distance between the two being the focal length.

Now you can opt-in to receive per-frame intrinsics by messaging the AVCaptureConnection and saying you want to opt-in for intrinsic delivery. Once you've done that, then every VideoDataOutput buffer that you receive has this attachment on it. CameraIntrinsicMatrix, which again is an NS data wrapping a matrix float, 3x3, which is a SIMDI type.

You'll get -- when you get the wide frame, it has the matrix for the wide camera. When you get the tele frame, it has the matrix for the tele camera. Now new in iOS 13, we offer camera extrinsics at the device level. Extrinsics are a rotation matrix and a translation vector that are kind of crammed into one matrix together. And those describe the camera's pose compared to a reference camera. This helps you if you want to kind of relate where the two cameras are, both their tilt and how far away they are. So AVDualCam uses the extrinsics to know how to align the wide and the tele camera frames with respect to one another so it's able to do those neat perspective shifts. That was a very, very brief refresher on intrinsics and extrinsics. So I described them in absolutely excruciating detail two years ago in session 507. So I'd invite you to review that session if you have a very strong stomach for puns. Okay, the last topic of MultiCam Capture is Multi-Mic Capture.

All right. Let's review the default Behaviors of a microphone capture when using a traditional AVCaptureSession.

The mic follows the camera. That's as simple as I can put it. So if you have a front-facing camera attached to your session and a mic, it will automatically choose the mic that's pointed the same direction as the front camera.

Same goes for the back. And it will make a nice cardioid pattern so that it rejects audio out the side that you don't want.

That way, you're able to follow your subject, be it back or front. If you have an audio-only session, we're not really sure what direction to direct the audio. So, we just give you an omnidirectional field. And as a power feature, you can disable all of that by saying, "Hands off, AVCaptureSession. I want to use my own AV audio session and configure my audio on my own." And we'll honor that. So now comes the time for another dirty little secret.

There is no such thing as a front mic. I totally just lied to you.

In actuality, iPhones contain arrays of microphones. And there are different numbers depending on the devices. Recent iPhones happen to have four. iPads have five. And they are positioned at different strategic locations. On recent iPhones, you happen to have two that point straight out the bottom. And at the top, you have one pointing out each side. All of them are omnidirectional mics.

Now, the top ones do get some acoustic separation because they've got the body of the device in between them, which acts as a baffle. But it's still not giving a nice directional pattern like you would want. So what do you do to actually get something approximating a front or back mic? What you do, it's called Microphone Beam Forming. And this is a way of processing the raw audio signals to get them to be directional. And this is something that Core Audio does on our behalf.

Here, we've got two blue dots, which represent two microphones on either side of an iPhone. And the circles are roughly the pattern of audio that they are hearing. Remember, they're both omnidirectional mics. If we take those two signals, and we just simply subtract them. We wind up with a figure eight pattern, which is cool. It's not what we want, but it's cool.

If we want to further shape that, we can add some gain to the one that we want to keep before subtracting them. And now we wind up with a little Pac-Man ghost. And that's good. Now we've got rejection out the side that we don't want. But unfortunately, we've also attenuated the signal. So it's much quieter than we want.

But, if after doing all that, we apply some gain to that signal, we get a nice, big Pac-Man ghost. And now we've got that beautiful cardioid pattern that we want, which rejects out of the side of the camera that we don't want.

Now, this is extremely over simplified. There's a lot of filtering going on to ensure that white noise isn't gained up.

But essentially that is what is happening. And, up to now, only one microphone beam form has been supported at a time. But the good folks over in Core Audio land did some great work for this MultiCam feature. And as of iOS 13, we now support multiple simultaneous beam forming.

So going back to the AVCaptureSession, when you get a microphone device input, and you find its audio port, that port lives many lives. It can be the front, back, or omni depending on what cameras the session finds.

But when you're using the MultiCamSession, the behavior is rigid.

The first port, the one -- the first audio port you find is always for omni.

And then you can find those secret ports that I was talking about to get a dedicated back beam or dedicated front beam.

The way you do that is by using those same device input port getters; this time; by specifying which position you're interested in.

So you can ask for the front position or the back position. And that will give you the ports that you're interested in. And you'll get a nice back or front beam form.

Here's for the front.

And here's for the back. Now going back to the MultiCamPiP demonstration we had with Nik. We stuck to the video side while we were showing you the whizzy part of the graph. Now I'm going to go back and tell you what we were doing on the audio side.

We were running all the time a single device input with two beam forms, one for the back and one for the front. And we were running those to two different audio data outputs. This slide should say audio data outputs.

And then choosing between them at Runtime. So depending on which is the larger of the two, we would switch to back or front and give you the beam form that we desired.

There are a couple of rules to know about multi-mic capture. Beam forming only works with built-in mics. If you've got something external USB, we don't know what that is. We don't know how to beam form with it.

If you do happen to plug in something else, including AirPods, we will capture audio of course. But we don't know how to beam form. So we'll just pipe that microphone through all of the inputs that you have connected. Thus, ensuring that you don't lose the signal. And that's the end of the Multi-Camera Capture part of today's talk. Let's do a quick summary.

MultiCam Capture session is the new way to do multiple cameras simultaneously on iOS.

It is a power tool. But it has some limitations. Know them.

And thoughtfully handle hardware and system pressure costs as you're doing your programming. And if you want to do synchronized streaming, use those virtual devices with constituent device ports. And lastly, if you want to do multi-mic capture, be aware that you can use front or back-beam formed or omni. And with that, I'm going to turn it over to Jacob to talk about semantic segmentation mattes. Thank you.

Hi, I'm Jacob. I'm here to tell you about the semantic segmentation mattes. So first, I'm going to go through what are these new types of mattes. And then David is going to talk you through how to leverage Core Image to work with these new mattes.

So remember, in iOS 12, we introduced the portrait effects matte. So this was a matte designed explicitly to provide effects for portraits.

So we use it internally to render beautifully looking portrait mode photos and portrait lighting photos. So in taking a closer look at the portrait effects matte, you can see how it -- that clearly delineates the foreground subject from the background. So this is beautifully represented here as a black and white matte. So values of one indicating foreground and values of zero indicating background.

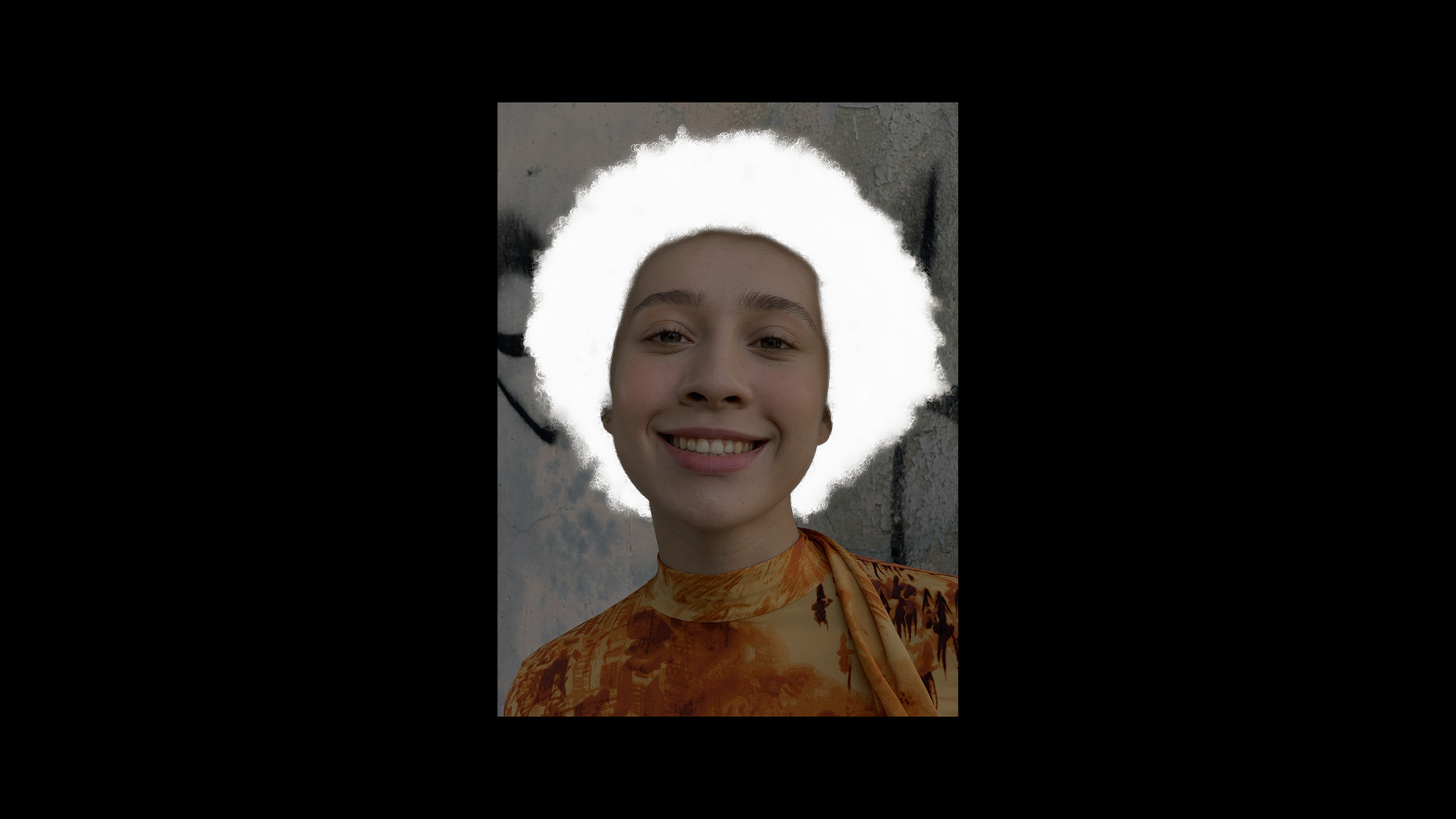

In iOS 13, we're taking this a step further with semantic segmentation mattes.

So we're introducing hair, -- skin, and teeth. So taking a closer look at the hair matte, for instance, you can see how this is beautifully separating the hair region from the non-hair regions. So we get great hair details against the background. And we get great separation between the non-hair regions and the hair.

Similarly for the skin regions where now we have alpha values indicating how much of a pixel is of type skin. So an alpha value of .7, for instance, would indicate that a that a pixel is 70% of type skin.

So we hope these new three types of -- three new types of mattes will give you the creative freedom to render some cool effects and beautiful-looking photos.

So a few things to notice-- That the mattes are half size of the original image. That means they're half in each dimension of the original image. And that means quarter resolution.

So another thing to remember is that that these segmentation mattes can actually overlap. So this is particularly true for the portrait effects matte and the skin matte that will inherently overlap.

So these mattes do not come for free. So we heavily leverage the Apple Neural Engines for machine learning spectral graph theory and looking a bit under the hood, what we do is we take the original size image. We feed it through the Apple Neural Engine. And together with the original-sized image, we render these high-resolution, high-quality, and with high-consistency segmentation mattes. These are then ready to be embedded into the HEIF or JPEG files as you know them, together with the original size, the image, and the depth as you know from iOS 11.

So there are two distinct ways to generate these new types of mattes. So one is that they're embedded in the old portrait mode captures. So you can grab them from those files. Or even better, you can write your own capture app and opt-in to these mattes on capture. So if you have files with the segmentation mattes in them, you can work with them through Core Image and Image IO. David is going to talk more about that. But first, I'm going to talk you through how to capture with the AVFoundation API.

There are four phases we're going to go through here that relates to the extension. So, the first is when we set up the AVCapturePhotoOutput. Second is when the capture request is being initiated in any point in the life cycle of your app. Then two of the callbacks. So one is when the settings are resolved for your capture. And the final one is the one the photo did finish processing. So, for full details on this, please refer to Brad's 2017 talk on this exact topic.

Yeah, let's go through the how to set up the AVCapturePhotoOutput. So this usually happens when you're setting or you are configuring your session. So you're already, at this point, done session that begin configuration. You've set your presets. You've added your device inputs. You add your AVCapturePhotoOutput. At this point is when you tell the API what superset of segmentation mattes are you're going to ask for at any point in life cycle of your app.

When you actually want to initiate your capture requests, you need to specify the AVCapturePhotoSettings. So this is where you tell the API, "This is what I really want in this particular capture." So, here again, you can specify all the ones that you already enabled. Or you can specify a subset, say hair or skin.

Now you initiate your capture request. So you give it the AVCapturePhotoSettings. And you give it the delegate where you want to have your callbacks.

So time passes.

And soon after, you will get that -- get a will begin capture for callback. This is when the API tells you you may have asked for something, but this is what you're actually going to get. So this is important for the portrait effects matte and the semantic segmentation mattes. Because if there are no people in the scene, you'll actually not get a matte here. So you need to check for the dimensions of the semantic segmentation mattes. There will be zero in such case.

More time passes. The photo did finish processing. So this is when you get the -- your AV semantic segmentation matte back. This is the variable matte in this case. So this new class had the same type of methods and properties as you know from the portrait effects matte. That means you can rotate according to EXIF data. You can get your CVPixelBuffer reference. Or you can get a dictionary representation for easy file IO. So for full walkthrough of the lifecycle of how to make these captures, please refer to the AVCam sample app. It has been updated with the semantic segmentation mattes and will take you through all these different steps.

I'm going to hand it over to David, who going to talk about the Core Image.

All right. Thank you very much. Now that we've learned how to capture images with semantic segmentation mattes, we get to have some fun and learn how we can leverage Core Image to apply some fun effects. Now, I'm going to have a demo next. But I should warn you if -- there's clowns in this image. So if you have any coulrophobia, or irrational fear of clowns, you know, avert your eyes. All right, so here we have an image that was captured in portrait mode on a device. And what we can see in this application is that we can now very easily see all the different semantic segmentation mattes that are present in this file.

We can use the traditional portrait effects matte. Or we can also see the skin matte. Or we can see my-- the hair matte or the teeth matte. And it's also possible to use Core Image to combine these various mattes into other mattes, such as this one I've synthesized by using logical operations to create a matte of just eyes and mouth.

If we go back to the main image, however, we see this is a picture of me In Apple Park. And one of the great things you could do with semantic -- with portrait effects mattes is you could add a background very easily. And as you can see here, we can put me in a circus tent. And while that really does look like a circus tent, I don't look like I fit in. So now we can use some fun effects. For example, we can make it look like I've got some clown makeup on.

Or if we want to go a little further, we can give myself some green hair. And lastly, we can use some of these other mattes to give myself some makeup.

So that's what I'd like to talk to you about today is how we can do these kind of fun effects in your application.

All right, so most of the clown references are gone now. So it's safe to look back. All right, so we're going to be talking about three things today. One is how you create matte images using Core Image, how you can apply filters to those images, and lastly, how you can save these into files. So firstly, let's talk about creating matte images using Core Image. There are two ways. One is you can create matte images by using the AVCapturePhoto APIs. And then, from that, you can create a Core Image. So, the code for this is very simple. What we're going to be doing is using the semanticSegmentationMatte API and specifying that we want to do the hair or the skin or the teeth.

And that returns an AVSemanticSegementMatteObject.

And from that, it's trivial to create a CIImage where we can just instantiate a CIImage from that object.

The other common way you're going to want to create matte images is by loading them from a HEIF or JPEG file.

These files have a main image you're familiar with, a typical RGB image. But they also have auxiliary images, such as the portrait effects matte, as well as the new mattes that we're talking about, the skin segmentation matte, and the hair, and the teeth.

The code for this is very simple. The traditional code to create a CIImage from a HEIF file is just to say CIImage and specify a URL.

To create these auxiliary images, all you do is make the same call and provide an options dictionary, specifying which matte image you want to return. So we can specify the auxiliary segmentation hair matte.

Or if we want, we can get the mattes for the other semantic segmentations.

So very simple, just a couple lines of code.

The next thing we want to do is talk about how you can apply effects to these images.

So, I showed a bunch of effects. I'm going to talk about one in a little bit of detail. What we're going to do is we're going to start with a base RGB image, and then we're going to apply some effects to that. Let's say we want to do the washed-out clown white makeup.

So, I'm going to apply some adjustments to that. Those adjustments, however, apply to the entire image. So we want those to be limited to just the skin area.

So, we're going to use the skin matte.

And then we're going to combine these three images to produce the result we want.

Let me walk you through the code for it because it's actually quite simple.

But first, I want to talk about the top feature requests we've had for Core Image, which is to make it easier for people to discover and use the 200-plus built-in filters we have. And that is the new header called CoreImage.CIFilterBuiltins. And these allow you to use all of the built-in filters without having to remember the names of the filters or the names of the inputs.

So it's really great.

So let me show you some code that will use this new header.

So the first thing we're going to do is create the base image. And we're just going to call image with contents of URL. And that will produce the traditional RGB image.

Now, we're going to start applying some effects. So the first thing I want to do is I'm going to convert it to grayscale. And I'm going to use a filter called the maximum component.

And I'm going to give that filter an input image of the base image.

And then I'm going to ask for that filters output, and that produces an image that looks grayscale like this.

This doesn't look quite bright enough to look like clown makeup. So we're going to apply an additional filter. We're going to say use the gamma adjustment filter. And the input to this will be the previous filter's output, and then we're going to specify the power for the gamma function, and ask for the output image.

And you'll notice it's now very easy to specify the power for the gamma filter. It's a float rather than having to remember to use an NS number.

So that's the first part of our effect.

The next thing we want to do is start by getting the skin segmentation matte. So again, as I described earlier, we're going to start with a URL to specify that we want the skin matte. However, when we get this image, you notice it's smaller than the other image. As we mentioned before, these are half size by default.

So we need to scale that up to match the image, the main image size. So we're going to create a CGAffineTransform that scales from the matte size to the base image size. And then we're going to apply a transform to the image. And that produces a new image, which, as you expect, matches the correct size.

The next step we're going to do is start combining these two.

And we're going to use the blendWithMask filter. And this is great. And we use this throughout the sample I just showed.

We're going to specify the background image to be the base RGB image, which looks like this.

Next, we're going to specify the input image, which will be the foreground image, which is the image which has the white makeup applied. And lastly, we're going to specify a mask image, which is the image that I showed previously.

Given these three inputs, you can ask the blend filter for its output. And the result looks like this.

Now, as you can see, this is just the starting point. And you can combine all sorts of interesting effects to produce great results in your application.

Once you're done applying these effects, you want to save them.

And most typically, you want to save them as a HEIF or a JPEG file, which supports saving auxiliary images as well.

So, in addition to the main image, you can also store the semantic segmentation mattes so that either your application or other applications can apply additional effects.

The code for this is very simple. You use this Core Image API writeHEIFRepresentation, and, typically you specify the main image, the URL that you want to save it to. And then the pixel format that you want it to be saved as. And the color space you want it to be saved as.

And what I want to highlight today is another set of options that you can provide when you're saving the image.

So, for example, you can specify the key semantic segmentation skin matte. And specify the skin image, or the hair image, or the teeth image. And all four of these images will be saved into the resulting HEIF or JPEG file.

Now there's an alternate way of getting this result, which is if you want, you can save a main image and specify the segmentation mattes as AVSemanticSegmentationMatte objects. This again, the API is very simple. You specify the URL, the primary image, the pixel format, and the color space.

In this case, if you want to specify these objects to be saved in the file, you just say AVSemanticSegmentationMattes, and you provide an array of mattes.

So, that's what you can do using Core Image with these mattes. What I've talked about today is how to create images for mattes, how to apply filters, and how to save them. I will however, mention that the sample app I showed you has been written as a Photos app plugin. And if you want to learn about how you can do that in your application so that you can save these images not just to HEIFs but also into the user's photo library, I recommend you consult these earlier presentations, especially the introduction to the photos frameworks from WWDC in 2014.

All right, and thank you all very much. I really look forward to seeing what you do with these great features. Thanks.

[ Applause ]

-