Advances in HTTP Live Streaming

HTTP Live Streaming allows you to stream live and on-demand content to global audiences. Learn about great new features and enhancements to HTTP Live Streaming. Highlights include support for HEVC, playlist metavariables, IMSC1 subtitles, and synchronized playback of multiple streams. Discover how to simplify your FairPlay key handling with the new AVContentKeySession API, and take advantage of enhancements to offline HLS playback.

Hey there! Hi, everyone. Good afternoon, and welcome to this year's edition of "What the Heck Have Those HLS Folks Been Up To Lately."

It wouldn't fit on the slide, though, so you kind of get what you get. My name is Roger Pantos. I'm going to be your host here tonight, and we have a bunch of stuff to talk about: some new codecs that are interesting, some new streaming features, and some enhancements to our APIs.

But first, we have an announcement of our own to make. I am happy to announce that the IETF has approved the HLS spec for publication as an RFC. What this means is that the current draft published on the site now, which is -23, will move through the IETF publication process, and once it does, it will be assigned an RFC number.

Now, we've been refreshing the draft for 8 or 9 years, so there's a bit of a question: why now? Why publish an RFC? One reason is that we have heard feedback that it's a little scary to be writing to a spec that's continuously in draft mode. We get that. Publishing the spec as an RFC will allow it to serve as a stable reference going forward. That means you'll be able to build on it with confidence and cite it in other specifications, and we hope it will help improve things a little bit in some areas of the industry.

Now, are we going to stop improving HLS? No, of course not. We're going to continue to evolve it and make it better and better for the user's streaming experience, starting with the things we're going to talk about today. The way we're going to do that is to introduce a new Internet-Draft that builds on the coming RFC as a baseline, so keep an eye out for that.

Okay. Now, let's move to the other big news of the conference, which is HEVC. As you heard at the keynote and elsewhere, Apple has chosen HEVC as our next-generation video codec. Why did we do it? In one word, efficiency, and primarily encoding efficiency. HEVC is roughly 40% more efficient than AVC. It depends on your content and on how good your encoder is, but 40% is a nice ballpark figure.

For those of us who spend our time schlepping media over the network, that's exciting. First of all, it means your users are going to see startup at a decent quality 40% faster. And when the player adapts its way all the way up, they'll see content that looks 40% better.

So that's an important thing for us. HEVC is good. Where can you get it? Well, as we've said, we are making HEVC widely available. On our newest iOS devices, those with the A9 chip and later, and on our latest generation of Macintoshes, HEVC support is built into the hardware, and that includes support for FairPlay Streaming. Even on older devices that don't have that hardware support, we are still going to deploy a software HEVC codec. That will be on all iOS devices receiving iOS 11, on the Apple TV with tvOS 11, and on Macintoshes that are upgraded to High Sierra.

So, HEVC is going to be in a lot of different places, and we'd like you to use it. To use it with HLS, there are a few things you need to keep in mind. The first is that, for a lot of people, HEVC represents an entirely new encode of their content. On the bright side, that means there's no compatibility burden. So we decided it was a good time to refocus our attention on a single container format. We looked at the alternatives and decided that fragmented MPEG-4 had the most legs going forward. That means if you're going to deploy your HEVC content with HLS, it has to be packaged as MPEG-4 fragments.

Another nice thing is that this makes the encryption story a little simpler, because our old buddy Common Encryption in cbcs mode works the same way with HEVC as it does with H.264. So there are no new rules there; you just do to your HEVC bitstreams the same thing you do to your H.264 ones. As with any new codec, you're going to be deploying it to an ecosystem where some devices can't speak HEVC. So it is critically important that you label your HEVC variants, so that devices that don't understand HEVC can steer around them. The way to do that in HLS, of course, is with the CODECS attribute in your master playlist. I've got an example here of a CODECS attribute for HEVC. It has a few more fields than the H.264 one, but it's not too bad, and the entire format is documented in the HEVC spec.

So, speaking of older clients and H.264, there is naturally a compatibility question: can you deploy a single asset to both older and newer clients? And the answer is yes.

Here we go. You can mix HEVC and H.264 variants in the same master playlist, and that goes for both the regular video variants and the I-frame variants. You can have HEVC and H.264 versions of those as well.

As I said, you do need to package your HEVC in MPEG-4 fragments. For backward compatibility, though, your H.264 can be in either transport streams or fragmented MPEG-4; your choice. Again, this makes it even more critically important that you label your variants so that we know what is what.
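To make the labeling point concrete, here is a minimal Python sketch of a toy client that filters a mixed HEVC/H.264 master playlist using its CODECS attributes. The playlist, URIs, and codec strings are illustrative assumptions; the `hvc1` string is a commonly used example, not the one from the slide. `playable_variants` is a hypothetical helper, and real players such as AVFoundation apply far more logic than a prefix check.

```python
import re

# Illustrative master playlist mixing an HEVC variant (which must be fMP4)
# with an H.264 variant. URIs, bandwidths, and codec strings are made up.
MASTER = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=2000000,CODECS="hvc1.2.4.L123.B0,mp4a.40.2",RESOLUTION=1280x720
hevc/720p/prog_index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=3000000,CODECS="avc1.64001f,mp4a.40.2",RESOLUTION=1280x720
avc/720p/prog_index.m3u8
"""

# NAME=VALUE pairs; quoted values may contain commas, as CODECS does.
ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def parse_attributes(attr_list):
    """Parse an HLS attribute list into a dict, dropping surrounding quotes."""
    return {name: value.strip('"') for name, value in ATTR_RE.findall(attr_list)}


def playable_variants(master_text, supported_prefixes):
    """Return variant URIs whose every declared codec this toy client can decode.

    This toy client skips variants with no CODECS attribute, since it cannot
    tell whether it is able to play them.
    """
    prefixes = tuple(supported_prefixes)
    lines = [line.strip() for line in master_text.splitlines() if line.strip()]
    uris = []
    for tag, uri in zip(lines, lines[1:]):  # each tag paired with the next line
        if not tag.startswith("#EXT-X-STREAM-INF:"):
            continue
        attrs = parse_attributes(tag.split(":", 1)[1])
        codecs = [c.strip() for c in attrs.get("CODECS", "").split(",") if c.strip()]
        if codecs and all(c.startswith(prefixes) for c in codecs):
            uris.append(uri)
    return uris


# A client without HEVC support steers around the HEVC variant.
print(playable_variants(MASTER, ["avc1", "mp4a"]))  # ['avc/720p/prog_index.m3u8']
```

An HEVC-capable client that also lists `hvc1` would get both variants back and could then choose between them on bandwidth.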

And finally, in case you don't know, we have a document called the HLS Authoring guidelines, best practices for Apple TV and so on. (I think we changed the name.) But it doesn't matter, because there's a video-on-demand talk available today called HLS Authoring Update, and we've updated the guide for HEVC. The authoring guide now has some additional considerations for HEVC, as well as an initial set of recommended bitrate tiers to get you going. So check out that talk. I hear the guy giving it is awesome.

Next, we've got a new subtitle format called IMSC (TTML Profiles for Internet Media Subtitles and Captions). Many of you will not have heard of IMSC. In the consumer space it's not quite as big as VTT yet, but it is a grandchild of a better-known format called the Timed Text Markup Language, or TTML. TTML is an extremely expressive, not particularly lightweight authoring language, primarily for subtitles and captions. It's used for mezzanines, interchange, and that kind of thing. What the W3C Timed Text Working Group did was take TTML and slim it down, streamlining it for delivering regular captions to consumers over the Internet. That is IMSC.

Now, we already do VTT, so this raises the obvious question: how is IMSC different? The primary difference is that IMSC has much more extensive styling controls than VTT does. VTT has a few basic styling controls and leans on CSS for the rest. IMSC has a much more self-contained set of styling features, focused on the kinds of styling you need for subtitles and captions. It's gotten a certain amount of traction, particularly in the broadcasting industry; so much so that it was chosen last year as the baseline format for MPEG's Common Media Application Format, or CMAF, which we also told you about last year.

So what we're doing in iOS 11 and the other releases is rolling out a first-generation, ground-up implementation of IMSC. That's in your seed today, and we expect to refine it going forward.

Just as with HEVC, there are a few things you need to know about IMSC in order to use it with HLS. The first is how it's packaged. Unlike VTT, where the segments are just little text files, the carriage of IMSC is defined by MPEG-4 Part 30. What it comes down to is XML text inside MPEG-4 fragments, so it takes advantage of all the MPEG-4 timing facilities. I say text because IMSC actually defines two profiles, an image profile and a text profile, and our client only supports the text profile. You should label your playlist, because you face the same issue of older clients not understanding IMSC. When you do, you'll want to add the CODECS value for IMSC. I've included a sample here, stpp.ttml.im1t, which essentially says that the playlist contains subtitles conforming to the text profile of IMSC1.

Now, I've been talking about IMSC and HEVC in the same breath, but I want to emphasize that they're not linked. You can use them independently: HEVC with VTT, or IMSC with H.264. You can use them all together. You can even have a single playlist that has both VTT and IMSC, so that newer clients get the benefits of IMSC styling and older clients continue to use VTT. Let's take a look at a playlist and see what that looks like.

I've got a fragment of a master playlist here, and that first set of lines should be pretty familiar to you. That's what your master playlist would look like if you had a video variant called bipbop gear1 with VTT-based subtitles. The next set of tags has the same video tier, but you can see that its CODECS attribute labels it as IMSC. So the first one will pull down the VTT.m3u8 playlist, and the second will pull down the IMSC playlist if the client can understand it. If we dive into those two media playlists, they're actually pretty similar. The VTT one is, as you'd expect, just a list of .vtt segments. The IMSC one is a list of .mp4 segments, because they're MPEG-4 fragments. It also has an EXT-X-MAP tag, because fragmented MPEG-4 requires us to be able to point at the movie box. Other than that, they're very similar.

So IMSC and VTT are very similar; they do the same thing. Why would I switch my HLS streams to IMSC? First of all, if you want more styling control and you don't have an entire CSS parser to lean on in your playback devices, IMSC can be attractive.

A second good reason is that you may already be authoring your captions in TTML, or perhaps getting them from a service provider in that format. You may find that translating TTML to IMSC is both simpler and higher fidelity than translating it to VTT, because TTML and IMSC are much more similar formats. A final reason is that you may find yourself producing IMSC1 streams anyway. We mentioned that CMAF requires IMSC for captions in CMAF presentations. If you want to take advantage of the pool of compatible devices that we hope CMAF will produce, you're going to end up with IMSC1 streams. Then you may be able to drop your VTT streams, which would simplify your tool chain and your production workflow. Having said all of that, maybe none of it applies to you, in which case sticking with VTT is a fine choice. It's going to continue to be around. And if you're mainly focused on the North American market, CEA-608 is fine too. It's not going anywhere. So you've just got different choices.
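The VTT-plus-IMSC master playlist described here can be modeled with a similar sketch. This hypothetical Python helper, `subtitle_playlist`, picks which subtitle media playlist a toy client would load. An older client that doesn't list `stpp.ttml.im1t` among its codecs only ever sees the VTT variant, while a newer one may prefer IMSC. The URIs, group IDs, and preference rule are assumptions for illustration, not AVFoundation's actual selection logic.

```python
import re

# Illustrative master playlist: the same video tier listed twice, once with
# VTT subtitles and once with IMSC1 text-profile subtitles (stpp.ttml.im1t).
MASTER = """#EXTM3U
#EXT-X-MEDIA:TYPE=SUBTITLES,GROUP-ID="vtt",NAME="English",LANGUAGE="en",URI="vtt.m3u8"
#EXT-X-MEDIA:TYPE=SUBTITLES,GROUP-ID="imsc",NAME="English",LANGUAGE="en",URI="imsc.m3u8"
#EXT-X-STREAM-INF:BANDWIDTH=1000000,CODECS="avc1.4d401e,mp4a.40.2",SUBTITLES="vtt"
gear1/prog_index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=1000000,CODECS="avc1.4d401e,mp4a.40.2,stpp.ttml.im1t",SUBTITLES="imsc"
gear1/prog_index.m3u8
"""

ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def attrs_of(tag_line):
    """Attribute dict for a tag line such as '#EXT-X-MEDIA:TYPE=...'."""
    return {k: v.strip('"') for k, v in ATTR_RE.findall(tag_line.split(":", 1)[1])}


def subtitle_playlist(master_text, supported, prefer="stpp.ttml.im1t"):
    """Return the subtitle playlist URI a toy client would load, or None.

    `supported` is the set of exact codec strings the client understands.
    Among playable variants, those declaring `prefer` are tried first.
    """
    groups, variants = {}, []
    for line in master_text.splitlines():
        if line.startswith("#EXT-X-MEDIA:"):
            a = attrs_of(line)
            if a.get("TYPE") == "SUBTITLES":
                groups[a["GROUP-ID"]] = a["URI"]
        elif line.startswith("#EXT-X-STREAM-INF:"):
            a = attrs_of(line)
            codecs = [c.strip() for c in a.get("CODECS", "").split(",") if c.strip()]
            variants.append((codecs, a.get("SUBTITLES")))
    playable = [v for v in variants if all(c in supported for c in v[0])]
    playable.sort(key=lambda v: prefer not in v[0])  # stable sort: preferred first
    for _, group in playable:
        if group in groups:
            return groups[group]
    return None


old_client = {"avc1.4d401e", "mp4a.40.2"}
print(subtitle_playlist(MASTER, old_client))                         # vtt.m3u8
print(subtitle_playlist(MASTER, old_client | {"stpp.ttml.im1t"}))    # imsc.m3u8
```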

Now, I've been talking a lot about IMSC1, so there might be a lingering question: is there an IMSC2? And the answer is kind of. It hasn't been finalized yet, and it's still working its way through the process, but the Timed Text Working Group is planning on defining an IMSC2. One of its features we're keeping an eye on is a set of additional styling controls aimed particularly at advanced Japanese typography features, such as shatai and tate-chu-yoko. So the short story is that we expect the IMSC story to evolve over the next couple of years; keep an eye out for it.

Okay. So that's our codecs story. Now let's talk about some streaming features. First, I'd like to talk about something we hope will make life a little easier for the long-suffering producers of live streams, by helping them maintain a robust experience. HLS players are usually pretty good when everything on the back end is running tickety-boo. But when things start to fall over on the back end, clients could actually do a little better at helping things along. Let me show you what I mean.

So here we have your typical live HLS playlist. It's got a ten-second target duration, so the segments are 10 seconds or thereabouts, and after 10 seconds you might want to reload it. File sequence 12 is at the bottom there, but oh, now file sequence 13 appears. Ten seconds later you reload again, and file sequence 14 appears. Those things aren't appearing by magic, of course. You've got some encoder worker somewhere that's chewing on a media source, and every 10 seconds it's writing a new segment file to the CDN. What would happen if, for whatever reason, the encoder suddenly rebooted? Or maybe you knock your microwave dish with your elbow and lose your media feed. Well, prior to this, HLS clients had no way of knowing that had happened, so they had no way to help out.

But now we've defined a new tag, the GAP tag (EXT-X-GAP). When you lose your encoder or your media source, your packager on the back end can continue writing segments. Instead of writing the media data, though, it can simply write a dummy URL and tag it with EXT-X-GAP. It can keep doing this as long as encoding for that stream is down, so 10 seconds later you might get another segment that is marked as a gap. This tells the player that the stream is still alive, still chugging along, and still updating, but things aren't so good in actual media-data land. Once things are restored (your encoder comes back, you straighten out your microwave dish, or what have you), the packager can continue producing segments as it did before.

So, what does the GAP tag mean to you as a client? Primarily, it means that there's no media data there, so as a first approximation the player shouldn't attempt to download it, because it's not there. More interestingly, as soon as the player sees a GAP tag appear in a media playlist, it can go off and try to find another variant that doesn't have the same gaps, because you may have multiple redundant encoders producing different variants. So maybe we're playing the 2-megabit stream, and when we look at the 1-megabit stream we find that, oh, actually it doesn't have a gap. Then we can cleanly switch down to it and play through on the 1-megabit stream. Once we're past the gap, we can switch back up to the 2-megabit stream, and it's all good. The user won't even notice. We also have a fallback case. You might have just one encoder, or, like I said, you might have knocked out your media source, so you may have no media at all for the entire gap. In that case, in a live stream, our player will just continue to play through silence until media comes back, and then resume the presentation.
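Here is a minimal sketch of the client-side behavior just described, assuming two redundant variants whose media playlists are already loaded. The `EXT-X-GAP` tag marks the next segment as having no media, and the toy chooser falls back to the highest-bandwidth variant that has real media at that media sequence number. The playlists and URIs are made up, and `parse_segments` and `choose_segment` are hypothetical helpers, not part of any Apple API.

```python
# Illustrative live media playlists for two redundant variants. The 2 Mb/s
# variant lost its encoder for segment 13, so its packager wrote a
# placeholder URI marked with EXT-X-GAP. All URIs are made up.
HIGH = """#EXTM3U
#EXT-X-TARGETDURATION:10
#EXT-X-MEDIA-SEQUENCE:12
#EXTINF:10.0,
high/fileSequence12.ts
#EXT-X-GAP
#EXTINF:10.0,
high/missing.ts
"""

LOW = """#EXTM3U
#EXT-X-TARGETDURATION:10
#EXT-X-MEDIA-SEQUENCE:12
#EXTINF:10.0,
low/fileSequence12.ts
#EXTINF:10.0,
low/fileSequence13.ts
"""


def parse_segments(playlist_text):
    """Map media sequence number -> (uri, is_gap)."""
    sequence, gap_pending, segments = 0, False, {}
    for line in (raw.strip() for raw in playlist_text.splitlines()):
        if line.startswith("#EXT-X-MEDIA-SEQUENCE:"):
            sequence = int(line.split(":", 1)[1])
        elif line == "#EXT-X-GAP":
            gap_pending = True  # applies to the next segment URI only
        elif line and not line.startswith("#"):
            segments[sequence] = (line, gap_pending)
            sequence, gap_pending = sequence + 1, False
    return segments


def choose_segment(variants, sequence):
    """variants: [(bandwidth, playlist_text)]. Prefer the highest-bandwidth
    variant that has real media (no GAP) at `sequence`."""
    for bandwidth, text in sorted(variants, key=lambda v: v[0], reverse=True):
        segment = parse_segments(text).get(sequence)
        if segment is not None and not segment[1]:
            return bandwidth, segment[0]
    return None  # every variant has a gap here; a live player plays through it


variants = [(2_000_000, HIGH), (1_000_000, LOW)]
print(choose_segment(variants, 12))  # (2000000, 'high/fileSequence12.ts')
print(choose_segment(variants, 13))  # (1000000, 'low/fileSequence13.ts')
```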

Now, the new GAP tag, among other things, is described in a new beta version of the HLS spec that I was up late last night writing. I think we're posting it today, which is awesome. I can't wait to see it.

Yeah. Okay. Feedback, of course, is welcome. So that's the GAP tag. Let's talk about another new feature, and this one is also aimed at your back-end folks. We are adding support for simple variable substitution in m3u8 playlists, and to do that we borrowed a little bit of syntax from PHP. If you see something like that highlighted bit in a playlist, it's saying: take the name surrounded by the braces and replace it with the value of the variable with that name, here filename. So if the variable's value happens to be foo, you end up with the string foo.ts.

To define these variables we defined a new tag, EXT-X-DEFINE. It's pretty simple, and I'll show it to you in a second. It either defines a variable inline in the playlist or imports one. This is what makes things interesting. Remember I said this makes life a little better for people producing streams? How does it do that? Well, if you've got big honking URLs, you could use variables to make your playlist shorter, but gzip already does a better job of that. What's interesting is being able to define a variable in a master playlist and use it in a media playlist. That lets you construct your media playlists ahead of time, with little placeholders that are filled in, in a late-binding way, when your master playlist is generated. For instance, you could have a bunch of variable references in the media playlists on your CDN and generate your master playlist dynamically from your application. At that point you can say "I want my variable to be this," and suddenly all of your media playlists on the CDN take advantage of it.

So let's see what that looks like. Here's a master playlist; it's a pretty simple example. I've got a define tag here with two attributes: the NAME attribute says the variable name is auth, and the VALUE attribute holds the definition of an auth token.

You can use this auth variable in various places in the master playlist. For instance, in my gear1 entry here, I've decided to tack the auth token onto the URL of the first variant. Now let's imagine that we load that media playlist.
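As a rough illustration of the define-and-import pattern, here is a Python sketch that resolves `{$name}` references using `EXT-X-DEFINE` with `NAME`/`VALUE` and `IMPORT` attributes. The playlists, the auth value, and the `resolve_uris` helper are hypothetical. For brevity the sketch substitutes only URI lines; the spec also allows references in certain attribute values.

```python
import re

DEFINE_ATTR = re.compile(r'(NAME|VALUE|IMPORT)="([^"]*)"')
VARIABLE_REF = re.compile(r"\{\$([A-Za-z0-9_-]+)\}")

# Hypothetical playlists: the master defines `auth`; the media playlist defines
# its own `path` and imports `auth` from whichever master playlist loaded it.
MASTER = """#EXTM3U
#EXT-X-DEFINE:NAME="auth",VALUE="token=abc123"
#EXT-X-STREAM-INF:BANDWIDTH=1000000
gear1/prog_index.m3u8?{$auth}
"""

MEDIA = """#EXTM3U
#EXT-X-DEFINE:NAME="path",VALUE="https://cdn.example.com/bipbop/gear1"
#EXT-X-DEFINE:IMPORT="auth"
#EXT-X-TARGETDURATION:10
#EXTINF:10.0,
{$path}/fileSequence12.ts?{$auth}
"""


def resolve_uris(playlist_text, imported=None):
    """Apply EXT-X-DEFINE variables to a playlist's URI lines.

    `imported` holds the master playlist's variables, which a media playlist
    can pull in with IMPORT. Returns (variables, resolved_uris).
    """
    imported = imported or {}
    variables, uris = {}, []
    for line in (raw.strip() for raw in playlist_text.splitlines()):
        if line.startswith("#EXT-X-DEFINE:"):
            attrs = dict(DEFINE_ATTR.findall(line))
            if "IMPORT" in attrs:
                name = attrs["IMPORT"]
                variables[name] = imported[name]  # KeyError if the master lacks it
            else:
                variables[attrs["NAME"]] = attrs["VALUE"]
        elif line and not line.startswith("#"):
            # An undefined reference raises KeyError instead of passing through.
            uris.append(VARIABLE_REF.sub(lambda m: variables[m.group(1)], line))
    return variables, uris


master_vars, master_uris = resolve_uris(MASTER)
_, media_uris = resolve_uris(MEDIA, imported=master_vars)
print(master_uris)
print(media_uris)
```

Because the media playlist only says `IMPORT="auth"`, it can sit unchanged on a CDN while each dynamically generated master playlist supplies a different token.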

Again, media playlists can define variables just like master playlists do. In this case, I've defined one for a big long path that I don't want to type a whole bunch of times. The second define is a little more interesting. Here we're importing the auth variable that we defined in the master playlist and applying it in different places, such as this URL in the media playlist. This gives you a loose coupling between your master playlist and your media playlists, and I think people are going to find a lot of interesting uses for it.

Next. Okay, so we talked about some back-end features. Let's talk about something you can use to provide a compelling user experience. What do I mean? Well, for instance, imagine your users are watching a match. What if they could watch one camera on someone crossing the ball while also watching the goalkeeper camera, so they can see his point of view as well? Or what if you're watching a race and you want to see the camera in Hamilton's car, but you also want to keep an eye on Vettel behind him? What unites these kinds of features is the ability to play multiple live streams that are synchronized with each other, so that one doesn't get ahead of another. This is your future.

In order to do that, all we require is two or more live streams that are synchronized using the EXT-X-PROGRAM-DATE-TIME tag. That basically means you put dates in your playlists, and the dates in all the playlists are derived from a common clock. Once you've done that, you can create multiple independent AVPlayers. Start the first one playing, and then start the second one playing in sync using the AVPlayer setRate:time:atHostTime: method. Now, I should be up front and say that using this method gets you some serious AVFoundation street cred, because it's not the simplest API in the world.
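Playing streams in sync comes down to mapping one wall-clock instant onto each stream's own timeline via `EXT-X-PROGRAM-DATE-TIME`. The Python sketch below does that mapping for two illustrative playlists whose dates come from a shared clock. The resulting per-stream offsets are the kind of item times you would hand to each player's `setRate(_:time:atHostTime:)`, together with a common host time. The helpers are hypothetical. The sketch assumes every segment has a known date and `EXTINF` duration, and it treats each stream's first listed segment as time zero. That is a simplification of how AVFoundation actually represents item time.

```python
from datetime import datetime, timedelta

# Illustrative playlists from two cameras whose dates come from a shared clock.
LEFT = """#EXTM3U
#EXT-X-TARGETDURATION:6
#EXT-X-PROGRAM-DATE-TIME:2017-06-07T17:00:00.000Z
#EXTINF:6.0,
left/0.ts
#EXTINF:6.0,
left/1.ts
"""

RIGHT = """#EXTM3U
#EXT-X-TARGETDURATION:6
#EXT-X-PROGRAM-DATE-TIME:2017-06-07T17:00:02.000Z
#EXTINF:6.0,
right/0.ts
#EXTINF:6.0,
right/1.ts
"""


def parse_date(value):
    # datetime.fromisoformat() only accepts a trailing "Z" from Python 3.11 on.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def dated_segments(playlist_text):
    """Return [(wall_clock_start, offset_seconds, duration)] for each segment."""
    segments, offset, date, duration = [], 0.0, None, None
    for line in (raw.strip() for raw in playlist_text.splitlines()):
        if line.startswith("#EXT-X-PROGRAM-DATE-TIME:"):
            date = parse_date(line.split(":", 1)[1])
        elif line.startswith("#EXTINF:"):
            duration = float(line.split(":", 1)[1].split(",")[0])
        elif line and not line.startswith("#"):
            if date is None or duration is None:
                raise ValueError("sketch needs a date and EXTINF for every segment")
            segments.append((date, offset, duration))
            offset += duration
            date += timedelta(seconds=duration)  # later segments follow on
            duration = None
    return segments


def item_time_at(playlist_text, instant):
    """Seconds into this playlist's timeline at wall-clock `instant`, or None."""
    for start, offset, duration in dated_segments(playlist_text):
        elapsed = (instant - start).total_seconds()
        if 0 <= elapsed < duration:
            return offset + elapsed
    return None


def earliest_common_instant(playlists):
    """The latest first-segment date: the first moment every stream has media."""
    return max(dated_segments(p)[0][0] for p in playlists)


start = earliest_common_instant([LEFT, RIGHT])
print(start.isoformat(), item_time_at(LEFT, start), item_time_at(RIGHT, start))
```

The right camera starts two seconds later by the shared clock, so at the common instant the left stream is 2 seconds into its timeline and the right stream is at 0; that 2-second difference holds at every later instant too.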

To help you out with that, we've actually got some sample code for you this year. It's an Apple TV app called SyncStartTV, and I thought rather than just talk about it, maybe I should show it. You guys want to see it? Yeah. All right. Let's do it.

So I'm going to switch us over to the Apple TV here, if I remember which device it is. I think it's this one. To demonstrate a synchronized live stream, I first had to build a live streaming setup, so I'll talk a little about what's going on up here. I wrote a little app that takes the feed from the back camera, chops it up, and serves it from the phone as an HLS stream. I've got two of these here, two phones, both focused on the same thing: my left and my right. They're connected over the network and share a pretty precise clock between them, so they are off there doing their thing. I couldn't get the rights to anything really exciting, so we're going to do this instead. So let's see. Something going on here. Let's start one of the cameras. In fact, let's get them both going here. So you've got you.

Right is online.

And left is online. Okay. So here we go.

Let me get my remote oriented correctly. Okay. So, SyncStartTV. When you launch SyncStartTV, it gives you the opportunity to select either the left or the right video. So let's select this one. It pops up a Bonjour picker, and you can see that both of my streams show up here in Bonjour. Let's start with stage right and see what's going on over there. Nothing much going on over there. Let's start this one going. All right. Now select the left video.

And look at that.

They're in sync! Oh my goodness. Hold on a second.

All right.

Thank you. How do I get my slides back? All right. Here we go.

Well, this is fun for all ages.

Okay. So I guess I skipped right past my slide. Oh well. One of the things I wanted to mention about this kind of presentation, where you're showing multiple streams at once, is that you have to be careful. You don't want one of your streams to suck down all your network bandwidth and leave the others starved. Normally you would handle this by throttling each stream with a bandwidth cap. This year we're giving you another tool for that, and that is a resolution cap. As the name implies, it lets you say programmatically, "You know what, I'm displaying this in a little 480p window; there's no point in switching all the way up to 1080p." Say you have an app with video thumbnails or multiple streams, and you don't want to dive into the gory details of your playlist to set a bandwidth cap. Then this is a handy thing to have. You may set a maximum resolution that is actually smaller than any of the available tiers. In that case, we'll just pick the lowest one and play that.

It's really easy to use. If you've got a player item, you just set its preferredMaximumResolution property to a CGSize, and within a few seconds it should take effect, both up and down.
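The behavior described above, including the fallback when the cap is below every tier, can be modeled in a few lines. This is a toy Python model with hypothetical names and made-up tiers, not AVFoundation's variant-selection algorithm. In an app you would simply set `preferredMaximumResolution` on the `AVPlayerItem`.

```python
# Toy model of a resolution cap. Tiers are (bandwidth_bps, (width, height), uri);
# all values here are made up for illustration.
TIERS = [
    (800_000, (640, 360), "360p.m3u8"),
    (2_000_000, (854, 480), "480p.m3u8"),
    (6_000_000, (1920, 1080), "1080p.m3u8"),
]


def select_tier(tiers, max_resolution=None, bandwidth_estimate=float("inf")):
    """Pick the highest-bandwidth tier that fits both the cap and the network."""
    candidates = tiers
    if max_resolution is not None:
        max_w, max_h = max_resolution
        candidates = [t for t in tiers if t[1][0] <= max_w and t[1][1] <= max_h]
        if not candidates:
            # The cap is smaller than every tier: fall back to the lowest one.
            return min(tiers, key=lambda t: t[0])[2]
    affordable = [t for t in candidates if t[0] <= bandwidth_estimate]
    if not affordable:
        # Network too slow for every allowed tier: take the cheapest allowed one.
        return min(candidates, key=lambda t: t[0])[2]
    return max(affordable, key=lambda t: t[0])[2]


print(select_tier(TIERS))               # 1080p.m3u8 on a fast network
print(select_tier(TIERS, (854, 480)))   # 480p.m3u8 in a little 480p window
print(select_tier(TIERS, (320, 180)))   # 360p.m3u8: cap below every tier
```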

Okay. So let's talk about a few other things. Last year we introduced HLS offline support, which is the ability to download your HLS streams and play them somewhere like an airplane, where you don't have a network. When we talked about that, we mentioned that at some point in the future we would take a more active role in managing that disk space. Well, the future is now, so I'd like to introduce you to a new part of the Settings app in iOS 11. It has a section where users can see all the apps that have offline assets and how much disk space they're using. They can choose to delete those assets if they want to free up disk space.

What this means for you folks is primarily that the OS is now capable of deleting your assets while your app is not running. There are a couple of different ways that could happen. Users could decide to do it themselves, or we may ask whether it's okay to delete some of their content if we need space for, say, an OS update. So in iOS 11 we're introducing a new API, AVAssetDownloadStorageManager, that allows you to influence which assets get deleted when. The way it works is that you create a policy for each offline asset on disk and set it with the manager. Right now the download storage management policy has two properties, expiration date and priority. Today we define just two priorities: important and default. For most people, what you're going to do is mark assets the user hasn't watched yet as important, and once they've watched them, switch them back down to default. The expiration date property is there in case your asset at some point becomes ineligible for playback. For instance, a particular show may be leaving your catalog because you no longer have the rights to stream it. In that case, you can set the expiration date, and the asset will be bumped up in the deletion queue.

Using it is fairly straightforward. AVAssetDownloadStorageManager is a singleton, so you grab the shared instance. You create a new mutable policy (AVMutableAssetDownloadStorageManagementPolicy) and set its attributes. Then you tell the storage manager to use that policy for the asset at a particular file URL. You can also look up the current policy later on. So that's coming your way.

The other thing about offline is that we got feedback from some of the folks who have adopted it. Downloading more than one rendition, say your English audio and also your Spanish audio, is harder than it could be. That's particularly true if your application goes to the background and gets quit halfway through. So I'm pleased to say that in iOS 11 we're introducing a new way to batch up your offline downloads. It's called AVAggregateAssetDownloadTask, and it lets you specify an array of media selections for a given asset. When you kick off the download task, we'll download each one, give you progress as we go, and let you know when the whole thing is done. Hopefully that makes things a little easier.

Okay. The next set of things we're going to talk about today all revolve around managing content keys on your device. It's a fairly complicated topic, so I'm going to hand you over to our very own HLS key master, Anil Katti, who's going to walk you through it. Thank you very much.

Thank you, Roger.

Good evening everyone. Welcome to WWDC. So two years ago we introduced FairPlay Streaming, a content protection technology that helps protect your HLS assets.

Since this introduction we have seen phenomenal growth.

FairPlay Streaming is used to protect premium content delivered on our platforms. Today we are excited to announce a few enhancements to the FairPlay Streaming key delivery process. They will allow you to simplify your workflow, scale your FairPlay Streaming adoption, and support new content production features. But before we talk about the enhancements, I would like to start with a quick overview of FairPlay Streaming.

FairPlay Streaming specifies how to securely deliver content decryption keys.

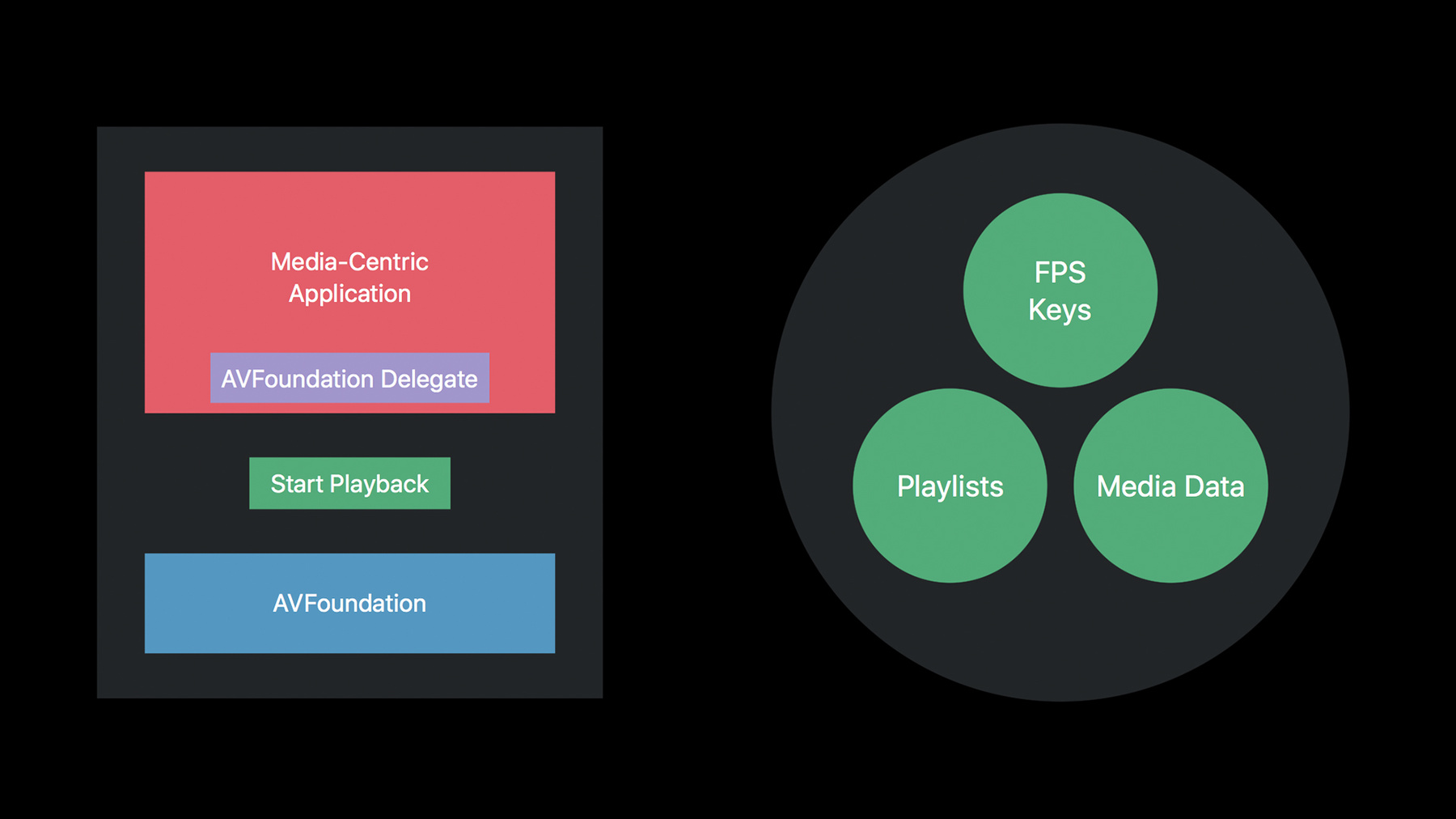

There are three main elements in the FairPlay Streaming system. Your media-centric application, the one that holds the playback session, is at the center.

You have your key server on one side that provides the decryption keys, and AVFoundation is on the other side that gives you support for decryption and playback of the content.

There are five steps involved in delivering content decryption keys. It all starts when the user is browsing through your app, trying to pick something to play. When the user does that, your app creates an asset and asks AVFoundation to play it back.

When AVFoundation receives the playback request, it starts parsing the playlist. When it sees that the content is encrypted and uses FairPlay Streaming for key delivery, it asks your app for the key by sending you a key request through a delegate callback.

At that point, you use the key request object to create what we call the server playback context, or SPC for short. The SPC is an encrypted data blob containing the information your key server requires to create content decryption keys. Your app then sends the SPC to your key server and obtains a content key context, or CKC, in return. The CKC is another encrypted data blob, and it contains the actual decryption keys. As the last step, your application provides the CKC as a response to the key request object it initially received.

So, assuming AVFoundation has already loaded the playlist and media data, it now has the FairPlay Streaming keys it requires to decrypt the content and start playback. In a sense, FairPlay Streaming keys are similar to those other resources. In fact, if you're using FairPlay Streaming for key delivery today, you'll recall that the keys are delivered with the AVAssetResourceLoader APIs, just like other custom resources. But that's pretty much where the similarity ends. FairPlay Streaming keys are specialized resources with very specific operations defined on them.

Let me give you a couple of examples. AVFoundation allows you to persist FairPlay Streaming keys, so that you can save them in your app's storage and use them later, for example when the user wants to play content offline. You can also define FairPlay Streaming keys to expire after a certain duration. In that case, the keys have to be renewed before they expire in order to continue decryption. As content production features continue to evolve, FairPlay Streaming keys will continue to get more specialized, and so will the key delivery process. Further, FairPlay Streaming keys do not have to be associated with assets at the time of loading. So we think that by decoupling key loading from media loading, or even from playback, we can give you more flexibility. You can use that flexibility to address some existing pain points and to provide a better user experience.

So with all these things in mind we are so glad to introduce a new API that will allow you to better manage and deliver content decryption keys.

Introducing AVContentKeySession.

AVContentKeySession is an AVFoundation class that was designed around content decryption keys. It allows you to decouple key loading from media loading or even playback, for that matter.

It also gives you better control over the lifecycle of content decryption keys.

If you recall, today your application loads keys only when it receives a key request from AVFoundation, but you can change that with AVContentKeySession.

With AVContentKeySession you get to decide when you would like to load keys.

However, if you choose not to load keys before requesting playback, AVFoundation still sends you a key request on demand, as it does today. So now we provide you two ways to trigger the key loading process. Your application can use AVContentKeySession to initiate key loading explicitly, or AVFoundation initiates it on demand when it sees that the content is encrypted.

So let's see how using AVContentKeySession to initiate the key loading process could be helpful.

The first use case I have for you today is around playback startup.

Key loading time can be a significant portion of your playback startup time, because applications normally load keys when they receive an on-demand key request. You can improve the playback startup experience for your users if you load keys even before the user has picked something to play.

Well, AVContentKeySession allows you to do that. So you could use AVContentKeySession to initiate a key loading process and then use the key request that you get to load the keys independent of the playback session.

We call this key preloading. After loading the keys, you can request playback; during playback you don't have to load any keys, and decryption can start immediately.

The second use case I have for you today is gaining more prominence day by day, and it is around live playback. We have seen an explosion in the amount of live content delivered on our platforms, thanks to the more immersive and integrated experience users get on our devices.

With more users looking to consume sports and other live events on our devices, developers are using more advanced content protection features like key rotation and key renewal to add an extra layer of protection while delivering premium live content. Due to the nature of live streaming, your key servers get bombarded with millions of key requests all at once when the keys are rotated or renewed.

Well, you could use AVContentKeySession to alleviate the situation by load balancing key requests at the point of origin.

Let me explain how you could do this with a simple illustration here.

Consider the scenario in which millions of users are watching a popular live stream like Apple's Keynote.

It's possible that they all started at different points in time, but when it's time to renew or update the key, they all send requests to your key server at the exact same time. That puts a huge load on the key server for a short duration, and then things get back to normal until it's time to renew or update the key again, right? This pattern continues, leading to uneven load on your key server.

You can use AVContentKeySession to spread out key requests by picking a random point within a small time window before the key actually expires and initiating the key loading yourself.
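The spreading idea can be sketched in a few lines of Swift. This is a minimal, hypothetical helper, not part of AVFoundation: it picks a uniformly random moment inside a window that ends at the key's expiry, so clients that would otherwise renew at the same instant fan out across the window. The window length and the `SplitMix64` generator (used only so results are reproducible) are assumptions; at the chosen time the app would start key loading itself, for example with `processContentKeyRequest(withIdentifier:initializationData:options:)`.

```swift
import Foundation

/// A small deterministic generator (SplitMix64) so results can be reproduced in tests.
struct SplitMix64: RandomNumberGenerator {
    private var state: UInt64
    init(seed: UInt64) { state = seed }
    mutating func next() -> UInt64 {
        state &+= 0x9E37_79B9_7F4A_7C15
        var z = state
        z = (z ^ (z >> 30)) &* 0xBF58_476D_1CE4_E5B9
        z = (z ^ (z >> 27)) &* 0x94D0_49BB_1331_11EB
        return z ^ (z >> 31)
    }
}

/// Hypothetical helper: returns a random renewal time within `window` seconds
/// before `expiry`, so renewals from many clients are spread across the window
/// instead of all reaching the key server at the moment the key expires.
func scheduledRenewalTime<G: RandomNumberGenerator>(
    expiry: Date,
    window: TimeInterval,
    using generator: inout G
) -> Date {
    precondition(window > 0, "window must be positive")
    let offset = Double.random(in: 0..<window, using: &generator)
    return expiry.addingTimeInterval(-window + offset)
}
```

With a uniform draw over, say, a 300-second window, a burst of N simultaneous renewals becomes on average about N/300 requests per second for those five minutes.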

What this allows you to do is scale your live offering without having to over-provision your key server.

So now that we have seen some use cases where initiating the key loading process with AVContentKeySession is helpful, let's see how to do it in code. Initiating a key loading process takes three lines of code; it's that simple, and here it is.

The first thing you do is create an instance of AVContentKeySession for FairPlay Streaming. You then set up your app as the AVContentKeySession delegate.

You should expect to receive all delegate callbacks on the delegate queue that you specify here. The third step is to invoke the processContentKeyRequest method, which initiates the key loading process.

I have to note a few things here.

First, no changes are required to your keys or your key server module implementation in order to use this feature.

All of the implementation here is on the client side, which is great.

Second, the identifier that you specify here should match the identifier that you specify in the EXT-X-KEY tag in your media playlist. That allows us to match the keys that you loaded here with the keys that are requested during playback. And third, you should have an out-of-band process to obtain the keys for a particular asset from your key server, so that you can load all of these keys at this point. When you invoke the processContentKeyRequest method on AVContentKeySession, we send you an AVContentKeyRequest object through a delegate callback, and this is the exact same delegate method that gets called when AVFoundation initiates the key loading process.
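To make the identifier matching concrete, here is a sketch that pulls FairPlay key URIs out of a media playlist and preloads them. The playlist parsing is plain Swift and handles only the attribute-list syntax it needs; `parseAttributeList(_:)`, `keyIdentifiers(inMediaPlaylist:keyFormat:)`, `makeFairPlaySession(delegate:)`, and `preloadKeys(_:on:)` are hypothetical names, and the queue label is a placeholder. In practice the talk suggests getting an asset's keys from your key server out of band rather than reading the playlist early. The AVFoundation part mirrors the three steps above (create the session for `.fairPlayStreaming`, `setDelegate(_:queue:)`, then `processContentKeyRequest(withIdentifier:initializationData:options:)`) and only compiles where AVFoundation is available.

```swift
import Foundation
#if canImport(AVFoundation)
import AVFoundation
#endif

/// Splits an HLS attribute list (NAME=VALUE,NAME="quoted,value") into a dictionary.
/// Commas inside quoted strings do not end an attribute; surrounding quotes are removed.
func parseAttributeList(_ list: Substring) -> [String: String] {
    var attributes: [String: String] = [:]
    var current = ""
    var inQuotes = false
    func flush() {
        if let eq = current.firstIndex(of: "=") {
            var value = String(current[current.index(after: eq)...])
            if value.count >= 2, value.hasPrefix("\""), value.hasSuffix("\"") {
                value = String(value.dropFirst().dropLast())
            }
            attributes[String(current[..<eq])] = value
        }
        current = ""
    }
    for ch in list {
        if ch == "\"" { inQuotes.toggle() }
        if ch == ",", !inQuotes { flush() } else { current.append(ch) }
    }
    flush()
    return attributes
}

/// Unique URIs of EXT-X-KEY tags using `keyFormat`, in playlist order.
/// KEYFORMAT defaults to "identity" when the attribute is absent.
func keyIdentifiers(inMediaPlaylist playlist: String,
                    keyFormat: String = "com.apple.streamingkeydelivery") -> [String] {
    let tag = "#EXT-X-KEY:"
    var seen = Set<String>()
    var result: [String] = []
    for line in playlist.split(whereSeparator: \.isNewline) where line.hasPrefix(tag) {
        let attributes = parseAttributeList(line.dropFirst(tag.count))
        guard attributes["METHOD"] != "NONE",
              (attributes["KEYFORMAT"] ?? "identity") == keyFormat,
              let uri = attributes["URI"],
              seen.insert(uri).inserted else { continue }
        result.append(uri)
    }
    return result
}

#if canImport(AVFoundation)
/// Steps one and two: a FairPlay Streaming session with its delegate queue.
func makeFairPlaySession(delegate: AVContentKeySessionDelegate) -> AVContentKeySession {
    let session = AVContentKeySession(keySystem: .fairPlayStreaming)
    session.setDelegate(delegate, queue: DispatchQueue(label: "com.example.content-keys"))
    return session
}

/// Step three, once per key. Each identifier must equal the EXT-X-KEY URI
/// so AVFoundation can pair the preloaded key with the request made during playback.
func preloadKeys(_ identifiers: [String], on session: AVContentKeySession) {
    for identifier in identifiers {
        session.processContentKeyRequest(withIdentifier: identifier,
                                         initializationData: nil, options: nil)
    }
}
#endif
```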

Now you can use the key request object to do all the FairPlay Streaming-specific operations. For example, you can request an SPC, very similar to the way you request an SPC with AVAssetResourceLoadingRequest.

You then send the SPC to your key server and obtain the CKC, and as the last step you create a response object with the CKC and set it as the response on the AVContentKeyRequest object. You have to keep a couple of things in mind while responding to a key request.
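The SPC-to-CKC round trip can be sketched independently of AVFoundation so the control flow is easy to test. `FairPlayKeyRequest`, `KeyServer`, `KeyLoadingError`, and `handle(_:appCertificate:contentID:server:)` are hypothetical names; how the SPC travels to your key server and where the application certificate comes from are app-specific. Where AVFoundation is available, the extension maps the protocol onto `AVContentKeyRequest`: `makeStreamingContentKeyRequestData(forApp:contentIdentifier:options:completionHandler:)` produces the SPC, and `processContentKeyResponse(_:)` with `AVContentKeyResponse(fairPlayStreamingKeyResponseData:)` delivers the CKC.

```swift
import Foundation
#if canImport(AVFoundation)
import AVFoundation
#endif

/// The FairPlay operations this flow needs from a key request (hypothetical protocol).
protocol FairPlayKeyRequest: AnyObject {
    func makeSPC(appCertificate: Data, contentID: Data,
                 completion: @escaping (Data?, Error?) -> Void)
    func respond(withCKC ckc: Data)
    func fail(with error: Error)
}

/// Hypothetical client for your key server: exchanges an SPC for a CKC.
protocol KeyServer {
    func ckc(forSPC spc: Data, completion: @escaping (Result<Data, Error>) -> Void)
}

enum KeyLoadingError: Error { case missingSPC }

/// Requests an SPC, sends it to the key server, and answers the request with
/// the returned CKC, or reports any failure back on the same request.
func handle(_ request: FairPlayKeyRequest, appCertificate: Data,
            contentID: Data, server: KeyServer) {
    request.makeSPC(appCertificate: appCertificate, contentID: contentID) { spc, error in
        guard let spc = spc else {
            request.fail(with: error ?? KeyLoadingError.missingSPC)
            return
        }
        server.ckc(forSPC: spc) { result in
            switch result {
            case .success(let ckc): request.respond(withCKC: ckc)
            case .failure(let error): request.fail(with: error)
            }
        }
    }
}

#if canImport(AVFoundation)
extension AVContentKeyRequest: FairPlayKeyRequest {
    func makeSPC(appCertificate: Data, contentID: Data,
                 completion: @escaping (Data?, Error?) -> Void) {
        makeStreamingContentKeyRequestData(forApp: appCertificate,
                                           contentIdentifier: contentID,
                                           options: nil) { spc, error in completion(spc, error) }
    }
    func respond(withCKC ckc: Data) {
        processContentKeyResponse(AVContentKeyResponse(fairPlayStreamingKeyResponseData: ckc))
    }
    func fail(with error: Error) {
        processContentKeyResponseError(error)
    }
}
#endif
```

Keeping the flow behind a small protocol is a design choice for testability; an app could just as well call the AVFoundation methods directly inside its `contentKeySession(_:didProvide:)` delegate method.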

As soon as you set the CKC as the response to the key request, you consume a secure decrypt slot on the device, and there are a limited number of those.

So it's okay to initiate the key loading process for any number of keys, and you can obtain the CKCs for all of those key requests as well, but be careful with the last step.

You should set CKCs on only those keys that you predict might be used during playback, and do it just before you request a playback.
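The decrypt-slot advice amounts to separating two steps: fetching CKCs early, which is fine for many keys, and applying them, which should be limited to keys the next playback will use. The sketch below keeps fetched CKCs in a hypothetical in-memory cache and, right before playback, picks only the ones the chosen asset references, capped by a `slotBudget`. The talk does not give the number of slots, so the budget is an assumption you would set; each selected CKC would then be applied with `processContentKeyResponse(_:)` on the key request that produced its SPC.

```swift
import Foundation

/// Hypothetical cache of CKCs fetched ahead of playback. Fetching a CKC does not
/// use a decrypt slot; applying it to its key request does. (A real app would also
/// keep the AVContentKeyRequest that produced each SPC, because the CKC is
/// applied to that same request.)
struct PreloadedKeyCache {
    private var ckcs: [String: Data] = [:]

    init() {}

    mutating func store(_ ckc: Data, for identifier: String) {
        ckcs[identifier] = ckc
    }

    /// CKCs to apply just before playback: only keys the asset references,
    /// in the asset's order, and never more than `slotBudget` of them.
    func ckcsToApply(forAssetKeys assetKeys: [String],
                     slotBudget: Int) -> [(identifier: String, ckc: Data)] {
        let available = assetKeys.compactMap { id in
            ckcs[id].map { (identifier: id, ckc: $0) }
        }
        return Array(available.prefix(max(0, slotBudget)))
    }
}
```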

So with that background, let's shift gears and see how we could use AVContentKeySession in the context of offline HLS playback.

We introduced persistent keys last year when we modified FairPlay Streaming to protect your offline HLS assets. AVContentKeySession could be used to create persistent keys as well. Before requesting the download of HLS assets, you could use AVContentKeySession to initiate key loading process and use the key request object to create persistent keys.

Then you could store the persistent keys in your app storage for future use.

This makes your workflow a little simpler, because now you don't have to define EXT-X-SESSION-KEY tags in your master playlist; you can just use AVContentKeySession.

Further, while creating and using persistent keys, you should work with a subclass of AVContentKeyRequest, AVPersistableContentKeyRequest.

Let me explain in code how to request an AVPersistableContentKeyRequest and how to work with it. If you recall, this is the delegate method that gets called when you initiate a key loading process. At this point, if you're trying to create a persistent key, you simply respond to the key request by requesting a persistable content key request, and we send you an AVPersistableContentKeyRequest object through a new delegate callback. You can use the AVPersistableContentKeyRequest to do all your FairPlay Streaming-specific operations: create an SPC, send the SPC to your key server, get a CKC, and use the CKC to create a persistent key, which you can store in your app storage and use later when the user is offline.

When it's time to use the persistent key, all you have to do is create a response object with the persistent key data blob and set it as the response on the AVContentKeyRequest object.
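From the app's point of view a persistent key is just a data blob, so storing it can be sketched with plain Foundation file I/O. `PersistentKeyStore`, its hex-encoded file names, and the `.fpskey` extension are hypothetical choices. The AVFoundation calls named in the comment are the ones this workflow relies on: `persistableContentKey(fromKeyVendorResponse:options:)` on `AVPersistableContentKeyRequest` turns a CKC into a persistent key, and `AVContentKeyResponse(fairPlayStreamingKeyResponseData:)` answers a later request with the stored blob.

```swift
import Foundation

/// Hypothetical on-disk store for FairPlay persistent keys, one file per key.
/// Typical flow with AVFoundation (not compiled here):
///   create: let key = try persistableRequest.persistableContentKey(fromKeyVendorResponse: ckc, options: nil)
///   reuse:  keyRequest.processContentKeyResponse(
///               AVContentKeyResponse(fairPlayStreamingKeyResponseData: storedKey))
struct PersistentKeyStore {
    let directory: URL

    /// Identifiers such as "skd://key65" are not valid file names, so hex-encode them.
    private func fileURL(for identifier: String) -> URL {
        let name = identifier.utf8.map { byte -> String in
            let hex = String(byte, radix: 16)
            return byte < 16 ? "0" + hex : hex
        }.joined()
        return directory.appendingPathComponent(name).appendingPathExtension("fpskey")
    }

    func save(_ key: Data, for identifier: String) throws {
        try key.write(to: fileURL(for: identifier), options: .atomic)
    }

    func load(for identifier: String) -> Data? {
        try? Data(contentsOf: fileURL(for: identifier))
    }

    func remove(for identifier: String) throws {
        let url = fileURL(for: identifier)
        if FileManager.default.fileExists(atPath: url.path) {
            try FileManager.default.removeItem(at: url)
        }
    }
}
```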

That's it.

So if you're using FairPlay Streaming for key delivery today, you will have noticed that AVContentKeySession is designed to work similarly to the API you already use, AVAssetResourceLoader. In place of AVAssetResourceLoadingRequest, we have AVContentKeyRequest.

And the counterpart of the AVAssetResourceLoader delegate is the AVContentKeySession delegate.

However, there is a key difference. Unlike AVAssetResourceLoader, AVContentKeySession is not tied to an asset at the time of creation, so you can create an AVContentKeySession at any point and use it to load all the keys, and just before you request playback you add your AVAsset as a content key recipient.

That will allow your AVAsset to access all the keys that you preloaded with the ContentKeySession object.

So now we have two APIs, AVContentKeySession and AVAssetResourceLoader, and you might be wondering which API to use for loading different types of resources. Here's what we recommend.

Use AVContentKeySession for loading content decryption keys, and use AVAssetResourceLoader for loading everything else.

I have to point out that we are not deprecating the key handling aspects of AVAssetResourceLoader at this point, so you can continue using AVAssetResourceLoader like you do today for loading FairPlay Streaming keys, but we highly recommend that you switch over to AVContentKeySession for that purpose. So who is responsible for loading decryption keys if an asset has both an AVAssetResourceLoader delegate and an AVContentKeySession delegate associated with it? Well, to be consistent, we enforce that all content decryption keys are loaded exclusively with the AVContentKeySession delegate.

Your AVAssetResourceLoader delegate still receives all resource loading requests, including those for content decryption keys.

The app is expected to simply defer all content decryption key loading by calling finishLoading on the loading request, so that AVFoundation can re-route the request to the AVContentKeySession delegate. Let me show you how to do that in code. It's really simple.

Here's the delegate method that gets called when AVFoundation is trying to load a resource. When you see that the resource is for a content decryption key, you just set the content type to indicate that it's a content key and call finishLoading. At that point, we send a new content key loading request to the AVContentKeySession delegate. If it's any other resource, you can just continue loading the resource here. So I hope this whirlwind tour of the new API gave you some context around what you can accomplish with AVContentKeySession.
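Here is how that deferral could look. The sketch assumes content decryption key requests are recognizable by the `skd` URL scheme used for FairPlay Streaming key URIs; `KeyRoute`, `route(for:)`, and `ResourceLoaderDelegate` are hypothetical names, and loading of other custom resources is left as an app-specific stub. The delegate part sets the content type to `AVStreamingKeyDeliveryContentKeyType` and calls `finishLoading()`, as described above, and only compiles where AVFoundation is available.

```swift
import Foundation
#if canImport(AVFoundation)
import AVFoundation
#endif

/// Which API should answer a resource request (hypothetical helper).
enum KeyRoute { case contentKeySession, resourceLoader }

/// Assumption: FairPlay key requests use the "skd" scheme; everything else
/// (custom playlist schemes, and so on) stays with the resource loader.
func route(for url: URL) -> KeyRoute {
    url.scheme?.lowercased() == "skd" ? .contentKeySession : .resourceLoader
}

#if canImport(AVFoundation)
final class ResourceLoaderDelegate: NSObject, AVAssetResourceLoaderDelegate {
    func resourceLoader(_ resourceLoader: AVAssetResourceLoader,
                        shouldWaitForLoadingOfRequestedResource loadingRequest: AVAssetResourceLoadingRequest) -> Bool {
        guard let url = loadingRequest.request.url else { return false }
        switch route(for: url) {
        case .contentKeySession:
            // Mark the resource as a content key and finish; AVFoundation then
            // sends a new key request to the AVContentKeySession delegate.
            loadingRequest.contentInformationRequest?.contentType = AVStreamingKeyDeliveryContentKeyType
            loadingRequest.finishLoading()
            return true
        case .resourceLoader:
            // App-specific loading of other custom resources would go here.
            return false
        }
    }
}
#endif
```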

Before I wrap up the talk I have one more exciting feature to share with you, and this is available through AVContentKeySession.

We are providing dual expiry window support for FairPlay Streaming persistent keys. Now, what is this dual expiry window thing? If you've ever rented a movie on iTunes, you'll know that once you rent a movie you have 30 days to watch it, and once you start watching the movie you have 24 hours to finish it.

We call this dual expiry window model for rentals.

So what this feature allows you to do is define and customize two expiry windows for FairPlay Streaming persistent keys.

That allows you to support features like rentals without much engineering effort on the server side, and the best thing is that it works for both offline and online playback.

In order to use this feature, you first opt in by sending a suitable descriptor in the CKC, which allows you to specify two expiry windows.

The first one is called a storage expiry window which starts as soon as the persistent key is created, and then we have a playback expiry window which starts when the persistent key is used to start the playback.

To explain this feature better, let me take you through a timeline of events in the context of offline playback.

When the user rents a movie to play offline you would create a persistent key with a CKC that opts in to use this feature.

This persistent key is set to expire at the end of the storage expiry window, which is 30 days in our example. You would typically store this persistent key in your app's storage and use it to answer a key request later on.

Now when the user comes back within these 30 days and asks you to play the content, you will get a key request, and you use this persistent key to answer it.

At that point, we send you an updated persistent key, which is set to expire at the end of the playback expiry window, 24 hours in our example.

Along with that, we also explicitly expire the original persistent key that you created, so you're expected to save the updated persistent key in your app storage and use it to answer future key loading requests, that is, when the user stops and resumes playback within the next 24 hours.
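The timeline can be modeled in a few lines to make the two windows concrete. This is a hypothetical client-side model only: in the real feature the key server opts in through the CKC and AVFoundation enforces expiry, and the updated persistent key arrives through an AVContentKeySession delegate callback (`contentKeySession(_:didUpdatePersistableContentKey:forContentKeyIdentifier:)`), which the app would save in place of the original. The sketch assumes, as in the rental example, that once playback starts the playback window replaces the storage window.

```swift
import Foundation

/// Hypothetical description of the two windows a key server might choose.
struct DualExpiryPolicy {
    var storageWindow: TimeInterval   // starts when the persistent key is created
    var playbackWindow: TimeInterval  // starts when the key is first used for playback
}

/// Client-side model of one rental's persistent key.
struct RentalKeyState {
    let policy: DualExpiryPolicy
    let createdAt: Date
    private(set) var firstPlaybackAt: Date?

    init(policy: DualExpiryPolicy, createdAt: Date) {
        self.policy = policy
        self.createdAt = createdAt
    }

    /// Before playback: end of the storage window. After: end of the playback window.
    var expiry: Date {
        if let start = firstPlaybackAt {
            return start.addingTimeInterval(policy.playbackWindow)
        }
        return createdAt.addingTimeInterval(policy.storageWindow)
    }

    func isUsable(at now: Date) -> Bool { now < expiry }

    /// Starts or resumes playback. The first start switches to the playback window;
    /// later resumes within that window keep the original deadline.
    @discardableResult
    mutating func startPlayback(at now: Date) -> Bool {
        guard isUsable(at: now) else { return false }
        if firstPlaybackAt == nil { firstPlaybackAt = now }
        return true
    }
}
```

With a 30-day storage window and a 24-hour playback window, starting on day 10 moves the deadline to day 11, and resuming on day 10.5 leaves it there.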

In terms of code, this is very similar to the persistent key workflow that we saw a few slides ago; however, we send you the updated persistent key through a new AVContentKeySession delegate callback. So those were all the enhancements to the FairPlay Streaming key delivery process this year.

In conclusion, we introduced two new CODECS this year, HEVC and IMSC1. We added the EXT-X-GAP tag, which allows you to indicate gaps in your live streams.

And now you can define and use PHP-style variables in your HLS streams. You can now synchronize two or more HLS streams, and we provided new APIs that give you better control over offline HLS assets. There is also a new API, AVContentKeySession, that allows you to better manage and deliver content decryption keys.

And finally, we added dual expiry window support for FairPlay Streaming persistent keys, which allows you to support a rental model with your offline HLS assets.

It was really exciting for all of us to work on these new features, and we look forward to your adopting them.

Thanks a lot for attending this session. You can get more information by visiting our session page on the developer site.

You can also download all the sample code for different things that we covered during this talk.

We have a bunch of related sessions for you. I strongly recommend watching Error Handling Best Practices, and Authoring Update for HLS.

These are available as on-demand videos in your WWDC app, and if you missed any of the other live sessions, you can always go to the WWDC app to watch them offline or on demand.

Thank you so much. Have a great rest of the week. Good night.
