Discover the Journaling Suggestions API

Find out how the new Journaling Suggestions API can help people reflect on the small moments and big events in their lives through your app, all while protecting their privacy. Learn how to leverage the API to retrieve assets and metadata for journaling suggestions, invoke a picker on top of the app, let people save suggested content, and more.

Hello, my name is René, and I am part of the Sensing and Connectivity team. I am going to take you through the new Journaling Suggestions API that was announced with iOS 17.

In iOS 17.2, Apple is introducing the Journal app to help people reflect on their lives. The practice of keeping a journal has a rich history, and taking a moment to reflect and write has even been shown to improve wellbeing and mental health. But sometimes, it can be difficult to know where to start. So to help someone get started with their writing, iOS can sense meaningful events in their life and propose a starting point for their journal entries. We call them Journaling Suggestions, and they are made of photos someone took, workouts, places they went, and more.

Journaling Suggestions is a Private Access picker, so it is available to your application as well! Journaling Suggestions runs in a separate process from your app, but is rendered on top of it. Only what a person actually selects is passed back to your app. And of course, they can review what iOS generated for them before choosing a suggestion and sending it to your application. This lets your app take advantage of suggestions from many different categories of data, like location and photos, without having to prompt for access to each category. So if you’re developing a journaling or wellbeing app that helps someone reflect on their life, the Journaling Suggestions API is for you.

Now I am going to show you how to use it. I will start with more details about what the Journaling Suggestions API is about, and how user privacy is at the core of it. Then, I will go through how to invoke the picker with a very simple code example.

Next, I will demonstrate how to retrieve the details from the suggestions. Then, I will explain the conveniences built into this API, and how you can integrate suggestion content into your app very quickly. And finally, I’ll walk through the experience a person will come across when they use Journaling Suggestions for the first time, and how they can configure the content of their sheet.

But first, let’s walk through what the Journaling Suggestions API is about, how user privacy comes into the picture, and introduce a few definitions and concepts.

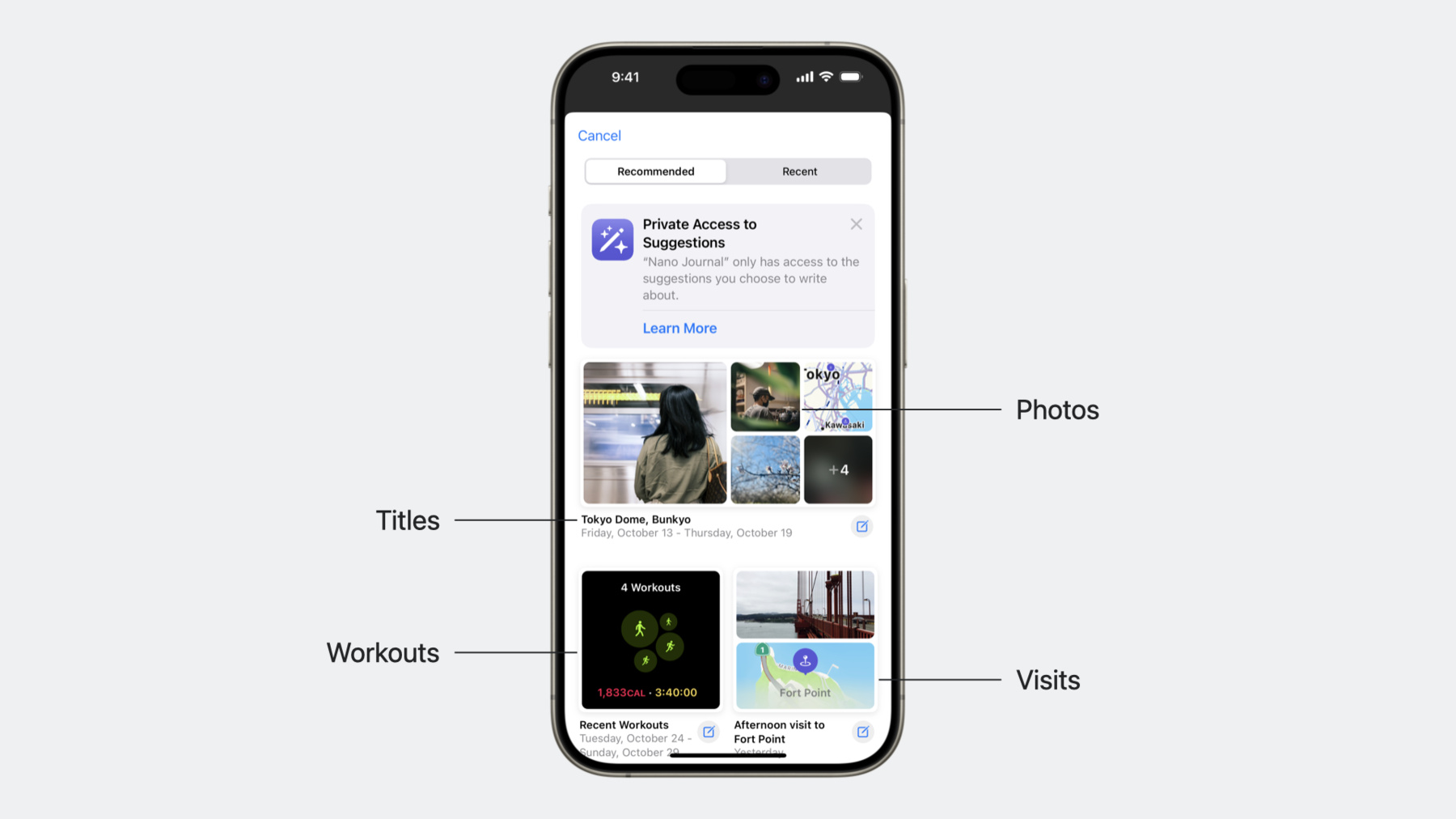

When the picker is shown on the screen, the first thing you will see is a list of suggestions. Each suggestion is made of a title and a number of assets, such as photos, workouts, visits, and more. Each suggestion can have up to 13 content assets. The list is organized under two tabs, one called “Recommended” and the other called “Recent”.

Under “Recommended”, the picker orders suggestions to show the most meaningful ones at the top. Our ranking algorithm uses a combination of advanced machine learning techniques to find the right balance of diversity, recency, and a match with what a person is likely to engage with most. This tab also has special suggestions, including highlights from photo memories, as well as suggestions that span multiple days, such as weekly summaries and multi-day trips.

Under the “Recent” tab, suggestions are organized chronologically. This allows a person to easily navigate back in time.

When someone taps on a suggestion, they get to an interstitial screen where they can review all the details, as well as all the attached asset content.

The Carousel view allows them to review assets at a larger size. This is important, because they are about to share their data with your app! And the List view allows them to review all the metadata associated with each asset. Each view allows them to select or unselect any asset and really curate what is going to be sent. They can even edit the suggestion title. Once they are fully happy with their selection, they can just tap the “Add to your application” button to deliver the content!

And remember, this is designed with privacy first in mind. Your app does not need to ask for permission, because the picker runs outside of your app’s process. Also, your app will only receive the content of the suggestion the person chooses to add, nothing else. This is how iOS can propose all of this meaningful content while keeping it private!

OK, I’m sure you’re as excited as I am about this! Let’s look at how you would invoke this picker in your application. The things you need to do are: add the Journaling Suggestions capability to your app, which adds the appropriate entitlement and allows your app to use the Journaling Suggestions API; import the Journaling Suggestions framework in your SwiftUI code so that you can create the picker instance; implement your picker instance’s closures to retrieve the content that the picker sends to your app; and then, of course, show the picker on top of your app. Let’s start with adding the Journaling Suggestions capability now.

Adding the capability is a simple step you do in Xcode: select your app’s build target, then under the “Signing & Capabilities” tab, click “+ Capability” and find Journaling Suggestions. This tells iOS your app intends to show the picker.

And now, let’s look at an example of an app using the API! This simple app just has a button to invoke the picker and retrieve the suggestion title. I start by importing the framework and creating the picker instance with a label. The picker comes with an onCompletion closure that gives you all the details you need once a person chooses to share with your app.
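
The steps above can be sketched as a minimal SwiftUI view along the lines of this demo. It assumes an app target with the Journaling Suggestions capability enabled and an iOS 17.2 or later deployment target; the view name and label text are illustrative, not taken from the session.

```swift
import SwiftUI
import JournalingSuggestions

// Sketch of the first demo: the picker appears as a button, and the title
// of the suggestion a person adds is shown below it.
struct SuggestionTitleView: View {
    // Title of the most recently added suggestion; nil until one is chosen.
    @State var suggestionTitle: String? = nil

    var body: some View {
        VStack {
            // The label becomes the button that presents the picker,
            // which is rendered out of process on top of the app.
            JournalingSuggestionsPicker {
                Text("Select a Journaling Suggestion")
            } onCompletion: { suggestion in
                // Runs after the person adds a suggestion; only the
                // content they chose is delivered here.
                suggestionTitle = suggestion.title
            }

            Text(suggestionTitle ?? "No suggestion added yet")
        }
    }
}
```

In this sketch the picker view itself acts as the button, so no separate sheet-presentation state is needed.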

Here I am just keeping the title, so I can show it below my button. And here is a demo of the result: by tapping on the button, I get the picker on top of my app.

I can browse through and pick one suggestion. The content looks fine, so let me share this with my app.

The picker dismisses, and now the suggestion title is showing in the app! Easy!

OK. At this point, you have learned how to configure Xcode, how to import the API, and how to build a simple demo that receives the title of the suggestion. But there’s a lot more to get from that closure. There are in fact 9 different types of assets you could receive for a single suggestion. Let’s review them before you learn how to build a new example that uses photo assets.

Workout, which can be represented in two forms, depending on whether a route is attached. In both cases, though, it provides additional information such as duration, calories burned, and average heart rate, when available.

Contact, which comes with a contact profile photo, or a generated image with the initials when no photo is available.

Location, indicating the places someone went, including metadata such as the place name and a pinned location.

Song, which includes playback items like song title, album, and cover art.

Podcast, which also includes podcast name, episode name, and cover art.

Photo, which includes a URL to the photo.

LivePhoto, which includes a URL to the video, as well as its preview.

Video, which includes a URL to the video.

And MotionActivity, which has step counts and an icon.

Now here is how you can retrieve those assets in the closure.

When the closure is called, you can wait for the items of a certain type to be transferred over by calling the suggestion’s content(forType:) method, specifying the type of asset you are interested in.

So, for instance, if you want to get the photos, you would call content(forType:) with the Photo type.
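
As a sketch of that call pattern, the hypothetical helper below requests three of the nine asset types from one suggestion. `SuggestionAssets` and `loadAssets(from:)` are illustrative names, not part of the framework; `content(forType:)` is awaited once per type, and each call is assumed to return an empty array when the suggestion holds no assets of that type.

```swift
import JournalingSuggestions

// Hypothetical container for a few asset types taken from one suggestion.
struct SuggestionAssets {
    var photos: [JournalingSuggestion.Photo] = []
    var songs: [JournalingSuggestion.Song] = []
    var workouts: [JournalingSuggestion.Workout] = []

    // Number of assets collected across the requested types.
    var count: Int { photos.count + songs.count + workouts.count }
}

// Hypothetical helper: awaits content(forType:) once per asset type.
// A type the suggestion does not contain is assumed to yield an empty array.
func loadAssets(from suggestion: JournalingSuggestion) async -> SuggestionAssets {
    var assets = SuggestionAssets()
    assets.photos = await suggestion.content(forType: JournalingSuggestion.Photo.self)
    assets.songs = await suggestion.content(forType: JournalingSuggestion.Song.self)
    assets.workouts = await suggestion.content(forType: JournalingSuggestion.Workout.self)
    return assets
}
```

Inside the picker’s onCompletion closure, you could then write `let assets = await loadAssets(from: suggestion)`.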

After you receive the photos, you can use them right away and populate the view with them.

In this example, the photos are inlined in a list view by going over all the items of type Photo and inserting each one as an AsyncImage, using the URL retrieved from each item.

AsyncImage might not let you save the image, but it’s convenient for view composition. Later, you will see that some assets can be transformed into other types to help speed up your integration. Now let’s review the code of this improved example.

It starts with the picker creation.

The closure block retrieves the suggestion title and the photo assets. And then the photos are inserted in the view with an AsyncImage.
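
A sketch of this improved example follows, under the same capability and deployment-target assumptions as before. Photo assets are requested with `content(forType:)`, and each asset’s `photo` URL feeds an AsyncImage; the view name, label text, and progress placeholder are illustrative.

```swift
import SwiftUI
import JournalingSuggestions

struct SuggestionPhotosView: View {
    @State var suggestionTitle: String? = nil
    @State var suggestionPhotos: [JournalingSuggestion.Photo] = []

    var body: some View {
        VStack {
            JournalingSuggestionsPicker {
                Text("Select a Journaling Suggestion")
            } onCompletion: { suggestion in
                suggestionTitle = suggestion.title
                // Wait for the photo assets to be transferred from the picker.
                suggestionPhotos = await suggestion.content(forType: JournalingSuggestion.Photo.self)
            }

            Text(suggestionTitle ?? "")

            // Each photo's URL doubles as a stable row identity.
            List(suggestionPhotos, id: \.photo) { item in
                AsyncImage(url: item.photo) { image in
                    image.resizable().scaledToFit()
                } placeholder: {
                    // Shown while the image at the URL is loading.
                    ProgressView()
                }
            }
        }
    }
}
```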

And here is a demo of the result.

Tapping on the button invokes the picker again on top of my app. I pick one suggestion and tap “Add to my App”.

The picker dismisses, and now I get the title like before, as well as the inlined photos received from the picker! And this is how you retrieve assets from the suggestion a person chooses to add.

OK, now you know how to invoke the picker, how to retrieve the suggestion, and how to retrieve the photos for this suggestion. You also know all 9 asset types, each coming with its own properties.

In the previous example, I requested all photo assets and created an AsyncImage out of each of them, but there are actually other assets that contain images. So I could request all of them as a UIImage or an Image view, for instance, and treat them the same. Let’s review the mapping now. For convenience, some assets can be retrieved as a UIImage or an Image view: Contact, Song, Podcast, Photo, and LivePhoto. If you want to save the image to disk, though, you would use the URL content. Also, in the previous example, I could use the same code to insert all of those assets directly into the list view. Let’s capture all the UIImage-compatible assets now.

For this, I can modify the code to look at all UIImage-compatible assets instead of just the Photo assets.

When inserting these into the list view, I then construct an Image from each UIImage instead of an AsyncImage, passing in the image wrapped in the item. And that’s how you retrieve assets as UIImage, independently of their specific type! I am going to demo this updated example now.
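
Here is a sketch of that change, under the same assumptions as the earlier examples. Requesting `UIImage` with `content(forType:)` gathers every image-compatible asset in one call, and each image is displayed with SwiftUI’s `Image(uiImage:)`; the view name and label text are illustrative.

```swift
import SwiftUI
import UIKit
import JournalingSuggestions

struct SuggestionImagesView: View {
    @State var suggestionTitle: String? = nil
    @State var suggestionImages: [UIImage] = []

    var body: some View {
        VStack {
            JournalingSuggestionsPicker {
                Text("Select a Journaling Suggestion")
            } onCompletion: { suggestion in
                suggestionTitle = suggestion.title
                // Asking for UIImage collects every image-compatible asset
                // (contacts, songs, podcasts, photos, live photos) at once.
                suggestionImages = await suggestion.content(forType: UIImage.self)
            }

            Text(suggestionTitle ?? "")

            List(suggestionImages, id: \.self) { image in
                // Wrap each UIImage in a SwiftUI Image for display.
                Image(uiImage: image)
                    .resizable()
                    .scaledToFit()
            }
        }
    }
}
```

Because UIImage is an NSObject subclass, it is Hashable, which is what lets the List identify each row with `\.self`.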

Note that not only were the photos shown, but the album art of the song was also converted into an image.
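
When you need to keep a copy of an image rather than just display it, the URL content is the route to take. A minimal sketch using only Foundation follows. It assumes the asset URL (for example, the `photo` property of a photo asset) points to a local file your app can read; `saveCopy(of:into:)` is a hypothetical helper, and the destination directory is your app’s choice.

```swift
import Foundation

// Hypothetical helper: copies a file delivered by the picker into a
// directory the app controls and returns the new file's URL.
func saveCopy(of source: URL, into directory: URL) throws -> URL {
    let fileManager = FileManager.default
    // Create the destination directory if it does not exist yet.
    try fileManager.createDirectory(at: directory, withIntermediateDirectories: true)

    // Prefix the original file name with a UUID so repeated saves never collide.
    let destination = directory.appendingPathComponent(UUID().uuidString + "-" + source.lastPathComponent)
    try fileManager.copyItem(at: source, to: destination)
    return destination
}
```

In the photo example, this might be called as `try saveCopy(of: item.photo, into: imagesDirectory)`, where `imagesDirectory` is a hypothetical directory inside your app’s container.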

You can check the Journaling Suggestions API documentation online to learn more!

And now, I want to remind you how a person can control the content that iOS generates when the picker is presented to them. You don’t control that, but it determines what types of content your app might receive. When the picker is invoked for the first time, either in Journal or in another app like yours, a flow starts to explain what Journaling Suggestions can do for people. You don’t have to do anything for it; this is done by iOS.

During the flow, a person can choose the different types of events or data that iOS will sense to produce suggestions for them. They are configured through five switches: Activity for workouts and exercise, Media for podcasts and music, Contacts for the people they interacted with, Photos for their library, memories, and shared photos, and Significant Locations for the places where they spent time. Those switches define what iOS will list for them in the picker.

The configuration applies to all applications, and people can change it whenever they want in the Settings app, under Privacy & Security, then Journaling Suggestions.

To wrap up, the new Journaling Suggestions API allows you to quickly build experiences that help people reflect on the small moments and important events in their lives. It allows you to present the picker on top of your app and retrieve assets and details about the suggestion that a person chooses to add to your app. And because it runs out of process, it protects privacy in a very elegant way! Thanks for watching! We’re so excited about the new experiences you are going to build with the Journaling Suggestions API!
