
Discover new Metal profiling tools for M3 and A17 Pro

Learn how the new profiling tools in Xcode 15 can help you achieve the best Metal performance on Apple family 9 GPUs. Discover how to use shader cost graphs, performance heat maps, and shader execution history tools to profile and optimize your Metal code. Find out how to use new GPU counters to optimize GPU occupancy and ray-tracing performance.


Hello, my name is Ruiwei, and I am a software engineer working on Metal developer tools. Today, with my colleague, Irfan, we are going to show you the latest Metal profiling tools.

The Apple family 9 GPUs, the M3 and A17 Pro, feature a completely new shader core architecture.

We took this opportunity to reimagine the profiling tools, and build state-of-the-art new workflows.

To learn more about this new architecture, please check out "Explore GPU advancements in M3 and A17 Pro".

In this talk, I will start by showing you the amazing new tools in Xcode 15.

Then, as occupancy management is very important for achieving the best performance, Irfan will show you how to profile occupancy with a new set of performance counters.

Lastly, you will learn how to profile ray tracing on this new architecture.

Let's start with the new profiling tools.

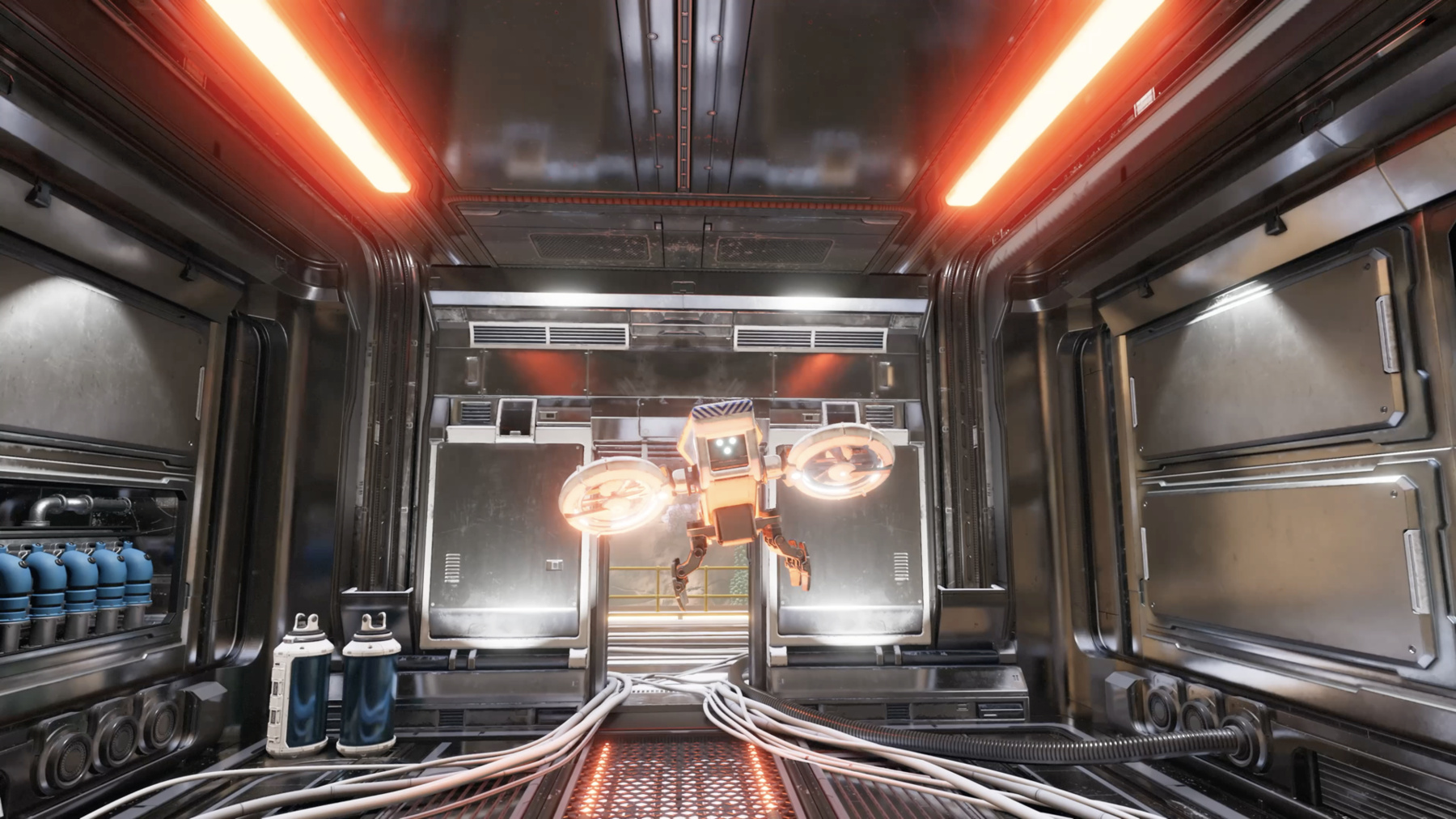

I am working on rendering a street with a modern GPU-driven pipeline. The rendered image looks beautiful, but I'm noticing some performance issues.

There are two major approaches to triaging performance bottlenecks in this kind of application. The first is to identify the most expensive shaders, and start from there to understand which functions and lines are costly.

Another approach is to start by finding the expensive objects or pixels, because a shader may behave differently depending on the fragment position or thread ID.

Thanks to the new profiling architecture in M3 and A17 Pro, Xcode 15 includes multiple new tools to simplify these tasks. Today, I'm going to use the new tools and walk you through how I found the performance issue in my workload.

First, let me introduce Shader Cost Graph. It is a new tool that can help you find and triage expensive shaders.

Here is a GPU capture of the workload I just profiled in Xcode 15. When I navigate to the performance view, the GPU timeline shows the execution and performance counters of the workload.

To view the Shader Cost Graph, I can switch to the new Shaders tab.

The performance navigator on the left shows me a list of passes and pipeline states, sorted by cost.

I can see that the GBuffer Pass is about 50% of the total cost, which is more than what I'm expecting.

To investigate, I will start by looking at the expensive pipeline states used in the GBuffer Pass.

Selecting a pipeline state in the navigator will reveal the Shader Cost Graph.

For render pipelines, the Fragment Shader is selected by default.

Shader Cost Graph is divided into two major parts. On top is a flame graph visualizing the cost of the shader functions, so the most expensive ones stand out.

Below the graph is the corresponding shader source code.

This is the first time we have a flame graph for Metal shaders, and it looks amazing. By using the flame graph, I can easily identify the most expensive functions of my Fragment Shader. Selecting a function in the graph will make the source editor jump right to where the source is.
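A flame graph is built by aggregating costs along call stacks. Each box's width is the inclusive cost of a call path: the function's own cost plus the cost of everything it calls. The sketch below shows that generic aggregation over a list of sampled call stacks. The function names (such as `applySpotlight`) and the sample costs are hypothetical, and this is not a description of how Xcode computes its Shader Cost Graph internally.

```python
from collections import defaultdict

def inclusive_costs(samples):
    """Aggregate (call_stack, cost) samples into inclusive cost per call path.

    Each call_stack is a tuple ordered from the entry point to the leaf,
    e.g. ("fragmentMain", "shadeLighting"). A path's inclusive cost is the
    sum of the costs of every sample whose stack starts with that path,
    which is the width of that box in a flame graph.
    """
    totals = defaultdict(float)
    for stack, cost in samples:
        # Credit the cost to every prefix of the stack (every ancestor frame).
        for depth in range(1, len(stack) + 1):
            totals[stack[:depth]] += cost
    return dict(totals)

# Hypothetical samples from a fragment shader (names are illustrative only).
samples = [
    (("fragmentMain",), 5.0),
    (("fragmentMain", "shadeLighting"), 10.0),
    (("fragmentMain", "shadeLighting", "applySpotlight"), 60.0),
    (("fragmentMain", "sampleAlbedo"), 25.0),
]

costs = inclusive_costs(samples)
# Print an indented tree, most expensive paths first.
for path, cost in sorted(costs.items(), key=lambda kv: -kv[1]):
    print("  " * (len(path) - 1) + path[-1], cost)
```

Here `shadeLighting` is 70% of the total even though its own cost is only 10. That is why a flame graph points you at the expensive subtree rather than only at leaf functions.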

The source code is annotated with performance annotations in the left sidebar, showing how expensive each line is.

This is a full-screen shader that applies lighting to every pixel of the image. With the performance annotations in the left sidebar, I can quickly identify the most expensive shader source lines. Hovering over the pie chart shows the performance popover.

The popover provides a detailed breakdown of the line, such as exactly how many instructions were executed by the GPU, and the cost of different instruction categories.

Because this is a full-screen shader, its behavior can vary depending on the fragment location, so the performance bottleneck may be caused by specific areas or pixels. To fully understand the issue, I need to find the pixels that are more expensive, and this is a great opportunity to use the next new tool, Performance Heat Maps.

Performance Heat Maps visualize pixel or compute thread information together with performance metrics. They are built using the fragment position or compute thread ID of the GPU threads.

Let's take a look at the different types of Performance Heat Maps of the GBuffer pass.

First is the Shader Execution Cost Heat Map. The cost is calculated by looking at the execution time and the latency hiding of GPU threads.

I can easily notice that the pixels in the right half of the image are shown in red, which means they are more expensive.

Next is the Thread Divergence Heat Map. It visualizes the amount of GPU thread divergence in the SIMD groups.

Divergence increases with control flow differences among threads, which can occur with conditional branches or inactive threads due to the shape of the geometry.
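One simple way to quantify divergence is the fraction of SIMD lanes that sit idle across the instructions a SIMD group executes. The sketch below assumes a 32-wide SIMD group and represents each executed instruction as a bitmask of active lanes. The exact metric behind Xcode's Thread Divergence Heat Map isn't described here, so treat this as an illustration of the idea rather than its formula.

```python
SIMD_WIDTH = 32  # Apple GPUs execute 32 threads per SIMD group

def divergence(active_masks, width=SIMD_WIDTH):
    """Return the fraction of lane-slots left idle, in [0, 1].

    active_masks holds one integer per executed instruction; bit i is set
    when lane i was active for that instruction.
    """
    if not active_masks:
        return 0.0
    lane_bits = (1 << width) - 1
    idle = sum(width - bin(mask & lane_bits).count("1") for mask in active_masks)
    return idle / (len(active_masks) * width)

full = (1 << 32) - 1
# Uniform control flow: every lane is active for all 4 instructions.
print(divergence([full] * 4))
# An if/else split: each branch runs with half of the lanes masked off.
half_a = 0x0000FFFF
half_b = 0xFFFF0000
print(divergence([full, half_a, half_b, full]))
```

The if/else case leaves a quarter of the lane-slots idle: 32 idle slots out of 128, because each branch masks off 16 lanes.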

Overdraw Heat Map visualizes pixels that have been rendered by more than one GPU thread.

This could be caused by overlapping geometry, with blending enabled from one or more render commands. Be sure to group your GPU commands so that opaque objects are rendered first, and then render the transparent ones to achieve the best performance on Apple GPUs.
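Overdraw is the number of fragments shaded for each pixel. The toy rasterizer below counts fragments from axis-aligned rectangles standing in for draw calls. This is a hypothetical simplification: real geometry is triangles. It assumes blending is enabled, so no fragment is culled. It reports the pixels covered more than once, which is what an overdraw heat map highlights.

```python
def overdraw_map(width, height, rects):
    """Count shaded fragments per pixel for a list of (x0, y0, x1, y1) rects.

    Rects are half-open: they cover x0 <= x < x1 and y0 <= y < y1.
    """
    counts = [[0] * width for _ in range(height)]
    for x0, y0, x1, y1 in rects:
        for y in range(max(0, y0), min(height, y1)):
            for x in range(max(0, x0), min(width, x1)):
                counts[y][x] += 1
    return counts

def overdrawn_pixels(counts):
    """Number of pixels shaded by more than one fragment."""
    return sum(1 for row in counts for c in row if c > 1)

# A full-screen background plus a blended window overlapping a 2x2 region.
counts = overdraw_map(4, 4, [(0, 0, 4, 4), (2, 2, 4, 4)])
print(overdrawn_pixels(counts))
print(max(c for row in counts for c in row))
```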

Instruction Count Heat Map shows exactly how many instructions were executed on the GPU for each pixel or SIMD group.

And lastly, Draw ID Heat Map color codes different GPU commands. In this case, I can see most of the workload is rendered by a single command. Only the transparent window is separate.

Now that you know what heat maps are and how they look, let's take a look at how to access them in Xcode 15.

To access the Performance Heat Maps, I can click the Heat Map tab on the top bar.

By default, the Shader Execution Cost Heat Map and the first attachment are shown.

Notice that the street part of the scene has a much higher execution cost. Let's add more heat maps to investigate further.

By clicking the plus button on the bottom bar, the heat map popover will show up. This allows me to quickly enable or disable different types of heat maps.

The Instruction Count Heat Map confirms that the GPU executes many more instructions for pixels of the street, which could explain the high cost.

I can move the pointer to hover over the pixels and look at the details, such as the percentile of the cost and exact number of instructions.
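The hover details include a cost percentile: where one pixel's cost ranks among all pixels in the heat map. Below is a minimal sketch of one common percentile definition, the share of pixels at or below a given cost. It assumes per-pixel costs are already available as numbers; the values are made up, and Xcode's exact definition isn't stated in this session.

```python
from bisect import bisect_right

def percentile_of(costs, value):
    """Percentage of pixels whose cost is <= value (0-100)."""
    ordered = sorted(costs)
    return 100.0 * bisect_right(ordered, value) / len(ordered)

pixel_costs = [1, 1, 2, 2, 3, 3, 4, 8, 9, 10]  # hypothetical per-pixel costs
print(percentile_of(pixel_costs, 8))
print(percentile_of(pixel_costs, 1))
```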

The heat maps already give me ideas about why those pixels are more expensive.

I can also take it further and see exactly how my shader renders those pixels with the next new tool, Shader Execution History.

Clicking on the pixel in the performance heat map will select the underlying SIMD group.

This will reveal the shader execution history for the SIMD group below the heat maps.

Shader Execution History is divided into two major parts, a timeline on top, and the shader source code below it.

The timeline from left to right shows the progress of the selected SIMD group. From top to bottom, the full shader call stack is shown at each point of execution. This is the first time I can see exactly how SIMD groups are executed by the Apple GPUs with this powerful visualization.

By inspecting the timeline, I can immediately identify the shader functions that take most of the execution time. Metal Debugger also automatically detects loops to help you understand the progress better. Under my most expensive shader function, there's a loop with 12 iterations that accounts for 79% of the total SIMD group execution time.

In each iteration, the apply spotlight function is called, and within that call there are even more loops sampling a texture.

This is odd, there shouldn't be 12 spotlights lighting the pixels of the street. After checking my workload, I notice that there's a misconfiguration duplicating the spotlights. After removing the extra lights, the performance of the GBuffer Pass is much better.

To recap, Shader Execution History visualizes how SIMD groups were executed by the GPU.

This includes the state of the threads, function call stacks, and loops.

This provides unprecedented understanding of the shader execution that was not possible before.

So these are the new profiling tools in Xcode 15, available for M3 and A17 Pro. I cannot wait to see what you can do with them.

Now, let me hand over to my colleague, Irfan, to tell you all about profiling occupancy.

Thank you, Ruiwei, for showing the brand new tools and workflows for the GPU in M3 and A17 Pro. Hello, I'm Irfan, and I will start by showing you how occupancy profiling works on the new GPU architecture, as well as new counters to help you profile hardware ray tracing workloads.

Before I show you how to profile occupancy, I recommend watching "Explore GPU advancements in M3 and A17 Pro." That will help you better understand what I will be covering. Let's start by recapping some key concepts that are most relevant for this section.

Apple family 9 GPUs include the M3 and A17 Pro.

The GPU in both chips has various components. Each shader core has multiple execution pipelines for executing different types of instructions, such as FP32 and FP16 arithmetic, as well as reads and writes to texture and buffer resources.

It also has on-chip memory for storing different types of data a shader program may use, such as registers for storing the values of variables, thread group and tile memory for storing data shared across a compute thread group, or color attachment data shared across a tile.

These on-chip memories share L1 cache, and are backed by GPU last level cache and device memory.

Now, let's talk about how GPU performance and occupancy are related.

Suppose your Metal shader, after executing some math operations using the ALU execution pipelines, reads a buffer, whose result will be used immediately after.

Accessing the buffer may require going all the way out to device memory, which is a long latency operation. During this time, the SIMD group can't execute other operations, which causes the ALU pipelines to go unused.

To mitigate this, the shader core can execute instructions from a different SIMD group, which may have some ALU instructions of its own.

This reduces the amount of time the ALUs go unused, and allows the SIMD groups to run in parallel, thereby improving performance.

If there are additional SIMD groups running on the shader core, this can be done many times over, until the ALUs and other execution pipelines are never starved of instructions to execute.

The number of SIMD groups that are concurrently running on a shader core is called its occupancy. To achieve optimal performance, you should increase the occupancy until the ALUs on the shader core are kept busy as much as possible.
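A back-of-the-envelope model makes this relationship concrete. Assume each SIMD group alternates between C cycles of ALU work and L cycles waiting on a memory access. Assume also that the core issues ALU work from one SIMD group at a time. With N resident SIMD groups, the ALU is then busy for roughly min(1, N*C/(C+L)) of the time. This is a deliberately simplified model with hypothetical cycle counts, not a description of the Apple family 9 scheduler.

```python
import math

def alu_utilization(n_simdgroups, alu_cycles, latency_cycles):
    """Fraction of time the ALU is busy under a simple latency-hiding model."""
    period = alu_cycles + latency_cycles
    return min(1.0, n_simdgroups * alu_cycles / period)

def simdgroups_to_saturate(alu_cycles, latency_cycles):
    """Smallest occupancy at which the model's ALU utilization reaches 100%."""
    return math.ceil((alu_cycles + latency_cycles) / alu_cycles)

# Hypothetical shader: 20 cycles of math, then a 380-cycle buffer read.
for n in (1, 4, 10, 20, 24):
    print(n, alu_utilization(n, 20, 380))
print(simdgroups_to_saturate(20, 380))
```

In this model, utilization grows linearly with occupancy until about 20 SIMD groups, then flattens. Once the ALU is saturated, adding more SIMD groups buys nothing.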

Next, let me quickly explain the motivation for occupancy management on Apple family 9 GPUs.

Register, threadgroup, tile, stack, and other shader core memory types are assigned dynamically from the L1 cache, which is backed by the GPU last level cache and device memory.

Each SIMD group may use a large amount of the various on-chip shader program memories. As the number of SIMD groups increases, there may come a point when your workload uses more memory than is available in on-chip storage, which causes spills to the next cache levels. The shader cores balance thread occupancy and cache utilization to prevent cache thrashing.

This results in the shader data staying on-chip and this will keep the execution pipelines busy, resulting in better shader performance.
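To see why the shader core caps occupancy, consider how many SIMD groups' working sets fit on-chip at once. The sketch below divides an on-chip budget by a per-SIMD-group footprint built from register, threadgroup, tile, and stack memory. Every size is a hypothetical placeholder, because this session doesn't give the actual capacities of the M3 and A17 Pro shader cores. The real hardware also allocates these memories dynamically rather than by a fixed formula.

```python
from dataclasses import dataclass

@dataclass
class SimdGroupFootprint:
    """Hypothetical on-chip bytes attributed to one SIMD group."""
    registers: int
    threadgroup: int  # this SIMD group's share of threadgroup memory
    tile: int
    stack: int

    def total(self) -> int:
        return self.registers + self.threadgroup + self.tile + self.stack

def max_resident_simdgroups(on_chip_bytes: int, fp: SimdGroupFootprint) -> int:
    """SIMD groups whose data fits on-chip without spilling to the next cache level."""
    return on_chip_bytes // fp.total()

budget = 64 * 1024  # hypothetical on-chip budget in bytes
light = SimdGroupFootprint(registers=4096, threadgroup=0, tile=0, stack=0)
heavy = SimdGroupFootprint(registers=8192, threadgroup=2048, tile=4096, stack=2048)
print(max_resident_simdgroups(budget, light))
print(max_resident_simdgroups(budget, heavy))
```

Quadrupling the per-SIMD-group footprint cuts the number that fit on-chip from 16 to 4. Beyond that point, extra SIMD groups would spill, which is the situation the occupancy manager avoids.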

Xcode 15 has a new set of performance counters that can help you easily identify and address the causes of low occupancy in your workload, and achieve great performance.

Next, I will show you a workflow that can help you meet the workload performance target by increasing occupancy.

The first thing you need to see is how your Metal workload is running on the GPU, and what is the occupancy throughout its execution. Let me show you how you can do that, using Metal Debugger. Here you will see the workload execution on the GPU when the Timeline tab is selected.

It will show the duration of all the workload encoders for each shader stage. You can also view execution of shader pipelines in the section for each shader stage.

Below the encoder section is a counter section, where you can view top level performance limiters and utilizations and other helpful performance counters, like occupancy.

These counters are collected periodically while the workload executes on the GPU.

I will frequently mention performance utilization and limiters during this section, so let me briefly review what they mean.

Work is the number of items processed by a hardware block, such as arithmetic instructions in the ALU or address translation requests in the MMU. Stall is the number of times an available item is held off by a downstream block. For example, a memory instruction request may be stalled by the cache while it waits for data to come back from the next level cache or device memory.

Utilization is the work done by the hardware block in the sample period, as a percentage of the block's peak processing rate times the sample period. Limiter is calculated similarly, but it includes both work and stalls in the sample period.
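Written out, the two definitions above are: utilization = work / (peak rate × sample period), and limiter = (work + stall) / (peak rate × sample period), both expressed as percentages. Here is a direct translation, with made-up sample values for a hypothetical hardware block.

```python
def utilization(work, peak_rate, sample_period):
    """Work done as a percentage of the block's peak capacity for the sample."""
    return 100.0 * work / (peak_rate * sample_period)

def limiter(work, stall, peak_rate, sample_period):
    """Like utilization, but also counts items held off by downstream stalls."""
    return 100.0 * (work + stall) / (peak_rate * sample_period)

# Hypothetical block: peak of 2 items per cycle, sampled over 1000 cycles.
print(utilization(work=600, peak_rate=2, sample_period=1000))
print(limiter(work=600, stall=1000, peak_rate=2, sample_period=1000))
```

Here the limiter (80%) is well above the utilization (30%). That means the block spends much of the sample stalled rather than doing useful work.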

Next, I will show you how you can triage low occupancy.

Let me check the counter track, where total occupancy looks to be low. Let me also take a look at other performance limiters when the occupancy is low.

While the total occupancy is low, you can see that the performance limiter for FP16, an ALU subunit, is around 100%. That means FP16 was busy throughout the interval. In this scenario, if you try to increase occupancy, you may not see any performance improvement if the newly added SIMD groups primarily want to do FP16 work.

Reducing the FP16 instructions in your shader will most likely improve overall shader performance.

Here is a different workload, where both occupancy and all ALU limiters are low. That means occupancy is not high enough to avoid ALU starvation, which works against the optimization goal of keeping the ALUs busy. Next, I will show you how to triage the causes of low occupancy and raise occupancy enough that the workload is limited by ALU or memory bandwidth, rather than by occupancy.
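The two scenarios above can be summarized as a small decision function. The 80% and 30% thresholds are hypothetical choices for illustration; Xcode doesn't prescribe these cutoffs, and in practice you read the values from the counter tracks.

```python
def classify(occupancy, alu_limiters, high=80.0, low=30.0):
    """Rough first-pass reading of occupancy and ALU limiter percentages.

    alu_limiters maps an ALU subunit name (e.g. "FP16", "FP32") to its
    limiter percentage for the same time interval.
    """
    busiest, value = max(alu_limiters.items(), key=lambda kv: kv[1])
    if value >= high:
        # The pipeline is already saturated; more SIMD groups won't help.
        return f"{busiest}-limited: reduce {busiest} instructions"
    if occupancy <= low:
        # Nothing is saturated and few SIMD groups are resident.
        return "occupancy-limited: triage the cause of low occupancy"
    return "inconclusive: inspect other limiters"

print(classify(20.0, {"FP16": 99.0, "FP32": 10.0}))
print(classify(20.0, {"FP16": 12.0, "FP32": 15.0}))
```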

The Shader Launch Limiter counter includes both the work done launching threads in the shader core and the stalls when threads cannot be launched due to back pressure. A low value indicates that not enough threads are being launched because the workload is too small. A high value indicates that either enough threads are being launched, or launches are being stalled by back pressure.

First, I will start by checking if enough shader threads are getting launched into the shader cores, by inspecting this counter value in the counters track. Here, you can see the Compute Shader Launch limiter is just 0.07%. As I mentioned earlier, a small counter value indicates that the shader cores are getting starved because this workload is not large enough to fill the GPU.

Now, let's look at a different workload I profiled.

And here you can see shader launch limiters are high. This means that either enough threads are getting launched, or thread launch is getting stalled due to back pressure, possibly due to running out of memory resources needed by those threads.

Let's understand what to do next in order to continue the investigation.

There are a couple of possible reasons for low occupancy when the Shader Launch Limiter counter is high. First, I will check whether any compute dispatch executing during this time uses a large amount of threadgroup memory. If so, the shader cores will stall launching new threads because threadgroup memory is unavailable, resulting in low occupancy.

Here I profiled a different, simpler workload consisting of just one compute pass. The GPU timeline shows the dispatches that were executing at any given time. With a compute encoder selected in the GPU timeline, you can see how much threadgroup memory is set for each dispatch in the encoder. This dispatch uses just 2 KB of threadgroup memory, which is low, so I can rule out threadgroup memory as the cause of the shader launch stalls. Shader cores can also use the occupancy manager to set a target for maximum occupancy, balancing thread utilization against cache thrashing. For this workload, that leaves the Occupancy Manager Target counter to check whether the GPU is restricting occupancy.

The occupancy manager restricts occupancy to keep register, threadgroup, tile, and stack memory on-chip. I can check the Occupancy Manager Target counter in the timeline counter track. As you can see, it is lower than 100%. This indicates that the GPU has engaged the occupancy manager to keep the various shader memory types on-chip. Otherwise, that data would spill to the GPU last level cache or, worse, to device memory.
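The checks described so far follow a fixed order, sketched below. The thresholds (`launch_low`, `threadgroup_bytes_limit`) are hypothetical placeholders. A real investigation reads these values from the counter tracks in Metal Debugger rather than from code.

```python
def triage_low_occupancy(shader_launch_limiter, threadgroup_bytes,
                         occupancy_manager_target,
                         launch_low=5.0, threadgroup_bytes_limit=16 * 1024):
    """Return the next thing to investigate when occupancy is low.

    Percentages are 0-100, as shown in the GPU timeline counter tracks;
    threadgroup_bytes is the threadgroup memory set for the dispatch.
    """
    if shader_launch_limiter < launch_low:
        return "workload too small to fill the GPU"
    if threadgroup_bytes > threadgroup_bytes_limit:
        return "threadgroup memory is stalling thread launches"
    if occupancy_manager_target < 100.0:
        return "occupancy manager engaged: check L1 eviction rate"
    return "look at other limiters"

# Values resembling the two workloads discussed above.
print(triage_low_occupancy(0.07, 2048, 100.0))
print(triage_low_occupancy(90.0, 2048, 60.0))
```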

Here is a flow chart you can use to triage low occupancy when the Occupancy Manager Target counter is low. I will start by inspecting the L1 eviction rate counter. It measures how well register, threadgroup, tile, and stack memory are able to stay on-chip, instead of being spilled to the next cache level. In the L1 eviction rate counter track, I can see high spikes. These indicate that heavy shader core memory accesses are thrashing the L1 cache and data is being evicted.

Now, let me show you how you can figure out which of these shader core memories are the cause of these evictions.

To figure out which L1-backed on-chip shader core memory is causing the evictions, we need to see which memory type accesses L1 most frequently, and which memory type has been allocated the largest percentage of cache lines.

If you inspect L1 load and store bandwidth counter track in GPU timeline, you can see L1 bandwidths of various on-chip L1 backed memories. As can be seen here, image block L1 has the highest L1 memory store bandwidth.

Similarly, image block L1 has the highest L1 load bandwidth, and it is causing most of the L1 evictions.

L1 residency counter track will show you the breakdown of L1 cache allocations among various on-chip memories, and you can find out which shader core memory has the largest allocation in L1.

Here you can see, again, that image block L1 memory has the largest working set size, and is most likely the cause of the high L1 eviction rate. In this case, you can reduce the L1 eviction rate by using smaller pixel formats. If you're using MSAA and your workload has highly complex geometry, reducing the sample count will also help reduce the L1 eviction rate.
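To see why pixel format and sample count matter, estimate the image block footprint of one tile: bytes per pixel summed over the color attachments, times the sample count, times the pixels per tile. The 32 × 32 tile size and the G-buffer attachment lists below are assumptions chosen for illustration. The estimate also ignores depth and any compression, so use it only as a relative comparison.

```python
# Bytes per pixel for a few common color formats.
BYTES_PER_PIXEL = {
    "rgba32Float": 16,
    "rgba16Float": 8,
    "rgba8Unorm": 4,
    "rg11b10Float": 4,
}

def tile_bytes(formats, sample_count=1, tile_w=32, tile_h=32):
    """Approximate image block bytes for one tile (tile size is an assumption)."""
    per_sample = sum(BYTES_PER_PIXEL[f] for f in formats)
    return per_sample * sample_count * tile_w * tile_h

gbuffer_wide = ["rgba32Float", "rgba16Float", "rgba16Float"]  # hypothetical
gbuffer_slim = ["rgba8Unorm", "rg11b10Float", "rgba16Float"]  # hypothetical

print(tile_bytes(gbuffer_wide, sample_count=4))
print(tile_bytes(gbuffer_slim, sample_count=4))
print(tile_bytes(gbuffer_slim, sample_count=1))
```

Halving the bytes per pixel halves the footprint. Dropping from 4x MSAA to a single sample cuts it by another factor of four, so both levers shrink the image block working set that competes for L1.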

After reducing the frequency of accesses and the allocation size of on-chip memory causing L1 evictions, I needed to make sure that my changes have the desired effect.

After optimizing memory and re-profiling, I first check the other limiters to see whether the workload is now ALU or memory bandwidth limited. If it is, the workload is no longer occupancy limited, and I don't need to triage low occupancy. If it isn't, I check the occupancy and Occupancy Manager Target counter values again, and keep repeating this process until the L1 eviction rate is low.

Here, the L1 eviction rate is low, so the occupancy manager target in this case seems to be engaged due to GPU last level cache or MMU stalls. This can happen when device memory accesses thrash the last level cache or generate TLB misses. Let me show you how to see these stalls in a different workload. GPU last level cache utilization measures the time during which the cache serviced read and write requests, as a percentage of peak last level cache bandwidth. The last level cache limiter includes its utilization time, plus the time during which it is stalled due to cache thrashing or back pressure from main memory. If the GPU last level cache limiter is much higher than its utilization, the cache is getting stalled a lot due to thrashing. You can reduce these stalls by reducing your buffer sizes and improving spatial and temporal locality.

Similarly, in the MMU counter track, if you see MMU limiter is much higher than MMU utilization, then device buffer accesses are causing TLB misses and MMU is thrashing. Reducing incoherent memory accesses to buffers can help reduce these stalls.
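Both checks compare a block's limiter with its utilization: the gap between them is time spent stalled rather than working. Below is a minimal sketch with hypothetical counter readings. Treating a 2× ratio as "much higher" is an arbitrary assumption, not an Xcode rule.

```python
def stall_gap(limiter_pct, utilization_pct):
    """Percentage points of the sample period spent stalled, not working."""
    return max(0.0, limiter_pct - utilization_pct)

def is_thrashing(limiter_pct, utilization_pct, ratio=2.0):
    """Flag a block whose limiter is much higher than its utilization."""
    if utilization_pct <= 0.0:
        return limiter_pct > 0.0
    return limiter_pct / utilization_pct >= ratio

counters = {  # hypothetical (limiter, utilization) readings for one interval
    "GPU last level cache": (70.0, 25.0),
    "MMU": (40.0, 35.0),
}
for block, (lim, util) in counters.items():
    print(block, stall_gap(lim, util), is_thrashing(lim, util))
```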

Once I have optimized device memory accesses and updated the workload, I will profile again. If other limiters are high, I will focus on reducing those, as the workload is no longer occupancy limited. If other limiters are low, I will keep repeating the triage process shown earlier until the workload is no longer limited by low occupancy.

Using these newly added performance counters, you will be able to keep the instruction execution pipelines busy, resulting in great shader performance.

Now, let's move over to ray tracing profiling. With the new ray tracing hardware accelerator built into Apple family 9 GPUs, you can render incredibly realistic life-like scenes in real time. Let me show you how Metal Debugger in Xcode can help you optimize the performance for your ray tracing workloads.

I've been working on an app that renders a truck, and I've used ray tracing to render some pretty nice reflections. It is already rendering incredibly fast with the new hardware, but I'm curious to see if I can make it even faster. To help you achieve the best ray tracing performance, Xcode includes a new set of ray tracing counters, in addition to the Acceleration Structure Viewer you know and love. You can also use the Shader Cost Graph that Ruiwei showed earlier to analyze your shaders and custom intersection functions. Let's start with the counters.

I have captured a frame of my renderer and opened up the performance timeline. Xcode now includes a new ray tracing group, which contains a comprehensive set of tracks to help you understand how your workload is running on the new ray tracing hardware.

Let's inspect each one.

The first track shows ray occupancy. The hardware is capable of executing a large number of rays concurrently, and ray occupancy shows the percentage of them that are active. Just like with thread occupancy, the Apple family 9 GPU shader core also automatically optimizes ray occupancy to ensure your app runs with maximum performance.

Assuming the workload I have is not starved by the number of rays, I will start by checking the occupancy manager target.

Follow the same process as before, but pay particular attention to the ray tracing scratch category within L1 residency and bandwidth.

The ray tracing unit uses a sizable portion of L1 as a scratch buffer, which you can reduce by optimizing your payload size.
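Payload size adds up because the ray tracing unit keeps scratch state for many rays in flight. The sketch below uses Python's `ctypes` to compare two hypothetical payload layouts; the field names are invented for illustration. Metal Shading Language alignment rules differ from C's (for example, `float3` occupies 16 bytes in MSL), so treat the byte counts only as a rough comparison.

```python
import ctypes

class WidePayload(ctypes.Structure):
    """Hypothetical payload storing full-precision color and normal."""
    _fields_ = [
        ("color", ctypes.c_float * 4),
        ("normal", ctypes.c_float * 4),
        ("distance", ctypes.c_float),
        ("instance_id", ctypes.c_uint32),
        ("flags", ctypes.c_uint32),
    ]

class CompactPayload(ctypes.Structure):
    """Hypothetical lower-precision encoding of similar information."""
    _fields_ = [
        ("color", ctypes.c_uint16 * 4),           # half-precision bit patterns
        ("normal_oct", ctypes.c_uint16 * 2),      # octahedral-encoded normal
        ("distance", ctypes.c_float),
        ("instance_and_flags", ctypes.c_uint32),  # instance ID and flags packed together
    ]

print(ctypes.sizeof(WidePayload))
print(ctypes.sizeof(CompactPayload))
```

With the compact layout, each ray needs less than half the payload bytes. The trade-off is lower precision and some packing and unpacking work in the shader.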

Re-profile and repeat the triaging process I showed you in the last section.

The next set of tracks provide a percentage breakdown of what the active rays are working on, which can help you gain a better understanding of areas to improve. For example, at this point in time, 75% of my active rays were performing instance transform. That seems quite high, considering my scene should only have two instances of truck in it.

Now, if you notice something like this in your own workload, it might be worth investigating your scene to make sure there's minimal instance overlap. I'll dig deeper into that later, using the acceleration structure viewer. So let's move on for now.

Finally, the intersection test tracks show a percentage breakdown for the primitive intersections being performed. For my renderer, it shows that the hardware is only running opaque triangle tests without any motion. To achieve the best performance, try to maximize opaque triangle tests, and only use custom intersection functions on geometries that need them, like objects requiring alpha testing.

And that concludes the new ray tracing counters. They are fantastic for gaining an understanding of how the hardware is executing your workload, and are a great place to start triaging performance. In this case, I found that the instance transforms were suspiciously high, which indicates a potential issue with the scene.

To triage scene issues like potential instance overlap, you can use the Acceleration Structure Viewer. Let's take a look. First, let's find a dispatch that uses the acceleration structure. I'll click the encoder in the timeline, then select the dispatch.

Finally, I'll double-click the instance acceleration structure.

This opens the Acceleration Structure Viewer, which provides a breakdown of my acceleration structure on the left, and a preview on the right. I can also highlight different aspects of my acceleration structure.

Now, I want to investigate transforms, so let me turn on instance traversals highlight mode. The hotspots are shown in blue. From the preview, it already seems like there are way more than the two instances I was expecting. I'll hover my mouse over this dark blue area to inspect exactly how many instances the ray is traversing. Eight, that means my ray needs to traverse eight instances before it finds the closest intersection. That's way more than two. This explains why the active rays were mostly working on instance transforms, but why are there so many? Let me switch over to the instance highlight mode, which gives each instance its own distinct color.

Ah, it looks like different parts of the truck are separate instances, and they're all on top of each other. To achieve the best performance in this case, I should concatenate these instances into a single primitive acceleration structure. However, the fix might not be a code change: the issue with my acceleration structure could be a symptom of a problem in my asset pipeline.
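To see why stacked instances are costly, count how many instance bounding boxes a single ray enters. Each box the ray enters requires an instance transform and a traversal of that instance's own acceleration structure. The sketch uses the standard ray/box slab test on hypothetical axis-aligned boxes. It compares eight truck parts, given identical bounds for simplicity, with one box for the concatenated mesh.

```python
def ray_hits_aabb(origin, direction, box_min, box_max):
    """Slab test: does the ray origin + t*direction (t >= 0) enter the box?"""
    t_near, t_far = 0.0, float("inf")
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            if o < lo or o > hi:
                return False  # parallel to this slab and outside it
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True

def instances_traversed(origin, direction, boxes):
    """Number of instance bounding boxes the ray must enter."""
    return sum(ray_hits_aabb(origin, direction, lo, hi) for lo, hi in boxes)

truck_bounds = ((-2.0, 0.0, -1.0), (2.0, 1.5, 1.0))
# Eight parts exported as separate instances that sit on top of each other.
per_part_instances = [truck_bounds] * 8
# The same geometry concatenated into a single primitive acceleration structure.
merged_instance = [truck_bounds]

ray_origin, ray_dir = (0.0, 0.5, -5.0), (0.0, 0.0, 1.0)
print(instances_traversed(ray_origin, ray_dir, per_part_instances))
print(instances_traversed(ray_origin, ray_dir, merged_instance))
```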

So I did some investigation and got a new truck asset, which fixed the issue. Notice how much better the instance traversals are now.

To recap, you can use both the new ray tracing counters and the Acceleration Structure Viewer to unlock the incredible ray tracing performance of Apple family 9 GPUs. You should also continue to follow the best practices for ray tracing, which you can learn more about in these other talks.

Now, let's recap everything that was covered today.

Xcode 15 adds new state-of-the-art GPU profiling tools for Apple family 9 GPUs. You can use Shader Cost Graph to immediately find and triage expensive shaders.

Using Performance Heat Maps, you can determine exactly which objects or pixels make a shader more expensive.

With the Shader Execution History tool, identifying shader functions that take most of the execution time is a breeze.

You can triage the causes of low occupancy using the newly added performance counters in Xcode 15, and get the best performance.

Using the new performance counters for ray tracing, in addition to Acceleration Structure Viewer, you can get the best ray tracing performance.

Thanks for watching.
