Retired Document

Important: This document does not represent best practices for current development. You should convert your app to use AVFoundation instead. See Transitioning QTKit Code to AV Foundation.

Creating a QTKit Stop Motion Application

Following the steps outlined in this chapter, you construct a simple stop motion recorder application that lets you capture a live video feed, grab frames one at a time with great accuracy, and then record the output of those frames to a QuickTime movie. You accomplish this with fewer than 100 lines of Objective-C code, constructing the sample as you’ve done in previous chapters, in Xcode 3.2 and Interface Builder 3.2.

In building your stop motion recorder application, you work with the following three QTKit classes:

QTCaptureSession. The primary interface for capturing media streams.

QTCaptureDecompressedVideoOutput. The output destination for a QTCaptureSession object that you can use to process decompressed frames from the video that is being captured. Using the methods provided in this class, you can produce decompressed video frames that are suitable for high-quality video processing.

QTCaptureDeviceInput. The input source for media devices, such as cameras and microphones.

The sample code described in this chapter does not support input from DV cameras, which are of type QTMediaTypeMuxed, rather than QTMediaTypeVideo. To add support for DV cameras, read the chapter Extending the Media Player Application.

Prototype the Stop Motion Recorder Application

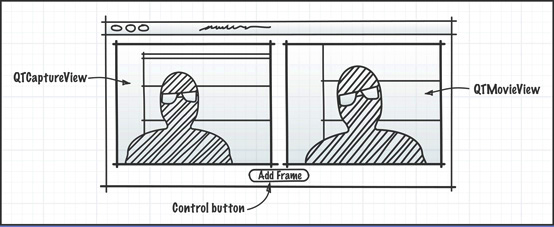

Just as you’ve done in the section Prototype the Recorder, start by creating a rough sketch of your QTKit stop motion recorder application. Think, again, of what design elements you want to incorporate into the application.

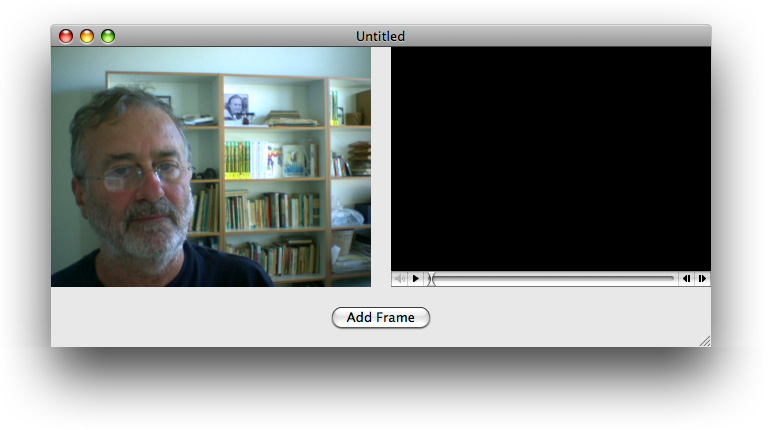

In this design prototype, you start with three simple objects: a capture view, a QuickTime movie view, and a single button to add frames. These will be the building blocks for your application. You can add more complexity to the design later on. After you’ve sketched out your prototype, think about how to hook up the objects in Interface Builder and what code you need in your Xcode project to make this happen. Note that you need to add a movie controller to the QTMovieView object in the illustration above.

Create the Project Using Xcode 3.2

To create the project, follow these steps, as in previous chapters:

Launch Xcode 3.2 and choose File > New Project.

When the new project window appears, select Cocoa Document-based Application and click Choose.

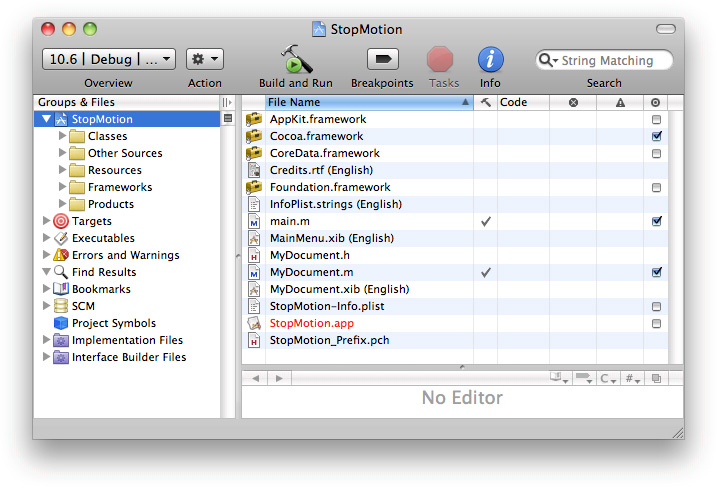

Name the project StopMotion and navigate to the location where you want the Xcode application to create the project folder.

The Xcode project window appears.

From the Action menu in your Xcode project, choose Add > Add to Existing Frameworks.

Add the QTKit framework to your StopMotion project, which resides in the /System/Library/Frameworks directory.

Now add the Quartz Core framework to your project, which also resides in the /System/Library/Frameworks directory.

Select QuartzCore.framework, and click Add when the Add To Targets window appears.

This completes the first sequence of steps in your project. In the next sequence, you define actions and outlets in Xcode before working with Interface Builder.

Because you’ve already prototyped your QTKit stop motion recorder application, at least in rough form with a clearly defined data model, you can now determine which actions and outlets need to be implemented. In this case, you have a QTCaptureView object, which is a subclass of NSView, a QTMovieView object to display your captured frames, and one button to record your captured media content and add each single frame to your QuickTime movie output.

Import the QTKit Headers and Set Up Your Implementation File

Open the MyDocument.h declaration file in your Xcode project.

In the file, delete the Cocoa import statement and replace it with the QTKit import statement.

    #import <QTKit/QTKit.h>

Open the MyDocument.m implementation file in your project. Delete the contents of the file except for the following lines of code:

    #import "MyDocument.h"
    @implementation MyDocument
    - (NSString *)windowNibName
    {
        return @"MyDocument";
    }
    @end

Save your file.

Determine the Actions and Outlets You Want

Now begin adding outlets and actions.

In your MyDocument.h file, add the instance variables mCaptureView and mMovieView.

    IBOutlet QTCaptureView *mCaptureView;
    IBOutlet QTMovieView *mMovieView;

Add the addFrame: action method.

    - (IBAction)addFrame:(id)sender;

Save your file.

Now open your MyDocument.m file and add the same action method, followed by braces for the code you add later to implement this action.

    - (IBAction)addFrame:(id)sender
    {
    }

This completes the second stage of your project. Now you work with Interface Builder 3.2 to construct the user interface for your project. Be sure that you have saved both your declaration and implementation files, so that the actions and outlets you declared are synchronously updated in your Interface Builder nib.

Create the User Interface

In the next phase of your project you construct the user interface for your project.

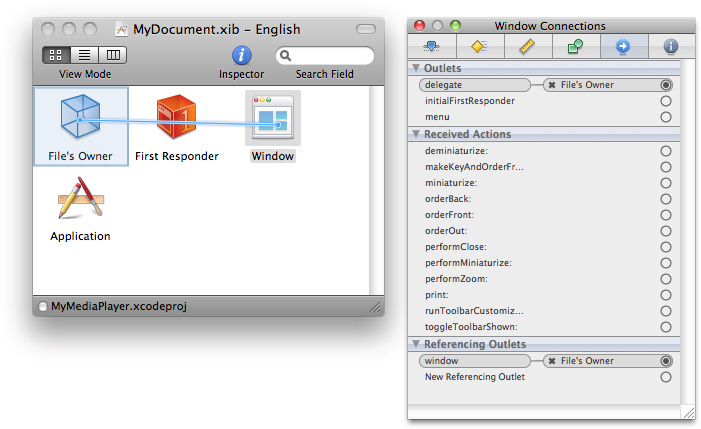

Open Interface Builder 3.2 and click the

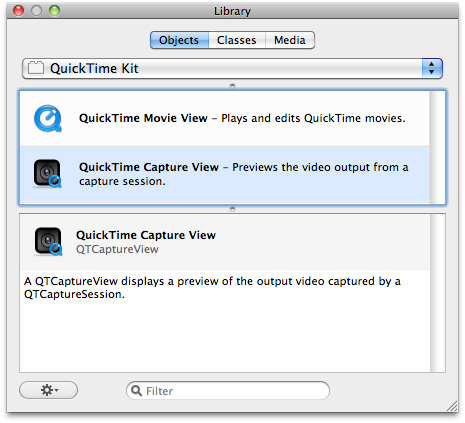

MyDocument.xibfile in your Xcode project window.In Interface Builder 3.2, select Tools > Library to open a library of objects.

Scroll down to the QuickTime capture view object.

The

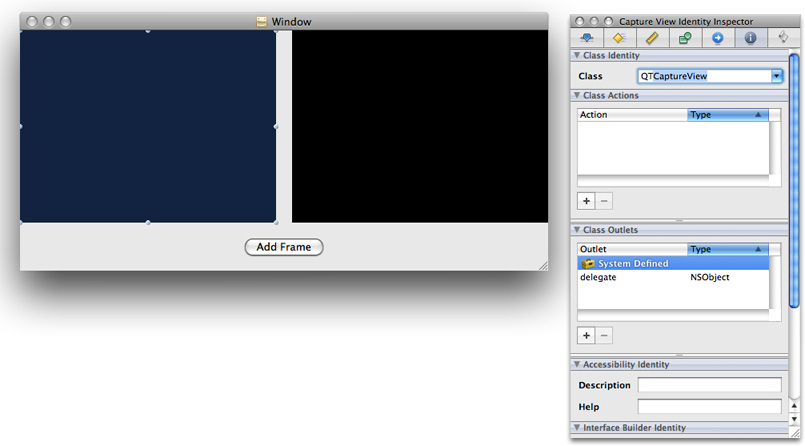

QTCaptureViewobject provides you with an instance of a view subclass to display a preview of the video output that is captured by a capture session.Drag the

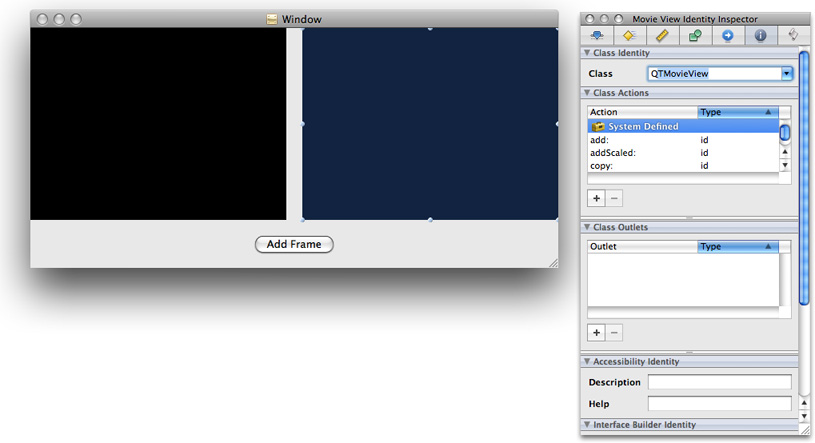

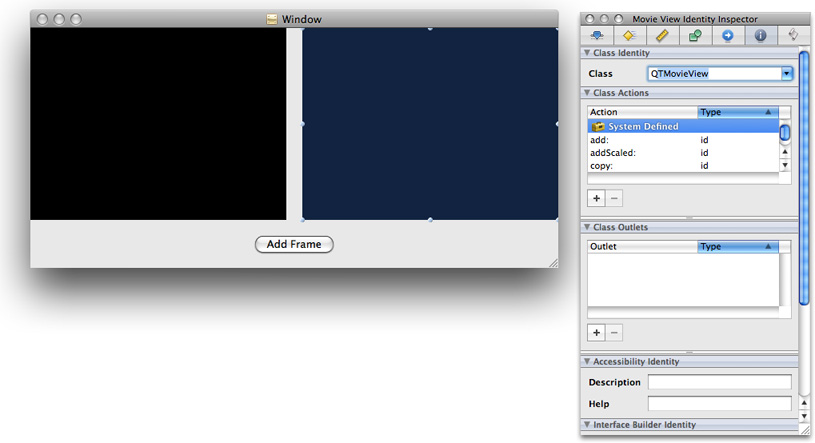

QTCaptureViewobject into your window and resize the object to fit the window, allowing room at the bottom for your Add Frame button (already shown in the illustration below) and to the right for yourQTMovieViewobject in your QTKit stop motion recorder application.Choose Tools > Inspector. In the Identity panel, select the information (“i”) icon. Click in the field Class and your

QTCaptureViewobject appears.

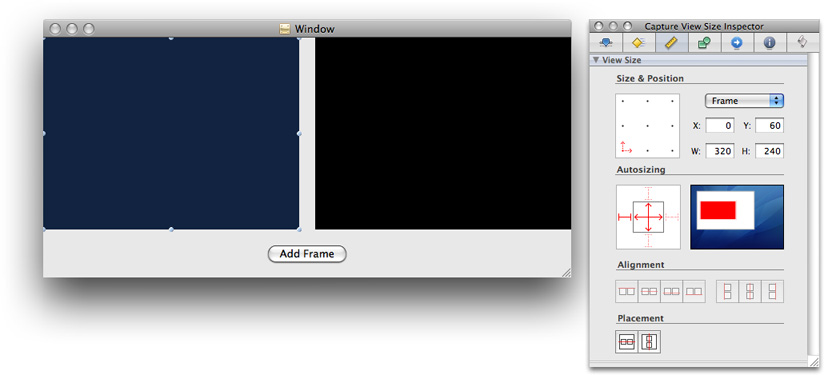

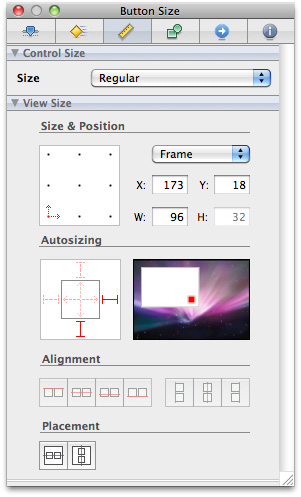

Set the autosizing for the object in the Capture View Size panel.

Now repeat the same sequence described in Step #2 to add your QTMovieView object to your window (already shown above).

Scroll to the QTMovieView object.

Select the QTMovieView object (symbolized by the blue Q) and drag it into your window next to the QTCaptureView object, shown below.

Choose Tools > Inspector. In the Identity Inspector, select the information (“i”) icon. Click in the Class field and your QTMovieView object appears.

Set the autosizing for your QTMovieView object, as you did for the QTCaptureView object in the steps above.

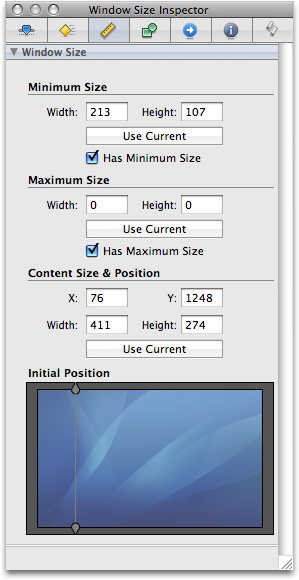

Now specify the window attributes in your MyDocument.xib file.

Select the window object in your nib.

Click the attributes icon in the panel.

Define the window size you want in your MyDocument.xib by selecting the size icon (symbolized by a yellow ruler) in the Window Size panel.

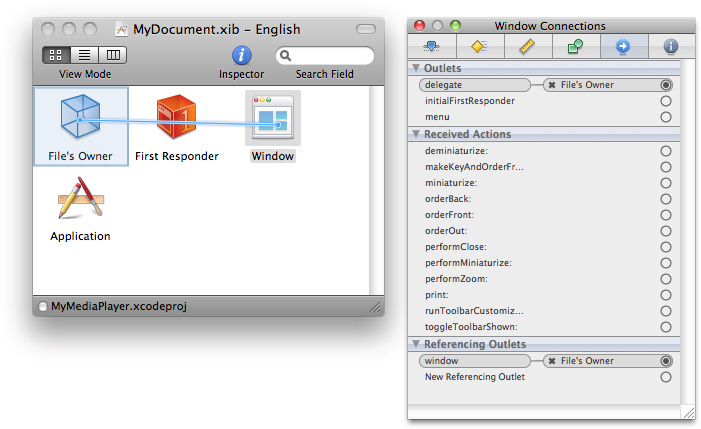

Specify the delegate outlet connections of File’s Owner in the Window Connections panel.

In the Library, select the Push Button control and drag it to the window.

Enter the text Add Frame.

Set the autosizing for the button at the center and right outside corner, leaving the inside struts untouched, as shown in the illustration.

Select the

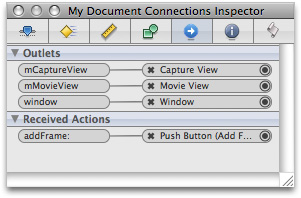

MyDocument.xibfile and click the Connections Inspector.Now wire up the outlets and received actions.

Control-drag each outlet instance variable to the File’s Owner object.

Select the File’s Owner object in your

MyDocument.xibfile.Click the Class Identity icon in the Interface Builder Inspector.

Note that the green light at the left corner of your

StopMotion.xcodeprojis turned on, indicating that Xcode and Interface Builder have synchronized the actions and outlets in your project.

Save your Interface Builder file.

Prepare to Capture Single-Frame Video

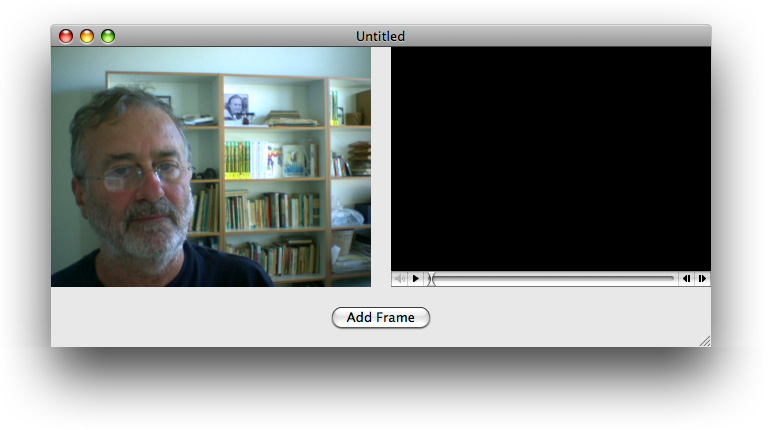

In the last phase of your project, after adding and completing the implementation code, you’re ready to capture single-frame video, using your stop motion recorder application, as shown below.

Complete the Implementation of the MyDocument Class in Xcode

To complete the implementation of the MyDocument class, you define the instance variables that point to the capture session, as well as to the device input and decompressed video output objects.

In your Xcode project, add the instance variables to the interface declaration.

Add these lines of code in your MyDocument.h declaration file:

    @interface MyDocument : NSDocument
    {
        QTMovie *mMovie;
        QTCaptureSession *mCaptureSession;
        QTCaptureDeviceInput *mCaptureDeviceInput;
        QTCaptureDecompressedVideoOutput *mCaptureDecompressedVideoOutput;
    }

The mMovie instance variable points to the QTMovie object, and the mCaptureSession instance variable points to the QTCaptureSession object. Likewise, the mCaptureDeviceInput instance variable points to the QTCaptureDeviceInput object, and the mCaptureDecompressedVideoOutput instance variable points to the QTCaptureDecompressedVideoOutput object.

Declare the mCurrentImageBuffer instance variable.

    CVImageBufferRef mCurrentImageBuffer;

This instance variable stores the most recent frame that you’ve grabbed in a CVImageBufferRef.

That completes the code you need to add to your MyDocument.h file.

Open your MyDocument.m file and follow these steps.

Create an empty movie that writes to mutable data in memory, using the initToWritableData:error: method.

    - (void)windowControllerDidLoadNib:(NSWindowController *)aController
    {
        NSError *error = nil;
        [super windowControllerDidLoadNib:aController];
        [[aController window] setDelegate:self];
        if (!mMovie) {
            mMovie = [[QTMovie alloc] initToWritableData:[NSMutableData data] error:&error];
            if (!mMovie) {
                [[NSAlert alertWithError:error] runModal];
                return;
            }
        }

Set up a capture session that outputs the raw frames you want to grab.

        [mMovieView setMovie:mMovie];
        if (!mCaptureSession) {
            BOOL success;
            mCaptureSession = [[QTCaptureSession alloc] init];

Find a video device and add a device input for that device to the capture session.

            QTCaptureDevice *device = [QTCaptureDevice defaultInputDeviceWithMediaType:QTMediaTypeVideo];
            success = [device open:&error];
            if (!success) {
                [[NSAlert alertWithError:error] runModal];
                return;
            }
            mCaptureDeviceInput = [[QTCaptureDeviceInput alloc] initWithDevice:device];
            success = [mCaptureSession addInput:mCaptureDeviceInput error:&error];
            if (!success) {
                [[NSAlert alertWithError:error] runModal];
                return;
            }

Add a decompressed video output to the session. Its delegate receives the raw frames you grab.

            mCaptureDecompressedVideoOutput = [[QTCaptureDecompressedVideoOutput alloc] init];
            [mCaptureDecompressedVideoOutput setDelegate:self];
            success = [mCaptureSession addOutput:mCaptureDecompressedVideoOutput error:&error];
            if (!success) {
                [[NSAlert alertWithError:error] runModal];
                return;
            }

Preview the video from the session in the document window.

            [mCaptureView setCaptureSession:mCaptureSession];

Start the session, using the startRunning method you’ve used previously in the MyRecorder sample code.

            [mCaptureSession startRunning];
        }
    }

Implement a delegate method that QTCaptureDecompressedVideoOutput calls whenever it receives a frame.

    - (void)captureOutput:(QTCaptureOutput *)captureOutput didOutputVideoFrame:(CVImageBufferRef)videoFrame withSampleBuffer:(QTSampleBuffer *)sampleBuffer fromConnection:(QTCaptureConnection *)connection
    {

Store the latest frame. Do this in a @synchronized block because the delegate method is not called on the main thread.

        CVImageBufferRef imageBufferToRelease;
        CVBufferRetain(videoFrame);
        @synchronized (self) {
            imageBufferToRelease = mCurrentImageBuffer;
            mCurrentImageBuffer = videoFrame;
        }
        CVBufferRelease(imageBufferToRelease);
    }
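The retain, swap, release sequence in the delegate method can be tried outside QTKit. The following is a minimal sketch in plain C (which also compiles as Objective-C), assuming a hypothetical reference-counted Frame type in place of CVImageBufferRef and a pthread mutex in place of @synchronized. The names Frame, frame_create, frame_retain, frame_release, store_latest, copy_latest, and g_destroyed are illustrative only and are not part of QTKit or Core Video.

```c
#include <assert.h>
#include <pthread.h>
#include <stdatomic.h>
#include <stdlib.h>

/* Hypothetical stand-in for a reference-counted CVImageBufferRef. */
typedef struct Frame {
    atomic_int refcount;
    int id;
} Frame;

/* Counts destroyed frames so the sketch can be checked (illustration only). */
atomic_int g_destroyed = 0;

static pthread_mutex_t g_lock = PTHREAD_MUTEX_INITIALIZER;
static Frame *g_current = NULL;  /* plays the role of mCurrentImageBuffer */

/* Creates a frame owned by the caller (reference count 1). */
Frame *frame_create(int id) {
    Frame *f = malloc(sizeof *f);
    assert(f != NULL);
    atomic_init(&f->refcount, 1);
    f->id = id;
    return f;
}

/* NULL-safe retain; returns its argument for convenience. */
Frame *frame_retain(Frame *f) {
    if (f != NULL) {
        atomic_fetch_add(&f->refcount, 1);
    }
    return f;
}

/* NULL-safe release; frees the frame when the last reference goes away. */
void frame_release(Frame *f) {
    if (f != NULL && atomic_fetch_sub(&f->refcount, 1) == 1) {
        free(f);
        atomic_fetch_add(&g_destroyed, 1);
    }
}

/* Capture-thread side: keep the newest frame, drop the previous one. */
void store_latest(Frame *incoming) {
    Frame *to_release;
    frame_retain(incoming);          /* the slot takes its own reference */
    pthread_mutex_lock(&g_lock);
    to_release = g_current;          /* only the pointer swap is locked */
    g_current = incoming;
    pthread_mutex_unlock(&g_lock);
    frame_release(to_release);       /* release outside the lock */
}

/* Main-thread side: take a reference to the newest frame (may be NULL). */
Frame *copy_latest(void) {
    Frame *f;
    pthread_mutex_lock(&g_lock);
    f = frame_retain(g_current);
    pthread_mutex_unlock(&g_lock);
    return f;
}
```

In this sketch, each call to store_latest releases the slot’s reference to the previously stored frame, and copy_latest hands the caller its own reference, which the caller must release when finished. Keeping only the pointer swap inside the lock keeps the critical section short.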

Handle window closing notifications for your device input and stop the capture session.

    - (void)windowWillClose:(NSNotification *)notification
    {
        [mCaptureSession stopRunning];
        QTCaptureDevice *device = [mCaptureDeviceInput device];
        if ([device isOpen])
            [device close];
    }

Deallocate memory for your capture objects.

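    - (void)dealloc
    {
        [mMovie release];
        [mCaptureSession release];
        [mCaptureDeviceInput release];
        [mCaptureDecompressedVideoOutput release];
        CVBufferRelease(mCurrentImageBuffer); // Release the last stored frame; CVBufferRelease is NULL-safe.
        [super dealloc];
    }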

Specify the output destination for your recorded media, in this case an editable QuickTime movie.

    - (BOOL)readFromURL:(NSURL *)absoluteURL ofType:(NSString *)typeName error:(NSError **)outError
    {
        QTMovie *newMovie = [[QTMovie alloc] initWithURL:absoluteURL error:outError];
        if (newMovie) {
            [newMovie setAttribute:[NSNumber numberWithBool:YES] forKey:QTMovieEditableAttribute];
            [mMovie release];
            mMovie = newMovie;
        }
        return (newMovie != nil);
    }
    - (BOOL)writeToURL:(NSURL *)absoluteURL ofType:(NSString *)typeName error:(NSError **)outError
    {
        return [mMovie writeToFile:[absoluteURL path] withAttributes:[NSDictionary dictionaryWithObject:[NSNumber numberWithBool:YES] forKey:QTMovieFlatten] error:outError];
    }

Add the addFrame: action method that you specified previously in your implementation file. This enables you to get the most recent frame that you’ve grabbed. Do this in a @synchronized block because the delegate method that sets the most recent frame is not called on the main thread. Note that you’re wrapping a CVImageBufferRef object into an NSImage. After you create an NSImage, you can then add it to the movie.

    - (IBAction)addFrame:(id)sender
    {
        CVImageBufferRef imageBuffer;
        @synchronized (self) {
            imageBuffer = CVBufferRetain(mCurrentImageBuffer);
        }
        if (imageBuffer) {
            NSCIImageRep *imageRep = [NSCIImageRep imageRepWithCIImage:[CIImage imageWithCVImageBuffer:imageBuffer]];
            NSImage *image = [[[NSImage alloc] initWithSize:[imageRep size]] autorelease];
            [image addRepresentation:imageRep];
            CVBufferRelease(imageBuffer);
            [mMovie addImage:image forDuration:QTMakeTime(1, 10) withAttributes:[NSDictionary dictionaryWithObjectsAndKeys:
                @"jpeg", QTAddImageCodecType, nil]];
            [mMovie setCurrentTime:[mMovie duration]];
            [mMovieView setNeedsDisplay:YES];
            [self updateChangeCount:NSChangeDone];
        }
    }
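Each call to addImage:forDuration:withAttributes: above appends a frame that lasts QTMakeTime(1, 10), that is, 1 unit at a time scale of 10 units per second, or one tenth of a second. The sketch below works through that arithmetic in plain C, assuming a hypothetical SketchTime struct that mirrors only the value and scale fields of a QTTime. None of these names are QTKit API.

```c
#include <assert.h>

/* Hypothetical stand-in for QTTime: a count of units at a units-per-second scale. */
typedef struct {
    long long value;  /* number of time units */
    long scale;       /* time units per second */
} SketchTime;

/* Mirrors the argument order of QTMakeTime(timeValue, timeScale). */
SketchTime sketch_make_time(long long value, long scale) {
    assert(scale > 0);
    SketchTime t = { value, scale };
    return t;
}

/* Total movie duration after adding frame_count frames of equal duration. */
SketchTime sketch_total_duration(SketchTime per_frame, long frame_count) {
    assert(frame_count >= 0);
    return sketch_make_time(per_frame.value * frame_count, per_frame.scale);
}

/* Converts a time to seconds. */
double sketch_seconds(SketchTime t) {
    return (double)t.value / (double)t.scale;
}

/* Playback rate, in frames per second, implied by a per-frame duration. */
double sketch_frames_per_second(SketchTime per_frame) {
    assert(per_frame.value > 0);
    return (double)per_frame.scale / (double)per_frame.value;
}
```

With a per-frame duration of sketch_make_time(1, 10), 25 added frames give a total of 25 units at scale 10, or 2.5 seconds, and playback runs at 10 frames per second. Passing a different duration to QTMakeTime in addFrame: would change the playback rate accordingly.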

Build and Compile Your Stop Motion Recorder Application

After you’ve saved your project, click Build and Go. After compiling, click the Add Frame button to record each captured frame and output that frame to a QuickTime movie. The output of your captured session is saved as a QuickTime movie.

Now you can begin capturing and recording with your QTKit stop motion recorder application. Typically, you can record any number of frames illustrating movement or action, using objects of clay or stick figures, for example, which, when combined and recorded, will create the illusion of motion in a movie or animated sequence. The technique is common in working with various inanimate objects that can be assembled into a particular story or narrative.

Summary

In this chapter, you focused on building a stop motion recorder application using three capture objects: QTCaptureSession, QTCaptureDecompressedVideoOutput and QTCaptureDeviceInput. These were the essential building blocks for your project. You learned how to:

Prototype and design the data model and control for your project in a rough sketch before constructing the actual user interface.

Define a specific IB action as a button to add frames to your recording.

Create the user interface using the QTCaptureView plug-in from the Interface Builder library of controls.

Wire up the Add Frame control button for your user interface.

Define the instance variables that point to the capture session, as well as to the device input and decompressed video output objects.

Build and run the stop motion recorder application, using the iSight camera as your input device.

Output the single captured frames to a QuickTime movie for animated effects.

Copyright © 2016 Apple Inc. All Rights Reserved. Updated: 2016-08-26